RatInABox, a toolkit for modelling locomotion and neuronal activity in continuous environments

Curation statements for this article:-

Curated by eLife

eLife assessment

George et al. present a convincing new Python toolbox ("RatInABox") that allows researchers to generate synthetic behavior and neural data specifically focusing on hippocampal functional cell types (place cells, grid cells, boundary vector cells, head direction cells).

This is valuable for theory-driven research where synthetic benchmarks should be used. Beyond just navigation, it can be highly useful for novel tool development that requires jointly modeling behavior and neural data. The authors provide convincing evidence of its utility with well documented and easy to use code and the corresponding manuscript.

Abstract

Generating synthetic locomotory and neural data is a useful yet cumbersome step commonly required to study theoretical models of the brain’s role in spatial navigation. This process can be time consuming and, without a common framework, makes it difficult to reproduce or compare studies which each generate test data in different ways. In response, we present RatInABox, an open-source Python toolkit designed to model realistic rodent locomotion and generate synthetic neural data from spatially modulated cell types. This software provides users with (i) the ability to construct one- or two-dimensional environments with configurable barriers and visual cues, (ii) a physically realistic random motion model fitted to experimental data, (iii) rapid online calculation of neural data for many of the known self-location or velocity selective cell types in the hippocampal formation (including place cells, grid cells, boundary vector cells, head direction cells) and (iv) a framework for constructing custom cell types, multi-layer network models and data- or policy-controlled motion trajectories. The motion and neural models are spatially and temporally continuous as well as topographically sensitive to boundary conditions and walls. We demonstrate that out-of-the-box parameter settings replicate many aspects of rodent foraging behaviour such as velocity statistics and the tendency of rodents to over-explore walls. Numerous tutorial scripts are provided, including examples where RatInABox is used for decoding position from neural data or to solve a navigational reinforcement learning task. We hope this tool will significantly streamline computational research into the brain’s role in navigation.

Article activity feed

Author Response

Reviewer #1 (Public Review):

In this work George et al. describe RatInABox, a software system for generating surrogate locomotion trajectories and neural data to simulate the effects of a rodent moving about an arena. This work is aimed at researchers that study rodent navigation and its neural machinery.

Strengths:

- The software contains several helpful features. It has the ability to import existing movement traces and interpolate data with lower sampling rates. It allows varying the degree to which rodents stay near the walls of the arena. It appears to be able to simulate place cells, grid cells, and some other features.

- The architecture seems fine and the code is in a language that will be accessible to many labs.

- There is convincing validation of velocity statistics. There are examples shown of position data, which seem to generally match between data and simulation.

Weaknesses:

- There is little analysis of position statistics. I am not sure this is needed, but the software might end up more powerful and the paper higher impact if some position analysis was done. Based on the traces shown, it seems possible that some additional parameters might be needed to simulate position/occupancy traces whose statistics match the data.

Thank you for this suggestion. We have added a new panel to figure 2 showing a histogram of the time the agent spends at positions of increasing distance from the nearest wall. As you can see, RatInABox is a good fit to the real locomotion data: positions very near the wall are under-explored (in the real data this is probably because whiskers and physical body size block positions very close to the wall) and positions just away from but close to the wall are slightly over-explored (an effect known as thigmotaxis, already discussed in the manuscript).

As you correctly suspected, fitting this warranted a new parameter controlling the strength of the wall repulsion, which we call “wall_repel_strength”. The motion model hasn’t changed mathematically: we took a parameter which was originally a fixed constant (1), unavailable to the user, and made it a variable which can be changed (see methods section 6.1.3 for the maths). The curves fit best when wall_repel_strength ≈ 2. The methods and parameters table have been updated accordingly. See Fig. 2e.

- The overall impact of this work is somewhat limited. It is not completely clear how many labs might use this, or have a need for it. The introduction could have provided more specificity about examples of past work that would have been better done with this tool.

At the point of publication we, like you, didn’t know to what extent there would be a market for this toolkit; however, we were pleased to find that there was. In its initial 11 months RatInABox has accumulated a growing, global user base, over 120 stars on GitHub and more than 17,000 downloads through PyPI. We have accumulated a list of testimonials[5] from users of the package vouching for its utility and ease of use, four of which are abridged below. These testimonials come from a diverse group of 9 researchers spanning 6 countries across 4 continents and varying career stages, from pre-doctoral researchers with little computational exposure to tenured PIs. Finally, not only does the community use RatInABox, they are also building it: at the time of writing RatInABox has logged 20 GitHub “Issues” and 28 “pull requests” from external users (i.e. those who aren’t authors on this manuscript), ranging from small discussions and bug-fixes to significant new features, demos and wrappers.

Abridged testimonials:

● “As a medical graduate from Pakistan with little computational background…I found RatInABox to be a great learning and teaching tool, particularly for those who are underprivileged and new to computational neuroscience.” - Muhammad Kaleem, King Edward Medical University, Pakistan

● “RatInABox has been critical to the progress of my postdoctoral work. I believe it has the strong potential to become a cornerstone tool for realistic behavioural and neuronal modelling” - Dr. Colleen Gillon, Imperial College London, UK

● “As a student studying mathematics at the University of Ghana, I would recommend RatInABox to anyone looking to learn or teach concepts in computational neuroscience.” - Kojo Nketia, University of Ghana, Ghana

● “RatInABox has established a new foundation and common space for advances in cognitive mapping research.” - Dr. Quinn Lee, McGill, Canada

The introduction continues to include the following sentence highlighting examples of past work which relied on generating artificial movement and/or neural data and which, by implication, could have been done better (or at least accelerated and standardised) using our toolbox.

“Indeed, many past[13, 14, 15] and recent[16, 17, 18, 19, 6, 20, 21] models have relied on artificially generated movement trajectories and neural data.”

- Presentation: Some discussion of case studies in Introduction might address the above point on impact. It would be useful to have more discussion of how general the software is, and why the current feature set was chosen. For example, how well does RatInABox deal with environments of arbitrary shape? T-mazes? It might help illustrate the tool's generality to move some of the examples in supplementary figure to main text - or just summarize them in a main text figure/panel.

Thank you for this question. Since the initial submission of this manuscript RatInABox has been upgraded and environments have become substantially more “general”. Environments can now be of arbitrary shape (including T-mazes), boundaries can be curved, they can contain holes and can also contain objects (0-dimensional points which act as visual cues). A few examples are showcased in the updated figure 1 panel e.

To further illustrate the tool’s generality beyond the structure of the environment, we continue to summarise the reinforcement learning example (Fig. 3e) and neural decoding example in section 3.1. In addition, we have added three new panels to figure 3 highlighting new features which, we hope you will agree, make RatInABox significantly more powerful and general and satisfy your suggestion of clarifying utility and generality in the manuscript directly.

On the topic of generality, we wrote the manuscript in such a way as to demonstrate the rich variety of ways RatInABox can be used without providing an exhaustive list of potential applications. For example, RatInABox can be used to study neural decoding and it can be used to study reinforcement learning, not because it was purpose-built with these use-cases in mind but because it contains a set of core tools designed to support spatial navigation and neural representations in general. For this reason we would rather keep the demonstrative examples as supplements and implement your suggestion by drawing further attention to the large array of tutorials and demos provided on the GitHub repository, modifying the final paragraph of section 3.1 to read:

“Additional tutorials, not described here but available online, demonstrate how RatInABox can be used to model splitter cells, conjunctive grid cells, biologically plausible path integration, successor features, deep actor-critic RL, whisker cells and more. Despite including these examples we stress that they are not exhaustive. RatInABox provides the framework and primitive classes/functions from which highly advanced simulations such as these can be built.”

Reviewer #3 (Public Review):

George et al. present a convincing new Python toolbox that allows researchers to generate synthetic behavior and neural data specifically focusing on hippocampal functional cell types (place cells, grid cells, boundary vector cells, head direction cells). This is highly useful for theory-driven research where synthetic benchmarks should be used. Beyond just navigation, it can be highly useful for novel tool development that requires jointly modeling behavior and neural data. The code is well organized and written and it was easy for us to test.

We have a few constructive points that they might want to consider.

- Right now the code only supports X,Y movements, but Z is also critical and opens new questions in 3D coding of space (such as grid cells in bats, etc). Many animals effectively navigate in 2D, as a whole, but they certainly make a large number of 3D head movements, and modeling this will become increasingly important and the authors should consider how to support this.

Agents now have a dedicated head direction variable (before head direction was just assumed to be the normalised velocity vector). By default this just smoothes and normalises the velocity but, in theory, could be accessed and used to model more complex head direction dynamics. This is described in the updated methods section.

In general, we try to tread a careful line. For example, we embrace certain aspects of physical and biological realism (e.g. modelling environments as continuous, or fitting motion to real behaviour) and avoid others (such as the biophysics/biochemistry of individual neurons, or the mechanical complexities of joint/muscle modelling). It is hard to decide where to draw the line, but we have a few guiding principles:

RatInABox is most well suited for normative modelling and neuroAI-style probing questions at the level of behaviour and representations. We consciously avoid unnecessary complexities that do not directly contribute to these domains.

Compute: To best accelerate research we think the package should remain fast and lightweight. Certain features are ignored if computational cost outweighs their benefit.

Users: If, and as, users require complexities e.g. 3D head movements, we will consider adding them to the code base.

For now we believe proper 3D motion is out of scope for RatInABox. Calculating motion near walls is already surprisingly complex and doing this in 3D would be challenging. Furthermore, all cell classes would need to be rewritten too. This would be a large undertaking, probably requiring rewriting the package from scratch or making a new package (RatInABox3D, or BatInABox?) altogether, something which we don’t intend to undertake right now. One option: if users really need 3D trajectory data, they could quite straightforwardly simulate a 2D Environment (X,Y) and a 1D Environment (Z) independently. With this method (X,Y) and (Z) motion would be entirely independent, which is of course unrealistic but, depending on the use case, may well be sufficient.

Alternatively, since, as you said, many agents effectively navigate in 2D but show complex 3D head and other body movements, RatInABox could interface with and feed data downstream to other software (for example MuJoCo[11]) which specialises in joint/muscle modelling. This would be a very legitimate use-case for RatInABox.

We’ve flagged all of these assumptions and limitations in a new body of text added to the discussion:

“Our package is not the first to model neural data[37, 38, 39] or spatial behaviour[40, 41], yet it distinguishes itself by integrating these two aspects within a unified, lightweight framework. The modelling approach employed by RatInABox involves certain assumptions:

It does not engage in the detailed exploration of biophysical[37, 39] or biochemical[38] aspects of neural modelling, nor does it delve into the mechanical intricacies of joint and muscle modelling[40, 41]. While these elements are crucial in specific scenarios, they demand substantial computational resources and become less pertinent in studies focused on higher-level questions about behaviour and neural representations.

A focus of our package is modelling experimental paradigms commonly used to study spatially modulated neural activity and behaviour in rodents. Consequently, environments are currently restricted to being two-dimensional and planar, precluding the exploration of three-dimensional settings. However, in principle, these limitations can be relaxed in the future.

RatInABox avoids the oversimplifications commonly found in discrete modelling, predominant in reinforcement learning[22, 23], which we believe impede its relevance to neuroscience.

Currently, inputs from different sensory modalities, such as vision or olfaction, are not explicitly considered. Instead, sensory input is represented implicitly through efficient allocentric or egocentric representations. If necessary, one could use the RatInABox API in conjunction with a third-party computer graphics engine to circumvent this limitation.

Finally, focus has been given to generating synthetic data from steady-state systems. Hence, by default, agents and neurons do not explicitly include learning, plasticity or adaptation. Nevertheless we have shown that a minimal set of features such as parameterised function-approximator neurons and policy control enable a variety of experience-driven changes in behaviour and cell responses[42, 43] to be modelled within the framework.”

- What about other environments that are not "Boxes" as in the name - can the environment only be a Box, what about a circular environment? Or Bat flight? This also has implications for the velocity of the agent, etc. What are the parameters for the motion model to simulate a bat, which likely has a higher velocity than a rat?

Thank you for this question. Since the initial submission of this manuscript RatInABox has been upgraded and environments have become substantially more “general”. Environments can now be of arbitrary shape (including circular), boundaries can be curved, they can contain holes and can also contain objects (0-dimensional points which act as visual cues). A few examples are showcased in the updated figure 1 panel e.

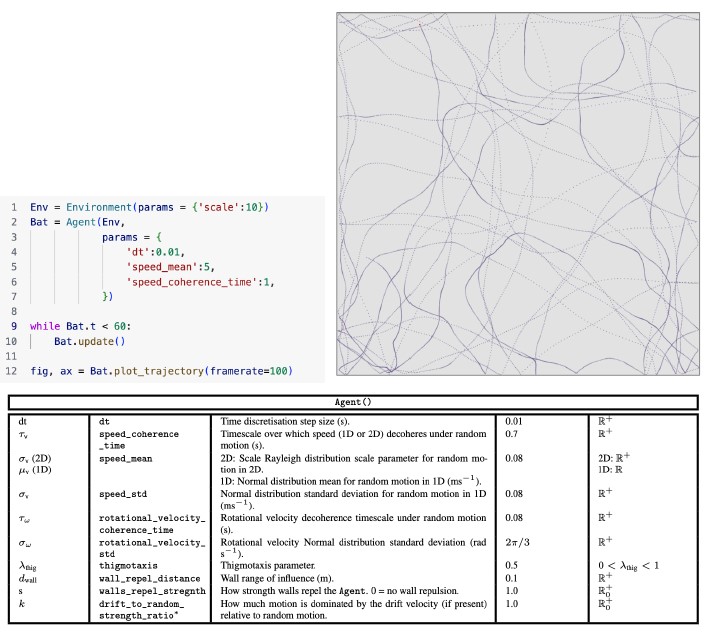

Whilst we don’t know the exact parameters for bat flight, users could fairly straightforwardly figure these out themselves and set them using the motion parameters shown in the table below. We would guess that bats have a higher average speed (speed_mean) and a longer decoherence time due to increased inertia (speed_coherence_time), so the following code might roughly simulate a bat flying around in a 10 x 10 m environment. Author response image 1 shows all Agent parameters which can be set to vary the random motion model.

Author response image 1.
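As a rough illustration of what these two parameters control, the sketch below simulates a one-dimensional speed trace as an Ornstein-Uhlenbeck-style process. It is a stand-alone toy and not the package's own update rule: the function name `simulate_speed`, the `speed_std` argument and the bat values (5 m/s, 1 m/s, 3 s) are all assumptions made for this example. A larger `speed_mean` raises the average speed, and a longer `speed_coherence_time` makes the speed drift more slowly.

```python
import math
import random


def simulate_speed(speed_mean, speed_std, speed_coherence_time,
                   dt=0.1, n_steps=10_000, seed=0):
    """Simulate a 1D Ornstein-Uhlenbeck speed trace (illustrative stand-in only)."""
    rng = random.Random(seed)
    theta = dt / speed_coherence_time  # fraction of the gap to the mean closed per step
    noise_scale = speed_std * math.sqrt(2 * theta)  # keeps the stationary std near speed_std
    speed = speed_mean
    trace = []
    for _ in range(n_steps):
        speed += theta * (speed_mean - speed) + noise_scale * rng.gauss(0.0, 1.0)
        trace.append(speed)
    return trace


# Guessed, hypothetical values for a bat: faster and with more inertia than a rat.
bat_trace = simulate_speed(speed_mean=5.0, speed_std=1.0, speed_coherence_time=3.0)
print(sum(bat_trace) / len(bat_trace))  # long-run average stays close to speed_mean
```

In practice the corresponding values would be passed to the Agent as motion parameters rather than simulated by hand.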

- Semi-related, the name suggests limitations: why Rat? Why not Agent? (But it's a personal choice)

We came up with the name “RatInABox” when we developed this software to study hippocampal representations of an artificial rat moving around a closed 2D world (a box). We also fitted the random motion model to open-field exploration data from rats. You’re right that it is not limited to rodents but for better or for worse it’s probably too late for a rebrand!

- A future extension (or now) could be the ability to interface with common trajectory estimation tools; for example, taking in the (X, Y, (Z), time) outputs of animal pose estimation tools (like DeepLabCut or such) would also allow experimentalists to generate neural synthetic data from other sources of real-behavior.

This is actually already possible via our “Agent.import_trajectory()” method. Users can pass an array of time stamps and an array of positions into the Agent class, which will be loaded and smoothly interpolated, as shown in Fig. 3a and as demonstrated in two new papers[9,10] which used RatInABox by loading in behavioural trajectories.
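To make the idea of resampling imported tracking data concrete, here is a minimal stand-alone sketch. It uses plain linear interpolation, which is a simplification of the smooth interpolation described above, and the function name `interpolate_trajectory` and the sample data are hypothetical; it does not call the package itself.

```python
from bisect import bisect_right


def interpolate_trajectory(times, positions, dt):
    """Linearly resample (t, (x, y)) samples onto a regular grid of step dt."""
    if len(times) != len(positions) or len(times) < 2:
        raise ValueError("need at least two matching time/position samples")
    n_steps = int(round((times[-1] - times[0]) / dt))
    resampled = []
    for k in range(n_steps + 1):
        t = min(times[0] + k * dt, times[-1])
        # Index of the recorded segment [times[i], times[i + 1]] containing t.
        i = min(bisect_right(times, t) - 1, len(times) - 2)
        w = (t - times[i]) / (times[i + 1] - times[i])
        x = (1 - w) * positions[i][0] + w * positions[i + 1][0]
        y = (1 - w) * positions[i][1] + w * positions[i + 1][1]
        resampled.append((t, x, y))
    return resampled


# Hypothetical tracking data sampled once per second, resampled at dt = 0.25 s.
times = [0.0, 1.0, 2.0]
positions = [(0.0, 0.0), (1.0, 0.0), (1.0, 2.0)]
track = interpolate_trajectory(times, positions, dt=0.25)
print(len(track), track[2], track[6])
```

The same pattern applies to pose-estimation output: any tool that yields time stamps and (x, y) coordinates produces data in the shape this function expects.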

- What if a place cell is not encoding place but is influenced by reward or encodes a more abstract concept? Should a PlaceCell class inherit from an AbstractPlaceCell class, which could be used for encoding more conceptual spaces? How could their tool support this?

In fact PlaceCells already inherit from a more abstract class (Neurons) which contains basic infrastructure for initialisation, saving data, and plotting data etc. We prefer the solution that users can write their own cell classes which inherit from Neurons (or PlaceCells if they wish). Then, users need only write a new get_state() method which can be as simple or as complicated as they like. Here are two examples we’ve already made which can be found on the GitHub:

Author response image 2.

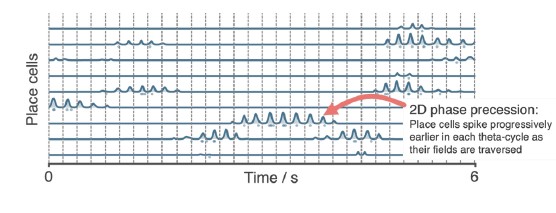

Phase precession: PhasePrecessingPlaceCells(PlaceCells)[12] inherit from PlaceCells and modulate their firing rate by multiplying it by a phase dependent factor causing them to “phase precess”.

Splitter cells: Perhaps users wish to model PlaceCells that are modulated by recent history of the Agent, for example which arm of a figure-8 maze it just came down. This is observed in hippocampal “splitter cells”. In this demo[1] SplitterCells(PlaceCells) inherit from PlaceCells and modulate their firing rate according to which arm was last travelled along.
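The inheritance pattern behind both examples can be sketched without the package. The classes below (`ToyNeurons`, `ToyPlaceCells`, `ToySplitterCells`) are hypothetical stand-ins written for this illustration, not the real Neurons, PlaceCells or SplitterCells classes, and the `last_arm` argument is an assumption; the point is only that a subclass overrides get_state() and reuses the parent's firing rates.

```python
import math


class ToyNeurons:
    """Stand-in base class; subclasses only need to define get_state()."""

    def __init__(self, n):
        self.n = n

    def get_state(self, position, **context):
        raise NotImplementedError


class ToyPlaceCells(ToyNeurons):
    """Gaussian place fields on a 1D track."""

    def __init__(self, centres, width=0.2):
        super().__init__(len(centres))
        self.centres = centres
        self.width = width

    def get_state(self, position, **context):
        return [math.exp(-((position - c) ** 2) / (2 * self.width ** 2))
                for c in self.centres]


class ToySplitterCells(ToyPlaceCells):
    """Place cells whose rate is gated by the arm the agent last travelled along."""

    def __init__(self, centres, preferred_arms, width=0.2):
        super().__init__(centres, width)
        self.preferred_arms = preferred_arms

    def get_state(self, position, last_arm=None, **context):
        rates = super().get_state(position)
        # Keep the place-field rate only for cells tuned to the most recent arm.
        return [r if arm == last_arm else 0.0
                for r, arm in zip(rates, self.preferred_arms)]


cells = ToySplitterCells(centres=[0.5, 0.5], preferred_arms=["left", "right"])
print(cells.get_state(0.5, last_arm="left"))  # [1.0, 0.0]
```

Two cells with identical place fields respond differently depending on the preceding arm, which is the defining property of a splitter cell.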

- This is a bit odd in the Discussion: "If there is a small contribution you would like to make, please open a pull request. If there is a larger contribution you are considering, please contact the corresponding author3" This should be left to the repo contribution guide, which ideally shows people how to contribute and your expectations (code formatting guide, how to use git, etc). Also this can be very off-putting to new contributors: what is small? What is big? We suggest using more inclusive language.

We’ve removed this line and left it to the GitHub repository to describe how contributions can be made.

- Could you expand on the run time for BoundaryVectorCells, namely, for how long of an exploration period? We found it was on the order of 1 min to simulate 30 min of exploration (which is of course fast, but mentioning relative times would be useful).

Absolutely. How long it takes to simulate BoundaryVectorCells will depend on the discretisation timestep and how many neurons you simulate. Assuming you used the default values (dt = 0.1, n = 10) then the motion model should dominate compute time. This is evident from our analysis in Figure 3f which shows that the update time for n = 100 BVCs is on par with the update time for the random motion model, therefore for only n = 10 BVCs, the motion model should dominate compute time.

So how long should this take? Fig. 3f shows the motion model takes ~10⁻³ s per update. One hour of simulation corresponds to 3600/dt = 36,000 updates, which would therefore take about 36,000 × 10⁻³ s = 36 seconds. So your estimate of 1 minute seems to be in the right ballpark and consistent with the data we show in the paper.
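The estimate is simple enough to reproduce in a few lines. The helper name `estimated_runtime` is hypothetical, and the per-update cost of 10⁻³ s is the approximate figure quoted above rather than a fresh measurement.

```python
def estimated_runtime(sim_seconds, dt, seconds_per_update):
    """Return (number of updates, estimated wall-clock seconds)."""
    n_updates = round(sim_seconds / dt)
    return n_updates, n_updates * seconds_per_update


# Values from the text: dt = 0.1 s and roughly 1e-3 s per motion-model update.
for label, sim_seconds in [("30 min", 1800), ("1 hour", 3600)]:
    n_updates, runtime = estimated_runtime(sim_seconds, dt=0.1, seconds_per_update=1e-3)
    print(f"{label}: {n_updates} updates, about {runtime:.0f} s")
```

Under these assumptions, 30 minutes of exploration comes to 18,000 updates (about 18 s) and one hour to 36,000 updates (about 36 s), the same order of magnitude as the reviewer's 1 minute.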

Interestingly this corroborates the results in a new inset panel where we calculated the total time for cell and motion model updates for a PlaceCell population of increasing size (from n = 10 to 1,000,000 cells). It shows that the motion model dominates compute time up to approximately n = 1000 PlaceCells (for BoundaryVectorCells it’s probably closer to n = 100) beyond which cell updates dominate and the time scales linearly.

These are useful and non-trivial insights as they tell us that the RatInABox neuron models are quite efficient relative to the RatInABox random motion model (something we hope to optimise further down the line). We’ve added the following sentence to the results:

“Our testing (Fig. 3f, inset) reveals that the combined time for updating the motion model and a population of PlaceCells scales sublinearly O(1) for small populations n < 1000, where updating the random motion model dominates compute time, and linearly for large populations n > 1000. PlaceCells, BoundaryVectorCells and the Agent motion model update times will be additionally affected by the number of walls/barriers in the Environment. 1D simulations are significantly quicker than 2D simulations due to the reduced computational load of the 1D geometry.”

And this sentence to section 2:

“RatInABox is fundamentally continuous in space and time. Position and velocity are never discretised but are instead stored as continuous values and used to determine cell activity online, as exploration occurs. This differs from other models which are either discrete (e.g. “gridworld” or Markov decision processes) or approximate continuous rate maps using a cached list of rates precalculated on a discretised grid of locations. Modelling time and space continuously more accurately reflects real-world physics, making simulations smooth and amenable to fast or dynamic neural processes which are not well accommodated by discretised motion simulators. Despite this, RatInABox is still fast; to simulate 100 PlaceCells for 10 minutes of random 2D motion (dt = 0.1 s) it takes about 2 seconds on a consumer grade CPU laptop (or 7 seconds for BoundaryVectorCells).”
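The scaling behaviour described in the first of these sentences (flat for small populations, linear for large ones) is what one would expect from a fixed motion-model cost plus a per-cell cost. The toy model below illustrates this; the function names are hypothetical, and the per-cell cost of 10⁻⁶ s is an assumption chosen only so that the crossover falls near n = 1000 given a motion-model cost of about 10⁻³ s.

```python
def total_update_time(n_cells, motion_time=1e-3, per_cell_time=1e-6):
    """Toy cost model: fixed motion-model cost plus a cost linear in population size."""
    return motion_time + n_cells * per_cell_time


def crossover_population(motion_time=1e-3, per_cell_time=1e-6):
    """Population size at which cell updates cost as much as the motion model."""
    return motion_time / per_cell_time


for n in (10, 1_000, 100_000, 1_000_000):
    print(n, total_update_time(n))
print("crossover near n =", round(crossover_population()))
```

Below the crossover the total barely changes with n, and well above it a tenfold increase in n gives roughly a tenfold increase in update time.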

- Regarding the Geometry and Boundary conditions, would supporting hyperbolic distance be useful, given the interest in alternative geometry of representations (i.e., https://www.nature.com/articles/s41593-022-01212-4)?

Whilst this would be very interesting it would likely represent quite a significant edit, requiring rewriting of almost all the geometry-handling code. We’re happy to consider changes like these according to (i) how simple they will be to implement, (ii) how disruptive they will be to the existing API, (iii) how many users would benefit from the change. If many users of the package request this we will consider ways to support it.

- In general, the set of default parameters might want to be included in the main text (vs in the supplement).

We also considered this but decided to leave them in the methods for now. The exact values of these parameters are subject to change in future versions of the software. Also, we’d prefer for the main text to provide a low-detail, high-level description of the software and for the methods to provide a place for keen readers to dive into the mathematical and coding specifics.

- It still says you can only simulate 4 velocity or head directions, which might be limiting.

Thanks for catching this. This constraint has been relaxed. Users can now simulate an arbitrary number of head direction cells with arbitrary tuning directions and tuning widths. The methods have been adjusted to reflect this (see section 6.3.4).

- The code license should be mentioned in the Methods.

We have added the following section to the methods:

6.6 License

RatInABox is currently distributed under an MIT License, meaning users are permitted to use, copy, modify, merge, publish, distribute, sublicense and sell copies of the software.

Reviewer #1 (Public Review):

In this work George et al. describe RatInABox, a software system for generating surrogate locomotion trajectories and neural data to simulate the effects of a rodent moving about an arena. This work is aimed at researchers that study rodent navigation and its neural machinery.

Strengths:

+ The software contains several helpful features. It has the ability to import existing movement traces and interpolate data with lower sampling rates. It allows varying the degree to which rodents stay near the walls of the arena. It appears to be able to simulate place cells, grid cells, and some other features.

+ The architecture seems fine and the code is in a language that will be accessible to many labs.

+ There is convincing validation of velocity statistics. There are examples shown of position data, which seem to generally match between data and simulation.

Weaknesses:

+ There is little analysis of position statistics. I am not sure this is needed, but the software might end up more powerful and the paper higher impact if some position analysis was done. Based on the traces shown, it seems possible that some additional parameters might be needed to simulate position/occupancy traces whose statistics match the data.

+ The overall impact of this work is somewhat limited. It is not completely clear how many labs might use this, or have a need for it. The introduction could have provided more specificity about examples of past work that would have been better done with this tool.

+ Presentation: Some discussion of case studies in Introduction might address the above point on impact. It would be useful to have more discussion of how general the software is, and why the current feature set was chosen. For example, how well does RatInABox deal with environments of arbitrary shape? T-mazes? It might help illustrate the tool's generality to move some of the examples in supplementary figure to main text - or just summarize them in a main text figure/panel.

Reviewer #2 (Public Review):

George and colleagues present a novel open-source toolbox to model rodent locomotor patterns and electrophysiological responses of spatially modulated neurons, such as hippocampal "place cells". The present manuscript describes a comprehensive Python package ("RatInABox") with powerful capabilities to simulate a variety of environments, exploratory behaviors and concurrent responses of a variety of cell types. In addition, they provide the tools to expand these basic functions and potentially build multiple different model designs, new cell types or more complex neural network architectures. The manuscript also illustrates several simple application cases. The authors have also created a comprehensive GitHub repository with more detailed explanations, tutorials and example scripts. Overall, I found both the manuscript and associated repository very clear and well written, and the scripts easy to follow and implement, to a level superior to that of many commercial software packages. RatInABox fills several existing gaps in the literature and features important improvements over previous approaches; for example, the implementation of continuous 2D environments instead of tabularized state spaces. I believe this toolbox will be of great interest for many researchers in the field of spatial navigation and beyond and provide them with a remarkably powerful and flexible tool. I don't have any major issues with the manuscript. However, the manuscript can be further improved by clarifying some aspects of the toolbox, discussing its limitations and biological plausibility.

Reviewer #3 (Public Review):

George et al. present a convincing new Python toolbox that allows researchers to generate synthetic behavior and neural data specifically focusing on hippocampal functional cell types (place cells, grid cells, boundary vector cells, head direction cells). This is highly useful for theory-driven research where synthetic benchmarks should be used. Beyond just navigation, it can be highly useful for novel tool development that requires jointly modeling behavior and neural data. The code is well organized and written and it was easy for us to test.

We have a few constructive points that they might want to consider.

- Right now the code only supports X,Y movements, but Z is also critical and opens new questions in 3D coding of space (such as grid cells in bats, etc). Many animals effectively navigate in 2D overall, but they certainly make a large number of 3D head movements. Modeling this will become increasingly important, and the authors should consider how to support it.

- What about environments that are not "Boxes", as in the name? Can the environment only be a box, or is a circular environment possible? What about bat flight? This also has implications for the velocity of the agent, etc. What are the parameters for the motion model to simulate a bat, which likely has a higher velocity than a rat?

- Semi-related, the name suggests limitations: why Rat? Why not Agent? (But it's a personal choice.)

- A future extension (or now) could be the ability to interface with common trajectory estimation tools; for example, taking in the (X, Y, (Z), time) outputs of animal pose estimation tools (like DeepLabCut or such) would also allow experimentalists to generate synthetic neural data from other sources of real behavior.

- What if a place cell is not encoding place but is influenced by reward or encodes a more abstract concept? Should a PlaceCell class inherit from an AbstractPlaceCell class, which could be used for encoding more conceptual spaces? How could their tool support this?

- This is a bit odd in the Discussion: "If there is a small contribution you would like to make, please open a pull request. If there is a larger contribution you are considering, please contact the corresponding author." This should be left to the repo contribution guide, which ideally shows people how to contribute and your expectations (code formatting guide, how to use git, etc). Also, this can be very off-putting to new contributors: what is small? What is big? We suggest using more inclusive language.

- Could you expand on the run time for BoundaryVectorCells, namely, for how long an exploration period? We found it took on the order of 1 min to simulate 30 min of exploration (which is of course fast, but mentioning relative times would be useful).

- Regarding the geometry and boundary conditions, would supporting hyperbolic distance be useful, given the interest in alternative geometries of representations (see https://www.nature.com/articles/s41593-022-01212-4)?

- In general, the authors might want to include the set of default parameters in the main text (rather than in the supplement).

- It still says you can only simulate 4 velocity or head directions, which might be limiting.

- The code license should be mentioned in the Methods.
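
To make the points above about an AbstractPlaceCell class and about hyperbolic distance more concrete, here is a minimal sketch of one possible design. It is not RatInABox's API: the class names `AbstractPlaceCell`, `EuclideanPlaceCell` and `HyperbolicPlaceCell`, the Gaussian tuning curve, and the `centre`, `width` and `max_rate` parameters are all assumptions made for illustration. The idea is that the base class owns the tuning curve, while each subclass only supplies a distance function over whatever feature space the cell encodes.

```python
import math
from abc import ABC, abstractmethod
from typing import Sequence


class AbstractPlaceCell(ABC):
    """Hypothetical base class: Gaussian tuning over an arbitrary feature space."""

    def __init__(self, centre: Sequence[float], width: float, max_rate: float = 1.0):
        self.centre = tuple(centre)  # preferred point in feature space
        self.width = width           # tuning width, in units of the chosen distance
        self.max_rate = max_rate     # firing rate when state == centre

    @abstractmethod
    def distance(self, a: Sequence[float], b: Sequence[float]) -> float:
        """Distance between two points in the cell's feature space."""

    def firing_rate(self, state: Sequence[float]) -> float:
        d = self.distance(state, self.centre)
        return self.max_rate * math.exp(-(d ** 2) / (2 * self.width ** 2))


class EuclideanPlaceCell(AbstractPlaceCell):
    """Works for (x, y) position, or for any feature vector such as (x, y, reward)."""

    def distance(self, a, b):
        return math.dist(a, b)


class HyperbolicPlaceCell(AbstractPlaceCell):
    """Uses the Poincare disk metric; points must lie strictly inside the unit disk."""

    def distance(self, a, b):
        sq_diff = sum((x - y) ** 2 for x, y in zip(a, b))
        norm_a = sum(x * x for x in a)
        norm_b = sum(y * y for y in b)
        if norm_a >= 1 or norm_b >= 1:
            raise ValueError("points must lie inside the unit disk")
        return math.acosh(1 + 2 * sq_diff / ((1 - norm_a) * (1 - norm_b)))


# A cell tuned to a location *and* a reward level (third feature is hypothetical).
reward_cell = EuclideanPlaceCell(centre=(0.5, 0.5, 1.0), width=0.2, max_rate=10.0)
rate_rewarded = reward_cell.firing_rate((0.5, 0.5, 1.0))
rate_unrewarded = reward_cell.firing_rate((0.5, 0.5, 0.0))
```

In this sketch, a cell that "encodes a more abstract concept" only differs from a spatial place cell in what its state vector contains, and a different geometry only requires overriding `distance`. Whether such a hierarchy fits the toolbox's existing class structure is a question for the authors.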
