Semantical and geometrical protein encoding toward enhanced bioactivity and thermostability

Curation statements for this article:-

Curated by eLife

eLife Assessment

ProtSSN is a valuable approach that generates protein embeddings by integrating sequence and structural information, demonstrating improved prediction of mutation effects on thermostability compared to competing models. The evidence supporting the authors' claims is compelling, with well-executed comparisons. This work will be of particular interest to researchers in bioinformatics and structural biology, especially those focused on protein function and stability.


Abstract

Protein engineering is a pivotal aspect of synthetic biology, involving the modification of amino acids within existing protein sequences to achieve novel or enhanced functionalities and physical properties. Accurate prediction of protein variant effects requires a thorough understanding of protein sequence, structure, and function. Deep learning methods have demonstrated remarkable performance in guiding protein modification for improved functionality. However, existing approaches predominantly rely on protein sequences, which face challenges in efficiently encoding the geometric aspects of amino acids’ local environment and often fall short in capturing crucial details related to protein folding stability, internal molecular interactions, and bio-functions. Furthermore, a fundamental evaluation of developed methods for predicting protein thermostability is lacking, although it is a key physical property that is frequently investigated in practice. To address these challenges, this article introduces a novel pre-training framework that integrates sequential and geometric encoders for protein primary and tertiary structures. This framework guides mutation directions toward desired traits by simulating natural selection on wild-type proteins and evaluates variant effects based on their fitness to perform specific functions. We assess the proposed approach using three benchmarks comprising over 300 deep mutational scanning assays. The prediction results showcase exceptional performance across extensive experiments compared to other zero-shot learning methods, all while maintaining a minimal cost in terms of trainable parameters. This study not only proposes an effective framework for more accurate and comprehensive predictions to facilitate efficient protein engineering, but also enhances the in silico assessment system for future deep learning models to better align with empirical requirements.
The PyTorch implementation is available at https://github.com/ai4protein/ProtSSN .

Article activity feed

Reviewer #1 (Public review):

Summary:

The authors introduce a denoising-style model that incorporates both structure and primary-sequence embeddings to generate richer embeddings of peptides. My understanding is that the authors use ESM for the primary sequence embeddings, take resolved structures (or use structural predictions from AlphaFold when they're not available), then develop an architecture to combine these two with a loss that seems reminiscent of diffusion models or masked language model approaches. The embeddings can be viewed as ensemble-style embedding of the two levels of sequence information, or with AlphaFold, an ensemble of two methods (ESM+AlphaFold). The authors also gather external datasets to evaluate their approach and compare it to previous approaches. The approach seems promising and appears to out-compete previous methods at several tasks. Nonetheless, I have strong concerns about a lack of verbosity as well as exclusion of relevant methods and references.

Advances:

I appreciate the breadth of the analysis and comparisons to other methods. The authors separate tasks, models, and sizes of models in an intuitive, easy-to-read fashion that I find valuable for selecting a method for embedding peptides. Moreover, the authors gather two datasets for evaluating embeddings' utility for predicting thermostability. Overall, the work should be helpful for the field as more groups choose methods/pretraining strategies amenable to their goals, and can do so in an evidence-guided manner.

Considerations:

Primarily, a majority of the results and conclusions (e.g., Table 3) are reached using data and methods from ProteinGym, yet the best-performing methods on ProteinGym are excluded from the paper (e.g., EVE-based models and GEMME). In the ProteinGym database, these methods outperform ProtSSN models. Moreover, these models were published over a year---or even 4 years in the case of GEMME---before ProtSSN, and I do not see justification for their exclusion in the text.

Secondly, related to comparison of other models, there is no section in the methods about how other models were used, or how their scores were computed. When comparing these models, I think it's crucial that there are explicit derivations or explanations for the exact task used for scoring each method. In other words, if the pre-training is indeed the important advance of the paper, the paper needs to show this more explicitly by explaining exactly which components of the model (and previous models) are used for evaluation. Are the authors extracting the final hidden layer representations of the model, treating these as features, then using these features in a regression task to predict fitness/thermostability/DDG etc.? How are the model embeddings of other methods being used, since, for example, many of these methods output a k-dimensional embedding of a given sequence, rather than one single score that can be correlated with some fitness/functional metric. Summarily, I think the text is lacking an explicit mention of how these embeddings are being summarized or used, as well as how this compares to the model presented.

I think the above issues can mainly be addressed by considering and incorporating points from Li et al. 2024[1] and potentially Tang & Koo 2024[2]. Li et al.[1] make extremely explicit the use of pretraining for downstream prediction tasks. Moreover, they benchmark pretraining strategies explicitly on thermostability (one of the main considerations in the submitted manuscript), yet there is no mention of this work nor the dataset used (FLIP (Dallago et al., 2021)) in this current work. I think a reference and discussion of [1] is critical, and I would also like to see comparisons in line with [1], as [1] is very clear about what features from pretraining are used, and how. If the comparisons with previous methods were done in this fashion, this level of detail needs to be included in the text.

To conclude, I think the manuscript would benefit substantially from a more thorough comparison of previous methods. Maybe one way of doing this is following [1] or [2], and using the final embeddings of each method for a variety of regression tasks---to really make clear where these methods are performing relative to one another. I think a more thorough methods section detailing how previous methods did their scoring is also important. Lastly, TranceptEVE (or a model comparable to it) and GEMME should also be mentioned in these results, or at the bare minimum, be given justification for their absence.

[1] Feature Reuse and Scaling: Understanding Transfer Learning with Protein Language Models, Francesca-Zhoufan Li, Ava P. Amini, Yisong Yue, Kevin K. Yang, Alex X. Lu bioRxiv 2024.02.05.578959; doi: https://doi.org/10.1101/2024.02.05.578959

[2] Evaluating the representational power of pre-trained DNA language models for regulatory genomics, Ziqi Tang, Peter K Koo bioRxiv 2024.02.29.582810; doi: https://doi.org/10.1101/2024.02.29.582810

Comments on revisions:

My concerns have been addressed. What seems to remain are some semantical disagreements and I'm not sure that these will be answered here. Do MSAs and other embedding methods lead to some notable type of data leakage? Does this leakage qualify as "x-shot" learning under current definitions?

Reviewer #2 (Public review):

Summary:

To design proteins and predict disease, we want to predict the effects of mutations on the function of a protein. To make these predictions, biologists have long turned to statistical models that learn patterns that are conserved across evolution. There is potential, however, to improve our predictions by incorporating structure. In this paper, the authors build a denoising auto-encoder model that incorporates sequence and structure to predict mutation effects. The model is trained to predict the sequence of a protein given its perturbed sequence and structure. The authors demonstrate that this model is able to predict the effects of mutations better than sequence-only models.

As well, the authors curate a set of assays measuring the effect of mutations on thermostability. They demonstrate their model also predicts the effects of these mutations better than previous models and make this benchmark available for the community.

Strengths:

The authors describe a method that makes accurate mutation effect predictions by informing its predictions with structure.

The authors curate a new dataset of assays measuring thermostability. These can be used to validate and interpret mutation effect prediction methods in the future.

Weaknesses:

In the review period, the authors included a previous method, SaProt, that similarly uses protein structure to predict the effects of mutations, in their evaluations. They see that SaProt performs similarly to their method.

ProteinGym is largely made of deep mutational scans, which measure the effect of every mutation on a protein. These new benchmarks contain on average measurements of less than a percent of all possible point mutations of their respective proteins. It is unclear what sorts of protein regions these mutations are more likely to lie in; therefore it is challenging to make conclusions about what a model has necessarily learned based on its score on this benchmark. For example, several assays in this new benchmark seem to be similar to each other, such as four assays on ubiquitin performed in pH 2.25 to pH 3.0.

Comments on revisions:

I think the rounds of review have improved the paper and I've raised my score.

Author response:

The following is the authors’ response to the previous reviews.

Response to Reviewer 1

Thank you for your recognition of our revised work.

Response to Reviewer 2

It would be useful to have a demonstration of where this model outperforms SaProt systematically, and a discussion about what the success of this model teaches us given there is a similar, previously successful model, SaProt.

As two concurrent works, ProtSSN and SaProt employ different methods to incorporate the structure information of proteins. Generally speaking, for two deep learning models that are developed during a close period, it is challenging to conclude that one model is systematically superior to another. Nonetheless, on DTm and DDG (the two low-throughput datasets that we constructed), ProtSSN demonstrates better empirical performance than SaProt.

Moreover, ProtSSN is more efficient in both training and inference compared to SaProt. In terms of training cost, SaProt uses 40 million protein structures for pretraining (requiring 64 A100 GPUs for three months), whereas ProtSSN requires only about 30,000 crystal structures from the CATH database (trained on a single 3090 GPU for two days). Despite SaProt’s significantly higher training cost, its pretrained version does not exhibit superior performance on low-throughput datasets such as DTm, DDG, and ClinVar. Furthermore, the high training cost limits many users from retraining or fine-tuning the model for specific needs or datasets.

Regarding the inference cost, ProtSSN requires only one embedding computation for a wild-type protein, regardless of the number of mutants (n). In contrast, SaProt computes a separate embedding and score for each mutant. For instance, when evaluating the scoring performance on ProteinGym, ProtSSN only needs 217 inferences, while SaProt needs more than 2M inferences. This inference speed is important in practice, such as high-throughput design and screening.
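To illustrate why a single wild-type pass can be enough, the following minimal sketch scores any number of mutants from one per-position log-probability table, using a log-odds rule of the kind described for zero-shot scoring in ESM-1v. It is not the ProtSSN implementation: the function names `score_mutant` and `toy_log_probs`, the mutation string format (for example `K2R`), and the toy probability table standing in for model output are all assumptions made for illustration.

```python
import math

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def parse_mutation(mutation):
    """Split a string such as 'K2R' into (wild-type residue, 1-based position, mutant residue)."""
    return mutation[0], int(mutation[1:-1]), mutation[-1]


def score_mutant(log_probs, wild_type, mutations):
    """Sum log p(mutant) - log p(wild type) over the mutated positions.

    log_probs holds one dict per residue position (amino acid -> log-probability)
    and is assumed to come from a single forward pass on the wild-type protein.
    """
    score = 0.0
    for mutation in mutations:
        wt, pos, mt = parse_mutation(mutation)
        if wild_type[pos - 1] != wt:
            raise ValueError(f"{mutation} does not match the wild-type sequence")
        score += log_probs[pos - 1][mt] - log_probs[pos - 1][wt]
    return score


def toy_log_probs(wild_type, wt_prob=0.62):
    """Hypothetical stand-in for model output: the wild-type residue gets wt_prob,
    and the remaining probability mass is spread evenly over the other residues."""
    other = (1.0 - wt_prob) / (len(AMINO_ACIDS) - 1)
    return [
        {aa: math.log(wt_prob if aa == wt else other) for aa in AMINO_ACIDS}
        for wt in wild_type
    ]


wild_type = "MKTA"
log_probs = toy_log_probs(wild_type)  # computed once for the wild type

# Every mutant is scored by table lookup; no further "inference" is needed.
mutants = [["M1A"], ["K2R", "A4G"]]
scores = [score_mutant(log_probs, wild_type, m) for m in mutants]
```

Under this scheme the number of model calls grows with the number of proteins rather than the number of mutants, which is the contrast the response draws between 217 inferences and more than 2M.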

Please remove the reference to previous methods as "few shot". This typically refers to their being trained on experimental data, not their using MSAs. A "few shot" model would be ProteinNPT.

The definition of "few-shot" we used here is following ESM1v [1]. This concept originates from providing a certain number of examples as input to GPT-3 [2]. In the context of protein deep learning models, MSA serves as the wild-type protein examples.

Also, Reviewer 1 uses the concept in the same way.

“Readers should note that methods labelled as "few-shot" in comparisons do not make use of experimental labels, but rather use sequences inferred as homologous; these sequences are also often available even if the protein has never been experimentally tested.”

In the main text, we also included this definition as well as the reference of ESM-1v in lines 457-458.

“We extend the evaluation on ProteinGym v0 to include a comparison of our zero-shot ProtSSN with few-shot learning methods that leverage MSA information of proteins (Meier et al., 2021).”

(1) Meier J, Rao R, Verkuil R, et al. Language models enable zero-shot prediction of the effects of mutations on protein function. Advances in Neural Information Processing Systems, 2021.

(2) Brown T, Mann B, Ryder N, et al. Language models are few-shot learners. Advances in Neural Information Processing Systems, 2020.

Furthermore, I don't think it is fair to state that your method is not comparable to these models -- one can run an MSA just as one can predict a structure. A fairer comparison would be to highlight particular assays for which getting an MSA could be challenging -- Tranception did this by showing that they outperform EVE when MSAs are shallow.

We recognize that there are often differences in the definitions and classifications of various methodologies. Here, we follow the definitions provided by ProteinGym. As the most comprehensive and large scale open benchmark in the community, we believe this classification scheme should be widely accepted. All classifications are available on the official website of ProteinGym (https://proteingym.org/benchmarks), which categorizes methods into PLMs, Structure-based models, and Alignment-based models. For example, GEMME is classified as an alignment-based model, and MSA Transformer is considered a hybrid model combining alignment and PLM features.

We believe that methodologies with different inputs and architectures can lead to inherent unfairness. Also, it is generally believed that models including evolutionary relationships tend to outperform end-to-end models due to the extra information and efforts involved during the training phase. Some empirical evidence and discussions are in the ablation studies of retrieval factors in Tranception [3]. Moreover, the choice of MSA search parameters can introduce uncertainty, which could have positive or negative impacts.

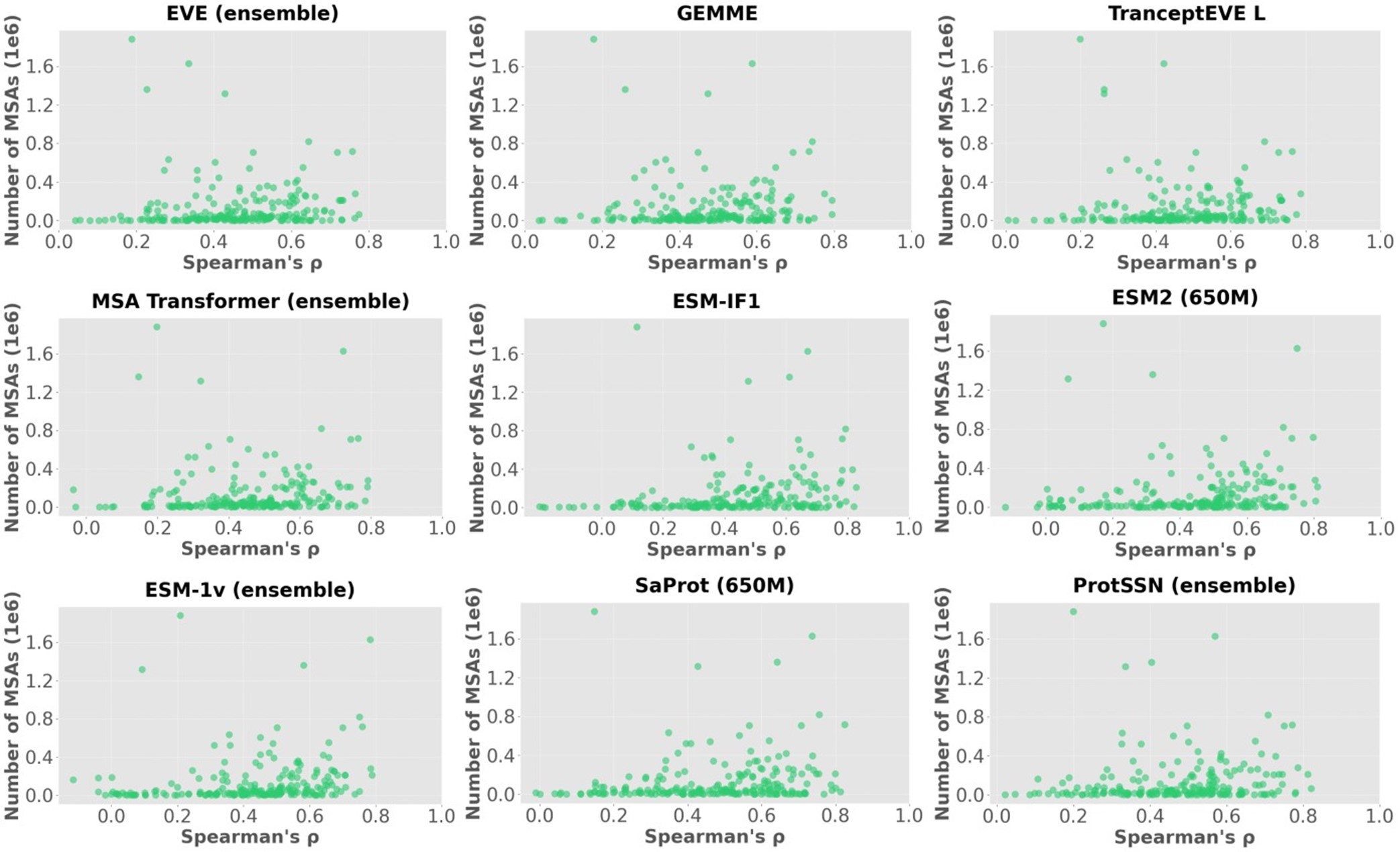

We examine the impact of MSA depth on model performance with an additional analysis. Author response image 1 plots each model's Spearman’s correlation against the number of MSA sequences on the 217 ProteinGym assays, where each point represents one assay. The summary correlation of each model across all assays is reported in Author response table 1. These results show no clear correlation between MSA depth and model performance, even for MSA-based models.

Author response image 1.

Scatter plots of the number of MSA sequences and Spearman’s correlation.

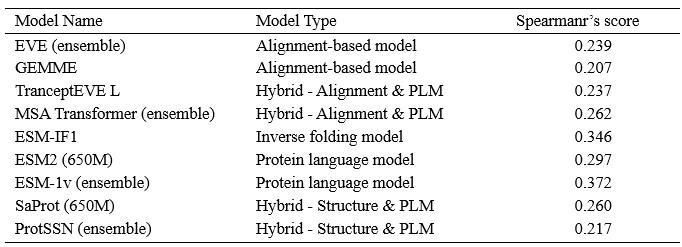

Author response table 1.

Spearman’s correlation between the number of MSA sequences and the model’s performance.
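The kind of summary statistic reported in the table can be sketched as follows: a rank correlation between per-assay MSA depth and per-assay model performance. This is only an illustration under stated assumptions. The helper names are hypothetical, and the five depth and performance values are invented toy numbers, not results from the paper.

```python
import math


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy values (assumed, one entry per assay): MSA depth and the model's
# per-assay Spearman score against experimental measurements.
msa_depth = [120, 4500, 980, 15000, 310]
performance = [0.41, 0.38, 0.52, 0.45, 0.30]

rho = spearman(msa_depth, performance)
```

A value of `rho` near zero across assays would correspond to the "no clear correlation" reading given above; in practice a library routine such as `scipy.stats.spearmanr` would normally be used instead of hand-written ranking.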

(3) Notin P, Dias M, Frazer J, et al. Tranception: protein fitness prediction with autoregressive transformers and inference-time retrieval. International Conference on Machine Learning, 2022.

The authors state that DTm and DDG are conceptually appealing because they come from low-throughput assays with lower experimental noise and are also mutations that are particularly chosen to represent the most interesting regions of the protein. I agree with the conceptual appeal but I don't think these claims have been demonstrated in practice. The cited comparison with Frazer as a particularly noisy source of data I think is particularly unconvincing: ClinVar labels are not only rigorously determined from multiple sources of evidence, Frazer et al demonstrates that these labels are actually more reliable than experiment in some cases. They also state that ProteinGym data doesn't come with environmental conditions, but these can be retrieved from the papers the assays came from. The paper would be strengthened by a demonstration of the conceptual benefit of these new datasets, say a comparison of mutations and signal for a protein that may be in one of these datasets vs ProteinGym.

In the work by Frazer et al. [4], they mentioned that

"However, these technologies do not easily scale to thousands of proteins, especially not to combinations of variants, and depend critically on the availability of assays that are relevant to or at least associated with human disease phenotypes."

It points out that the results of high-throughput experiments are usually based on the design of specific genes (such as BRCA1 and TP53) and cannot be easily extended to thousands of other genes. At the same time, due to the complexity of the experiment, there may be problems with reproducibility or deviations from clinical relevance.

This statement aligns with our perspective that high-throughput experiments inherently involve a significant amount of noise and error. It is important to clarify that the noise we discuss here arises from the limitations of high-throughput experiments themselves, instead of from the reliability of the data sources, such as systematic errors in experimental measurements. This latter issue is a complex problem common to all wetlab experiments and falls outside the scope of our study.

Under this premise, low-throughput datasets like DTm and DDG can be considered to have less noise than high-throughput datasets, as they have undergone manual curation. As for your suggestion, while it is valuable, we were unfortunately unable to identify datasets in DTm and DDG that align with those in ProteinGym after a careful search. Thus, we are unable to conduct this comparative experiment at this stage.

(4) Frazer J, Notin P, Dias M, et al. Disease variant prediction with deep generative models of evolutionary data. Nature, 2021.

eLife Assessment

ProtSSN is a valuable approach that generates protein embeddings by integrating sequence and structural information, demonstrating improved prediction of mutation effects on thermostability compared to competing models. The evidence supporting the authors' claims is solid, with well-executed comparisons. This work will be of particular interest to researchers in bioinformatics and structural biology, especially those focused on protein function and stability.

Reviewer #1 (Public review):

After revisions:

My concerns have been addressed.

Prior to revisions:

Summary:

The authors introduce a denoising-style model that incorporates both structure and primary-sequence embeddings to generate richer embeddings of peptides. My understanding is that the authors use ESM for the primary sequence embeddings, take resolved structures (or use structural predictions from AlphaFold when they're not available), then develop an architecture to combine these two with a loss that seems reminiscent of diffusion models or masked language model approaches. The embeddings can be viewed as ensemble-style embedding of the two levels of sequence information, or with AlphaFold, an ensemble of two methods (ESM+AlphaFold). The authors also gather external datasets to evaluate their approach and compare it to previous approaches. The approach seems promising, and appears to out-compete previous methods at several tasks. Nonetheless, I have strong concerns about a lack of verbosity as well as exclusion of relevant methods and references.

Advances:

I appreciate the breadth of the analysis and comparisons to other methods. The authors separate tasks, models, and sizes of models in an intuitive, easy-to-read fashion that I find valuable for selecting a method for embedding peptides. Moreover, the authors gather two datasets for evaluating embeddings' utility for predicting thermostability. Overall, the work should be helpful for the field as more groups choose methods/pretraining strategies amenable to their goals, and can do so in an evidence-guided manner.

Considerations:

Primarily, a majority of the results and conclusions (e.g., Table 3) are reached using data and methods from ProteinGym, yet the best-performing methods on ProteinGym are excluded from the paper (e.g., EVE-based models and GEMME). In the ProteinGym database, these methods outperform ProtSSN models. Moreover, these models were published over a year---or even 4 years in the case of GEMME---before ProtSSN, and I do not see justification for their exclusion in the text.

Secondly, related to comparison of other models, there is no section in the methods about how other models were used, or how their scores were computed. When comparing these models, I think it's crucial that there are explicit derivations or explanations for the exact task used for scoring each method. In other words, if the pre-training is indeed the important advance of the paper, the paper needs to show this more explicitly by explaining exactly which components of the model (and previous models) are used for evaluation. Are the authors extracting the final hidden layer representations of the model, treating these as features, then using these features in a regression task to predict fitness/thermostability/DDG etc.? How are the model embeddings of other methods being used, since, for example, many of these methods output a k-dimensional embedding of a given sequence, rather than one single score that can be correlated with some fitness/functional metric. Summarily, I think the text is lacking an explicit mention of how these embeddings are being summarized or used, as well as how this compares to the model presented.

I think the above issues can mainly be addressed by considering and incorporating points from Li et al. 2024[1] and potentially Tang & Koo 2024[2]. Li et al.[1] make extremely explicit the use of pretraining for downstream prediction tasks. Moreover, they benchmark pretraining strategies explicitly on thermostability (one of the main considerations in the submitted manuscript), yet there is no mention of this work nor the dataset used (FLIP (Dallago et al., 2021)) in this current work. I think a reference and discussion of [1] is critical, and I would also like to see comparisons in line with [1], as [1] is very clear about what features from pretraining are used, and how. If the comparisons with previous methods were done in this fashion, this level of detail needs to be included in the text.

To conclude, I think the manuscript would benefit substantially from a more thorough comparison of previous methods. Maybe one way of doing this is following [1] or [2], and using the final embeddings of each method for a variety of regression tasks---to really make clear where these methods are performing relative to one another. I think a more thorough methods section detailing how previous methods did their scoring is also important. Lastly, TranceptEVE (or a model comparable to it) and GEMME should also be mentioned in these results, or at the bare minimum, be given justification for their absence.

[1] Feature Reuse and Scaling: Understanding Transfer Learning with Protein Language Models Francesca-Zhoufan Li, Ava P. Amini, Yisong Yue, Kevin K. Yang, Alex X. Lu bioRxiv 2024.02.05.578959; doi: https://doi.org/10.1101/2024.02.05.578959

[2] Evaluating the representational power of pre-trained DNA language models for regulatory genomics Ziqi Tang, Peter K Koo bioRxiv 2024.02.29.582810; doi: https://doi.org/10.1101/2024.02.29.582810

Reviewer #2 (Public review):

Summary:

To design proteins and predict disease, we want to predict the effects of mutations on the function of a protein. To make these predictions, biologists have long turned to statistical models that learn patterns that are conserved across evolution. There is potential, however, to improve our predictions by incorporating structure. In this paper, the authors build a denoising auto-encoder model that incorporates sequence and structure to predict mutation effects. The model is trained to predict the sequence of a protein given its perturbed sequence and structure. The authors demonstrate that this model is able to predict the effects of mutations better than sequence-only models.

As well, the authors curate a set of assays measuring the effect of mutations on thermostability. They demonstrate their model also predicts the effects of these mutations better than previous models and make this benchmark available for the community.

Strengths:

The authors describe a method that makes accurate mutation effect predictions by informing its predictions with structure.

Weaknesses:

In the review period, the authors included a previous method, SaProt, that similarly uses protein structure to predict the effects of mutations, in their evaluations. They see that SaProt performs similarly to their method.

Readers should note that methods labelled as "few-shot" in comparisons do not make use of experimental labels, but rather use sequences inferred as homologous; these sequences are also often available even if the protein has never been experimentally tested.

ProteinGym is largely made of deep mutational scans, which measure the effect of every mutation on a protein. These new benchmarks contain on average measurements of less than a percent of all possible point mutations of their respective proteins. It is unclear what sorts of protein regions these mutations are more likely to lie in; therefore it is challenging to make conclusions about what a model has necessarily learned based on its score on this benchmark. For example, several assays in this new benchmark seem to be similar to each other, such as four assays on ubiquitin performed in pH 2.25 to pH 3.0.

The authors state that their new benchmarks are potentially more useful than those of ProteinGym, citing Frazer 2021; readers should be aware that the mutations from the latter source are actually mutations whose impact on human health has been determined through multiple sources, including population genetics, clinical evidence and some experiments.

Author response:

The following is the authors’ response to the original reviews.

Response to Reviewer 1

Summary:

The authors introduce a denoising-style model that incorporates both structure and primary-sequence embeddings to generate richer embeddings of peptides. My understanding is that the authors use ESM for the primary sequence embeddings, take resolved structures (or use structural predictions from AlphaFold when they're not available), and then develop an architecture to combine these two with a loss that seems reminiscent of diffusion models or masked language model approaches. The embeddings can be viewed as ensemble-style embedding of the two levels of sequence information, or with AlphaFold, an ensemble of two methods (ESM+AlphaFold). The authors also gather external datasets to evaluate their approach and compare it to previous approaches. The approach seems promising and appears to out-compete previous methods at several tasks. Nonetheless, I have strong concerns about a lack of verbosity as well as the exclusion of relevant methods and references.

Thank you for the comprehensive summary. We respond point by point to the concerns listed in the review below, and we have modified our manuscript accordingly.

Advances:

I appreciate the breadth of the analysis and comparisons to other methods. The authors separate tasks, models, and sizes of models in an intuitive, easy-to-read fashion that I find valuable for selecting a method for embedding peptides. Moreover, the authors gather two datasets for evaluating embeddings' utility for predicting thermostability. Overall, the work should be helpful for the field as more groups choose methods/pretraining strategies amenable to their goals, and can do so in an evidence-guided manner.

Thank you for recognizing the strength of our work in terms of the notable contributions, the solid analysis, and the clear presentation.

Considerations:

(1) Primarily, a majority of the results and conclusions (e.g., Table 3) are reached using data and methods from ProteinGym, yet the best-performing methods on ProteinGym are excluded from the paper (e.g., EVE-based models and GEMME). In the ProteinGym database, these methods outperform ProtSSN models. Moreover, these models were published over a year---or even 4 years in the case of GEMME---before ProtSSN, and I do not see justification for their exclusion in the text.

We decided to exclude the listed methods from the primary table as they are all MSA-based methods, which are considered few-shot methods in deep learning (Rao et al., ICML, 2021). In contrast, the proposed ProtSSN is a zero-shot method that makes inferences based on less information than few-shot methods. Moreover, it is possible for MSA-based methods to query aligned sequences based on predictions. For instance, Tranception (Notin et al., ICML, 2022) selects the model with the optimal proportions of logits and retrieval results according to the average correlation score on ProteinGym (Table 10, Notin et al., 2022).

With this in mind, we only included zero-shot deep learning methods in Table 3, which require no more than the sequence and structure of the underlying wild-type protein when scoring the mutants. In the revision, we have added the performance of SaProt to Table 3, and the performance of GEMME, TranceptEVE, and SaProt to Table 5. Furthermore, we have released the model's performance on the public leaderboard of ProteinGym v1 at proteingym.org.

(2) Secondly, related to the comparison of other models, there is no section in the methods about how other models were used, or how their scores were computed. When comparing these models, I think it's crucial that there are explicit derivations or explanations for the exact task used for scoring each method. In other words, if the pre-training is indeed an important advance of the paper, the paper needs to show this more explicitly by explaining exactly which components of the model (and previous models) are used for evaluation. Are the authors extracting the final hidden layer representations of the model, treating these as features, and then using these features in a regression task to predict fitness/thermostability/DDG etc.? How are the model embeddings of other methods being used, since, for example, many of these methods output a k-dimensional embedding of a given sequence, rather than one single score that can be correlated with some fitness/functional metric? Summarily, I think the text lacks an explicit mention of how these embeddings are being summarized or used, as well as how this compares to the model presented.

Thank you for the suggestion. Below we address the questions in three points.

(1) The task and the scoring for each method. We followed your suggestion and added a new paragraph titled “Scoring Function” on page 9 to provide a detailed explanation of the scoring functions used by other deep learning zero-shot methods.

(2) The importance of individual pre-training modules. The complete architecture of the proposed ProtSSN model is introduced on pages 7-8. Empirically, the influence of each pre-training module on the overall performance is examined through ablation studies on page 12. In summary, the optimal performance is achieved by combining all the individual modules and designs.

(3) The input of fitness scoring. For a zero-shot prediction task, the final score of a mutant is calculated with one of two widely used functions: the log-odds ratio (for encoder models, including ours) or the log-likelihood (for autoregressive or inverse folding models). In the revision, we explicitly define these functions in the sections “Inferencing” (page 7) and “Scoring Function” (page 9).
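For readers unfamiliar with the two scoring functions named in point (3), the following is a minimal sketch of how they are commonly computed. It is not the authors' implementation: the function names, the 20-letter alphabet, and the toy logits are assumptions made for illustration only. The log-odds ratio needs a single forward pass on the wild-type protein and compares the predicted log-probability of the mutant residue with that of the wild-type residue at each mutated position.

```python
import math

# Hypothetical 20-letter alphabet; a real model vocabulary may be larger.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def log_softmax(logits):
    """Numerically stable log-softmax over one position's logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def log_odds_score(wt_logits, wild_type, mutations):
    """Log-odds ratio for a (multi-site) mutant, as used by encoder models.

    wt_logits: per-position logits over AMINO_ACIDS from one pass on the wild type.
    wild_type: wild-type sequence as a string.
    mutations: list of (position, mutant_residue), positions 0-based.
    """
    score = 0.0
    for pos, mut_aa in mutations:
        log_p = log_softmax(wt_logits[pos])
        score += log_p[AMINO_ACIDS.index(mut_aa)] - log_p[AMINO_ACIDS.index(wild_type[pos])]
    return score


def log_likelihood_score(token_log_probs):
    """Sequence log-likelihood, as used by autoregressive or inverse folding
    models: the sum of the log-probabilities assigned to each residue."""
    return sum(token_log_probs)


# Toy usage: a 3-residue "protein" whose logits favour W at position 1.
logits = [[0.0] * 20 for _ in range(3)]
logits[1][AMINO_ACIDS.index("W")] = 2.0
print(log_odds_score(logits, "ACD", [(1, "W")]))
```

A positive score means the model considers the mutant residue more plausible than the wild-type residue in that context; scores are then rank-correlated with the experimental measurements.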

(3) I think the above issues can mainly be addressed by considering and incorporating points from Li et al. 2024[1] and potentially Tang & Koo 2024[2]. Li et al.[1] make extremely explicit the use of pretraining for downstream prediction tasks. Moreover, they benchmark pretraining strategies explicitly on thermostability (one of the main considerations in the submitted manuscript), yet there is no mention of this work nor the dataset used (FLIP (Dallago et al., 2021)) in this current work. I think a reference and discussion of [1] is critical, and I would also like to see comparisons in line with [1], as [1] is very clear about what features from pretraining are used, and how. If the comparisons with previous methods were done in this fashion, this level of detail needs to be included in the text.

The initial version did not include an explicit comparison with the mentioned reference due to the difference in the learning task. In particular, [1] formulates a supervised learning task on predicting the continuous scores of mutants of specific proteins. In comparison, we make zero-shot predictions, where the model is trained in a self-supervised learning manner that requires no labels from experiments. In the revision, we added discussions in “Discussion and Conclusion” (lines 476-484).

Recommendations For The Authors:

Comment 1

I found the methods lacking in the sense that there is never a simple, explicit statement about what is the exact input and output of the model. What are the components of the input that are required by the user (to generate) or supply to the model? Are these inputs different at training vs inference time? The loss function seems like it's trying to de-noise a modified sequence, can you make this more explicit, i.e. exactly what values/objects are being compared in the loss?

We have added a more detailed description in the "Model Pipeline" section (page 7), which explains the distinct input requirements for training and inference, as well as the formulation of the employed loss function. To summarize:

(1) Both sequence and structure information are used in training and inference. Specifically, structure information is represented as a 3D graph with coordinates, while sequence information consists of AA-wise hidden representations encoded by ESM2-650M. During inference, instead of encoding each mutant individually, the model encodes the WT protein and uses the output probability scores relevant to the mutant to calculate the fitness score. This is a standard operation in many zero-shot fitness prediction models, commonly referred to as the log-odds-ratio.

(2) The loss function compares the differences between the noisy input sequence and the output (recovered) AA sequence. Noise is added to the input sequences, and the model is trained to denoise them (see “Ablation Study” for the different types of noise we tested). This approach is similar to a one-step diffusion process or BERT-style token permutation. The model learns to recover the probability of each node (AA) being one of 33 tokens. A cross-entropy loss is then applied to compare this distribution with the ground-truth (unpermuted) AA sequence, aiming to minimize the difference.

To better present the workflow, we revised the manuscript accordingly.
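The denoising objective summarized in point (2) can be sketched as follows. This is an illustration under stated assumptions, not the ProtSSN training code: the uniform random replacement noise, the `noise_rate` parameter, and both function names are hypothetical, and the actual model predicts the logits with the sequence and structure encoders rather than taking them as an input. Only the vocabulary size of 33 tokens and the use of cross-entropy against the unperturbed sequence come from the response.

```python
import math
import random

NUM_TOKENS = 33  # vocabulary size stated in the response


def corrupt(tokens, noise_rate, rng):
    """Replace each token with a uniformly random token with probability
    noise_rate (one assumed noise type among several possible ones)."""
    return [rng.randrange(NUM_TOKENS) if rng.random() < noise_rate else t
            for t in tokens]


def cross_entropy(logits, targets):
    """Mean cross-entropy between per-residue logits (one row of NUM_TOKENS
    values per residue) and the ground-truth, unperturbed token ids."""
    total = 0.0
    for row, target in zip(logits, targets):
        m = max(row)
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[target]
    return total / len(targets)


# Toy usage: an untrained "model" that outputs uniform logits.
rng = random.Random(0)
clean = [rng.randrange(NUM_TOKENS) for _ in range(8)]
noisy = corrupt(clean, noise_rate=0.15, rng=rng)   # model input
logits = [[0.0] * NUM_TOKENS for _ in noisy]       # stand-in for model output
loss = cross_entropy(logits, clean)                # compared with the clean sequence
```

With uniform logits the loss equals log(33), the value expected from a model that has learned nothing; training lowers it by assigning higher probability to the original residue at each perturbed position.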

Comment 2

Related to the above, I'm not exactly sure where the structural/tertiary structure information comes from. In the methods, they don't state exactly whether the 3D coordinates are given in the CATH repository or where exactly they come from. In the results section they mention using AlphaFold to obtain coordinates for a specific task---is the use of AlphaFold limited only to these tasks/this is to show robustness whether using AlphaFold or realized coordinates?

The 3D coordinates of all proteins in the training set are derived from the crystal structures in CATH v4.3.0 to ensure a high-quality input dataset (see "Training Setup," Page 8). However, during the inference phase, we used predicted structures from AlphaFold2 and ESMFold as substitutes. This approach enhances the generalizability of our method, as in real-world scenarios, the crystal structure of the template protein to be engineered is not always available. The associated descriptions can be found in “Training Setup” (lines 271-272) and “Folding Methods” (lines 429-435).

Comment 3

Lines 142+144 missing reference "Section establishes", "provided in Section ."

199 "see Section " missing reference

214 missing "Section"

Thank you for pointing this out. We have fixed all missing references in the revision.

Comment 4

Table 2 - seems inconsistent to mention the number of parameters in the first 2 methods, then not in the others (though I see in Table 3 this is included, so maybe should just be omitted in Table 2).

In Table 2, we present the zero-shot methods used as baselines. Since many methods have different versions due to varying hyperparameter settings, we decided to list the number of parameters in the following tables.

We have double-checked both Table 3 and Table 5 and confirm that there is no inconsistency in the reported number of parameters. One potential explanation for the observed difference in the comment could be due to the differences in the number of parameters between single and ensemble methods. The ensemble method averages the predictions of multiple models, and we sum the total number of parameters across all models involved. For example, RITA-ensemble has 2210M parameters, derived from the sum of four individual models with 30M, 300M, 680M, and 1200M parameters.
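The ensemble bookkeeping described above can be made concrete with a short sketch. The helper name and the toy scores are illustrative assumptions; only the four RITA model sizes and their total come from the response.

```python
def ensemble_score(per_model_scores):
    """Average the per-mutant scores of several models.

    per_model_scores: one list of mutant scores per model, all the same length.
    """
    n_models = len(per_model_scores)
    return [sum(column) / n_models for column in zip(*per_model_scores)]


# Parameter counts (in millions) of the four RITA models named in the response.
rita_params_m = [30, 300, 680, 1200]
total_params_m = sum(rita_params_m)  # reported size of RITA-ensemble

# Toy usage with two models scoring two mutants.
averaged = ensemble_score([[1.0, 2.0], [3.0, 4.0]])
```

The reported parameter count of an ensemble is therefore larger than that of any single member, even though each mutant receives one averaged score.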

Comment 5

In general, I found using the word "type" instead of "residue" a bit unnatural. As far as I can tell, the norm in the field is to say "amino acid" or "residue" rather than "type". This somewhat confused me when trying to understand the methods section, especially when talking about injecting noise (I figured "type" may refer to evolutionarily-close, or physicochemically-close residues). Maybe it's not necessary to change this in every instance, but something to consider in terms of ease of reading.

Thank you for your suggestion. The term "type" we used is a common expression in the NLP field, similar to "class". To avoid further confusion for biologists, we have revised the manuscript accordingly.

Comment 6

197 should this read "based on the kNN "algorithm"" (word missing) or maybe "based on "its" kNN"?

We have corrected the typo accordingly. It now reads “the 𝑘-nearest neighbor algorithm (𝑘NN)” (line 198).

Comment 7

200 weights of dimension 93, where does this number come from?

The edge features are those derived by Zhou et al., 2024. We have updated the reference in the manuscript for clarity (lines 201-202).

Comment 8

210-212 "representations of the noisy AA sequence are encoded from the noisy input" what is the "noisy AA sequence?" might be helpful to exactly defined what is "noisy input" or "noisy AA sequence". This sentence could potentially be worded to make it clearer, e.g. "we take the modified input sequence and embed it using [xyz]."

We have revised the text accordingly; see lines 211-212 of the revised manuscript.

Comment 9

In Table 3

Formatting, DTm (million), (million) should be under "# Params" likely?

Also for DDG this is reported on only a few hundred mutations, it might be worth plotting the confidence intervals over the Spearman correlation (e.g. by bootstrapping the correlation coefficient).

We followed the suggestion and added “million” under the "# Params". We have added the bootstrapped results for DDG and DTm to Table 6. For each dataset, we randomly sampled 50% of the data for ten independent runs. ProtSSN achieves the top performance with a considerably small variance.

Comment 10

The paragraph in lines 319 to lines 328 I feel may lack sufficient evidence.

"While sequence-based analysis cannot entirely replace the role of structure-based analysis, compared to a fully structure-based deep learning method, a protein language model is more likely to capture sufficient information from sequences by increasing the model scale, i.e., the number of trainable parameters."

This claim is made without a citation, such as [1]. Increasing the scale of the model doesn't always align with improving out-of-sample/generalization performance. I don't feel fully convinced by the claim that worse prediction is ameliorated by increasing the number of parameters. In Table 3 the performance is not monotonic with (nor scales with) the number of parameters, even within a model. See ProGen2 Expression scores, or ESM-2 Stability scores, as a function of their model sizes. In [1], the authors discuss whether pretraining strategies are aligned with specific tasks. I think rewording this paragraph and mentioning this paper is important. Figure 3 shows that maybe there's some evidence for this but I don't feel entirely convinced by the plot.

We agree that increasing the number of learnable parameters does not always result in better performance in downstream tasks. However, what we intended to convey is that language models typically need to scale up in size to capture the interactions among residues, while structure-based models can achieve this more efficiently with lower computational costs. We have rephrased this paragraph in the paper to clarify our point in lines 340-342.

Comment 11

Line 327 related to my major comment, " a comprehensive framework, such as ProtSSN, exhibits the best performance." Refers to performance on ProteinGym, yet the best-performing methods on ProteinGym are excluded from the comparison.

The primary comparisons were conducted using zero-shot models for fairness, meaning that the baseline models were not trained on MSA and did not use test performance to tune their hyperparameters. It's also worth noting that SaProt (the current SOTA model) had not been updated on the leaderboard at the time of submitting this paper. In the revised manuscript, we have included GEMME and TranceptEVE in Table 5 and SaProt in Tables 3, 5, and 6. While ProtSSN does not achieve SOTA performance in every individual task, our key argument in the analysis is to highlight the overall advantage of hybrid encoders compared to single sequence-based or structure-based models. We have made this statement clearer in the revised manuscript (line 349).

Comment 12

Line 347, line abruptly ends "equivariance when embedding protein geometry significantly." (?).

We have fixed the typo (lines 372-373).

Comment 13

Figure 3 I think can be made clearer. Instead of using True/false maybe be more explicit. For example in 3b, say something like "One-hot encoded" or "ESM-2 embedded".

The labels were set to True/False with the title of the subfigures so that they can be colored consistently.

Following the suggestion, we have updated the captions in the revised manuscript for clarity.

Comment 14

Lines 381-382 "average sequential embedding of all other Glycines" is to say that the score is taken as the average score in which Glycine is substituted at every other position in the peptide? Somewhat confused by the language "average sequential embedding" and think rephrasing could be done to make things clearer.

We have revised the related text accordingly for a clearer presentation (lines 406-413).

Comment 15

Table 5, and in mentions to VEP, if ProtSSN is leveraging AlphaFold for its structural information, I disagree that ProtSSN is not an MSA method, and I find it unfair to place ProtSSN in the "non-MSA" categories. If this isn't the case, then maybe making clearer the inputs etc. in the Methods will help.

We respectfully disagree with classifying a protein encoding method based solely on its input structure. While AF2 leverages MSA sequences to predict protein structures, this information is not used in our model, and our model is not exclusive to AF2-predicted structures. When applicable, the model can encode structures derived from experimental data or other folding methods. For example, in the manuscript, we compared the performance of ProtSSN using proteins folded by both AF2 and ESMFold.

However, we would like to emphasize that comparing the sensitivity of an encoding method across different structures or conformations is not the primary focus of our work. In contrast, some methods explicitly use MSA during model training. For instance, MSA-Transformer encodes MSA information directly into the protein embedding, and Tranception-retrieval utilizes different sets of MSA hyperparameters depending on the validation set's performance.

To avoid further confusion, we have revised the terms "MSA methods" and "non-MSA methods" in the manuscript to "zero-shot methods" and "few-shot methods."

Comment 16

Table 3 they're highlighted as the best, yet on ProteinGym there's several EVE models that do better as well as GEMME, which are not referenced.

The comparison in Table 3 focuses on zero-shot methods, whereas GEMME and EVE are few-shot models. Since these methods have different input requirements, directly comparing them could lead to unfair conclusions. For this reason, we reserved the comparisons with these few-shot models for Table 5, where we aim to provide a more comprehensive evaluation of all available methods.

Response to Reviewer 2

Summary:

To design proteins and predict disease, we want to predict the effects of mutations on the function of a protein. To make these predictions, biologists have long turned to statistical models that learn patterns that are conserved across evolution. There is potential to improve our predictions however by incorporating structure. In this paper, the authors build a denoising auto-encoder model that incorporates sequence and structure to predict mutation effects. The model is trained to predict the sequence of a protein given its perturbed sequence and structure. The authors demonstrate that this model is able to predict the effects of mutations better than sequence-only models.

Thank you for your thorough review and clear summary of our work. Below, we provide a detailed, point-by-point response to each of your questions and concerns.

Strengths:

The authors describe a method that makes accurate mutation effect predictions by informing its predictions with structure.

Thank you for your clear summary of our highlights.

Weaknesses:

Comment 1

It is unclear how this model compares to other methods of incorporating structure into models of biological sequences, most notably SaProt (https://www.biorxiv.org/content/10.1101/2023.10.01.560349v1.full.pdf).

In the revised manuscript, we have updated the performance results for SaProt's single models (both masked and unmasked versions with the pLDDT score) as well as the ensemble models. These updates are reflected in Tables 3, 5, and 6.

Comment 2

ProteinGym is largely made of deep mutational scans, which measure the effect of every mutation on a protein. These new benchmarks contain on average measurements of less than a percent of all possible point mutations of their respective proteins. It is unclear what sorts of protein regions these mutations are more likely to lie in; therefore it is challenging to make conclusions about what a model has necessarily learned based on its score on this benchmark. For example, several assays in this new benchmark seem to be similar to each other, such as four assays on ubiquitin performed at pH 2.25 to pH 3.0.

We agree that both DTm and DDG are smaller datasets, making them less comprehensive than ProteinGym. However, we believe DTm and DDG provide valuable supplementary insights for the following reasons:

(1) These two datasets are low-throughput and manually curated. Compared to datasets from high-throughput experiments like ProteinGym, they contain fewer errors from experimental sources and data processing, offering cleaner and more reliable data.

(2) Environmental factors are crucial for the function and properties of enzymes, which is a significant concern for many biologists when discussing enzymatic functions. Existing benchmarks like ProteinGym tend to simplify these factors and focus more on global protein characteristics (e.g., AA sequence), overlooking the influence of environmental conditions.

(3) While low-throughput datasets like DTm and DDG do not cover all AA positions or perform extensive saturation mutagenesis, these experiments often target mutations at sites with higher potential for positive outcomes, guided by prior knowledge. As a result, the positive-to-negative ratio is more meaningful than in random mutagenesis datasets, making these benchmarks more relevant for evaluating model performance.

We would like to emphasize that DTm and DDG are designed to complement existing benchmarks rather than replace ProteinGym. They address different scales and levels of detail in fitness prediction, and their inclusion allows for a more comprehensive evaluation of deep learning models.

Recommendations For The Authors:

Comment 1

I recommend including SaProt in your benchmarks.

In the revision, we added comparisons with SaProt in all the Tables (3, 5 and 6).

Comment 2

I also recommend investigating and giving a description of the bias in these new datasets.

The bias of the new benchmarks can be seen in Table 1, where the mutants are distributed evenly across different pH levels.

In the revision, we added a discussion regarding the new datasets in “Discussion and Conclusion” (lines 496-504 of the revised version).

Comment 3

I also recommend reporting the model's ability to predict disease using ClinVar -- this experiment is conspicuously absent.

Following the suggestion, we retrieved 2,525 samples from the ClinVar dataset available on ProteinGym’s website. Since the official source did not provide corresponding structure files, we performed the following three steps:

(1) We retrieved the UniProt IDs for the sequences from the UniProt website and downloaded the corresponding AlphaFold2 structures for 2,302 samples.

(2) For the remaining proteins, we used ColabFold 1.5.5 to perform structure prediction.

(3) Among these, 12 proteins were too long to be folded by ColabFold, for which we used the AlphaFold3 server for prediction.

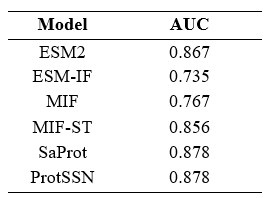

All processed structural data can be found at https://huggingface.co/datasets/tyang816/ClinVar_PDB. Our test results are provided in the following table. ProtSSN achieves the top performance over baseline methods.

Author response table 1.

-

eLife assessment

ProtSSN is a valuable approach that generates protein embeddings by integrating sequence and structural information, demonstrating improved prediction of mutation effects on thermostability compared to sequence-only models. The work is currently incomplete as it lacks a thorough comparison against other recent top-performing methods that also incorporate structural data, such as SaProt, EVE-based models, and GEMME. Providing a comprehensive analysis benchmarking ProtSSN against these state-of-the-art structure-based approaches would significantly strengthen the evidence supporting the utility of ProtSSN's joint sequence-structure representations.

-

Reviewer #1 (Public Review):

Summary:

The authors introduce a denoising-style model that incorporates both structure and primary-sequence embeddings to generate richer embeddings of peptides. My understanding is that the authors use ESM for the primary sequence embeddings, take resolved structures (or use structural predictions from AlphaFold when they're not available), and then develop an architecture to combine these two with a loss that seems reminiscent of diffusion models or masked language model approaches. The embeddings can be viewed as ensemble-style embedding of the two levels of sequence information, or with AlphaFold, an ensemble of two methods (ESM+AlphaFold). The authors also gather external datasets to evaluate their approach and compare it to previous approaches. The approach seems promising and appears to out-compete previous methods at several tasks. Nonetheless, I have strong concerns about a lack of verbosity as well as the exclusion of relevant methods and references.

Advances:

I appreciate the breadth of the analysis and comparisons to other methods. The authors separate tasks, models, and sizes of models in an intuitive, easy-to-read fashion that I find valuable for selecting a method for embedding peptides. Moreover, the authors gather two datasets for evaluating embeddings' utility for predicting thermostability. Overall, the work should be helpful for the field as more groups choose methods/pretraining strategies amenable to their goals, and can do so in an evidence-guided manner.

Considerations:

Primarily, a majority of the results and conclusions (e.g., Table 3) are reached using data and methods from ProteinGym, yet the best-performing methods on ProteinGym are excluded from the paper (e.g., EVE-based models and GEMME). In the ProteinGym database, these methods outperform ProtSSN models. Moreover, these models were published over a year---or even 4 years in the case of GEMME---before ProtSSN, and I do not see justification for their exclusion in the text.

Secondly, related to the comparison of other models, there is no section in the methods about how other models were used, or how their scores were computed. When comparing these models, I think it's crucial that there are explicit derivations or explanations for the exact task used for scoring each method. In other words, if the pre-training is indeed an important advance of the paper, the paper needs to show this more explicitly by explaining exactly which components of the model (and previous models) are used for evaluation. Are the authors extracting the final hidden layer representations of the model, treating these as features, and then using these features in a regression task to predict fitness/thermostability/DDG etc.? How are the model embeddings of other methods being used, since, for example, many of these methods output a k-dimensional embedding of a given sequence, rather than one single score that can be correlated with some fitness/functional metric? Summarily, I think the text lacks an explicit mention of how these embeddings are being summarized or used, as well as how this compares to the model presented.

I think the above issues can mainly be addressed by considering and incorporating points from Li et al. 2024[1] and potentially Tang & Koo 2024[2]. Li et al.[1] make extremely explicit the use of pretraining for downstream prediction tasks. Moreover, they benchmark pretraining strategies explicitly on thermostability (one of the main considerations in the submitted manuscript), yet there is no mention of this work nor the dataset used (FLIP (Dallago et al., 2021)) in this current work. I think a reference and discussion of [1] is critical, and I would also like to see comparisons in line with [1], as [1] is very clear about what features from pretraining are used, and how. If the comparisons with previous methods were done in this fashion, this level of detail needs to be included in the text.

To conclude, I think the manuscript would benefit substantially from a more thorough comparison of previous methods. Maybe one way of doing this is following [1] or [2], and using the final embeddings of each method for a variety of regression tasks---to really make clear where these methods are performing relative to one another. I think a more thorough methods section detailing how previous methods did their scoring is also important. Lastly, TranceptEVE (or a model comparable to it) and GEMME should also be mentioned in these results, or at the bare minimum, be given justification for their absence.

[1] Feature Reuse and Scaling: Understanding Transfer Learning with Protein Language Models

Francesca-Zhoufan Li, Ava P. Amini, Yisong Yue, Kevin K. Yang, Alex X. Lu

bioRxiv 2024.02.05.578959; doi: https://doi.org/10.1101/2024.02.05.578959

[2] Evaluating the representational power of pre-trained DNA language models for regulatory genomics

Ziqi Tang, Peter K Koo

bioRxiv 2024.02.29.582810; doi: https://doi.org/10.1101/2024.02.29.582810

-

Reviewer #2 (Public Review):

Summary:

To design proteins and predict disease, we want to predict the effects of mutations on the function of a protein. To make these predictions, biologists have long turned to statistical models that learn patterns that are conserved across evolution. There is potential to improve our predictions however by incorporating structure. In this paper, the authors build a denoising auto-encoder model that incorporates sequence and structure to predict mutation effects. The model is trained to predict the sequence of a protein given its perturbed sequence and structure. The authors demonstrate that this model is able to predict the effects of mutations better than sequence-only models.

As well, the authors curate a set of assays measuring the effect of mutations on thermostability. They demonstrate their model also predicts the effects of these mutations better than previous models and make this benchmark available for the community.

Strengths:

The authors describe a method that makes accurate mutation effect predictions by informing its predictions with structure.

Weaknesses:

It is unclear how this model compares to other methods of incorporating structure into models of biological sequences, most notably SaProt (https://www.biorxiv.org/content/10.1101/2023.10.01.560349v1.full.pdf).

ProteinGym is largely made of deep mutational scans, which measure the effect of every mutation on a protein. These new benchmarks contain on average measurements of less than a percent of all possible point mutations of their respective proteins. It is unclear what sorts of protein regions these mutations are more likely to lie in; therefore it is challenging to make conclusions about what a model has necessarily learned based on its score on this benchmark. For example, several assays in this new benchmark seem to be similar to each other, such as four assays on ubiquitin performed at pH 2.25 to pH 3.0.

-