Omissions of threat trigger subjective relief and prediction error-like signaling in the human reward and salience systems

Curation statements for this article:-

Curated by eLife

eLife assessment

This study presents valuable findings on the relationship between prediction errors and brain activation in response to unexpected omissions of painful electric shocks. The strengths are the research question posed, as it has remained unresolved if prediction errors in the context of biologically aversive outcomes resemble reward-based prediction errors. The evidence is solid but there are weaknesses in the experimental design, where verbal instructions do not align with experienced outcome probabilities. It is further unclear how to interpret neural prediction error signaling in the assumed absence of learning. The work will be of interest to cognitive neuroscientists and psychologists studying appetitive and aversive learning.

Abstract

The unexpected absence of danger constitutes a pleasurable event that is critical for the learning of safety. Accumulating evidence points to similarities between the processing of absent threat and the well-established reward prediction error (PE). However, clear-cut evidence for this analogy in humans is scarce. In line with recent animal data, we showed that the unexpected omission of (painful) electrical stimulation triggers activations within key regions of the reward and salience pathways and that these activations correlate with the pleasantness of the reported relief. Furthermore, by parametrically violating participants’ probability and intensity related expectations of the upcoming stimulation, we showed for the first time in humans that omission-related activations in the VTA/SN were stronger following omissions of more probable and intense stimulations, like a positive reward PE signal. Together, our findings provide additional support for an overlap in the neural processing of absent danger and rewards in humans.

Reviewer #1 (Public Review):

Summary:

Willems and colleagues test whether unexpected shock omissions are associated with reward-related prediction errors by using an axiomatic approach to investigate brain activation in response to unexpected shock omission. Using an elegant design that parametrically varies shock expectancy through verbal instructions, they see a variety of responses in reward-related networks, only some of which adhere to the axioms necessary for prediction error. In addition, there were associations between omission-related responses and subjective relief. They also use machine learning to predict relief-related pleasantness and find that none of the a priori "reward" regions were predictive of relief, which is an interesting finding that can be validated and pursued in future work.

Strengths:

The authors pre-registered their approach and the analyses are sound. In particular, the axiomatic approach tests whether a given region can truly be called a reward prediction error. Although several a priori regions of interest satisfied a subset of axioms, no ROI satisfied all three axioms, and the authors were candid about this. A second strength was their use of machine learning to identify a relief-related classifier. Interestingly, none of the ROIs that have been traditionally implicated in reward prediction error reliably predicted relief, which opens important questions for future research.

Weaknesses:

The authors have done many analyses to address weaknesses in response to reviews. I will still note that given that one third of participants (n=10) did not show parametric SCR in response to instructions, it seems like some learning did occur. As prediction error is so important to such learning, a weakness of the paper is that conclusions about prediction error might differ if dynamic learning were taken into account using quantitative models.

Reviewer #2 (Public Review):

The paper by Willems and colleagues aimed to uncover the neural implementation of threat omission and showed that VTA/SN activity was stronger following omissions of more probable and more intense shock stimulation, mimicking a reward PE signal.

My main concern remains the interpretation of the task as a learning paradigm (extinction) or simply an expectation violation task (difference between instructed and experienced probability), though I appreciate some of the extra analyses in the responses to the reviewers. Looking at both the behavioral and neural data, a clear difference emerges among the different US intensities and in the non-0% vs. 0% contrasts; however, the difference across probabilities was not clear in the figures, potentially in part due to the false instructions subjects received about the shock probabilities.

The lack of a probability-related PE demonstration, both in behavior and to a lesser extent in the imaging data, does not fully support the PE axioms (0% and 100% are by themselves interesting categories since the instruction and experience matched well and therefore might need to be interpreted differently from the other probabilities).

As the other reviewers pointed out, combining instructions with an extinction paradigm complicates the interpretation of the results. Also, the suggested trial-by-trial analysis was addressed with a Probability x Run interaction analysis, which still averaged over trials within each run to estimate a beta coefficient. So my evaluation remains that this is a valuable study for testing PE axioms in the human reward and salience systems, but the authors need to be extremely careful in their wording when explaining why this task is not really a learning paradigm (or why the learning component did not affect their results, a claim that conflicts with the probability-related SCR, pleasantness ratings, and BOLD signals).

Reviewer #3 (Public Review):

Summary:

The authors conducted a human fMRI study investigating the omission of expected electrical shocks with varying probabilities. Participants were informed of the probability of shock and shock intensity trial-by-trial. The time point corresponding to the absence of the expected shock (with varying probability) was framed as a prediction error producing the cognitive state of relief/pleasure for the participant. fMRI activity in the VTA/SN and ventral putamen corresponded to the surprising omission of a high probability shock. Participants' subjective relief at having not been shocked correlated with activity in brain regions typically associated with reward-prediction errors. The overall conclusion of the manuscript was that the absence of an expected aversive outcome in human fMRI looks like a reward-prediction error seen in other studies that use positive outcomes.

Strengths:

Overall, I found this to be a well-written human neuroimaging study investigating an often overlooked question on the role of aversive prediction errors, and how they may differ from reward-related prediction errors. The paper is well-written and the fMRI methods seem mostly rigorous and solid.

Once again, the authors were very responsive to feedback. I have no further comments.

Author response:

The following is the authors’ response to the previous reviews.

Reviewer #1 (Public Review):

The reviewer retained most of their comments from the previous reviewing round. In order to meet these comments and to further examine the dynamic nature of threat omission-related fMRI responses, we now re-analyzed our fMRI results using the single trial estimates. The results of these additional analyses are added below in our response to the recommendations for the authors of reviewer 1. However, we do want to reiterate that there was a factually incorrect statement concerning our design in the reviewer’s initial comments. Specifically, the reviewer wrote that “25% of shocks are omitted, regardless of whether subjects are told that the probability is 100%, 75%, 50%, 25%, or 0%.” We want to repeat that this is not what we did. 100% trials were always reinforced (100% reinforcement rate); 0% trials were never reinforced (0% reinforcement rate). For all other instructed probability levels (25%, 50%, 75%), the stimulation was delivered in 25% of the trials (25% reinforcement rate). We have elaborated on this misconception in our previous letter and have added this information more explicitly in the previous revision of the manuscript (e.g., lines 125-129; 223-224; 486-492).
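The reinforcement schedule described above can be written down as a small lookup. This is only an illustrative sketch: the function name is hypothetical and is not taken from the study's task code. It simply restates the stated design (100% always reinforced, 0% never reinforced, 25% delivery for the 25%, 50%, and 75% instructions).

```python
def actual_reinforcement_rate(instructed_probability: int) -> float:
    """Return the delivered-stimulation rate for an instructed probability (in %).

    Hypothetical helper that restates the design described in the text.
    """
    if instructed_probability == 100:
        return 1.0   # 100% trials were always reinforced
    if instructed_probability == 0:
        return 0.0   # 0% trials were never reinforced
    if instructed_probability in (25, 50, 75):
        return 0.25  # all intermediate levels shared a 25% reinforcement rate
    raise ValueError(f"unknown instructed probability: {instructed_probability}")


def instruction_gap(instructed_probability: int) -> float:
    """Instructed minus delivered rate; zero only for the 0%, 25%, and 100% levels."""
    return instructed_probability / 100 - actual_reinforcement_rate(instructed_probability)


for level in (0, 25, 50, 75, 100):
    print(level, actual_reinforcement_rate(level), instruction_gap(level))
```

The gap is nonzero only for the 50% and 75% instructions, which is where the instructed and experienced probabilities diverge.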

Reviewer #1 (Recommendations For The Authors):

I do not have any further recommendations, although I believe an analysis of learning-related changes is still possible with the trial-wise estimates from unreinforced trials. The authors' response does not clarify whether they tested for interactions with run, and thus the fact that there are main effects does not preclude learning. I kept my original comments regarding limitations, with the exception of the suggestion to modify the title.

We thank the reviewer for this recommendation. In line with their suggestion, we have now reanalyzed our main ROI results using the trial-by-trial estimates we obtained from the first-level omission>baseline contrasts. Specifically, we extracted beta-estimates from each ROI and entered them into the same Probability x Intensity x Run LMM we used for the relief and SCR analyses. Results from these analyses (in the full sample) were similar to our main results. For the VTA/SN model, we found main effects of Probability (F = 3.12, p = .04) and Intensity (F = 7.15, p < .001) (in the model where influential outliers were rescored to 2 SD from the mean). There was no main effect of Run (F = 0.92, p = .43) and no Probability x Run interaction (F = 1.24, p = .28). If the experienced contingency had interfered with the instructions, there should have been a Probability x Run interaction (with the effect of Probability only being present in the first runs). Since we did not observe such an interaction, our results indicate that even though some learning might still have taken place, the main effect of Probability remained present throughout the task.

There is an important side note regarding these analyses: For the first level GLM estimation, we concatenated the functional runs and accounted for baseline differences between runs by adding run-specific intercepts as regressors of no-interest. Hence, any potential main effect of run was likely modeled out at first level. This might explain why, in contrast to the rating and SCR results (see Supplemental Figure 5), we found no main effect of Run. Nevertheless, interaction effects should not be affected by including these run-specific intercepts.
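To illustrate what run-specific intercepts look like for concatenated runs, the following sketch builds one indicator column per run. The function name and the run lengths are hypothetical; how these regressors were actually constructed in the analysis software is not stated here.

```python
def run_intercept_columns(run_lengths):
    """Build one 0/1 indicator column per run for a concatenated time series.

    Each column equals 1 for the scans belonging to its run and 0 elsewhere,
    so its coefficient absorbs that run's baseline level.
    """
    total = sum(run_lengths)
    columns = []
    start = 0
    for length in run_lengths:
        column = [0] * total
        for scan in range(start, start + length):
            column[scan] = 1
        columns.append(column)
        start += length
    return columns


# Toy example: two runs of 2 and 3 scans (real runs are far longer).
for column in run_intercept_columns([2, 3]):
    print(column)
```

Because each column is constant within a run, it can only capture a run-wide shift in signal level, which is consistent with the point that a main effect of Run may be absorbed at the first level while condition-by-run interactions are not.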

Note that when we ran the single-trial analysis for the ventral putamen ROI, the effect of intensity became significant (F = 3.89, p = .02). Results neither changed for the NAc, nor the vmPFC ROIs.
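The rescoring of influential outliers to 2 SD from the mean, mentioned in this response, can be sketched as follows. This is an assumption-laden illustration: the criterion used to flag an observation as an influential outlier is not specified here, so the hypothetical helper below simply clips every value that lies more than 2 sample SDs from the mean.

```python
from statistics import mean, stdev


def rescore_outliers(values, n_sd=2.0):
    """Clip values lying beyond mean +/- n_sd sample standard deviations.

    Assumption: any value outside the band is rescored to the band edge;
    the study's actual outlier criterion may differ.
    """
    center = mean(values)
    spread = stdev(values)
    lower = center - n_sd * spread
    upper = center + n_sd * spread
    return [min(max(value, lower), upper) for value in values]


# Toy data: nine zeros and one extreme value.
betas = [0] * 9 + [10]
print(rescore_outliers(betas))
```

Values inside the band are returned unchanged, so only the extreme observation is pulled toward the rest of the distribution.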

Reviewer #2 (Public Review):

Comments on revised version:

I want to thank the authors for their thorough and comprehensive work in revising this manuscript. I agree with the authors that learning paradigms might not be a necessity when it comes to studying PE signals, but I don't particularly agree with some of the responses in the rebuttal letter ("Furthermore, conditioning paradigms generally only include one level of aversive outcome: the electrical stimulation is either delivered or omitted."). This is of course a correct description of the conditioning paradigm, but the same can be said for an instructed design: the aversive outcome was either delivered or not. That being said, adopting the instructed design itself is legitimate in my opinion.

We thank the reviewer for this comment. We have now modified the phrasing of this argument to clarify our reasoning (see lines 102-104: “First, these only included one level of aversive outcome: the electrical stimulation was either delivered at a fixed intensity, or omitted; but the intensity of the stimulation was never experimentally manipulated within the same task.”).

The reason why we mentioned that “the aversive outcome is either delivered or omitted” is because in most contemporary conditioning paradigms only one level of aversive US is used. In these cases, it is therefore not possible to investigate the effect of US Intensity. In our paradigm, we included multiple levels of aversive US, allowing us to assess how the level of aversiveness influences threat omission responding. It is indeed true that each level was delivered or not. However, our data clearly (and robustly across experiments, see Willems & Vervliet, 2021) demonstrate that the effects of the instructed and perceived unpleasantness of the US (as operationalized by the mean reported US unpleasantness during the task) on the reported relief and the omission fMRI responses are stronger than the effect of instructed probability.

My main concern, which the authors addressed at some length in the rebuttal letter, still remains the validity of the different instructed probabilities. Although subjects were told that the trials were independent, the big difference between 75% and 25% would more than likely confuse the subjects, especially given that most of us would fall prey to the Gambler's fallacy (or the law of small numbers) to some degree. When the instruction and subjective experience collide, some form of inference or learning must have occurred, making the otherwise straightforward analysis more complex. Therefore, I believe that more rigorous, quantitative learning modeling could dramatically improve the validity of the results. Of course, I also realize how much extra work is needed to add the computational part, but without it there is always a theoretical loophole in the current experimental design.

We agree with the reviewer that some learning may have occurred in our task. However, we believe the most important question in relation to our study is: to what extent did this learning influence our manipulations of interest?

In our reply to reviewer 1, we already showed that a re-analysis of the fMRI results using the trial-by-trial estimates of the omission contrasts revealed no Probability x Run interaction, suggesting that – overall – the probability effect remained stable over the course of the experiment. However, inspired by the alternative explanation that was proposed by this reviewer, we now also assessed the role of the Gambler’s fallacy in a separate set of analyses. Indeed, it is possible that participants start to expect a stimulation more after more time has passed since the last stimulation was experienced. To test this alternative hypothesis, we specified two new regressors that calculated for each trial of each participant how many trials had passed since the last stimulation (or since the beginning of the experiment) either overall (across all trials of all probability types; hence called the overall-lag regressor) or per probability level (across trials of each probability type separately; hence called the lag-per-probability regressor). For both regressors a value of 0 indicates that the previous trial was either a stimulation trial or the start of experiment, a value of 1 means that the last stimulation trial was 2 trials ago, etc.

The results of these additional analyses are added in a supplemental note (see supplemental note 6), and referred to in the main text (see lines 231-236: “Likewise, a post-hoc trial-by-trial analysis of the omission-related fMRI activations confirmed that the Probability effect for the VTA/SN activations was stable over the course of the experiment (no Probability x Run interaction) and remained present when accounting for the Gambler’s fallacy (i.e., the possibility that participants start to expect a stimulation more when more time has passed since the last stimulation was experienced) (see supplemental note 6). Overall, these post-hoc analyses further confirm the PE-profile of omission-related VTA/SN responses”).

Addition to supplemental material (pages 16-18)

Supplemental Note 6: The effect of Run and the Gambler’s Fallacy

A question that was raised by the reviewers was whether omission-related responses could be influenced by dynamical learning or the Gambler’s Fallacy, which might have affected the effectiveness of the Probability manipulation.

Inspired by this question, we exploratorily assessed the role of the Gambler’s Fallacy and the effects of Run in a separate set of analyses. Indeed, it is possible that participants start to expect a stimulation more when more time has passed since the last stimulation was experienced. To test this alternative hypothesis, we specified two new regressors that calculated for each trial of each participant how many trials had passed since the last stimulation (or since the beginning of the experiment) either overall (across all trials of all probability types; hence called the overall-lag regressor) or per probability level (across trials of each probability type separately; hence called the lag-per-probability regressor). For both regressors a value of 0 indicates that the previous trial was either a stimulation trial or the start of experiment, a value of 1 means that the last stimulation trial was 2 trials ago, etc.
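The two lag regressors described above can be computed with a simple running counter. The sketch below is illustrative only: the function and variable names are hypothetical, and it assumes that the lag-per-probability counter is reset only by a stimulation on a trial of the same probability level, which is one reading of the description.

```python
def lag_regressors(stimulated, probability):
    """Compute overall-lag and lag-per-probability values for each trial.

    stimulated:  list of booleans, True if the stimulation was delivered.
    probability: list of instructed probability levels, one per trial.
    A value of 0 means the previous (relevant) trial was a stimulation
    trial or that the experiment has just started.
    """
    overall_lag = []
    per_probability_lag = []
    overall_count = 0
    per_probability_count = {}
    for was_stimulated, level in zip(stimulated, probability):
        # The regressor reflects the history *before* the current trial.
        overall_lag.append(overall_count)
        per_probability_lag.append(per_probability_count.get(level, 0))
        if was_stimulated:
            overall_count = 0
            per_probability_count[level] = 0
        else:
            overall_count += 1
            per_probability_count[level] = per_probability_count.get(level, 0) + 1
    return overall_lag, per_probability_lag


# Toy sequence of five trials with two probability levels.
overall, per_level = lag_regressors(
    stimulated=[False, False, True, False, False],
    probability=[25, 50, 25, 50, 25],
)
print(overall)
print(per_level)
```

In this toy sequence the third trial has an overall lag of 2 (two unreinforced trials precede it), while the fourth trial, which directly follows a stimulation, has an overall lag of 0.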

The new models including these regressors for each omission response type (i.e., omission-related activations for each ROI, relief, and omission-SCR) were specified as follows:

(1) For the overall lag:

Omission response ~ Probability * Intensity * Run + US-unpleasantness + Overall-lag + (1|Subject).

(2) For the lag per probability level:

Omission response ~ Probability * Intensity * Run + US-unpleasantness + Lag-per-probability : Probability + (1|Subject).

Where US-unpleasantness scores were mean-centered across participants; “*” represents main effects and interactions, and “:” represents an interaction (without main effect). Note that we only included an interaction for the lag-per-probability model to estimate separate lag-parameters for each probability level.
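As a reminder of what the “*” shorthand expands to in this kind of model formula, the sketch below lists the fixed-effect terms implied by Probability * Intensity * Run. It is a generic illustration of the formula notation with a hypothetical helper name, not code from the analysis.

```python
from itertools import combinations


def expand_star(factors):
    """Expand A * B * C into all main effects and interactions (joined by ':')."""
    terms = []
    for order in range(1, len(factors) + 1):
        for combo in combinations(factors, order):
            terms.append(":".join(combo))
    return terms


for term in expand_star(["Probability", "Intensity", "Run"]):
    print(term)
```

By contrast, a term written with “:” alone, such as Lag-per-probability : Probability, adds only that interaction, which is why it yields a separate lag slope per probability level without a common lag main effect.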

The results of these analyses are presented in the tables below. Overall, we found that adding these lag-regressors to the model did not alter our main results. That is: for the VTA/SN, relief and omission-SCR, the main effects of Probability and Intensity remained. Interestingly, the overall-lag-effect itself was significant for VTA/SN activations and omission SCR, indicating that VTA/SN activations were larger when more time had passed since the last stimulation (beta = 0.19), whereas SCR were smaller when more time had passed (beta = -0.03). This pattern is reminiscent of the Perruchet effect, namely that the explicit expectancy of a US increases over a run of non-reinforced trials (in line with the gambler’s fallacy effect) whereas the conditioned physiological response to the conditional stimulus declines (in line with an extinction effect, Perruchet, 1985; McAndrew, Jones, McLaren, & McLaren, 2012). Thus, the observed dissociation between the VTA/SN activations and omission SCR might similarly point to two distinctive processes where VTA/SN activations are more dependent on a consciously controlled process that is subjected to the gambler’s fallacy, whereas the strength of the omission SCR responses is more dependent on an automatic associative process that is subjected to extinction. Importantly, however, even though the temporal distance to the last stimulation had these opposing effects on VTA/SN activations and omission SCRs, the main effects of the probability manipulation remained significant for both outcome variables. This means that the core results of our study still hold.

Next to the overall-lag effect, the lag-per-probability regressor was only significant for the vmPFC. A follow-up of the beta estimates of the lag-per-probability regressors for each probability level revealed that vmPFC activations increased with increasing temporal distance from the stimulation, but only for the 50% trials (beta = 0.47, t = 2.75, p < .01), and not the 25% (beta = 0.25, t = 1.49, p = .14) or the 75% trials (beta = 0.28, t = 1.62, p = .10).

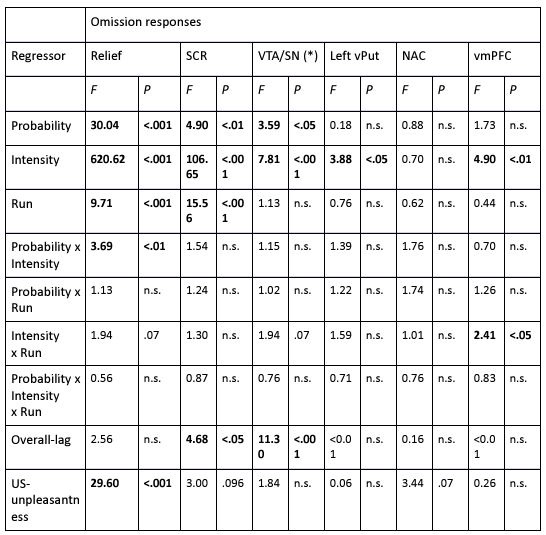

Author response table 1.

F-statistics and corresponding p-values from the overall lag model. (*) F-test and p-values were based on the model where outliers were rescored to 2SD from the mean. Note that when retaining the influential outliers for this model, the p-value of the probability effect was p = .06. For all other outcome variables, rescoring the outliers did not change the results. Significant effects are indicated in bold.

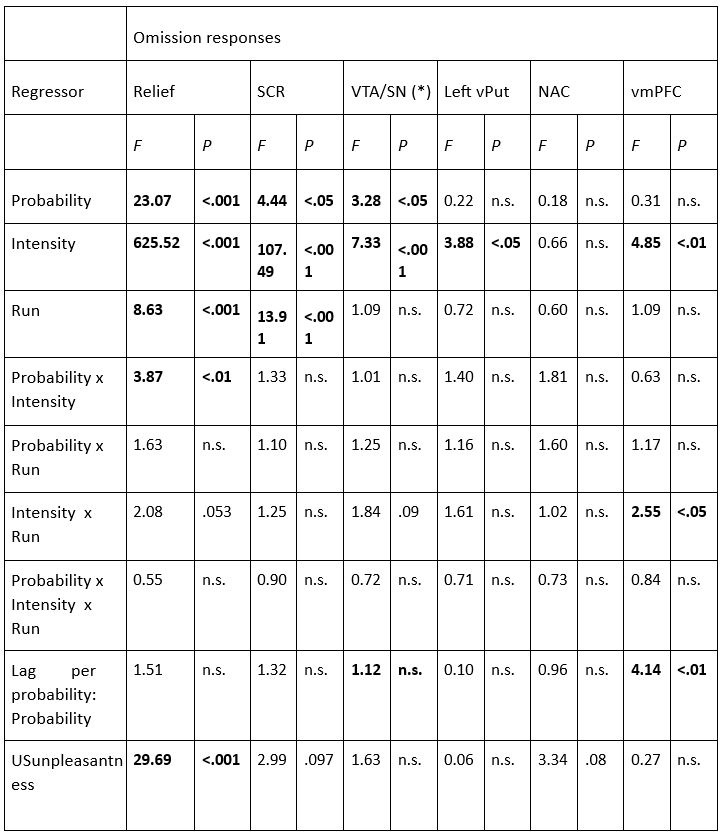

Author response table 2.

F-statistics and corresponding p-values from the lag per probability level model. (*) F-test and p-values were based on the model where outliers were rescored to 2SD from the mean. Note that when retaining the influential outliers for this model, the p-value of the Intensity x Run interaction was p = .05. For all other outcome variables, rescoring the outliers did not change the results. Significant effects are indicated in bold.

As the authors mentioned in the rebuttal letter, "selecting participants only if their anticipatory SCR monotonically increased with each increase in instructed probability 0% < 25% < 50% < 75% < 100%, N = 11 participants", only ~1/3 of the subjects actually showed strong evidence for the validity of the instructions. This further raises the question of whether the instructed design, given the interference between false instructions and dynamic learning across trials, is solid enough to test the hypothesis.

We agree with the reviewer that a monotonic increase in anticipatory SCR with increasing probability instructions would provide the strongest evidence that the manipulation worked. However, it is well known that SCR is a noisy measure, and so the chances to see this monotonic increase are rather small, even if the underlying threat anticipation increases monotonically. Furthermore, between-subject variation is substantial in physiological measures, and it is not uncommon to observe, e.g., differential fear conditioning in one measure, but not in another (Lonsdorf & Merz, 2017). It is therefore not so surprising that ‘only’ 1/3 of our participants showed the perfect pattern of monotonically increasing SCR with increasing probability instructions. That being said, it is also important to note that not all participants were considered for these follow-up analyses because valid SCR data was not always available.

Specifically, N = 4 participants were identified as anticipation non-responders (i.e., participants with a smaller average SCR to the clock on 100% than on 0% trials; pre-registered criterion) and were excluded from the SCR-related analyses, and N = 1 participant had missing data due to technical difficulties. This means that only 26 (and not 31) participants were considered for the post hoc analyses. Taking this information into account, 21 out of 26 participants (approximately 80%) showed stronger anticipatory SCR following 75% instructions compared to 25% instructions, and 11 out of 26 participants (approximately 40%) even showed the monotonic increase in their anticipatory SCR (see supplemental figure 4). Furthermore, although anticipatory SCR gradually decreased over the course of the experiment, there was no Run x Probability interaction, indicating that the effect of the instructions remained stable throughout the task (see supplemental figure 3).
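The participant counts and the monotonicity criterion above can be checked with a few lines. The sample sizes are taken from the text; the helper name and the example SCR values are hypothetical.

```python
def strictly_increasing(values):
    """True if each value is larger than the one before it."""
    return all(a < b for a, b in zip(values, values[1:]))


# Counts reported in the text.
n_total, n_nonresponders, n_missing = 31, 4, 1
n_valid = n_total - n_nonresponders - n_missing

share_75_over_25 = 21 / n_valid  # stronger SCR after 75% than after 25% instructions
share_monotonic = 11 / n_valid   # SCR increasing across 0% < 25% < 50% < 75% < 100%

print(n_valid, round(share_75_over_25, 2), round(share_monotonic, 2))

# Hypothetical mean anticipatory SCR per instructed level for one participant.
example_scr = [0.10, 0.14, 0.15, 0.21, 0.30]
print(strictly_increasing(example_scr))
```

The strict criterion fails as soon as a single adjacent pair of levels is reversed, which illustrates why a noisy measure such as SCR rarely satisfies it even when the underlying trend is increasing.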

Reviewer #2 (Recommendations For The Authors):

A more operational approach might be to break the trials into different sections along the timeline and examine how much the results might have been affected across time. I expect the manipulation checks would hold for the first one or two runs and the authors then would have good reasons to focus on the behavioral and imaging results for those runs.

This recommendation resembles the recommendation by reviewer 1. In our reply to reviewer 1, we showed the results of a re-analysis of the fMRI data using the trial-by-trial estimates of the omission contrasts, which revealed no Probability x Run interaction, suggesting that – overall – the probability effect remained (more or less) stable over the course of the experiment. For a more in-depth discussion of the results of this additional analysis, we refer to our answer to reviewer 1.

Reviewer #3 (Public Review):

Comments on revised version:

The authors were extremely responsive to the comments and provided a comprehensive rebuttal letter with a lot of detail to address the comments. The authors clarified their methodology, and rationale for their task design, which required some more explanation (at least for me) to understand. Some of the design elements were not clear to me in the original paper.

The initial framing for their study is still in the domain of learning. The paper starts off with a description of extinction as the prime example of when threat is omitted. This could lead a reader to think the paper would speak to the role of prediction errors in extinction learning processes. But this is not their goal, as they emphasize repeatedly in their rebuttal letter. The revision also now details how using a conditioning/extinction framework doesn't suit their experimental needs.

We thank the reviewer for pointing out this potential cause of confusion. We have now rewritten the starting paragraph of the introduction to more closely focus on prediction errors, and only discuss fear extinction as a potential paradigm that has been used to study the role of threat omission PE for fear extinction learning (see lines 40-55). We hope that these adaptations are sufficient to prevent any false expectations. However, as we have mentioned in our previous response letter, not talking about fear extinction at all would also not make sense in our opinion, since most of the knowledge we have gained about threat omission prediction errors to date is based on studies that employed these paradigms.

Adaptation in the revised manuscript (lines 40-55):

“We experience pleasurable relief when an expected threat stays away [1]. This relief indicates that the outcome we experienced (“nothing”) was better than we expected it to be (“threat”). Such a mismatch between expectation and outcome is generally regarded as the trigger for new learning, and is typically formalized as the prediction error (PE) that determines how much there can be learned in any given situation [2]. Over the last two decades, the PE elicited by the absence of expected threat (threat omission PE) has received increasing scientific interest, because it is thought to play a central role in learning of safety. Impaired safety learning is one of the core features of clinical anxiety [4]. A better understanding of how the threat omission PE is processed in the brain may therefore be key to optimizing therapeutic efforts to boost safety learning. Yet, despite its theoretical and clinical importance, research on how the threat omission PE is computed in the brain is only emerging.

To date, the threat omission PE has mainly been studied using fear extinction paradigms that mimic safety learning by repeatedly confronting a human or animal with a threat predicting cue (conditional stimulus, CS; e.g. a tone) in the absence of a previously associated aversive event (unconditional stimulus, US; e.g., an electrical stimulation). These (primarily non-human) studies have revealed that there are striking similarities between the PE elicited by unexpected threat omission and the PE elicited by unexpected reward.”

It is reasonable to develop a new task to answer their experimental questions. By no means is there a requirement to use a conditioning/extinction paradigm to address their questions. As they say, "it is not necessary to adopt a learning paradigm to study omission responses", which I agree with. But the authors seem to want to have it both ways: they frame their paper around how important prediction errors are to extinction processes, but then go out of their way to say how they can't test their hypotheses with a learning paradigm.

Part of their argument that they needed to develop their own task "outside of a learning context" goes as follows:

(1) "...conditioning paradigms generally only include one level of aversive outcome: the electrical stimulation is either delivered or omitted. As a result, the magnitude-related axiom cannot be tested."

(2) "....in conditioning tasks people generally learn fast, rendering relatively few trials on which the prediction is violated. As a result, there is generally little intra-individual variability in the PE responses"

(3) "...because of the relatively low signal to noise ratio in fMRI measures, fear extinction studies often pool across trials to compare omission-related activity between early and late extinction, which further reduces the necessary variability to properly evaluate the probability axiom"

These points seem to hinge on how tasks are "generally" constructed. However, there are many adaptations to learning tasks:

(1) There is no rule that conditioning can't include different levels of aversive outcomes following different cues. In fact, their own design uses multiple cues that signal different intensities and probabilities. Saying that conditioning "generally only include one level of aversive outcome" is not an explanation for why "these paradigms are not tailored" for their research purposes. There are also several conditioning studies that have used different cues to signal different outcome probabilities. This is not uncommon, and in fact is what they use in their study, only with an instruction rather than through learning through experience, per se.

(2) Conditioning/extinction doesn't have to occur fast. Just because people "generally learn fast" doesn't mean this has to be the case. Experiments can be designed to make learning more challenging or take longer (e.g., partial reinforcement). And there can be intra-individual differences in conditioning and extinction, especially if some cues have a lower probability of predicting the US than others. Again, because most conditioning tasks are usually constructed in a fairly simplistic manner doesn't negate the utility of learning paradigms to address PE axioms.

(3) Many studies have tracked trial-by-trial BOLD signal in learning studies (e.g., using parametric modulation). Again, just because other studies "often pool across trials" is not an explanation for these paradigms being ill-suited to study prediction errors. Indeed, most computational models used in fMRI are predicated on analyzing data at the trial level.

We thank the reviewer for these remarks. The “fear conditioning and extinction paradigms” that we were referring to in this paragraph were the ones that have been used to study threat omission PE responses in previous research (e.g., Raczka et al., 2011; Thiele et al. 2021; Lange et al. 2020; Esser et al., 2021; Papalini et al., 2021; Vervliet et al. 2017). These studies have mainly used differential/multiple-cue protocols where either one (or two) CS+ and one CS- are trained in an acquisition phase and extinguished in the next phase. Thus, in these paradigms: (1) only one level of aversive US is used; (2) as safety learning develops over the course of extinction, there are relatively few omission trials during which “large” threat omission PEs can be observed (e.g. of the 24 CS+ trials that were used during extinction in Esser et al., the steepest decreases in expectancy – and thus the largest PEs – were found in the first 6 trials); and (3) there was never absolute certainty that the stimulation would no longer follow. Some of these studies have indeed estimated the threat omission PE during the extinction phase based on learning models, and have entered these estimates as parametric modulators to CS-offset regressors. This is very informative. However, the exact model that was used differed per study (e.g. Rescorla-Wagner in Raczka et al. and Thiele et al.; or a Rescorla-Wagner–Pearce-Hall hybrid model in Esser et al.). We wanted to analyze threat omission responses without commitment to a particular learning model. Thus, in order to examine how threat omission responses vary as a function of probability-related expectations, a paradigm that has multiple probability levels is recommended (e.g. Rutledge et al., 2010; Ojala et al., 2022).
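To illustrate point (2), the following minimal sketch simulates a plain Rescorla-Wagner learner during extinction. It is not the model fitted in any of the cited studies: the learning rate of 0.3 and the starting expectancy of 1.0 are assumptions chosen for illustration, and only the 24 CS+ extinction trials are taken from the example above.

```python
def extinction_prediction_errors(n_trials=24, learning_rate=0.3, initial_expectancy=1.0):
    """Return Rescorla-Wagner PEs for CS+ trials on which the US is always omitted.

    learning_rate and initial_expectancy are illustrative assumptions.
    """
    expectancy = initial_expectancy
    errors = []
    for _ in range(n_trials):
        outcome = 0.0                      # US omitted on every extinction trial
        pe = outcome - expectancy          # threat omission PE (negative aversive PE)
        errors.append(pe)
        expectancy += learning_rate * pe   # expectancy update shrinks the next PE
    return errors


errors = extinction_prediction_errors()
magnitudes = [abs(e) for e in errors]

# Share of the total PE magnitude that falls on the first six omission trials.
share_first_six = sum(magnitudes[:6]) / sum(magnitudes)
print(f"Share of total |PE| in the first 6 trials: {share_first_six:.2f}")
```

Under these assumptions the PE magnitude decays geometrically, so most of the informative variance is concentrated in the first few omission trials. A slower learning rate would spread it over more trials, which is the kind of adaptation the reviewer refers to.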

The reviewer rightfully pointed out that conditioning paradigms (more generally) can be tailored to fit our purposes as well. Still, when doing so, the same adaptations as we outlined above need to be considered: i.e. include different levels of US intensity; different levels of probability; and conditions with full certainty about the US (non)occurrence. In our attempt to keep the experimental design as simple and straightforward as possible, we decided to rely on instructions for this purpose, rather than to train 3 (US levels) x 5 (reinforcement levels) = 15 different CSs. It is certainly possible to train multiple CSs of varying reinforcement rates (e.g. Grings et al. 1971, Ojala et al., 2022). However, given that US expectation on each trial would primarily depend on the individual learning processes of the participants, using a conditioning task would make it more difficult to maintain experimental control over the level of US expectation elicited by each CS. As a result, this would likely require more extensive training, and thus prolong the study procedure considerably. Furthermore, even though previous studies have trained different CSs for different reinforcement rates, most of these studies have only used one level of US. Thus, in order not to complicate our task too much, we decided to rely on instructions rather than to train CSs for multiple US levels (in addition to multiple reinforcement rates).

We have tried to clarify our reasoning in the revised version of the manuscript (see introduction, lines 100-113):

“The previously discussed fear conditioning and extinction studies have been invaluable for clarifying the role of the threat omission PE within a learning context. However, these studies were not tailored to create the varying intensity and probability-related conditions that are required to systematically evaluate the threat omission PE in the light of the PE axioms. First, these only included one level of aversive outcome: the electrical stimulation was either delivered or omitted; but the intensity of the stimulation was never experimentally manipulated within the same task. As a result, the magnitude-related axiom could not be tested. Second, as safety learning progressively developed over the course of extinction learning, the most informative trials to evaluate the probability axiom (i.e. the trials with the largest PE) were restricted to the first few CS+ offsets of the extinction phase, and the exact number of these informative trials likely differed across participants as a result of individually varying learning rates. This limited the experimental control and necessary variability to systematically evaluate the probability axiom. Third, because CS-US contingencies changed over the course of the task (e.g. from acquisition to extinction), there was never complete certainty about whether the US would (not) follow. This precluded a direct comparison of fully predicted outcomes. Finally, within a learning context, it remains unclear whether brain responses to the threat omission are in fact responses to the violation of expectancy itself, or whether they are the result of subsequent expectancy updating.”

Again, the authors are free to develop their own task design that they think is best suited to address their experimental questions. For instance, if they truly believe that omission-related responses should be studied independent of updating. The question I'm still left puzzling is why the paper is so strongly framed around extinction (the word appears several times in the main body of the paper), which is a learning process, and yet the authors go out of their way to say that they can only test their hypotheses outside of a learning paradigm.

As we have mentioned before, the reason why we refer to extinction studies is because most evidence on threat omission PE to date comes from fear extinction paradigms.

The authors did address other areas of concern, to varying extents. Some of these issues were somewhat glossed over in the rebuttal letter by noting them as limitations. For example, the issue with comparing 100% stimulation to 0% stimulation, when the shock contaminates the fMRI signal. This was noted as a limitation that should be addressed in future studies, bypassing the critical point.

It is unclear to us what the reviewer means with “bypassing the critical point”. We argued in the manuscript that the contrast we initially specified and preregistered to study axiom 3 (fully predicted outcomes elicit equivalent activation) could not be used for this purpose, as it was confounded by the delivery of the stimulation. Because 100% trials always included the stimulation and 0% trials never included stimulation, there was no way to disentangle activations related to full predictability from activations related to the stimulation as such.

Reviewer #3 (Recommendations For The Authors):

I'm not sure the new paragraph explaining why they can't use a learning task to test their hypotheses is very convincing, as I noted in my review. Again, it is not a problem to develop a new task to address their questions. They can justify why they want to use their task without describing (incorrectly in my opinion) that other tasks "generally" are constructed in a way that doesn't suit their needs.

For an overview of the changes we made in response to this recommendation, we refer to our reply to the public review.

We look forward to your reply and are happy to provide answers to any further questions or comments you may have.

Author response:

The following is the authors’ response to the original reviews.

As you will see, the main changes in the revised manuscript pertain to the structure and content of the introduction. Specifically, we have tried to more clearly introduce our paradigm, the rationale behind the paradigm, why it is different from learning paradigms, and why we study “relief”.

In this rebuttal letter, we will go over the reviewers’ comments one-by-one and highlight how we have adapted our manuscript accordingly. However, because one concern was raised by all reviewers, we will start with an in-depth discussion of this concern.

The shared concern pertained to the validity of the EVA task as a model to study threat omission responses. Specifically, all reviewers questioned the effectiveness of our so-called “inaccurate”, “false” or “ruse” instructions in triggering an equivalent level of shock expectancy, and relatedly, how this effectiveness was affected by dynamic learning over the course of the task.

We want to thank the reviewers for raising this important issue. Indeed, it is a vital part of our design and it therefore deserves considerable attention. It is now clear to us that in the previous version of the manuscript we may have focused too little on why we moved away from a learning paradigm, and how we made sure that the instructions were successful at raising the necessary expectations; and how the instructions were affected by learning. We believe this has resulted in some misunderstandings, which consequently may have cast doubts on our results. In the following sections, we will go into these issues.

The rationale behind our instructed design

The main aim of our study was to investigate brain responses to unexpected omissions of threat in greater detail by examining their similarity to the reward prediction error axioms (Caplin & Dean, 2008), and exploring the link with subjective relief. Specifically, we hypothesized that omission-related responses should be dependent on the probability and the intensity of the expected-but-omitted aversive event (i.e., electrical stimulation), meaning that the response should be larger when the expected stimulation was stronger and more expected, and that fully predicted outcomes should not trigger a difference in responding.

To this end, we required that participants had varying levels of threat probability and intensity predictions, and that these predictions would most of the time be violated. Although we fully agree with the reviewers that fear conditioning and extinction paradigms can provide an excellent way to track the teaching properties of prediction error responses (i.e., how they are used to update expectancies on future trials), we argued that they are less suited to create the varying probability and intensity-related conditions we required (see Willems & Vervliet, 2021). Specifically, in a standard conditioning task participants generally learn fast, rendering relatively few trials on which the prediction is violated. As a result, there is generally little intraindividual variability in the prediction error responses. This precludes an in-depth analysis of the probability-related effects. Furthermore, conditioning paradigms generally only include one level of aversive outcome: the electrical stimulation is either delivered or omitted. As a result, intensity-related effects cannot be tested. Finally, because CS-US contingencies change over the course of a fear conditioning and extinction study (e.g. from acquisition to extinction), there is never complete certainty about when the US will (not) follow. This precludes a direct comparison of fully predicted outcomes.

Another added value of studying responses to the prediction error at threat omission outside a learning context is that it can offer a way to disentangle responses to the violation of threat expectancy, with those of subsequent expectancy updating.

Also note that Rutledge and colleagues (2010), who were the first to show that human fMRI responses in the Nucleus Accumbens comply with the reward prediction error axioms, also did not use learning experiences to induce expectancy. In that sense, we argued it was not necessary to adopt a learning paradigm to study threat omission responses.

Adaptations in the revised manuscript: We included two new paragraphs in the introduction of the revised manuscript to elaborate on why we opted not to use a learning paradigm in the present study (lines 90-112).

“However, is a correlation with the theoretical PE over time sufficient for neural activations/relief to be classified as a PE-signal? In the context of reward, Caplin and colleagues proposed three necessary and sufficient criteria all PE-signals should comply to, independent of the exact operationalizations of expectancy and reward (the so-called axiomatic approach24,25; which has also been applied to aversive PE26–28). Specifically, the magnitude of a PE signal should: (1) be positively related to the magnitude of the reward (larger rewards trigger larger PEs); (2) be negatively related to likelihood of the reward (more probable rewards trigger smaller PEs); and (3) not differentiate between fully predicted outcomes of different magnitudes (if there is no error in prediction, there should be no difference in the PE signal).”

“It is evident that fear conditioning and extinction paradigms have been invaluable for studying the role of the threat omission PE within a learning context. However, these paradigms are not tailored to create the varying intensity and probability-related conditions that are required to evaluate the threat omission PE in the light of the PE axioms. First, conditioning paradigms generally only include one level of aversive outcome: the electrical stimulation is either delivered or omitted. As a result, the magnitude-related axiom cannot be tested. Second, in conditioning tasks people generally learn fast, rendering relatively few trials on which the prediction is violated. As a result, there is generally little intra-individual variability in the PE responses. Moreover, because of the relatively low signal to noise ratio in fMRI measures, fear extinction studies often pool across trials to compare omission-related activity between early and late extinction16, which further reduces the necessary variability to properly evaluate the probability axiom. Third, because CS-US contingencies change over the course of the task (e.g. from acquisition to extinction), there is never complete certainty about whether the US will (not) follow. This precludes a direct comparison of fully predicted outcomes. Finally, within a learning context, it remains unclear whether PE-related responses are in fact responses to the violation of expectancy itself, or whether they are the result of subsequent expectancy updating.”
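As a purely illustrative sketch (not the analysis model used in the manuscript), the three axioms can be restated for threat omission with a toy expected-value signal. The linear form `outcome - expected` and the numeric intensity codes (1 = weak, 2 = moderate, 3 = strong) are assumptions made only for this example. Because the omitted event is aversive, the probability axiom flips sign relative to reward: omissions of more probable stimulations yield larger positive PEs.

```python
# Hypothetical numeric coding of the instructed intensity levels.
INTENSITY = {"weak": 1.0, "moderate": 2.0, "strong": 3.0}


def aversive_pe(probability, intensity, delivered):
    """Toy prediction error: obtained value minus expected value.

    probability: instructed stimulation probability in [0, 1]
    intensity:   aversive magnitude of the instructed stimulation (> 0)
    delivered:   True if the stimulation was delivered, False if omitted
    """
    expected = -probability * intensity        # expected (negative) value of the trial
    outcome = -intensity if delivered else 0.0 # value of what actually happened
    return outcome - expected


# Axiom 1 (magnitude): omitting a stronger stimulation gives a larger PE.
axiom1 = aversive_pe(0.5, INTENSITY["strong"], False) > aversive_pe(0.5, INTENSITY["weak"], False)

# Axiom 2 (probability): omitting a more probable stimulation gives a larger PE.
axiom2 = aversive_pe(0.75, INTENSITY["moderate"], False) > aversive_pe(0.25, INTENSITY["moderate"], False)

# Axiom 3 (fully predicted outcomes): no PE on 0% omissions or 100% deliveries,
# whatever the intensity.
axiom3 = all(
    aversive_pe(0.0, i, False) == 0.0 and aversive_pe(1.0, i, True) == 0.0
    for i in INTENSITY.values()
)

print(axiom1, axiom2, axiom3)
```

A measured signal (BOLD response, relief rating, omission SCR) can be compared against the same three ordinal patterns without committing to this or any other specific learning model.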

Can verbal instructions be used to raise the expectancy of shock?

The most straightforward way to obtain sufficient variability in both probability and intensity-related predictions is by directly providing participants with instructions on the probability and intensity of the electrical stimulation. In a previous behavioral study, we have shown that omission responses (self-reported relief and omission SCR) indeed varied with these instructions (Willems & Vervliet, 2021). In addition, the manipulation checks that are reported in the supplemental material provided further support that the verbal instructions were effective at raising the associated expectancy of stimulation. Specifically, participants recollected having received more stimulations after higher probability instructions (see Supplemental Figure 2). Furthermore, we found that anticipatory SCR, which we used as a proxy of fearful expectation, increased with increasing probability and intensity (see Supplemental Figure 3). This suggests that it is not necessary to have expectation based on previous experience if we want to evaluate threat omission responses in the light of the prediction error axioms.

Adaptations in the revised manuscript: We more clearly referred to the manipulation checks that are presented in the supplementary material in the results section of the main paper (lines 135-141).

“The verbal instructions were effective at raising the expectation of receiving the electrical stimulation in line with the provided probability and intensity levels. Anticipatory SCR, which we used as a proxy of fearful expectation, increased as a function of the probability and intensity instructions (see Supplementary Figure 3). Accordingly, post-experimental questions revealed that by the end of the experiment participants recollected having received more stimulations after higher probability instructions, and were willing to exert more effort to prevent stronger hypothetical stimulations (see Supplementary Figure 2).”

How did the inconsistency between the instructed and experienced probability impact our results?

All reviewers questioned how the inconsistency between the instructed and experienced probability might have impacted the probability-related results. However, judging from the way the comments were framed, it seems that part of the concern was based on a misunderstanding of the design we employed. Specifically, reviewer 1 mentions that “To ensure that the number of omissions is similar across conditions, the task employs inaccurate verbal instructions; I.e., 25% of shocks are omitted regardless of whether subjects are told that the probability is 100%, 75%, 50%, 25%, 0%.”, and reviewer 3 states that “... the fact remains that they do not get shocks outside of the 100% probability shock. So learning is occurring, at least for subjects who realize the probability cue is actually a ruse.” We want to emphasize that this was not what we did, and if it were true, we fully agree with the reviewers that it would have caused serious trust- and learning-related issues, given that it would be immediately evident to participants that probability instructions were false. It is clear that under such circumstances, dynamic learning would be a big issue.

However, in our task 0% and 100% instructions were always accurate. This means that participants never received a stimulation following 0% instructions and always received the stimulation of the given intensity on the 100% instructions (see Supplemental Figure 1 for an overview of the trial types). Only for the 25%, 50% and 75% trials an equal reinforcement rate (25%) was maintained, meaning that the stimulation followed in 25% of the trials, irrespective of whether a 25%, 50% or 75% instruction was given. The reason for this was that we wanted to maximize and balance the number of omission trials across the different probability levels, while also keeping the total number of presentations per probability instruction constant. We reasoned that equating the reinforcement rate across the 25%, 50% and 75% instructions should not be detrimental, because (1) in these trials there was always the possibility that a stimulation would follow; and (2) we instructed the participants that each trial is independent of the previous ones, which should have discouraged them from actively counting the number of shocks in order to predict future shocks.

Adaptations in the revised manuscript: We have tried to further clarify the design in several sections of the manuscript, including the introduction (lines 121-125), results (line 220) and methods (lines 478-484) sections:

Adaptation in the Introduction section: “Specifically, participants received trial-by-trial instructions about the probability (0%, 25%, 50%, 75% and 100%) and intensity (weak, moderate, strong) of a potentially painful upcoming electrical stimulation, time-locked by a countdown clock (see Fig.1A). While stimulations were always delivered on 100% trials and never on 0% trials, most of the other trials (25%-75%) did not contain the expected stimulation and hence provoked an omission PE.”

Adaptation in the Results section: “Indeed, the provided instructions did not map exactly onto the actually experienced probabilities, but were all followed by stimulation in 25% of the trials (except for the 0% trials and the 100% trials).”

Adaptation in the Methods section: “Since we were mainly interested in how omissions of threat are processed, we wanted to maximize and balance the number of omission trials across the different probability and intensity levels, while also keeping the total number of presentations per probability and intensity instruction constant. Therefore, we crossed all non-0% probability levels (25, 50, 75, 100) with all intensity levels (weak, moderate, strong) (12 trials). The three 100% trials were always followed by the stimulation of the instructed intensity, while stimulations were omitted in the remaining nine trials. Six additional trials were intermixed in each run: Three 0% omission trials with the information that no electrical stimulation would follow (akin to 0% Probability information, but without any Intensity information as it does not apply); and three trials from the Probability x Intensity matrix that were followed by electrical stimulation (across the four runs, each Probability x Intensity combination was paired at least once, and at most twice with the electrical stimulation).”
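The trial structure described above can be sketched as follows, to make the resulting reinforcement rates explicit. This is a hedged reconstruction, not the actual experiment code: the function names, the random trial order and, in particular, the rule for which Probability x Intensity combinations receive a second stimulation (here: one per probability level) are assumptions, since the text only states "at least once, and at most twice".

```python
import itertools
import random

N_RUNS = 4
PROBABILITIES = [25, 50, 75, 100]
INTENSITIES = ["weak", "moderate", "strong"]


def build_runs(seed=0):
    """Build four runs of 18 trials each, following the quoted Methods text."""
    rng = random.Random(seed)
    uncertain = [(p, i) for p in (25, 50, 75) for i in INTENSITIES]  # 9 combinations
    # 12 reinforced slots over 4 runs: each combination once, plus one extra
    # per probability level (assumption; the text does not specify which).
    extras = [(p, rng.choice(INTENSITIES)) for p in (25, 50, 75)]
    reinforced = uncertain + extras
    rng.shuffle(reinforced)

    runs = []
    for r in range(N_RUNS):
        # 12 trials from the full matrix: only the 100% trials are reinforced.
        trials = [
            {"probability": p, "intensity": i, "stimulation": p == 100}
            for p, i in itertools.product(PROBABILITIES, INTENSITIES)
        ]
        # Three 0% trials without intensity information.
        trials += [{"probability": 0, "intensity": None, "stimulation": False} for _ in range(3)]
        # Three reinforced trials from the 25%-75% part of the matrix.
        trials += [
            {"probability": p, "intensity": i, "stimulation": True}
            for p, i in reinforced[3 * r: 3 * r + 3]
        ]
        rng.shuffle(trials)  # trial order is an assumption
        runs.append(trials)
    return runs


def reinforcement_rate(runs, probability):
    """Experienced proportion of stimulated trials for one instructed probability."""
    trials = [t for run in runs for t in run if t["probability"] == probability]
    return sum(t["stimulation"] for t in trials) / len(trials)


runs = build_runs()
for level in (0, 25, 50, 75, 100):
    print(level, reinforcement_rate(runs, level))
```

With these assumptions each 25%, 50% and 75% instruction is presented 16 times and reinforced 4 times, which gives the 25% experienced rate, while 0% and 100% instructions remain fully accurate.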

Could the incongruence between the instructed and experienced reinforcement rate have detrimental effects on the probability effect? We agree with reviewer 2 that it is possible that the inconsistency between instructed and experienced reinforcement rates could have rendered the exact probability information less informative to participants, which might have resulted in them paying less attention to the probability information whenever the probability was not 0% or 100%. This might to some extent explain the relatively larger difference in responding between 0% and 25% to 75% trials, but the relatively smaller differences between the 25% to 75% trials.

However, there are good reasons to believe that the relatively smaller difference between 25% to 75% trials was not caused by the “inaccurate” nature of our instructions, but is inherent to “uncertain” probabilities.

We added a description of these reasons to the supplementary materials in a supplementary note (supplementary note 4; lines 97-129 in supplementary materials), and added a reference to this note in the methods section (lines 488-490).

“Supplementary Note 4: “Accurate” probability instructions do not alter the Probability-effect

A question that was raised by the reviewers was whether the inconsistency between the probability instruction and the experienced reinforcement rate could have detrimental effects on the Probability-related results; especially because the effect of Probability was smaller when only including non-0% trials.

However, there are good reasons to believe that the relatively smaller difference between 25% to 75% trials was not caused by the “inaccurate” nature of our instructions, but that they are inherent to “uncertain” probabilities.

First, in a previously unpublished pilot study, we provided participants with “accurate” probability instructions, meaning that the instruction corresponded to the actual reinforcement rate (e.g., 75% instructions were followed by a stimulation in 75% of the trials etc.). In line with the present results and our previous behavioral study (Willems & Vervliet, 2021), the results of this pilot (N = 20) showed that the difference in the reported relief between the different probability levels was largest when comparing 0% and the rest (25%, 50% and 75%). Furthermore, the overall effect size of Probability (excluding 0%) matched the one of our previous behavioral study (Willems & Vervliet, 2021): ηp² = +/- 0.50.”

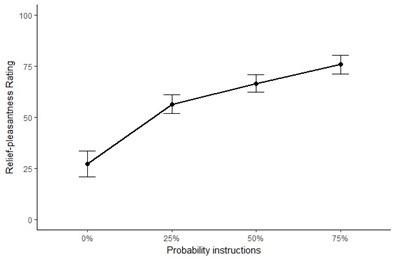

Author response image 1.

Main effect of Probability including 0%: F(1.74, 31.23) = 53.94, p < .001, ηp² = 0.75. Main effect of Probability excluding 0%: F(1.50, 28.43) = 21.03, p < .001, ηp² = 0.53.
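For readers who want to check the reported effect sizes, partial eta squared can be recovered from an F statistic and its degrees of freedom with the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The helper name below is hypothetical; the input values are the ones reported in the caption above.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


including_zero = partial_eta_squared(53.94, 1.74, 31.23)
excluding_zero = partial_eta_squared(21.03, 1.50, 28.43)

print(round(including_zero, 2))  # 0.75
print(round(excluding_zero, 2))  # 0.53
```

The identity also holds for the sphericity-corrected degrees of freedom reported here, because the correction scales both degrees of freedom by the same factor.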

Second, other published studies that used CSs with varying reinforcement rates (with or without explicit written instructions about the reinforcement rates) also showed that the difference in expectations, anticipatory SCR or omission SCR was largest when comparing the CS0% to the other CSs of varying reinforcement rates (Grings & Sukoneck, 1971; Öhman et al., 1973; Ojala et al., 2022).

Together, this suggests that when there is a possibility of stimulation, any additional difference in probability will have a smaller effect on the omission responses, irrespective of whether the underlying reinforcement rate is accurate or not.

Adaptation to methods section: “Note that, based on previous research, we did not expect the inconsistency between the instructed and perceived reinforcement rate to have a negative effect on the Probability manipulation (see Supplementary Note 4).”

Did dynamic learning impact the believability of the instructions?

Although we tried to minimize learning in our paradigm by providing instructions that trials are independent from one another, we agree with the reviewers that this cannot preclude all learning. Any remaining learning effects should present themselves by downweighing the effect of the probability instructions over time. We controlled for this time-effect by including a “run” regressor in our analyses. Results of the Run regressor for subjective relief and omission-related SCR are presented in Supplemental Figure 5. These figures show that although there was a general drop in reported relief pleasantness and omission SCR over time, the effects of probability and intensity remained present until the last run. This indicates that even though some learning might have taken place, the main manipulations of probability and intensity were still present until the end of the task.

Adaptations in the revised manuscript: We more clearly referred to the results of the Block-regressor, which were presented in the supplementary material, in the results section of the main paper (lines 159-162).

Note that while there was a general drop in reported relief pleasantness and omission SCR over time, the effects of Probability and Intensity remained present until the last run (see Supplementary Figure 5). This further confirms that probability and intensity manipulations were effective until the end of the task.

In the following sections of the rebuttal letter, we will go over the rest of the comments and our responses one by one.

Reviewer #1 (Public Review):

Summary:

Willems and colleagues test whether unexpected shock omissions are associated with reward-related prediction errors by using an axiomatic approach to investigate brain activation in response to unexpected shock omission. Using an elegant design that parametrically varies shock expectancy through verbal instructions, they see a variety of responses in reward-related networks, only some of which adhere to the axioms necessary for prediction error. In addition, there were associations between omission-related responses and subjective relief. They also use machine learning to predict relief-related pleasantness, and find that none of the a priori "reward" regions were predictive of relief, which is an interesting finding that can be validated and pursued in future work.

Strengths:

The authors pre-registered their approach and the analyses are sound. In particular, the axiomatic approach tests whether a given region can truly be called a reward prediction error. Although several a priori regions of interest satisfied a subset of axioms, no ROI satisfied all three axioms, and the authors were candid about this. A second strength was their use of machine learning to identify a relief-related classifier. Interestingly, none of the ROIs that have been traditionally implicated in reward prediction error reliably predicted relief, which opens important questions for future research.

Weaknesses:

To ensure that the number of omissions is similar across conditions, the task employs inaccurate verbal instructions; i.e. 25% of shocks are omitted, regardless of whether subjects are told that the probability is 100%, 75%, 50%, 25%, or 0%. Given previous findings on interactions between verbal instruction and experiential learning (Doll et al., 2009; Li et al., 2011; Atlas et al., 2016), it seems problematic a) to treat the instructions as veridical and b) average responses over time. Based on this prior work, it seems reasonable to assume that participants would learn to downweight the instructions over time through learning (particularly in the 100% and 0% cases); this would be the purpose of prediction errors as a teaching signal. The authors do recognize this and perform a subset analysis in the 21 participants who showed parametric increases in anticipatory SCR as a function of instructed shock probability, which strengthened findings in the VTA/SN; however given that one-third of participants (n=10) did not show parametric SCR in response to instructions, it seems like some learning did occur. As prediction error is so important to such learning, a weakness of the paper is that conclusions about prediction error might differ if dynamic learning were taken into account.

We thank the reviewer for raising this important concern. We believe we replied to all the issues raised in the general reply above.

Lastly, I think that findings in threat-sensitive regions such as the anterior insula and amygdala may not be adequately captured in the title or abstract which strictly refers to the "human reward system"; more nuance would also be warranted.

We fully agree with this comment and have changed the title and abstract accordingly.

Adaptations in the revised manuscript: We adapted the title of the manuscript.

“Omissions of Threat Trigger Subjective Relief and Prediction Error-Like Signaling in the Human Reward and Salience Systems”

Adaptations in the revised manuscript: We adapted the abstract (lines 27-29).

“In line with recent animal data, we showed that the unexpected omission of (painful) electrical stimulation triggers activations within key regions of the reward and salience pathways and that these activations correlate with the pleasantness of the reported relief.”

Reviewer #2 (Public Review):

The question of whether the neural mechanisms for reward and punishment learning are similar has been a constant debate over the last two decades. Numerous studies have shown that the midbrain dopamine neurons respond to both negative and salient stimuli, some of which can't be well accounted for by the classic RL theory (Delgado et al., 2007). Other research even proposed that aversive learning can be viewed as reward learning, by treating the omission of aversive stimuli as a negative PE (Seymour et al., 2004).

Although the current study took an axiomatic approach to search for the PE encoding brain regions, which I like, I have major concerns regarding their experimental design and hence the results they obtained. My biggest concern comes from the false description of their task to the participants. To increase the number of "valid" trials for data analysis, the instructed and actual probabilities were different. Under such a circumstance, testing axiom 2 seems completely artificial. How does the experimenter know that the participants truly believe that the 75% is more probable than, say, the 25% stimulation? The potential confusion of the subjects may explain why the SCR and relief report were rather flat across the instructed probability range, and some of the canonical PE encoding regions showed a rather mixed activity pattern across different probabilities. Also for the post-hoc selection criteria, why pick the larger SCR in the 75% compared to the 25% instructions? How would the results change if other criteria were used?

We thank the reviewer for raising this important concern. We believe the general reply above covers most of the issues raised in this comment. Concerning the post-hoc selection criteria, we used 25% < 75% as the criterion because it is fairly lenient: it considers only the effect of interest (i.e., did anticipatory SCR increase with increasing instructed probability?). However, the result also held under a stricter criterion (e.g., selecting participants only if their anticipatory SCR increased monotonically with each increase in instructed probability, 0% < 25% < 50% < 75% < 100%; N = 11 participants): the probability effect (ωp² = 0.08), but not the intensity effect, remained for the VTA/SN.
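The difference between the two selection criteria can be sketched as follows. This is an illustration only: the function names and the per-participant SCR values are hypothetical and are not taken from the study.

```python
# Hypothetical illustration: values and function names are not from the study.
PROBABILITIES = [0, 25, 50, 75, 100]  # instructed shock probability (%)

def meets_lenient(scr):
    """Lenient criterion: mean anticipatory SCR is larger at 75% than at 25%."""
    return scr[75] > scr[25]

def meets_strict(scr):
    """Strict criterion: SCR rises with every step in instructed probability."""
    values = [scr[p] for p in PROBABILITIES]
    return all(lower < higher for lower, higher in zip(values, values[1:]))

# Made-up per-participant mean anticipatory SCR (arbitrary units).
participants = {
    "p01": {0: 0.10, 25: 0.20, 50: 0.30, 75: 0.40, 100: 0.50},  # monotonic
    "p02": {0: 0.10, 25: 0.20, 50: 0.15, 75: 0.40, 100: 0.35},  # 25 < 75 only
    "p03": {0: 0.30, 25: 0.40, 50: 0.30, 75: 0.20, 100: 0.30},  # neither
}

lenient = [pid for pid, scr in participants.items() if meets_lenient(scr)]
strict = [pid for pid, scr in participants.items() if meets_strict(scr)]
```

Every participant who passes the strict criterion also passes the lenient one, which is why the stricter selection yields a smaller subsample.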

To test axiom 3, which was to compare the 100% stimulation to the 0% stimulation conditions, how did the actual shock delivery affect the fMRI contrast result? It would be more reasonable if this analysis could control for the shock delivery, which itself could contaminate the fMRI signal, with the additional confound that subjects may engage certain behavioral strategies to "prepare for" the aversive outcome in the 100% stimulation condition. Therefore, I agree with the authors that this contrast may not be a good way to test axiom 3, not only because of the arguments made in the discussion but also the technical complexities involved in the contrast.

We thank the reviewer for addressing this additional confound. It was indeed impossible to control for the delivery of shock since the delivery of the shock was always present on the 100% trials (and thus completely overlapped with the contrast of interest). We added this limitation to our discussion in the manuscript. In addition, we have also added a suggestion for a contrast that can test the “no surprise equivalence” criterion.

Adaptations in the revised manuscript: We adapted lines 358-364.

“Thus, given that we could not control for the delivery of the stimulation in the 100% > 0% contrast (the delivery of the stimulation completely overlapped with the contrast of interest), it is impossible to disentangle responses to the salience of the stimulation from those to the predictability of the outcome. A fairer evaluation of the third axiom would require outcomes that are roughly similar in terms of salience. When evaluating threat omission PE, this implies comparing fully expected threat omissions following 0% instructions to fully expected absence of stimulation at another point in the task (e.g. during a safe intertrial interval).”

Reviewer #3 (Public Review):

We thank the reviewer for their comments. Overall, based on the reviewer’s comments, we noticed that there was an imbalance between a focus on “relief” in the introduction and the rest of the manuscript and preregistration. We believe this focus raised the expectation that all outcome measures were interpreted in terms of the relief emotion. However, this was not what we did nor what we preregistered. We therefore restructured the introduction to reduce the focus on relief.

Adaptations in the revised manuscript: We restructured the introduction of the manuscript. Specifically, after our opening sentence: “We experience a pleasurable relief when an expected threat stays away¹” we only introduce the role of relief for our research in lines 79-89.

“Interestingly, unexpected omissions of threat not only trigger neural activations that resemble a reward PE, they are also accompanied by a pleasurable emotional experience: relief. Because these feelings of relief coincide with the PE at threat omission, relief has been proposed to be an emotional correlate of the threat omission PE. Indeed, emerging evidence has shown that subjective experiences of relief follow the same time-course as theoretical PE during fear extinction. Participants in fear extinction experiments report high levels of relief pleasantness during early US omissions (when the omission was unexpected and the theoretical PE was high) and decreasing relief pleasantness over later omissions (when the omission was expected and the theoretical PE was low)²²,²³. Accordingly, preliminary fMRI evidence has shown that the pleasantness of this relief is correlated to activations in the NAC at the time of threat omission. In that sense, studying relief may offer important insights in the mechanism driving safety learning.”

Summary:

The authors conducted a human fMRI study investigating the omission of expected electrical shocks with varying probabilities. Participants were informed of the probability of shock and shock intensity trial-by-trial. The time point corresponding to the absence of the expected shock (with varying probability) was framed as a prediction error producing the cognitive state of relief/pleasure for the participant. fMRI activity in the VTA/SN and ventral putamen corresponded to the surprising omission of a high probability shock. Participants' subjective relief at having not been shocked correlated with activity in brain regions typically associated with reward-prediction errors. The overall conclusion of the manuscript was that the absence of an expected aversive outcome in human fMRI looks like a reward-prediction error seen in other studies that use positive outcomes.

Strengths:

Overall, I found this to be a well-written human neuroimaging study investigating an often overlooked question on the role of aversive prediction errors, and how they may differ from reward-related prediction errors. The paper is well-written and the fMRI methods seem mostly rigorous and solid.

Weaknesses:

I did have some confusion, however, over the use of the term "prediction-error" as it is used in this task. There is certainly an expectancy violation when participants are told there is a high probability of shock, and it doesn't occur. Yet, there is no relevant learning or updating, and participants are explicitly told that each trial is independent and the outcome (or lack thereof) does not affect the chances of getting the shock on another trial with the same instructed outcome probability. Prediction errors are primarily used in the context of a learning model (reinforcement learning, etc.), but without a need to learn, the utility of that signal is unclear.

We operationalized “prediction error” as the response to the error in prediction or the violation of expectancy at the time of threat omission. In that sense, prediction error and expectancy violation (which is more commonly used in clinical research and psychotherapy; Craske et al., 2014) are synonymous. While prediction errors (or expectancy violations) are predominantly studied in learning situations, the definition in itself does not specify how the “expectancy” or “prediction” arises: whether it was through learning based on previous experience or through mere instruction. Our rationale for moving away from a conditioning study in the present manuscript is discussed in our general reply above.

We agree with the reviewer that studying prediction errors outside a learning context limits the ecological validity of the task. However, we do believe there is also a strength to this approach. Specifically, the omission-related responses we measure are less confounded by subsequent learning (or updating of the erroneous expectation). Any difference between our results and prediction error responses in learning situations can therefore be traced to this exact difference in paradigm, and can thus identify responses that are specific to learning situations.

An overarching question posed by the researchers is whether relief from not receiving a shock is a reward. They take as neural evidence activity in regions usually associated with reward prediction errors, like the VTA/SN. This seems to be a strong case of reverse inference. The evidence may have been stronger had the authors compared activity to a reward prediction error, for example using a similar task but with reward outcomes. As it stands, the neural evidence that the absence of shock is actually "pleasurable" is limited, albeit there is a subjective report asking subjects if they felt relief.

We thank the reviewer for cautioning us and letting us critically reflect on our interpretation. We agree that it is important not to be overly enthusiastic when interpreting fMRI results and not to carelessly attribute psychological functions to mere activations. Therefore, we will elaborate on the precautions we took to minimize detrimental reverse inference.

First, prior to analyzing our results, we preregistered clear hypotheses that were based on previous research, in addition to clear predictions, regions of interest and a testing approach on OSF. With our study, we wanted to investigate whether unexpected omissions of threat: (1) triggered activations in the VTA/SN, putamen, NAc and vmPFC (as has previously been shown in animal and human studies); (2) represent PE signals; and (3) were related to self-reported relief, which has also been shown to follow a PE time-curve in fear extinction (Vervliet et al., 2017). Based on previous research, we selected three criteria that all PE signals should comply with. This means that if omission-related activations were to represent true PE signals, they should comply with these criteria. However, we agree that it would go too far to conclude based on our research that relief is a reward, or even that the omission-related activations represent only PE signals. While we found support for most of our hypotheses, this does not preclude alternative explanations. In fact, in the discussion, we acknowledge this and also discuss alternative explanations, such as responding to the salience (lines 395-397; “One potential explanation is therefore that the deactivation resulted from a switch from default mode to salience network, triggered by the salience of the unexpected threat omission or by the salience of the experienced stimulation.”), or anticipation (lines 425-426; “... we cannot conclusively dismiss the alternative interpretation that we assessed (part of) expectancy instead”).

Second, we have deliberately opted to only use descriptive labels such as omission-related activations when we are discussing fMRI results. Only when we are talking about how the activations were related to self-reported relief, we talk about relief-related activations.

I have some other comments, and I elaborate on those above comments, below:

(1) A major assumption in the paper is that the unexpected absence of danger constitutes a pleasurable event, as stated in the opening sentence of the abstract. This may sometimes be the case, but it is not universal across contexts or people. For instance, for pathological fears, any relief derived from exposure may be short-lived (the dog didn't bite me this time, but that doesn't mean it won't next time or that all dogs are safe). And even if the subjective feeling one gets is temporary relief at that moment when the expected aversive event is not delivered, I believe there is an overall conflation between the concepts of relief and pleasure throughout the manuscript. Overall, the manuscript seems to be framed on the assumption that "aversive expectations can transform neutral outcomes into pleasurable events," but this is situationally dependent and is not a common psychological construct as far as I am aware.

We thank the reviewer for their comment. We have restructured the introduction because we agree with the reviewer that the introduction might have set false expectations concerning our interpretation of the results. The statements related to relief have been toned down in the revised manuscript.