The resource elasticity of control

Curation statements for this article:

Curated by eLife

eLife Assessment

This study makes the valuable claim that people track, specifically, the elasticity of control (that is, the degree to which outcome depends on how many resources - such as money - are invested), and that control elasticity is impaired in certain types of psychopathologies. A novel task is introduced that provides solid evidence that this learning process occurs and that human behavior is sensitive to changes in the elasticity of control. Evidence that elasticity inference is distinct from more general learning mechanisms and is related to psychopathology remains incomplete.

This article has been Reviewed by the following groups

- Evaluated articles (eLife)

Abstract

The ability to determine how much the environment can be controlled through our actions has long been viewed as fundamental to adaptive behavior. While traditional accounts treat controllability as a fixed property of the environment, we argue that real-world controllability often depends on the effort, time and money we are able and willing to invest. In such cases, controllability can be said to be elastic to invested resources. Here we propose that inferring this elasticity is essential for efficient resource allocation, and thus, elasticity misestimations result in maladaptive behavior. To test this hypothesis, we developed a novel treasure hunt game where participants encountered environments with varying degrees of controllability and elasticity.

Across two pre-registered studies (N=514), we first demonstrate that people infer elasticity and adapt their resource allocation accordingly. We then present a computational model that explains how people make this inference, and identify individual elasticity biases that lead to suboptimal resource allocation. Finally, we show that overestimation of elasticity is associated with elevated psychopathology involving an impaired sense of control. These findings establish the elasticity of control as a distinct cognitive construct guiding adaptive behavior, and a computational marker for control-related maladaptive behavior.

Article activity feed

Reviewer #1 (Public review):

Summary:

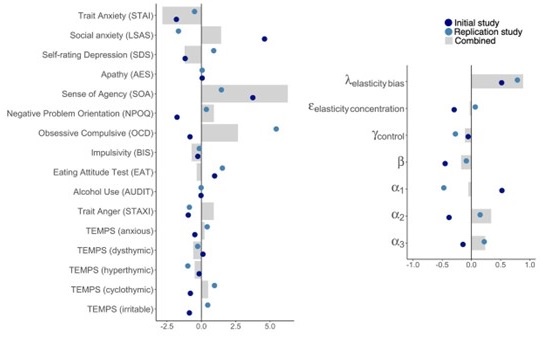

The authors investigated the elasticity of controllability by developing a task that manipulates the probability of achieving a goal with a baseline investment (which they refer to as inelastic controllability) and the probability that additional investment would increase the probability of achieving a goal (which they refer to as elastic controllability). They found that a computational model representing the controllability and elasticity of the environment accounted better for the data than a model representing only the controllability. They also found that prior biases about the controllability and elasticity of the environment was associated with a composite psychopathology score. The authors conclude that elasticity inference and bias guide resource allocation.

Strengths:

This research takes a …

Reviewer #1 (Public review):

Summary:

The authors investigated the elasticity of controllability by developing a task that manipulates the probability of achieving a goal with a baseline investment (which they refer to as inelastic controllability) and the probability that additional investment would increase the probability of achieving a goal (which they refer to as elastic controllability). They found that a computational model representing the controllability and elasticity of the environment accounted better for the data than a model representing only the controllability. They also found that prior biases about the controllability and elasticity of the environment were associated with a composite psychopathology score. The authors conclude that elasticity inference and bias guide resource allocation.

Strengths:

This research takes a novel theoretical and methodological approach to understanding how people estimate the level of control they have over their environment, and how they adjust their actions accordingly. The task is innovative and both it and the findings are well-described (with excellent visuals). They also offer thorough validation for the particular model they develop. The research has the potential to theoretically inform understanding of control across domains, which is a topic of great importance.

Weaknesses:

In its revised form, the manuscript addresses most of my previous concerns. The main remaining weakness pertains to the analyses aimed at addressing my suggestion of Bayesian updating as an alternative to the model proposed by the authors. My suggestion was to assume that people perform a form of function approximation to relate resource expenditure to success probability. The authors performed a version of this where people were weighing evidence for a few canonical functions (flat, step, linear), and found that this model underperforms theirs. However, this Bayesian model is quite constrained in its ability to estimate the function relating resources to success. A more robust test would be to assume a more flexible form of updating that is able to capture a wide range of distributions (e.g., using basis functions, Gaussian processes, or nonparametric estimators; see, e.g., work by Griffiths on human function learning). The benefit of testing this type of model is that it would make contact with a known form of inference that individuals engage in across various settings, and therefore could offer a more parsimonious and generalizable account of function learning, whereby learning of resource elasticity is a special case. I defer to the authors as to whether they'd like to pursue this direction, but if not I think it's still important that they acknowledge that they are unable to rule out a more general process like this as an alternative to their model. This also pertains to inferences about individual differences, which currently hinge on their preferred model being the most parsimonious.
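To make the idea of "weighing evidence for a few canonical functions" concrete, the following is a minimal sketch, not the authors' implementation. It assumes three hypothetical candidate functions mapping the number of tickets to a success probability (the specific probabilities are invented for illustration) and reweights them with Bayes' rule after each observed trip.

```python
# Hypothetical success probabilities for 1, 2, and 3 tickets under each
# candidate function. These numbers are illustrative assumptions only.
CANDIDATES = {
    "flat":   {1: 0.5, 2: 0.5, 3: 0.5},
    "step":   {1: 0.1, 2: 0.9, 3: 0.9},
    "linear": {1: 0.2, 2: 0.5, 3: 0.8},
}


def update_weights(weights, tickets, success):
    """Apply Bayes' rule: reweight each candidate by the likelihood of one outcome."""
    unnormalized = {}
    for name, weight in weights.items():
        p = CANDIDATES[name][tickets]
        unnormalized[name] = weight * (p if success else 1.0 - p)
    total = sum(unnormalized.values())
    return {name: value / total for name, value in unnormalized.items()}


def predict(weights, tickets):
    """Posterior-weighted success probability for a given number of tickets."""
    return sum(weight * CANDIDATES[name][tickets] for name, weight in weights.items())


# Start from a uniform prior over the three candidate functions.
weights = {name: 1.0 / len(CANDIDATES) for name in CANDIDATES}

# Hypothetical (tickets, success) observations.
observations = [(1, False), (2, True), (1, False), (3, True), (2, True)]
for tickets, success in observations:
    weights = update_weights(weights, tickets, success)

print(weights)
print(predict(weights, 1), predict(weights, 2))
```

With only three fixed shapes, such a learner can never represent a function outside its candidate set, which is the constraint the Reviewer points to; a more flexible learner would replace the fixed dictionary with a richer family of functions.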

Reviewer #2 (Public review):

Summary:

In this paper, the authors test whether controllability beliefs and associated actions/resource allocation are modulated by things like time, effort, and monetary costs (what they call "elastic" as opposed to "inelastic" controllability). Using a novel behavioral task and computational modeling, they find that participants do indeed modulate their resources depending on whether they are in an "elastic," "inelastic," or "low controllability" environment. The authors also find evidence that psychopathology is related to specific biases in controllability.

Strengths:

This research investigates how people might value different factors that contribute to controllability in a creative and thorough way. The authors use computational modeling to try to dissociate "elasticity" from "overall controllability," and find some differential associations with psychopathology. This was a convincing justification for using modeling above and beyond behavioral output, and yielded interesting results. Notably, the authors conclude that these findings suggest that biased elasticity could distort agency beliefs via maladaptive resource allocation. Overall, this paper reveals important findings about how people consider components of controllability.

Weaknesses:

The authors have gone to great lengths to revise the manuscript to clarify their definitions of "elastic" and "inelastic" and bolster evidence for their computational model, resulting in an overall strong manuscript that is valuable for elucidating controllability dynamics and preferences. One minor weakness is that the justification for the analysis technique for the relationships between the model parameters and the psychopathology measures remains lacking given the fact that simple correlational analyses did not reveal any significant associations nor were there results of any regression analyses. That said, the authors did preregister the CCA analysis, so while perhaps not the best method, it was justified to complete it. Regardless of method, the psychopathology results are not particularly convincing, but provide an interesting jumping-off point for further exploration in future work.

Reviewer #3 (Public review):

A bias in how people infer the amount of control they have over their environment is widely believed to be a key component of several mental illnesses including depression, anxiety, and addiction. Accordingly, this bias has been a major focus in computational models of those disorders. However, all of these models treat control as a unidimensional property, roughly, how strongly outcomes depend on action. This paper proposes (correctly, I think) that the intuitive notion of "control" captures multiple dimensions in the relationship between action and outcome. In particular, the authors identify one key dimension: the degree to which outcome depends on how much *effort* we exert, calling this dimension the "elasticity of control". They additionally argue that this dimension (rather than the more holistic notion of controllability) may be specifically impaired in certain types of psychopathology. This idea has the potential to change how we think about several major mental disorders in a substantial way, and can additionally help us better understand how healthy people navigate challenging decision-making problems. More concisely, it is a *very good idea*.

The more concrete contributions, however, are not as strong. In particular, evidence for the paper's most striking claims is weak. Quoting the abstract, these claims are (1) "the elasticity of control [is] a distinct cognitive construct guiding adaptive behavior" and (2) "overestimation of elasticity is associated with elevated psychopathology involving an impaired sense of control."

Main issues

I'll highlight the key points.

- The task cannot distinguish elasticity inference from general learning processes

- Participants were explicitly instructed about elasticity, with labeled examples

- The psychopathology claims rely on an invalid interpretation of CCA, and are contradicted by simple correlations (the correlation between elasticity bias and the Sense of Agency scale is r=0.03)

Distinct construct

Starting with claim 1, there are three subclaims here. (1A) People's behavior is sensitive to differences in elasticity; (1B) there are mental processes specific to elasticity inference, i.e., not falling out of general learning mechanisms; and, implicitly, (1C) people infer elasticity naturally as they go about their daily lives. The results clearly support 1A. However, 1B and 1C are not well supported.

(1B) The data cannot support the "distinct cognitive construct" claim because the task is too simple to dissociate elasticity inference from more general learning processes (also raised by Reviewer 1). The key behavioral signature for elasticity inference (vs. generic controllability inference) is the transfer across ticket numbers, illustrated in Fig 4. However, this pattern is also predicted by a standard Bayesian learner equipped with an intuitive causal model of the task. Each ticket gives you another chance to board, and the agent infers the probability that each attempt succeeds. Crucially, this logic is not at all specific to elasticity or even control. An identical model could be applied to inferring the bias of a coin from observations of whether any of N tosses were heads, a task that is formally identical to this one (at least under the intuitive model of the task; see first minor comment).
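The coin-flipping analogy can be sketched as a small grid-based Bayesian learner. This is an illustration of the argument, not a model from the manuscript: it assumes each attempt succeeds independently with an unknown probability q, a uniform prior over q, and hypothetical observations made only with three attempts.

```python
# Candidate values for the per-attempt success probability q (uniform grid).
GRID = [i / 100 for i in range(1, 100)]


def likelihood(q, attempts, success):
    """Probability of the outcome if any of `attempts` independent tries may succeed."""
    p_any = 1.0 - (1.0 - q) ** attempts
    return p_any if success else 1.0 - p_any


def posterior(observations):
    """Posterior over GRID after a list of (attempts, success) observations."""
    weights = [1.0] * len(GRID)  # uniform prior (an assumption)
    for attempts, success in observations:
        weights = [w * likelihood(q, attempts, success) for w, q in zip(weights, GRID)]
    total = sum(weights)
    return [w / total for w in weights]


def predicted_success(post, attempts):
    """Posterior-predictive probability that at least one of `attempts` tries succeeds."""
    return sum(w * (1.0 - (1.0 - q) ** attempts) for w, q in zip(post, GRID))


# Hypothetical data: the learner only ever observes outcomes with three attempts.
only_three = [(3, True)] * 5 + [(3, False)] * 5
post = posterior(only_three)

p1 = predicted_success(post, 1)  # prediction for a never-tried number of attempts
p3 = predicted_success(post, 3)
print(p1, p3)
```

Because all outcomes inform the same latent q, observations at one number of attempts change predictions at another. That is the kind of transfer across ticket numbers the paragraph above describes, produced here without any elasticity-specific machinery.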

Importantly, this point cannot be addressed by showing that the authors' model fits data better than this or any other specific Bayesian model. It is not a question of whether one particular updating rule explains data better than another. Rather, it is a question of whether the task can distinguish between biases in *elasticity* inference versus biases in probabilistic inference more generally. The present task cannot make this distinction because it does not make separate measurements of the two types of inference. To provide compelling evidence that elasticity inference is a "distinct cognitive construct", one would need to show that there are reliable individual differences in elasticity inference that generalize across contexts but do not generalize to computationally similar types of probabilistic inference (e.g. the coin flipping example).

(1C) The implicit claim that people infer elasticity outside of the experimental task is undermined by the experimental design. The authors explicitly tell people about the two notions of control as part of the training phase: "To reinforce participants' understanding of how elasticity and controllability were manifested in each planet, [participants] were informed of the planet type they had visited after every 15 trips."

In the revisions, the authors seem to go back and forth on whether they are claiming that people infer elasticity without instruction (I won't quote it here). I'll just note that the examples they provide in the most recent rebuttal are all cases in which one never receives explicit labels about elasticity. If people only infer elasticity when it is explicitly labeled, I struggle to see its relevance for understanding human cognition and behavior.

Psychopathology

Finally, I turn to claim 2, that "overestimation of elasticity is associated with elevated psychopathology involving an impaired sense of control." The CCA analysis is in principle unable to support this claim. As the authors correctly note in their latest rebuttal, the CCA does show that "there is a relationship between psychopathology traits and task parameters". The lesion analysis further shows that "elasticity bias specifically contributes to this relationship" (and similarly for the Sense of Agency scale). Crucially, however, this does *not* imply that there is a relationship between those two variables. The most direct test of that relationship is the simple correlation, which the authors report only in a supplemental figure: there is no relationship (r=0.03). Although it is of course possible that there is a relationship that is obscured by confounding variables, the paper provides no evidence, statistical or otherwise, that such a relationship exists.

Minor comments

The statistical structure of the task is inconsistent with the framing. In the framing, participants can make either one or two additional boarding attempts (jumps) by purchasing extra tickets. The additional attempt(s) will thus succeed with probability p for one ticket and 2p - p^2 for two tickets; the p^2 captures the fact that you only take the second attempt if you fail on the first. A consequence of this is that buying more tickets has diminishing returns. In contrast, in the task, participants always jumped twice after purchasing two tickets, and the probability of success with two tickets was exactly double that with one ticket. Thus, if participants are applying an intuitive causal model to the task, the researcher could infer "biases" in elasticity inference that are probably better characterized as effective use of prior information (encoded in the causal model).

The model is heuristically defined and does not reflect Bayesian updating. For example, it over-estimates maximum control by not using losses with fewer than 3 tickets (intuitively, the inference here depends on your beliefs about elasticity). Including forced three-ticket trials at the beginning of each round makes this less of an issue; but if you want to remove those trials, you might need to adjust the model. The need to introduce the modified model with kappa is likely another symptom of the heuristic nature of the model updating equations.

Author response:

The following is the authors’ response to the previous reviews

Reviewer #1 (Public review):

This research takes a novel theoretical and methodological approach to understanding how people estimate the level of control they have over their environment and how they adjust their actions accordingly. The task is innovative and both it and the findings are well-described (with excellent visuals). They also offer thorough validation for the particular model they develop. The research has the potential to theoretically inform understanding of control across domains, which is a topic of great importance.

We thank the Reviewer for their favorable appraisal and valuable suggestions, which have helped clarify and strengthen the study’s conclusion.

In its revised form, the manuscript addresses most of my previous concerns. The main remaining weakness pertains to the analyses aimed at addressing my suggestion of Bayesian updating as an alternative to the model proposed by the authors. My suggestion was to assume that people perform a form of function approximation to relate resource expenditure to success probability. The authors performed a version of this where people were weighing evidence for a few canonical functions (flat, step, linear), and found that this model underperformed theirs. However, this Bayesian model is quite constrained in its ability to estimate the function relating resources to success. A more robust test would be to assume a more flexible form of updating that is able to capture a wide range of distributions (e.g., using basis functions, Gaussian processes, or nonparametric estimators; see, e.g., work by Griffiths on human function learning). The benefit of testing this type of model is that it would make contact with a known form of inference that individuals engage in across various settings and therefore could offer a more parsimonious and generalizable account of function learning, whereby learning of resource elasticity is a special case. I defer to the authors as to whether they'd like to pursue this direction, but if not I think it's still important that they acknowledge that they are unable to rule out a more general process like this as an alternative to their model. This pertains also to inferences about individual differences, which currently hinge on their preferred model being the most parsimonious.

We thank the Reviewer for this thoughtful suggestion. We acknowledge that more flexible function learning approaches could provide a stronger test in favor of a more general account. Our Bayesian model implemented a basis function approach where the weights of three archetypal functions (flat, step, linear) are learned from experience. Testing models with more flexible basis functions would likely require a task with more than three levels of resource investment (1, 2, or 3 tickets). This would make an interesting direction for future work expanding on our current findings. We now incorporate this suggestion in more detail in our updated manuscript (335-341):

“Second, future models could enable generalization to levels of resource investment not previously experienced. For example, controllability and its elasticity could be jointly estimated via function approximation that considers control as a function of invested resources. Although our implementation of this model did not fit participants’ choices well (see Methods), other modeling assumptions drawn from human function learning [30] or experimental designs with continuous action spaces may offer a better test of this idea.”

Reviewer #2 (Public review):

This research investigates how people might value different factors that contribute to controllability in a creative and thorough way. The authors use computational modeling to try to dissociate "elasticity" from "overall controllability," and find some differential associations with psychopathology. This was a convincing justification for using modeling above and beyond behavioral output and yielded interesting results. Notably, the authors conclude that these findings suggest that biased elasticity could distort agency beliefs via maladaptive resource allocation. Overall, this paper reveals important findings about how people consider components of controllability. The authors have gone to great lengths to revise the manuscript to clarify their definitions of "elastic" and "inelastic" and bolster evidence for their computational model, resulting in an overall strong manuscript that is valuable for elucidating controllability dynamics and preferences.

We thank the Reviewer for their constructive feedback throughout the review process, which has substantially strengthened our manuscript and clarified our theoretical framework.

One minor weakness is that the justification for the analysis technique for the relationships between the model parameters and the psychopathology measures remains lacking given the fact that simple correlational analyses did not reveal any significant associations.

We note that the existence of bivariate relationships is not a prerequisite for the existence of multivariate relationships. Conditioning the latter on the former, therefore, would risk missing out on important relationships existing in the data. Ultimately, correlations between pairs of variables do not offer a sensitive test for the general hypothesis that there is a relationship between two sets of variables. As an illustration, consider that elasticity bias correlated in our data (r = .17, p<.001) with the difference between SOA (sense of agency) and SDS (self-rating depression). Notably, SOA and SDS were positively correlated (r = .47, p<.001), and neither of them was correlated with elasticity bias (SOA: r=.04 p=.43, SDS: r=-.06, p=.16). It was a dimension that ran between them that mapped onto elasticity bias. This specific finding is incidental and uncorrected for multiple comparisons, hence we do not report it in the manuscript, but it illustrates the kinds of relationships that cannot be accounted for by looking at bivariate relationships alone.
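The general point, that a combination of variables can relate to a third variable more strongly than either does alone, follows from a standard identity. For standardized x and y, the correlation between (x - y) and t is (r_xt - r_yt) / sqrt(2 - 2 r_xy). The sketch below evaluates this identity for hypothetical correlation values that are not taken from the study; the identity assumes standardized scores, so it need not reproduce a difference score computed on raw scales.

```python
import math


def corr_of_difference(r_xt, r_yt, r_xy):
    """Correlation between (x - y) and t, assuming x and y are standardized."""
    return (r_xt - r_yt) / math.sqrt(2.0 - 2.0 * r_xy)


# Hypothetical values: x and y are strongly correlated with each other,
# and each is only weakly (and oppositely) correlated with t.
r = corr_of_difference(r_xt=0.10, r_yt=-0.10, r_xy=0.95)
print(round(r, 3))  # prints 0.632
```

When x and y share most of their variance, their difference has little variance left, so even small opposing correlations with t are amplified in the difference score.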

Reviewer #3 (Public review):

A bias in how people infer the amount of control they have over their environment is widely believed to be a key component of several mental illnesses including depression, anxiety, and addiction. Accordingly, this bias has been a major focus in computational models of those disorders. However, all of these models treat control as a unidimensional property, roughly, how strongly outcomes depend on action. This paper proposes (correctly, I think) that the intuitive notion of "control" captures multiple dimensions in the relationship between action and outcome.

In particular, the authors identify one key dimension: the degree to which outcome depends on how much *effort* we exert, calling this dimension the "elasticity of control". They additionally argue that this dimension (rather than the more holistic notion of controllability) may be specifically impaired in certain types of psychopathology. This idea has the potential to change how we think about several major mental disorders in a substantial way and can additionally help us better understand how healthy people navigate challenging decision-making problems. More concisely, it is a very good idea.

We thank the Reviewer for their thoughtful engagement with our manuscript. We appreciate their recognition of elasticity as a key dimension of control that has the potential to advance our understanding of psychopathology and healthy decision-making.

Starting with theory, the authors do not provide a strong formal characterization of the proposed notion of elasticity. There are existing, highly general models of controllability (e.g., Huys & Dayan, 2009; Ligneul, 2021) and the elasticity idea could naturally be embedded within one of these frameworks. The authors gesture at this in the introduction; however, this formalization is not reflected in the implemented model, which is highly task-specific.

Our formal definition of elasticity, detailed in Supplementary Note 1, naturally extends the reward-based and information-theoretic definitions of controllability by Huys & Dayan (2009) and Ligneul (2021). We now further clarify how the model implements this formalized definition (lines 156-159).

“Conversely, in the ‘elastic controllability model’, the beta distributions represent a belief about the maximum achievable level of control (𝑎Control, 𝑏Control) coupled with two elasticity estimates that specify the degree to which successful boarding requires purchasing at least one (𝑎elastic≥1, 𝑏elastic≥1) or specifically two (𝑎elastic2, 𝑏elastic2) extra tickets. As such, these elasticity estimates quantify how resource investment affects control. The higher they are, the more controllability estimates can be made more precise by knowing how much resources the agent is willing and able to invest (Supplementary Note 1).”

Moreover, the authors present elasticity as if it is somehow "outside of" the more general notion of controllability. However, effort and investment are just specific dimensions of action; and resources like money, strength, and skill (the "highly trained birke") are just specific dimensions of state. Accordingly, the notion of elasticity is necessarily implicitly captured by the standard model. Personally, I am compelled by the idea that effort and resource (and therefore elasticity) are particularly important dimensions, ones that people are uniquely tuned to. However, by framing elasticity as a property that is different in kind from controllability (rather than just a dimension of controllability), the authors only make it more difficult to integrate this exciting idea into generalizable models.

We respectfully disagree that we present elasticity as outside of, or different in kind from, controllability. Throughout the manuscript, we explicitly describe elasticity as a dimension of controllability (e.g., lines 70-72, among many other examples). This is also expressed in our formal definition of elasticity (Supplementary Note 1).

The argument that vehicle/destination choice is not trivial because people occasionally didn't choose the instructed location is not compelling to me; if anything, the exclusion rate is unusually low for online studies. The finding that people learn more from non-random outcomes is helpful, but this could easily be cast as standard model-based learning very much like what one measures with the Daw two-step task (nothing specific to control here). Their final argument is the strongest, that to explain behavior the model must assume "a priori that increased effort could enhance control." However, more literally, the necessary assumption is that each attempt increases the probability of success (e.g., you're more likely to get a heads in two flips than one). I suppose you can call that "elasticity inference", but I would call it basic probabilistic reasoning.

We appreciate the Reviewer’s concerns but feel that some of the more subjective comments might not benefit from further discussion. We only note that controllability and its elasticity are features of environmental structure, so in principle any controllability-related inference is a form of model-based learning. The interesting question is whether people account in their world model for that particular feature of the environment.

The authors try to retreat, saying "our research question was whether people can distinguish between elastic and inelastic controllability." I struggle to reconcile this with the claim in the abstract "These findings establish the elasticity of control as a distinct cognitive construct guiding adaptive behavior". That claim is the interesting one, and the one I am evaluating the evidence in light of.

In real-world contexts, it is often trivial that sometimes further investment enhances control and sometimes it does not. For example, students know that if they prepare more extensively for their exams they will likely be able to achieve better grades, but they also know that there is uncertainty in this regard – their grades could improve significantly, modestly, or in some cases, they might not improve at all, depending on the type of exams their study program administers and the knowledge or skills being tested. Our research question was whether in such contexts people learn from experience the degree to which controllability is elastic to invested resources and adapt their resource investment accordingly. Our findings show that they do.

The authors argue for CCA by appeal to the need to "account for the substantial variance that is typically shared among different forms of psychopathology". I agree. A simple correlation would indeed be fairly weak evidence. Strong evidence would show a significant correlation after *controlling for* other factors (e.g. a regression predicting elasticity bias from all subscales simultaneously). CCA effectively does the opposite, asking whether, with the help of all the parameters and all the surveys, one can find any correlation between the two sets of variables. The results are certainly suggestive, but they provide very little statistical evidence that the elasticity parameter is meaningfully related to any particular dimension of psychopathology.

We agree with the Reviewer on the relationship between elasticity and any particular dimension of psychopathology. The CCA asks a different question, namely, whether there is a relationship between psychopathology traits and task parameters, and whether elasticity bias specifically contributes to this relationship.

I am very concerned to see that the authors removed the discussion of this limitation in response to my first review. I quote the original explanation here:

- In interpreting the present findings, it needs to be noted that we designed our task to be especially sensitive to overestimation of elasticity. We did so by giving participants free 3 tickets at their initial visits to each planet, which meant that upon success with 3 tickets, people who overestimate elasticity were more likely to continue purchasing extra tickets unnecessarily. Following the same logic, had we first had participants experience 1 ticket trips, this could have increased the sensitivity of our task to underestimation of elasticity in elastic environments. Such underestimation could potentially relate to a distinct psychopathological profile that more heavily loads on depressive symptoms. Thus, by altering the initial exposure, future studies could disambiguate the dissociable contributions of overestimating versus underestimating elasticity to different forms of psychopathology.

The logic of this paragraph makes perfect sense to me. If you assume low elasticity, you will infer that you could catch the train with just one ticket. However, when elasticity is in fact high, you would find that you don't catch the train, leading you to quickly infer high elasticity, which eliminates the bias. In contrast, if you assume high elasticity, you will continue purchasing three tickets and will never have the opportunity to learn that you could be purchasing only one, so the bias remains.

The authors attempt to argue that this isn't happening using parameter recovery. However, they only report the *correlation* in the parameter, whereas the critical measure is the *bias*. Furthermore, in parameter recovery, the data-generating and data-fitting models are identical; this will yield the best possible recovery results. Although finding no bias in this setting would support the claims, it cannot outweigh the logical argument for the bias that they originally laid out. Finally, parameter recovery should be performed across the full range of plausible parameter values; using fitted parameters (a detail I could only determine by reading the code) yields biased results because the fitted parameters are themselves subject to the bias (if present). That is, if true low elasticity is inferred as high elasticity, then you will not have any examples of low elasticity in the fitted parameters and will not detect the inability to recover them.

The logic the Reviewer describes breaks down when one considers the dynamics of participants’ resource investment choices. A low elasticity bias in a participant’s prior belief would make them persist for longer in purchasing a single ticket despite failure, as compared to a person without such a bias. Indeed, the ability of the experimental design to demonstrate low elasticity biases is evidenced by the fact that the majority of participants were fitted with a low elasticity bias (μ = .16 ± .14, where .5 is unbiased).

Originally, the Reviewer was concerned that elasticity bias was being confounded with a general deficit in learning. The weak inter-parameter correlations in the parameter recovery test resolved this concern, especially given that, as we now note, the simulated parameter space encompassed both low and high elasticity biases (range=[.02,.76]). Furthermore, regarding the Reviewer's concern about bias in the parameter recovery, we found no significant bias with respect to the elasticity bias parameter (Δ(Simulated, Recovered)= -.03, p=.25), showing that our experiment could accurately identify low and high elasticity biases.

The statistical structure of the task is inconsistent with the framing. In the framing, participants can make either one or two additional boarding attempts (jumps) by purchasing extra tickets. The additional attempt(s) will thus succeed with probability p for one ticket and 2p - p^2 for two tickets; the p^2 captures the fact that you only take the second attempt if you fail on the first. A consequence of this is that buying more tickets has diminishing returns. In contrast, in the task, participants always jumped twice after purchasing two tickets, and the probability of success with two tickets was exactly double that with one ticket. Thus, if participants are applying an intuitive causal model to the task, they will appear to "underestimate" the elasticity of control. I don't think this seriously jeopardizes the key results, but any follow-up work should ensure that the task's structure is consistent with the intuitive causal model.

We thank the Reviewer for this comment, and agree that participants may have employed the intuitive understanding the Reviewer describes. This is consistent with our model comparison results, which showed that participants did not assume that control increases linearly with resource investment (lines 677-692). Consequently, this is also not assumed by our model, except perhaps by how the prior is implemented (a property that was supported by model comparison). In the text, we acknowledge that this aspect of the model and participants’ behavior deviates from the task's true structure, and it would be worthwhile to address this deviation in future studies.

That said, there is no reason that this would make participants appear to be generally underestimating elasticity. Following exposure to outcomes for one and three tickets, any nonlinear understanding of probabilities would only affect the controllability estimate for two tickets. This would have contrasting effects on the elasticity estimated for the second and third tickets, but on average, it would not change the overall elasticity estimated. On the other hand, if such a participant is only exposed to outcomes for two and three tickets, they would come to judge the difference between the first and second tickets as too large, thereby overestimating elasticity.

The model is heuristically defined and does not reflect Bayesian updating. For example, it overestimates maximum control by not using losses with fewer than 3 tickets (intuitively, the inference here depends on your beliefs about elasticity). Including forced three-ticket trials at the beginning of each round makes this less of an issue; but if you want to remove those trials, you might need to adjust the model. The need to introduce the modified model with kappa is likely another symptom of the heuristic nature of the model updating equations.

Note that we have tested a fully Bayesian model (lines 676-691), but found that this model fitted participants’ choices worse.

You're right; saying these analyses provide "no information" was unfair. I agree that this is a useful way to link model parameters with behavior, and they should remain in the paper. However, my key objection still holds: these analyses do not tell us anything about how *people's* prior assumptions influence behavior. Instead, they tell us about how *fitted model parameters* depend on observed behavior. You can easily avoid this misreading by adding a small parenthetical, e.g.

Thus, a prior assumption that control is likely available **(operationalized by \gamma_controllability)** was reflected in a futile investment of resources in uncontrollable environments.

We thank the Reviewer for the suggestion and have added this parenthetical (lines 219, 225).

-

-

eLife Assessment

This study makes the valuable claim that people track, specifically, the elasticity of control (that is, the degree to which outcome depends on how many resources - such as money - are invested), and that control elasticity is impaired in certain types of psychopathology. A novel task is introduced that provides solid evidence that this learning process occurs and that human behavior is sensitive to changes in the elasticity of control. Evidence that elasticity inference is distinct from more general learning mechanisms and is related to psychopathology remains incomplete.

-

Reviewer #1 (Public review):

Summary:

The authors investigated the elasticity of controllability by developing a task that manipulates the probability of achieving a goal with a baseline investment (which they refer to as inelastic controllability) and the probability that additional investment would increase the probability of achieving a goal (which they refer to as elastic controllability). They found that a computational model representing the controllability and elasticity of the environment accounted better for the data than a model representing only the controllability. They also found that prior biases about the controllability and elasticity of the environment were associated with a composite psychopathology score. The authors conclude that elasticity inference and bias guide resource allocation.

Strengths:

This research takes a novel theoretical and methodological approach to understanding how people estimate the level of control they have over their environment and how they adjust their actions accordingly. The task is innovative and both it and the findings are well-described (with excellent visuals). They also offer thorough validation for the particular model they develop. The research has the potential to theoretically inform understanding of control across domains, which is a topic of great importance.

Weaknesses:

In its revised form, the manuscript addresses most of my previous concerns. The main remaining weakness pertains to the analyses aimed at addressing my suggestion of Bayesian updating as an alternative to the model proposed by the authors. My suggestion was to assume that people perform a form of function approximation to relate resource expenditure to success probability. The authors performed a version of this where people were weighing evidence for a few canonical functions (flat, step, linear), and found that this model underperformed theirs. However, this Bayesian model is quite constrained in its ability to estimate the function relating resources to success. A more robust test would be to assume a more flexible form of updating that is able to capture a wide range of distributions (e.g., using basis functions, Gaussian processes, or nonparametric estimators; see, e.g., work by Griffiths on human function learning). The benefit of testing this type of model is that it would make contact with a known form of inference that individuals engage in across various settings and therefore could offer a more parsimonious and generalizable account of function learning, whereby learning of resource elasticity is a special case. I defer to the authors as to whether they'd like to pursue this direction, but if not I think it's still important that they acknowledge that they are unable to rule out a more general process like this as an alternative to their model. This pertains also to inferences about individual differences, which currently hinge on their preferred model being the most parsimonious.
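For concreteness, the constrained comparison described above, in which evidence is weighed across a few canonical functions, can be sketched as a posterior over three hypotheses. This is only an illustration under stated assumptions: the success probabilities per ticket count, the uniform prior, and the names `HYPOTHESES` and `posterior` are hypothetical and are not taken from the authors' model or from the task's true parameters.

```python
# Hypothetical success probabilities for 1, 2, or 3 tickets under each
# canonical function. The values are illustrative assumptions only.
HYPOTHESES = {
    "flat": {1: 0.2, 2: 0.2, 3: 0.2},    # low controllability at every level
    "step": {1: 0.8, 2: 0.8, 3: 0.8},    # full control from the first ticket
    "linear": {1: 0.2, 2: 0.5, 3: 0.8},  # control grows with each ticket
}


def posterior(observations, prior=None):
    """Return P(hypothesis | observations) for (tickets, success) pairs."""
    names = list(HYPOTHESES)
    if prior is None:
        # Assumed uniform prior over the three canonical functions.
        prior = {name: 1.0 / len(names) for name in names}
    unnormalized = {}
    for name in names:
        likelihood = 1.0
        for tickets, success in observations:
            p = HYPOTHESES[name][tickets]
            # Bernoulli likelihood of the observed outcome.
            likelihood *= p if success else 1.0 - p
        unnormalized[name] = prior[name] * likelihood
    total = sum(unnormalized.values())
    return {name: value / total for name, value in unnormalized.items()}


# Two successes with three tickets, then two failures with one ticket.
beliefs = posterior([(3, True), (3, True), (1, False), (1, False)])
print(beliefs)
```

With these illustrative numbers, the pattern of success with three tickets and failure with one favors the linear hypothesis. A more flexible function learner of the kind suggested above would replace the fixed dictionary of three functions with a richer hypothesis space.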

-

Reviewer #2 (Public review):

Summary:

In this paper, the authors test whether controllability beliefs and associated actions/resource allocation are modulated by things like time, effort, and monetary costs (what they call "elastic" as opposed to "inelastic" controllability). Using a novel behavioral task and computational modeling, they find that participants do indeed modulate their resources depending on whether they are in an "elastic," "inelastic," or "low controllability" environment. The authors also find evidence that psychopathology is related to specific biases in controllability.

Strengths:

This research investigates how people might value different factors that contribute to controllability in a creative and thorough way. The authors use computational modeling to try to dissociate "elasticity" from "overall controllability," and find some differential associations with psychopathology. This was a convincing justification for using modeling above and beyond behavioral output and yielded interesting results. Notably, the authors conclude that these findings suggest that biased elasticity could distort agency beliefs via maladaptive resource allocation. Overall, this paper reveals important findings about how people consider components of controllability.

Weaknesses:

The authors have gone to great lengths to revise the manuscript to clarify their definitions of "elastic" and "inelastic" and bolster evidence for their computational model, resulting in an overall strong manuscript that is valuable for elucidating controllability dynamics and preferences. One minor weakness is that the justification for the analysis technique for the relationships between the model parameters and the psychopathology measures remains lacking given the fact that simple correlational analyses did not reveal any significant associations.

-

Reviewer #3 (Public review):

A bias in how people infer the amount of control they have over their environment is widely believed to be a key component of several mental illnesses including depression, anxiety, and addiction. Accordingly, this bias has been a major focus in computational models of those disorders. However, all of these models treat control as a unidimensional property, roughly, how strongly outcomes depend on action. This paper proposes (correctly, I think) that the intuitive notion of "control" captures multiple dimensions in the relationship between action and outcome. In particular, the authors identify one key dimension: the degree to which outcome depends on how much *effort* we exert, calling this dimension the "elasticity of control". They additionally argue that this dimension (rather than the more holistic notion of controllability) may be specifically impaired in certain types of psychopathology. This idea has the potential to change how we think about several major mental disorders in a substantial way and can additionally help us better understand how healthy people navigate challenging decision-making problems. More concisely, it is a very good idea.

Unfortunately, my view is that neither the theoretical nor empirical aspects of the paper really deliver on that promise. In particular, most (perhaps all) of the interesting claims in the paper have weak empirical support.

Starting with theory, the authors do not provide a strong formal characterization of the proposed notion of elasticity. There are existing, highly general models of controllability (e.g., Huys & Dayan, 2009; Ligneul, 2021) and the elasticity idea could naturally be embedded within one of these frameworks. The authors gesture at this in the introduction; however, this formalization is not reflected in the implemented model, which is highly task-specific. Moreover, the authors present elasticity as if it is somehow "outside of" the more general notion of controllability. However, effort and investment are just specific dimensions of action; and resources like money, strength, and skill (the "highly trained birke") are just specific dimensions of state. Accordingly, the notion of elasticity is necessarily implicitly captured by the standard model. Personally, I am compelled by the idea that effort and resource (and therefore elasticity) are particularly important dimensions, ones that people are uniquely tuned to. However, by framing elasticity as a property that is different in kind from controllability (rather than just a dimension of controllability), the authors only make it more difficult to integrate this exciting idea into generalizable models.

Turning to experiment, the authors make two key claims: (1) people infer the elasticity of control, and (2) individual differences in how people make this inference are importantly related to psychopathology.

Starting with claim 1, there are three subclaims here; implicitly, the authors make all three. (1A) People's behavior is sensitive to differences in elasticity, (1B) people actually represent/track something like elasticity, and (1C) people do so naturally as they go about their daily lives. The results clearly support 1A. However, 1B and 1C are not strongly supported.

(1B) The experiment cannot support the claim that people represent or track elasticity because effort is the only dimension over which participants can engage in any meaningful decision-making. The other dimension, selecting which destination to visit, simply amounts to selecting the location where you were just told the treasure lies. Thus, any adaptive behavior will necessarily come out in a sensitivity to how outcomes depend on effort.

Notes on rebuttal: The argument that vehicle/destination choice is not trivial because people occasionally didn't choose the instructed location is not compelling to me; if anything, the exclusion rate is unusually low for online studies. The finding that people learn more from non-random outcomes is helpful, but this could easily be cast as standard model-based learning very much like what one measures with the Daw two-step task (nothing specific to control here). Their final argument is the strongest, that to explain behavior the model must assume "a priori that increased effort could enhance control." However, more literally, the necessary assumption is that each attempt increases the probability of success (e.g. you're more likely to get a heads in two flips than one). I suppose you can call that "elasticity inference", but I would call it basic probabilistic reasoning.

For 1C, the claim that people infer elasticity outside of the experimental task cannot be supported because the authors explicitly tell people about the two notions of control as part of the training phase: "To reinforce participants' understanding of how elasticity and controllability were manifested in each planet, [participants] were informed of the planet type they had visited after every 15 trips." (line 384).

Notes on rebuttal: The authors try to retreat, saying "our research question was whether people can distinguish between elastic and inelastic controllability." I struggle to reconcile this with the claim in the abstract "These findings establish the elasticity of control as a distinct cognitive construct guiding adaptive behavior". That claim is the interesting one, and the one I am evaluating the evidence in light of.

Finally, I turn to claim 2, that individual differences in how people infer elasticity are importantly related to psychopathology. There is much to say about the decision to treat psychopathology as a unidimensional construct (the authors claim otherwise, but see Fig 6C). However, I will keep it concrete and simply note that CCA (by design) obscures the relationship between any two variables. Thus, as suggestive as Figure 6B is, we cannot conclude that there is a strong relationship between Sense of Agency (SOA) and the elasticity bias---this result is consistent with any possible relationship (even a negative one). As it turns out, Figure S3 shows that there is effectively no relationship (r=0.03).

Notes on rebuttal: The authors argue for CCA by appeal to the need to "account for the substantial variance that is typically shared among different forms of psychopathology". I agree. A simple correlation would indeed be fairly weak evidence. Strong evidence would show a significant correlation after *controlling for* other factors (e.g. a regression predicting elasticity bias from all subscales simultaneously). CCA effectively does the opposite, asking whether, with the help of all the parameters and all the surveys, one can find any correlation between the two sets of variables. The results are certainly suggestive, but they provide very little statistical evidence that the elasticity parameter is meaningfully related to any particular dimension of psychopathology.

There is also a feature of the task that limits our ability to draw strong conclusions about individual differences in elasticity inference. In the original submission, the authors stated that the study was designed to be "especially sensitive to overestimation of elasticity". A straightforward consequence of this is that the resulting *empirical* estimate of estimation bias (i.e., the gamma_elasticity parameter) is itself biased. This immediately undermines any claim that references the directionality of the elasticity bias (e.g. in the abstract). Concretely, an undirected deficit such as slower learning of elasticity would appear as a directed overestimation bias.

When we further consider that elasticity inference is the only meaningful learning/decision-making problem in the task (argued above), the situation becomes much worse. Many general deficits in learning or decision-making would be captured by the elasticity bias parameter. Thus, a conservative interpretation of the results is simply that psychopathology is associated with impaired learning and decision-making.

Notes on rebuttal: I am very concerned to see that the authors removed the discussion of this limitation in response to my first review. I quote the original explanation here:

- In interpreting the present findings, it needs to be noted that we designed our task to be especially sensitive to overestimation of elasticity. We did so by giving participants free 3 tickets at their initial visits to each planet, which meant that upon success with 3 tickets, people who overestimate elasticity were more likely to continue purchasing extra tickets unnecessarily. Following the same logic, had we first had participants experience 1 ticket trips, this could have increased the sensitivity of our task to underestimation of elasticity in elastic environments. Such underestimation could potentially relate to a distinct psychopathological profile that more heavily loads on depressive symptoms. Thus, by altering the initial exposure, future studies could disambiguate the dissociable contributions of overestimating versus underestimating elasticity to different forms of psychopathology.

The logic of this paragraph makes perfect sense to me. If you assume low elasticity, you will infer that you could catch the train with just one ticket. However, when elasticity is in fact high, you would find that you don't catch the train, leading you to quickly infer high elasticity, which eliminates the bias. In contrast, if you assume high elasticity, you will continue purchasing three tickets and will never have the opportunity to learn that you could be purchasing only one, so the bias remains.

The authors attempt to argue that this isn't happening using parameter recovery. However, they only report the *correlation* in the parameter, whereas the critical measure is the *bias*. Furthermore, in parameter recovery, the data-generating and data-fitting models are identical; this will yield the best possible recovery results. Although finding no bias in this setting would support the claims, it cannot outweigh the logical argument for the bias that they originally laid out. Finally, parameter recovery should be performed across the full range of plausible parameter values; using fitted parameters (a detail I could only determine by reading the code) yields biased results because the fitted parameters are themselves subject to the bias (if present). That is, if true low elasticity is inferred as high elasticity, then you will not have any examples of low elasticity in the fitted parameters and will not detect the inability to recover them.
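The distinction drawn here between recovery *correlation* and recovery *bias* can be illustrated with a minimal sketch. It assumes a hypothetical parameter simulated on an evenly spaced grid and a hypothetical fitting procedure that shifts every estimate upward by a constant; neither the grid nor the shift of 0.2 comes from the study, and `recovery_summary` is an illustrative helper, not the authors' code.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def recovery_summary(simulated, recovered):
    """Report correlation and mean signed error (bias) of recovered values."""
    errors = [r - s for s, r in zip(simulated, recovered)]
    return {"correlation": pearson(simulated, recovered), "bias": mean(errors)}


# Simulate across a wide grid of parameter values rather than fitted values.
simulated = [i / 100 for i in range(81)]  # 0.00, 0.01, ..., 0.80

# Hypothetical fitting procedure that overestimates every value by 0.2.
recovered = [s + 0.2 for s in simulated]

summary = recovery_summary(simulated, recovered)
print(summary)
```

In this constructed case the correlation is essentially perfect while every estimate is too high, which is why a correlation alone cannot rule out a directional bias.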

Minor comments:

Below are things to keep in mind.

The statistical structure of the task is inconsistent with the framing. In the framing, participants can make either one or two additional boarding attempts (jumps) by purchasing extra tickets. The additional attempt(s) will thus succeed with probability p for one ticket and 2p - p^2 for two tickets; the p^2 captures the fact that you only take the second attempt if you fail on the first. A consequence of this is that buying more tickets has diminishing returns. In contrast, in the task, participants always jumped twice after purchasing two tickets, and the probability of success with two tickets was exactly double that with one ticket. Thus, if participants are applying an intuitive causal model to the task, they will appear to "underestimate" the elasticity of control. I don't think this seriously jeopardizes the key results, but any follow-up work should ensure that the task's structure is consistent with the intuitive causal model.
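The contrast drawn above between the framing and the implemented task can be made concrete with a short numerical sketch. It assumes, following the description in this comment, that under the framing each extra ticket is an independent attempt with success probability p that is taken only after earlier failures, whereas in the task the success probability scales linearly with the number of extra tickets. The function names and the value p = 0.3 are illustrative assumptions, not values from the study.

```python
def p_sequential(p, extra_tickets):
    """Success probability if each extra ticket is an independent attempt
    taken only after all earlier attempts failed: 1 - (1 - p)^n."""
    return 1.0 - (1.0 - p) ** extra_tickets


def p_linear(p, extra_tickets):
    """Success probability if each extra ticket adds p (capped at 1),
    as in the task structure described in the comment above."""
    return min(1.0, p * extra_tickets)


p = 0.3  # hypothetical per-attempt success probability
for n in (1, 2):
    print(n, round(p_sequential(p, n), 4), round(p_linear(p, n), 4))

# Marginal gain of the second extra ticket under each structure.
gain_sequential = p_sequential(p, 2) - p_sequential(p, 1)  # p - p^2
gain_linear = p_linear(p, 2) - p_linear(p, 1)              # p
```

For two extra tickets the sequential structure gives 2p - p^2, so the second ticket adds only p - p^2 rather than p. A participant who reasons with the sequential structure would therefore expect less from the second ticket than the task actually delivers.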

The model is heuristically defined and does not reflect Bayesian updating. For example, it overestimates maximum control by not using losses with fewer than 3 tickets (intuitively, the inference here depends on your beliefs about elasticity). Including forced three-ticket trials at the beginning of each round makes this less of an issue; but if you want to remove those trials, you might need to adjust the model. The need to introduce the modified model with kappa is likely another symptom of the heuristic nature of the model updating equations.

-

Author response:

The following is the authors’ response to the original reviews

We thank the Reviewers for their thorough reading and thoughtful feedback. Below, we address each of the concerns raised in the public reviews, and outline our revisions that aim to further clarify and strengthen the manuscript.

In our response, we clarify our conceptualization of elasticity as a dimension of controllability, formalizing it within an information-theoretic framework, and demonstrating that controllability and its elasticity are partially dissociable. Furthermore, we provide clarifications and additional modeling results showing that our experimental design and modeling approach are well-suited to dissociating elasticity inference from more general learning processes, and are not inherently biased to find overestimates of elasticity. Finally, we clarify the advantages and disadvantages of our canonical correlation analysis (CCA) approach for identifying latent relationships between multidimensional data sets, and provide additional analyses that strengthen the link between elasticity estimation biases and a specific psychopathology profile.

Public Reviews:

Reviewer 1 (Public review):

This research takes a novel theoretical and methodological approach to understanding how people estimate the level of control they have over their environment, and how they adjust their actions accordingly. The task is innovative and both it and the findings are well-described (with excellent visuals). They also offer thorough validation for the particular model they develop. The research has the potential to theoretically inform the understanding of control across domains, which is a topic of great importance.

We thank the Reviewer for their favorable appraisal and valuable suggestions, which have helped clarify and strengthen the study’s conclusion.

An overarching concern is that this paper is framed as addressing resource investments across domains that include time, money, and effort, and the introductory examples focus heavily on effort-based resources (e.g., exercising, studying, practicing). The experiments, though, focus entirely on the equivalent of monetary resources - participants make discrete actions based on the number of points they want to use on a given turn. While the same ideas might generalize to decisions about other kinds of resources (e.g., if participants were having to invest the effort to reach a goal), this seems like the kind of speculation that would be better reserved for the Discussion section rather than using effort investment as a means of introducing a new concept (elasticity of control) that the paper will go on to test.

We thank the Reviewer for pointing out a lack of clarity regarding the kinds of resources tested in the present experiment. Investing additional resources in the form of extra tickets did not only require participants to pay more money. It also required them to invest additional time – since each additional ticket meant making another attempt to board the vehicle, extending the duration of the trial, and attentional effort – since every attempt required precisely timing a spacebar press as the vehicle crossed the screen. Given this involvement of money, time, and effort resources, we believe it would be imprecise to present the study as concerning monetary resources in particular. That said, we agree with the Reviewer that results might differ depending on the resource type that the experiment or the participant considers most. Thus, we now clarify the kinds of resources the experiment involved (lines 87-97):

“To investigate how people learn the elasticity of control, we allowed participants to invest different amounts of resources in attempting to board their preferred vehicle. Participants could purchase one (40 coins), two (60 coins), or three tickets (80 coins) or otherwise walk for free to the nearest location. Participants were informed that a single ticket allowed them to board only if the vehicle stopped at the station, while additional tickets provided extra chances to board even after the vehicle had left the platform. For each additional ticket, the chosen vehicle appeared moving from left to right across the screen, and participants could attempt to board it by pressing the spacebar when it reached the center of the screen. Thus, each additional ticket could increase the chance of boarding but also required a greater investment of resources—decreasing earnings, extending the trial duration, and demanding attentional effort to precisely time a button press when attempting to board.”

In addition, in the revised discussion, we now highlight the open question of whether inferences concerning the elasticity of control generalize across different resource domains (lines 341-348):

“Another interesting possibility is that individual elasticity biases vary across different resource types (e.g., money, time, effort). For instance, a given individual may assume that controllability tends to be highly elastic to money but inelastic to effort. Although the task incorporated multiple resource types (money, time, and attentional effort), the results may differ depending on the type of resources on which the participant focuses. Future studies could explore this possibility by developing tasks that separately manipulate elasticity with respect to different resource types. This would clarify whether elasticity biases are domain-specific or domain-general, and thus elucidate their impact on everyday decision-making.”

Setting aside the framing of the core concepts, my understanding of the task is that it effectively captures people's estimates of the likelihood of achieving their goal (Pr(success)) conditional on a given investment of resources. The ground truth across the different environments varies such that this function is sometimes flat (low controllability), sometimes increases linearly (elastic controllability), and sometimes increases as a step function (inelastic controllability). If this is accurate, then it raises two questions.

First, on the modeling front, I wonder if a suitable alternative to the current model would be to assume that the participants are simply considering different continuous functions like these and, within a Bayesian framework, evaluating the probabilistic evidence for each function based on each trial's outcome. This would give participants an estimate of the marginal increase in Pr(success) for each ticket, and they could then weigh the expected value of that ticket choice (Pr(success)*150 points) against the marginal increase in point cost for each ticket. This should yield similar predictions for optimal performance (e.g., opt-out for lower controllability environments, i.e., flatter functions), and the continuous nature of this form of function approximation also has the benefit of enabling tests of generalization to predict changes in behavior if there were, for instance, changes in available tickets for purchase (e.g., up to 4 or 5) or changes in ticket prices. Such a model would of course also maintain a critical role for priors based on one's experience within the task as well as over longer timescales, and could be meaningfully interpreted as such (e.g., priors related to the likelihood of success/failure and whether one's actions influence these). It could also potentially reduce the complexity of the model by replacing controllability-specific parameters with multiple candidate functions (presumably learned through past experience, and/or tuned by experience in this task environment), each of which is being updated simultaneously.
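The cost-benefit comparison described in this comment can be illustrated with a minimal sketch. It uses the 150-point reward mentioned by the Reviewer and the ticket prices quoted from the task description (40, 60, and 80 coins; walking is free). The success probabilities passed in are purely hypothetical, including the assumed chance of success when walking, and the function names are illustrative rather than taken from the authors' code.

```python
REWARD = 150  # points for reaching the goal, as stated in the Reviewer's comment
COSTS = {0: 0, 1: 40, 2: 60, 3: 80}  # 0 tickets = walking for free; prices from the task description


def net_expected_value(p_success):
    """Expected reward minus ticket cost for each option (number of tickets)."""
    return {n: p * REWARD - COSTS[n] for n, p in p_success.items()}


def best_option(p_success):
    """Number of tickets with the highest net expected value."""
    values = net_expected_value(p_success)
    return max(values, key=values.get)


# HYPOTHETICAL success probabilities for three environment types.
flat = {0: 0.2, 1: 0.2, 2: 0.2, 3: 0.2}     # low controllability
step = {0: 0.2, 1: 1.0, 2: 1.0, 3: 1.0}     # inelastic controllability
linear = {0: 0.2, 1: 0.2, 2: 0.6, 3: 1.0}   # elastic controllability

choices = {
    "flat": best_option(flat),
    "step": best_option(step),
    "linear": best_option(linear),
}
```

Under these assumed probabilities, the sketch opts out in the flat environment, buys a single ticket in the step environment, and buys three tickets in the linear environment, which matches the qualitative predictions the Reviewer describes.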

We thank the Reviewer for suggesting this interesting alternative modeling approach. We agree that a Bayesian framework evaluating different continuous functions could offer advantages, particularly in its ability to generalize to other ticket quantities and prices. To test the Reviewer's suggestion, we implemented a Bayesian model where participants continuously estimate both controllability and its elasticity as a mixture of three archetypal functions mapping ticket quantities to success probabilities. The flat function provides no control regardless of how many tickets are purchased (corresponding to low controllability). The step function provides the same level of control as long as at least one ticket is purchased (inelastic controllability). The linear function increases control proportionally with each additional ticket (elastic controllability). The model computes the likelihood that each of the functions produced each new observation, and accordingly updates its beliefs. Using these beliefs, the model estimates the probability of success for purchasing each number of tickets, allowing participants to weigh expected control against increasing ticket costs. Despite its theoretical advantages for generalization to different ticket quantities, this continuous function approximation model performed significantly worse than our elastic controllability model (log Bayes Factor > 4100 on combined datasets). We surmise that the main advantage offered by the elastic controllability model is that it does not assume a linear increase in control as a function of resource investment – even though this linear relationship was actually true in our experiment and is required for generalizing to other ticket quantities, it likely does not match what participants were doing. We present these findings in a new section ‘Testing alternative methods’ (lines 686-701):

“We next examined whether participant behavior would be better characterized as a continuous function approximation rather than the discrete inferences in our model. To test this, we implemented a Bayesian model where participants continuously estimate both controllability and its elasticity as a mixture of three archetypal functions mapping ticket quantities to success probabilities. The flat function provides no control regardless of how many tickets are purchased (corresponding to low controllability). The step function provides full control as long as at least one ticket is purchased (inelastic controllability). The linear function linearly increases control with the number of extra tickets (i.e., 0%, 50%, and 100% control for 1, 2, and 3 tickets, respectively; elastic controllability). The model computes the likelihood that each of the functions produced each new observation, and accordingly updates its beliefs. Using these beliefs, the model estimates the probability of success for purchasing each number of tickets, allowing participants to weigh expected control against increasing ticket costs. Despite its theoretical advantages for generalization to different ticket quantities, this continuous function approximation model performed significantly worse than the elastic controllability model (log Bayes Factor > 4100 on combined datasets), suggesting that participants did not assume that control increases linearly with resource investment.”
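As a rough illustration of the kind of belief updating described in this passage, the following sketch maintains a probability over the three archetypal functions and updates it after each observed outcome. It is not the authors' implementation. The control values per ticket follow the quoted text, but the mapping from control to success probability, the chance level `CHANCE`, the uniform prior, and all function names are assumptions made for the example.

```python
# Control afforded by 1, 2, or 3 tickets under each archetypal function,
# using the values given in the quoted Methods text.
CONTROL = {
    "flat": (0.0, 0.0, 0.0),
    "step": (1.0, 1.0, 1.0),
    "linear": (0.0, 0.5, 1.0),
}
CHANCE = 0.2  # ASSUMPTION: probability of success when the agent has no control


def p_success(function, tickets):
    """ASSUMED link: with probability `control` the agent succeeds, otherwise chance decides."""
    control = CONTROL[function][tickets - 1]
    return control + (1.0 - control) * CHANCE


def update(beliefs, tickets, success):
    """Bayes rule over the three candidate functions after one observed outcome."""
    unnormalized = {}
    for function, prior in beliefs.items():
        p = p_success(function, tickets)
        unnormalized[function] = prior * (p if success else 1.0 - p)
    total = sum(unnormalized.values())
    if total == 0.0:
        # Outcome impossible under every function still believed; keep beliefs unchanged.
        return dict(beliefs)
    return {f: v / total for f, v in unnormalized.items()}


def predicted_success(beliefs, tickets):
    """Belief-weighted probability of success for a given number of tickets."""
    return sum(b * p_success(f, tickets) for f, b in beliefs.items())


# Uniform prior (an assumption), then two hypothetical observations.
beliefs = {f: 1 / 3 for f in CONTROL}
beliefs = update(beliefs, tickets=3, success=True)   # boarded with three tickets
beliefs = update(beliefs, tickets=1, success=False)  # failed with one ticket
```

After these two hypothetical observations the step function is ruled out, because a failure is impossible under full control, and most of the belief shifts to the linear function. Feeding `predicted_success` into a comparison against ticket costs would then yield the choice rule described in the text.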

We also refer to this analysis in our updated discussion (lines 326-339):

“Second, future models could enable generalization to levels of resource investment not previously experienced. For example, controllability and its elasticity could be jointly estimated via function approximation that considers control as a function of invested resources. Although our implementation of this model did not fit participants’ choices well (see Methods), other modeling assumptions or experimental designs may offer a better test of this idea.”

Second, if the reframing above is apt (regardless of the best model for implementing it), it seems like the taxonomy being offered by the authors risks a form of "jangle fallacy," in particular by positing distinct constructs (controllability and elasticity) for processes that ultimately comprise aspects of the same process (estimation of the relationship between investment and outcome likelihood). Which of these two frames is used doesn't bear on the rigor of the approach or the strength of the findings, but it does bear on how readers will digest and draw inferences from this work. It is ultimately up to the authors which of these they choose to favor, but I think the paper would benefit from some discussion of a common-process alternative, at least to prevent overly strong inferences about separate processes/modes that may not exist. I personally think the approach and findings in this paper would also be easier to digest under a common-construct approach rather than forcing new terminology but, again, I defer to the authors on this.

We acknowledge the Reviewer's important point about avoiding a potential "jangle fallacy." We entirely agree with the Reviewer that elasticity and controllability inferences are not distinct processes. Specifically, we view resource elasticity as a dimension of controllability, hence the name of our ‘elastic controllability’ model. In response to this and other Reviewers’ comments, in the revised manuscript, we now offer a formal definition of elasticity as the reduction in uncertainty about controllability due to knowing the amount of resources available to the agent (lines 16-20; see further details in response to Reviewer 3 below).

With respect to how this conceptualization is expressed in the modeling, we note that the representation in our model of maximum controllability and its elasticity via different variables is analogous to how a distribution may be represented by separate mean and variance parameters. Even the model suggested by the Reviewer required a dedicated variable representing elastic controllability, namely the probability of the linear controllability function. More generally, a single-process account allows that different aspects of the said process would be differently biased (e.g., one can have an accurate estimate of the mean of a distribution but overestimate its variance). Therefore, our characterization of distinct elasticity and controllability biases (or to put it more accurately, 'elasticity of controllability bias' and 'maximum controllability bias') is consistent with a common construct account.

To avoid misunderstandings, we have now modified the text to clarify that we view elasticity as a dimension of controllability that can only be estimated in conjunction with controllability. Here are a few examples:

Lines 21-28: “While only controllable environments can be elastic, the inverse is not necessarily true – controllability can be high, yet inelastic to invested resources – for example, choosing between bus routes affords equal control over commute time to anyone who can afford the basic fare (Figure 1; Supplementary Note 1). That said, since all actions require some resource investment, no controllable environment is completely inelastic when considering the full spectrum of possible agents, including those with insufficient resources to act (e.g., those unable to purchase a bus fare or pay for a fixed-price meal).”

Lines 45-47: “Experimental paradigms to date have conflated overall controllability and its elasticity, such that controllability was either low or elastic[16-20]. The elasticity of control, however, must be dissociated from overall controllability to accurately diagnose mismanagement of resources.”

Lines 70-72: “These findings establish elasticity as a crucial dimension of controllability that guides adaptive behavior, and a computational marker of control-related psychopathology.”

Lines 87-88: “To investigate how people learn the elasticity of control, we allowed participants to invest different amounts of resources in attempting to board their preferred vehicle.”

Reviewer 2 (Public review):

This research investigates how people might value different factors that contribute to controllability in a creative and thorough way. The authors use computational modeling to try to dissociate "elasticity" from "overall controllability," and find some differential associations with psychopathology. This was a convincing justification for using modeling above and beyond behavioral output and yielded interesting results. Interestingly, the authors conclude that these findings suggest that biased elasticity could distort agency beliefs via maladaptive resource allocation. Overall, this paper reveals some important findings about how people consider components of controllability.

We appreciate the Reviewer's positive assessment of our findings and computational approach to dissociating elasticity and overall controllability.

The primary weakness of this research is that it is not entirely clear what is meant by "elastic" and "inelastic" and how these constructs differ from existing considerations of various factors/calculations that contribute to perceptions of and decisions about controllability. I think this weakness is primarily an issue of framing, where it's not clear whether elasticity is, in fact, theoretically dissociable from controllability. Instead, it seems that the elements that make up "elasticity" are simply some of the many calculations that contribute to controllability. In other words, an "elastic" environment is inherently more controllable than an "inelastic" one, since both environments might have the same level of predictability, but in an "elastic" environment, one can also partake in additional actions to have additional control over achieving the goal (i.e., expend effort, money, time).