Sequence action representations contextualize during early skill learning

Curation statements for this article:-

Curated by eLife

eLife Assessment

This valuable study asks how the neural representation of individual finger movements changes during the early periods of sequence learning. By combining a new method for extracting features from human magnetoencephalography data and decoding analyses, the authors provide solid evidence of an early, swift change in the brain regions correlated with sequence learning, including a set of previously unreported frontal cortical regions. The authors also show that offline contextualization during short rest periods is the basis for improved performance. Further confirmation of these results on multiple movement sequences would further strengthen the key claims.

Abstract

Activities of daily living rely on our ability to acquire new motor skills composed of precise action sequences. Here, we asked in humans if the millisecond-level neural representation of an action performed at different contextual sequence locations within a skill differentiates or remains stable during early motor learning. We first optimized machine learning decoders predictive of sequence-embedded finger movements from magnetoencephalographic (MEG) activity. Using this approach, we found that the neural representation of the same action performed in different contextual sequence locations progressively differentiated—primarily during rest intervals of early learning (offline)—correlating with skill gains. In contrast, representational differentiation during practice (online) did not reflect learning. The regions contributing to this representational differentiation evolved with learning, shifting from the contralateral pre- and post-central cortex during early learning (trials 1–11) to increased involvement of the superior and middle frontal cortex once skill performance plateaued (trials 12–36). Thus, the neural substrates supporting finger movements and their representational differentiation during early skill learning differ from those supporting stable performance during the subsequent skill plateau period. Representational contextualization extended to Day 2, exhibiting specificity for the practiced skill sequence. Altogether, our findings indicate that sequence action representations in the human brain contextually differentiate during early skill learning, an issue relevant to brain-computer interface applications in neurorehabilitation.

Reviewer #1 (Public review):

Summary:

This study addresses rapid skill learning and asks whether individual sequence elements (here: finger presses) are differentially represented in human MEG data. The authors use a decoding approach to classify individual finger elements and achieve an accuracy of around 94%. A relevant finding is that the neural representations of individual finger elements change dynamically over the course of learning. This would be highly relevant for any attempt to develop better brain-machine interfaces: individual elements within a sequence can now be decoded with high precision, but their representations are not static and develop over the course of learning.

Strengths:

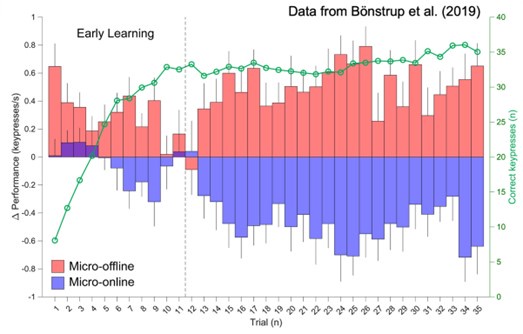

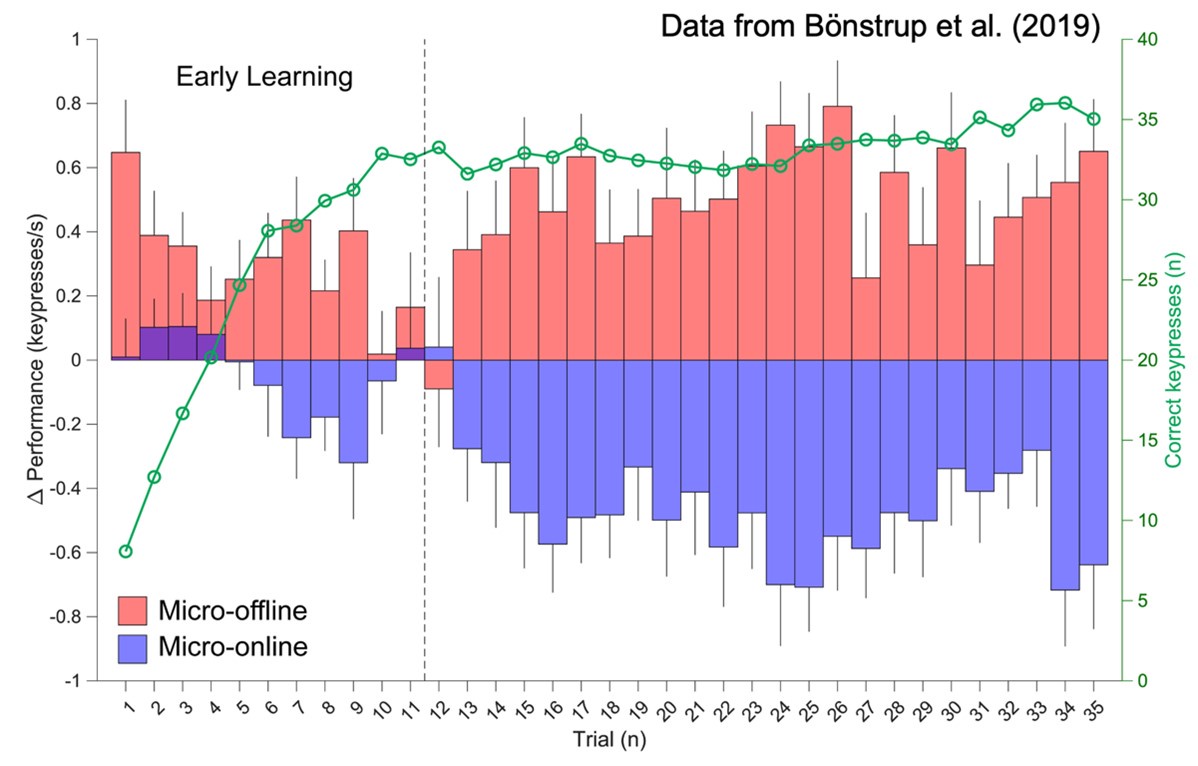

The work follows from a large body of work from the same group on the behavioural and neural foundations of sequence learning. The behavioural task is well established and neatly designed to allow for tracking learning and how individual sequence elements contribute. The inclusion of short offline rest periods between learning epochs has been influential because it has revealed that a lot, if not most, of the gains in behaviour (i.e., speed of finger movements) occur in these so-called micro-offline rest periods.

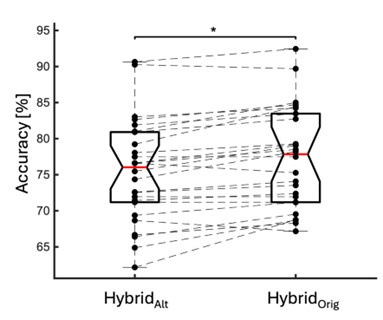

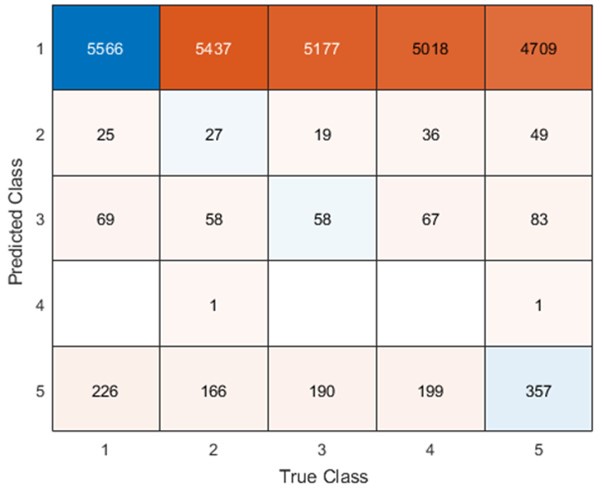

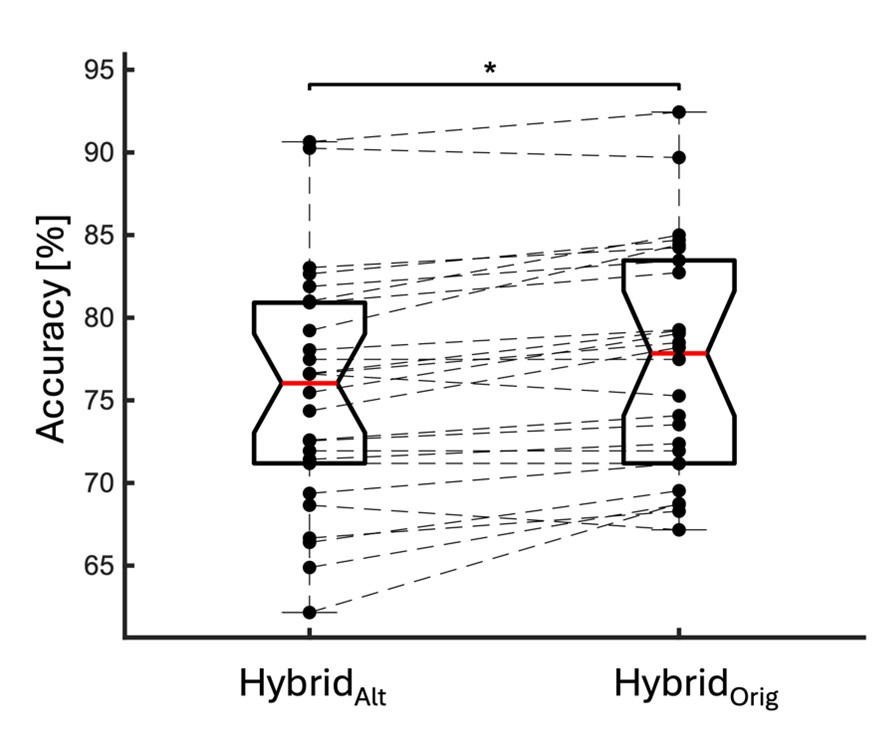

The authors use a range of new decoding techniques, and exhaustively interrogate their data in different ways, using different decoding approaches. Regardless of the approach, impressively high decoding accuracies are observed, but when using a hybrid approach that combines the MEG data in different ways, the authors observe decoding accuracies of individual sequence elements from the MEG data of up to 94%.

Reviewer #2 (Public review):

Summary:

The current paper consists of two parts. The first part is the rigorous feature optimization of the MEG signal to decode individual finger identity performed in a sequence (4-1-3-2-4; 1~4 corresponds to little~index fingers of the left hand). By optimizing various parameters for the MEG signal, in terms of (i) reconstructed source activity in voxel- and parcel-level resolution and their combination, (ii) frequency bands, and (iii) time window relative to press onset for each finger movement, as well as the choice of decoders, the resultant "hybrid decoder" achieved extremely high decoding accuracy (~95%).

In the second part of the paper, armed with the successful 'hybrid decoder,' the authors asked how neural representation of individual finger movement that is embedded in a sequence, changes during a very early period of skill learning and whether and how such representational change can predict skill learning. They assessed the difference in MEG feature patterns between the first and the last press 4 in sequence 41324 at each training trial and found that the pattern differentiation progressively increased over the course of early learning trials. Additionally, they found that this pattern differentiation specifically occurred during the rest period rather than during the practice trial. With a significant correlation between the trial-by-trial profile of this pattern differentiation and that for accumulation of offline learning, the authors argue that such "contextualization" of finger movement in a sequence (e.g., what-where association) underlies the early improvement of sequential skill. This is an important and timely topic for the field of motor learning and beyond.

Strengths:

The use of temporally rich neural information (MEG signal) has a significant advantage over previous studies testing sequential representations using fMRI. This allowed the authors to examine the earliest period (= the first few minutes of training) of skill learning with finer temporal resolution. Through the optimization of MEG feature extraction, the current study achieved extremely high decoding accuracy (approx. 94%) compared to previous works. The finding of the early "contextualization" of the finger movement in a sequence and its correlation to early (offline) skill improvement is interesting and important. The comparison between "online" and "offline" pattern distance is a neat idea.

Weaknesses:

One potential weakness, in terms of generality, is that the study assessed a single sequence, "41324", across all participants. Future confirmation using different sequences would be important.

Reviewer #3 (Public review):

Summary:

One goal of this paper is to introduce a new approach for highly accurate decoding of finger movements from human magnetoencephalography data via dimension reduction of a "multi-scale, hybrid" feature space. Following this decoding approach, the authors aim to show that early skill learning involves "contextualization" of the neural coding of individual movements, relative to their position in a sequence of consecutive movements. Furthermore, they aim to show that this "contextualization" develops primarily during short rest periods interspersed with skill training, and correlates with a performance metric which the authors interpret as an indicator of offline learning.

Strengths:

A strength of the paper is the innovative decoding approach, which achieves impressive decoding accuracies via dimension reduction of a "multi-scale, hybrid space". This hybrid-space approach follows the neurobiologically plausible idea of concurrent distribution of neural coding across local circuits as well as large-scale networks.

Weaknesses:

A clear weakness of the paper lies in the authors' conclusions regarding "contextualization". Several potential confounds, which partly arise from the experimental design, and which are described below, question the neurobiological implications proposed by the authors, and offer a simpler explanation of the results. Furthermore, the paper follows the assumption that short breaks result in offline skill learning, while recent evidence casts doubt on this assumption.

Specifically:

The authors interpret the ordinal position information captured by their decoding approach as a reflection of neural coding dedicated to the local context of a movement (Figure 4). One way to dissociate ordinal position information from information about the moving effectors is to train a classifier on one sequence, and test the classifier on other sequences that require the same movements, but in different positions (Kornysheva et al., Neuron 2019). In the present study, however, participants trained to repeat a single sequence (4-1-3-2-4). As a result, ordinal position information is potentially confounded by the fixed finger transitions around each of the two critical positions (first and fifth press). Across consecutive correct sequences, the first keypress in a given sequence was always preceded by a movement of the index finger (=last movement of the preceding sequence), and followed by a little finger movement. The last keypress, on the other hand, was always preceded by a ring finger movement, and followed by an index finger movement (=first movement of the next sequence). Figure 3 - supplement 5 shows that finger identity can be decoded with high accuracy (>70%) across a large time window around the time of the keypress, up to at least ±100 ms (and likely beyond, given that decoding accuracy is still high at the boundaries of the window depicted in that figure). This time window approaches the keypress transition times in this study. Given that distinct finger transitions characterized the first and fifth keypress, the classifier could thus rely on persistent (or "lingering") information from the preceding finger movement, and/or "preparatory" information about the subsequent finger movement, in order to dissociate the first and fifth keypress.
Currently, the manuscript provides little evidence that the context information captured by the decoding approach is more than a by-product of temporally extended, and therefore overlapping, but independent neural representations of consecutive keypresses that are executed in close temporal proximity - rather than a neural representation dedicated to context.

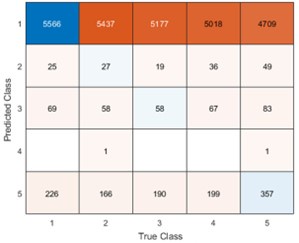

During the review process, the authors pointed out that a "mixing" of temporally overlapping information from consecutive keypresses, as described above, should result in systematic misclassifications and therefore be detectable in the confusion matrices in Figures 3C and 4B, which indeed do not provide any evidence that consecutive keypresses are systematically confused. However, such absence of evidence (of systematic misclassification) should be interpreted with caution. The authors also reported that there was only a weak relation between inter-press intervals and "online contextualization" (Figure 5 - figure supplement 6). However, their analysis surprisingly includes a keypress transition that is shared between OP1 and OP5 ("4-4"), rather than focusing solely on the two distinctive transitions ("2-4" and "4-1").

Such temporal overlap of consecutive, independent finger representations may also account for the dynamics of "ordinal coding"/"contextualization", i.e., the increase in 2-class decoding accuracy, across Day 1 (Figure 4C). As learning progresses, both tapping speed and the consistency of keypress transition times increase (Figure 1), i.e., consecutive keypresses are closer in time, and more consistently so. As a result, information related to a given keypress is increasingly overlapping in time with information related to the preceding and subsequent keypresses. Furthermore, learning should increase the number of (consecutively) correct sequences, and, thus, the consistency of finger transitions. Therefore, the increase in 2-class decoding accuracy may simply reflect an increasing overlap in time of increasingly consistent information from consecutive keypresses, which allows the classifier to dissociate the first and fifth keypress more reliably as learning progresses, simply based on the characteristic finger transitions associated with each. In other words, given that the physical context of a given keypress changes as learning progresses - keypresses move closer together in time, and are more consistently correct - it seems problematic to conclude that the mental representation of that context changes. During the review process, authors pointed at absence of evidence of a relation between tapping speed and "ordinal coding" (Figure 5 - figure supplement 7). However, a rigorous test of the idea that the mental representation of context changes would require a task design in which the physical context remains constant.

A similar difference in physical context may explain why neural representation distances ("differentiation") differ between rest and practice (Figure 5). The authors define "offline differentiation" by comparing the hybrid space features of the last index finger movement of a trial (ordinal position 5) and the first index finger movement of the next trial (ordinal position 1). However, the latter is not only the first movement in the sequence, but also the very first movement in that trial (at least in trials that started with a correct sequence), i.e., not preceded by any recent movement. In contrast, the last index finger of the last correct sequence in the preceding trial includes the characteristic finger transition from the fourth to the fifth movement. Thus, there is more overlapping information arising from the consistent, neighbouring keypresses for the last index finger movement, compared to the first index finger movement of the next trial. A strong difference (larger neural representation distance) between these two movements is, therefore, not surprising, given the task design, and this difference is also expected to increase with learning, given the increase in tapping speed, and the consequent stronger overlap in representations for consecutive keypresses.

A further complication in interpreting the results stems from the visual feedback that participants received during the task. Each keypress generated an asterisk shown above the string on the screen. It is not clear why the authors introduced this complicating visual feedback in their task, besides consistency with their previous studies. The resulting systematic link between the pattern of visual stimulation (the number of asterisks on the screen) and the ordinal position of a keypress makes the interpretation of "contextual information" that differentiates between ordinal positions difficult. While the authors report the surprising finding that their eye-tracking data could not predict asterisk position on the task display above chance level, the mean gaze position seemed to vary systematically as a function of ordinal position of a movement - see Figure 4 - figure supplement 3.

The authors report a significant correlation between "offline differentiation" and cumulative micro-offline gains. However, to reach the conclusion that "the degree of representational differentiation -particularly prominent over rest intervals - correlated with skill gains.", the critical question is rather whether "offline differentiation" correlates with micro-offline gains (not with cumulative micro-offline gains). That is, does the degree to which representations differentiate "during" a given rest period correlate with the degree to which performance improves from before to after the same rest period (not: does "offline differentiation" in a given rest period correlate with the degree to which performance has improved "during" all rest periods up to the current rest period - but this is what Figure 5 - figure supplements 1 and 4 show).
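
The distinction between per-rest and cumulative gains can be made concrete with a minimal sketch. It assumes hypothetical per-trial arrays `speed_start` and `speed_end` (tapping speed at the beginning and end of each practice trial; the names and toy values are illustrative and not taken from the manuscript) and takes a micro-offline gain to be the change in performance from the end of one trial to the start of the next.

```python
import numpy as np

def micro_offline_gains(speed_start, speed_end):
    """Per-rest gain: speed at the start of trial t+1 minus speed at the end of trial t."""
    speed_start = np.asarray(speed_start, dtype=float)
    speed_end = np.asarray(speed_end, dtype=float)
    return speed_start[1:] - speed_end[:-1]

# Toy values (illustrative only): keypresses per second in four trials.
speed_start = [1.0, 2.0, 2.5, 3.0]
speed_end = [1.5, 2.2, 2.8, 3.1]

gains = micro_offline_gains(speed_start, speed_end)  # one value per rest period
cumulative = np.cumsum(gains)                        # running total across rest periods

# A hypothetical measure that simply rises across rest periods.
rising = np.array([1.0, 2.0, 3.0])
r_per_rest = np.corrcoef(rising, gains)[0, 1]
r_cumulative = np.corrcoef(rising, cumulative)[0, 1]
```

In this toy case the per-rest gains shrink while their running total grows, so the rising measure correlates positively with the cumulative series and negatively with the per-rest series. The two correlations therefore answer different questions.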

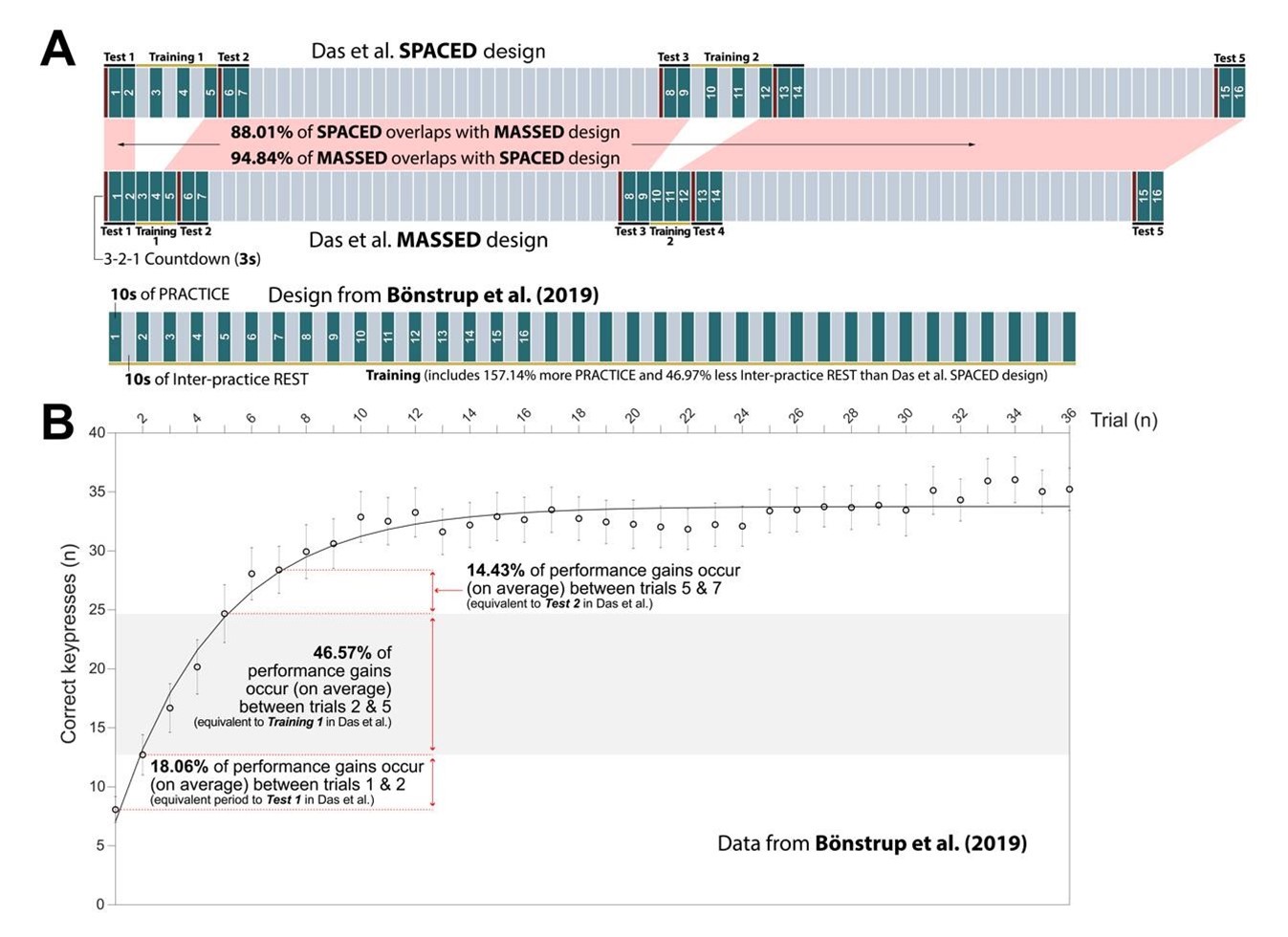

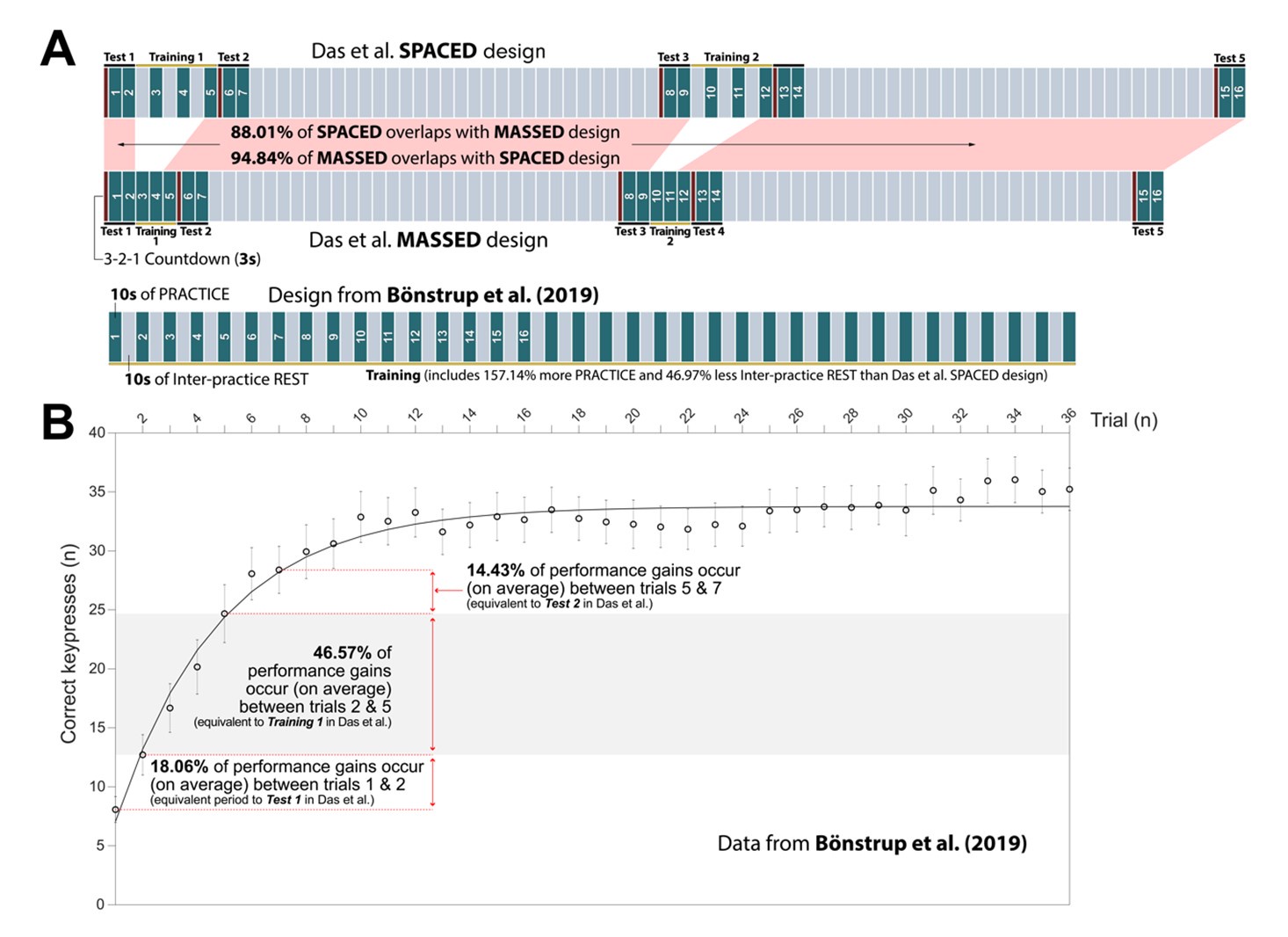

The authors follow the assumption that micro-offline gains reflect offline learning. However, there is no compelling evidence in the literature, and no evidence in the present manuscript, that micro-offline gains (during any training phase) reflect offline learning. Instead, emerging evidence in the literature indicates that they do not (Das et al., bioRxiv 2024): they reflect transient performance benefits when participants train with breaks, compared to participants who train without breaks, and these benefits vanish within seconds after training if both groups of participants perform under comparable conditions (Das et al., bioRxiv 2024). During the review process, the authors argued that differences in the design between Das et al. (2024) on the one hand (Experiments 1 and 2), and the study by Bönstrup et al. (2019) on the other hand, may have prevented Das et al. (2024) from finding the assumed (lasting) learning benefit by micro-offline consolidation. However, the Supplementary Material of Das et al. (2024) includes an experiment (Experiment S1) whose design closely follows the early learning phase of Bönstrup et al. (2019), and which, nevertheless, demonstrates that there is no lasting benefit of taking breaks for the acquired skill level, despite the presence of micro-offline gains.

Along these lines, the authors argue that their practice schedule "minimizes reactive inhibition effects", in particular their short practice periods of 10 seconds each. However, 10 seconds are sufficient to result in motor slowing, as reported in Bächinger et al., eLife 2019, or Rodrigues et al., Exp Brain Res 2009.

An important conceptual problem with the current study is that the authors conclude that performance improves, and representation manifolds differentiate, "during" rest periods. However, micro-offline gains (as well as offline contextualization) are computed from data obtained during practice, not rest, and may, thus, just as well reflect a change that occurs "online", e.g., at the very onset of practice (like pre-planning) or throughout practice (like fatigue, or reactive inhibition).

The authors' conclusion that "low-frequency oscillations (LFOs) result in higher decoding accuracy compared to other narrow-band activity" should be taken with caution, given that the critical decoding analysis for this conclusion was based on data averaged across a time window of 200 ms (Figure 2), essentially smoothing out higher frequency components.
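
As a rough illustration of the smoothing argument, the sketch below computes the magnitude response of a plain average over a rectangular window, which for a sinusoid of frequency f and window length T is |sinc(fT)|. It assumes the averaged feature is the signal amplitude itself; the example frequencies are arbitrary and are not the study's frequency bands.

```python
import numpy as np

def window_average_gain(freq_hz, window_s):
    """Magnitude response of averaging over a rectangular window of length window_s.

    np.sinc(x) is sin(pi*x)/(pi*x), so the gain at frequency f is |sinc(f * T)|.
    """
    return np.abs(np.sinc(np.asarray(freq_hz, dtype=float) * window_s))

window_s = 0.2                        # 200 ms averaging window
freqs = np.array([2.0, 7.5, 22.5])    # illustrative frequencies in Hz
gains = window_average_gain(freqs, window_s)
```

Under these assumptions a 2 Hz component keeps roughly three quarters of its amplitude, while components at 7.5 Hz and 22.5 Hz are reduced to about a fifth and less than a tenth, respectively. Features computed differently (for example band-limited power) would not follow this curve.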

Author response:

The following is the authors’ response to the previous reviews

Overview of reviewer's concerns after peer review:

As for the initial submission, the reviewers' unanimous opinion is that the authors should perform additional controls to show that their key findings may not be affected by experimental or analysis artefacts, and clarify key aspects of their core methods, chiefly:

(1) The fact that their extremely high decoding accuracy is driven by frequency bands that would reflect the key press movements and that these are located bilaterally in frontal brain regions (with the task being unilateral) are seen as key concerns,

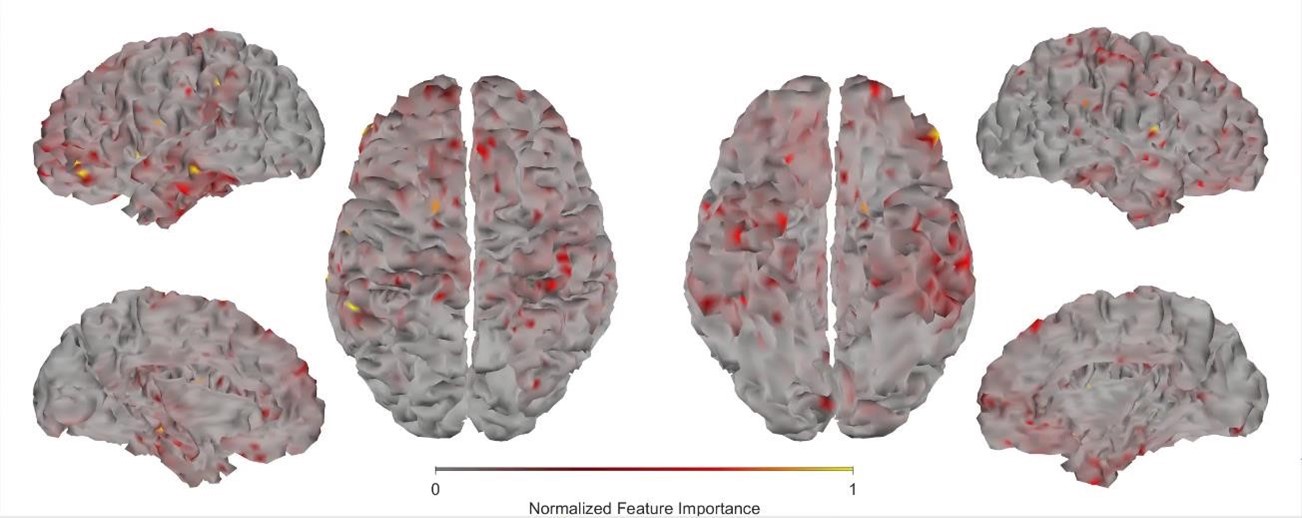

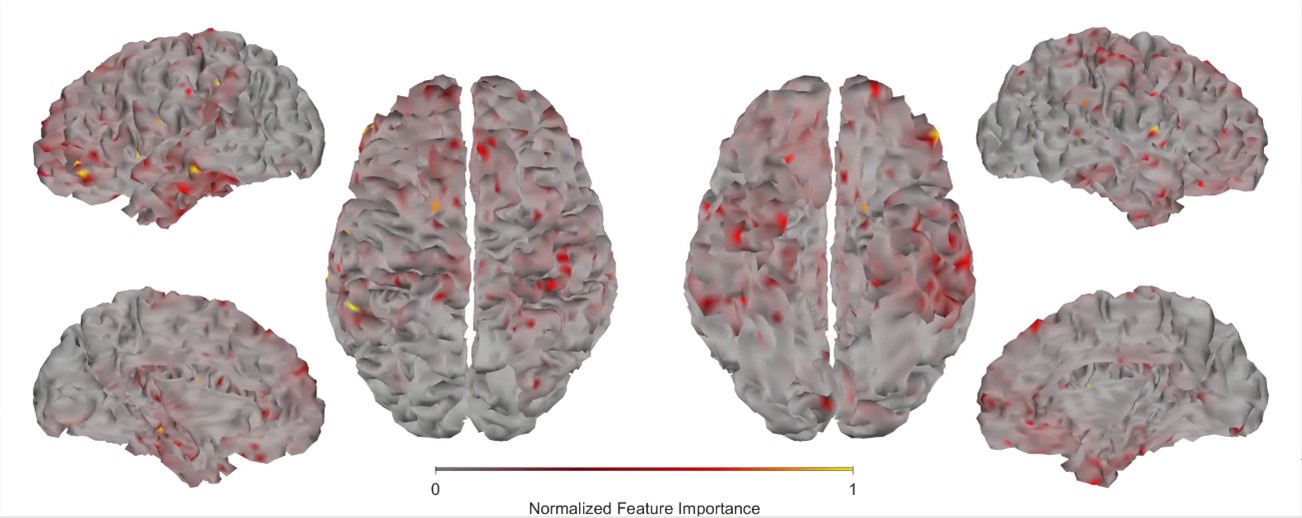

The above statement that decoding was driven by bilateral frontal brain regions is not entirely consistent with our results. The confusion was likely caused by the way we originally presented our data in Figure 2. We have revised that figure to make it clearer that decoding performance at both the parcel- (Figure 2B) and voxel-space (Figure 2C) level is predominantly driven by contralateral (as opposed to ipsilateral) sensorimotor regions. Figure 2D, which highlights bilateral sensorimotor and premotor regions, displays accuracy of individual regional voxel-space decoders assessed independently. This was the criterion used to determine which regional voxel-spaces were included in the hybrid-space decoder. This result is not surprising given that motor and premotor regions are known to display adaptive interhemispheric interactions during motor sequence learning [1, 2], and particularly so when the skill is performed with the non-dominant hand [3-5]. We now discuss this important detail in the revised manuscript:

Discussion (lines 348-353)

“The whole-brain parcel-space decoder likely emphasized more stable activity patterns in contralateral frontoparietal regions that differed between individual finger movements [21,35], while the regional voxel-space decoder likely incorporated information related to adaptive interhemispheric interactions operating during motor sequence learning [32,36,37], particularly pertinent when the skill is performed with the non-dominant hand [38-40].”

We now also include new control analyses that directly address the potential contribution of movement-related artefact to the results. These changes are reported in the revised manuscript as follows:

Results (lines 207-211):

“An alternate decoder trained on ICA components labeled as movement or physiological artefacts (e.g. – head movement, ECG, eye movements and blinks; Figure 3 – figure supplement 3A, D) and removed from the original input feature set during the pre-processing stage approached chance-level performance (Figure 4 – figure supplement 3), indicating that the 4-class hybrid decoder results were not driven by task-related artefacts.”

Results (lines 261-268):

“As expected, the 5-class hybrid-space decoder performance approached chance levels when tested with randomly shuffled keypress labels (18.41% ± SD 7.4% for Day 1 data; Figure 4 – figure supplement 3C). Task-related eye movements did not explain these results since an alternate 5-class hybrid decoder constructed from three eye movement features (gaze position at the KeyDown event, gaze position 200ms later, and peak eye movement velocity within this window; Figure 4 – figure supplement 3A) performed at chance levels (cross-validated test accuracy = 0.2181; Figure 4 – figure supplement 3B, C).”
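
The logic of a shuffled-label control can be sketched as follows. This is not the authors' pipeline: the nearest-centroid classifier, the synthetic data and all names are stand-ins chosen so the example runs with NumPy alone. With five balanced classes, accuracy after shuffling should fall near 1/5.

```python
import numpy as np

def nearest_centroid_accuracy(x_train, y_train, x_test, y_test):
    """Fit class centroids on the training set and score nearest-centroid predictions."""
    classes = np.unique(y_train)
    centroids = np.stack([x_train[y_train == c].mean(axis=0) for c in classes])
    # Squared Euclidean distance from every test sample to every centroid.
    dists = ((x_test[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    predictions = classes[dists.argmin(axis=1)]
    return float((predictions == y_test).mean())

rng = np.random.default_rng(0)
n_per_class, n_classes, n_features = 400, 5, 20

# Synthetic data (illustrative only): five well-separated classes.
labels = np.repeat(np.arange(n_classes), n_per_class)
class_means = rng.normal(scale=3.0, size=(n_classes, n_features))
features = class_means[labels] + rng.normal(size=(labels.size, n_features))

# Random split into training and test halves.
order = rng.permutation(labels.size)
train, test = order[: labels.size // 2], order[labels.size // 2 :]

true_acc = nearest_centroid_accuracy(
    features[train], labels[train], features[test], labels[test]
)

# Shuffling breaks the link between features and labels.
shuffled = rng.permutation(labels)
null_acc = nearest_centroid_accuracy(
    features[train], shuffled[train], features[test], shuffled[test]
)
```

Here `true_acc` reflects the structure built into the synthetic data, while `null_acc` estimates what the same classifier achieves when no label information is available.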

Discussion (Lines 362-368):

“Task-related movements, which also express in lower frequency ranges, did not explain these results given the near chance-level performance of alternative decoders trained on (a) artefact-related ICA components removed during MEG preprocessing (Figure 3 – figure supplement 3A-C) and on (b) task-related eye movement features (Figure 4 – figure supplement 3B, C). This explanation is also inconsistent with the minimal average head motion of 1.159 mm (± 1.077 SD) across the MEG recording (Figure 3 – figure supplement 3D).”

(2) Relatedly, the use of a wide time window (~200 ms) for a 250-330 ms typing speed makes it hard to pinpoint the changes underpinning learning,

The revised manuscript now includes analyses carried out with decoding time windows ranging from 50 to 250ms in duration. These additional results are now reported in:

Results (lines 258-261):

“The improved decoding accuracy is supported by greater differentiation in neural representations of the index finger keypresses performed at positions 1 and 5 of the sequence (Figure 4A), and by the trial-by-trial increase in 2-class decoding accuracy over early learning (Figure 4C) across different decoder window durations (Figure 4 – figure supplement 2).”

Results (lines 310-312):

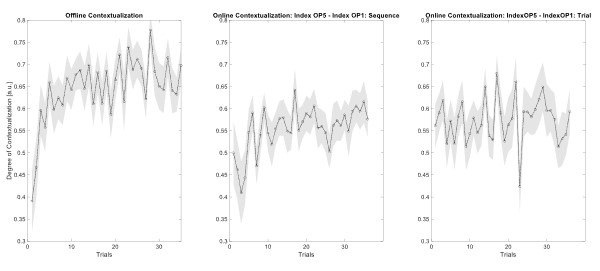

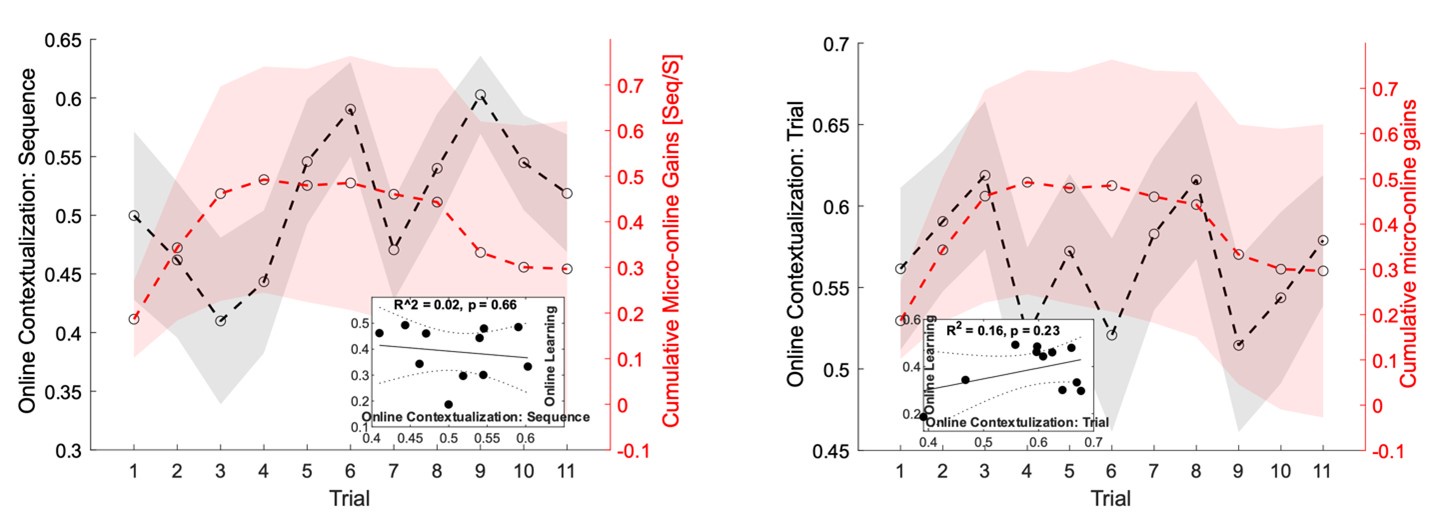

“Offline contextualization strongly correlated with cumulative micro-offline gains (r = 0.903, R2 = 0.816, p < 0.001; Figure 5 – figure supplement 1A, inset) across decoder window durations ranging from 50 to 250ms (Figure 5 – figure supplement 1B, C).”

Discussion (lines 382-385):

“This was further supported by the progressive differentiation of neural representations of the index finger keypress (Figure 4A) and by the robust trial-by-trial increase in 2-class decoding accuracy across time windows ranging between 50 and 250ms (Figure 4C; Figure 4 – figure supplement 2).”

Discussion (lines 408-9):

“Offline contextualization consistently correlated with early learning gains across a range of decoding windows (50–250ms; Figure 5 – figure supplement 1).”

(3) These concerns make it hard to conclude from their data that learning is mediated by "contextualisation" ---a key claim in the manuscript;

We believe the revised manuscript now addresses all concerns raised in Editor points 1 and 2.

(4) The hybrid voxel + parcel space decoder ---a key contribution of the paper--- is not clearly explained;

We now provide additional details regarding the hybrid-space decoder approach in the following sections of the revised manuscript:

Results (lines 158-172):

“Next, given that the brain simultaneously processes information more efficiently across multiple spatial and temporal scales [28, 32, 33], we asked if the combination of lower resolution whole-brain and higher resolution regional brain activity patterns further improve keypress prediction accuracy. We constructed hybrid-space decoders (N = 1295 ± 20 features; Figure 3A) combining whole-brain parcel-space activity (n = 148 features; Figure 2B) with regional voxel-space activity from a data-driven subset of brain areas (n = 1147 ± 20 features; Figure 2D). This subset covers brain regions showing the highest regional voxel-space decoding performances (top regions across all subjects shown in Figure 2D; Methods – Hybrid Spatial Approach).

[…]

Note that while features from contralateral brain regions were more important for whole-brain decoding (in both parcel- and voxel-spaces), regional voxel-space decoders performed best for bilateral sensorimotor areas on average across the group. Thus, a multi-scale hybrid-space representation best characterizes the keypress action manifolds.”

Results (lines 275-282):

“We used a Euclidean distance measure to evaluate the differentiation of the neural representation manifold of the same action (i.e. - an index-finger keypress) executed within different local sequence contexts (i.e. - ordinal position 1 vs. ordinal position 5; Figure 5). To make these distance measures comparable across participants, a new set of classifiers was then trained with group-optimal parameters (i.e. – broadband hybrid-space MEG data with subsequent manifold extraction (Figure 3 – figure supplement 2) and LDA classifiers (Figure 3 – figure supplement 7) trained on 200ms duration windows aligned to the KeyDown event (see Methods, Figure 3 – figure supplement 5)).”
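
A minimal sketch of such a distance measure is given below. The excerpt does not specify how keypresses are aggregated before the distance is taken, so using the mean feature vector (centroid) of each ordinal position is an assumption, and the function name and toy values are hypothetical.

```python
import numpy as np

def contextualization_distance(features_op1, features_op5):
    """Euclidean distance between mean feature vectors of two ordinal positions.

    Each input has shape (n_keypresses, n_features).
    """
    centroid_op1 = np.asarray(features_op1, dtype=float).mean(axis=0)
    centroid_op5 = np.asarray(features_op5, dtype=float).mean(axis=0)
    return float(np.linalg.norm(centroid_op1 - centroid_op5))

# Toy feature vectors (illustrative only) for index-finger presses
# at ordinal positions 1 and 5.
op1 = np.array([[0.0, 0.0], [0.0, 2.0]])
op5 = np.array([[3.0, 5.0], [3.0, 5.0]])
distance = contextualization_distance(op1, op5)
```

A larger value means the two sets of keypresses occupy more distinct locations in feature space. Comparing presses within a trial or across a rest period only changes which keypresses are passed in.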

Discussion (lines 341-360):

“The initial phase of the study focused on optimizing the accuracy of decoding individual finger keypresses from MEG brain activity. Recent work showed that the brain simultaneously processes information more efficiently across multiple, rather than a single, spatial scale(s) [28, 32]. To this effect, we developed a novel hybrid-space approach designed to integrate neural representation dynamics over two different spatial scales: (1) whole-brain parcel-space (i.e. – spatial activity patterns across all cortical brain regions) and (2) regional voxel-space (i.e. – spatial activity patterns within select brain regions) activity. We found consistent spatial differences between whole-brain parcel-space feature importance (predominantly contralateral frontoparietal, Figure 2B) and regional voxel-space decoder accuracy (bilateral sensorimotor regions, Figure 2D). The whole-brain parcel-space decoder likely emphasized more stable activity patterns in contralateral frontoparietal regions that differed between individual finger movements [21, 35], while the regional voxel-space decoder likely incorporated information related to adaptive interhemispheric interactions operating during motor sequence learning [32, 36, 37], particularly pertinent when the skill is performed with the non-dominant hand [38-40]. The observation of increased cross-validated test accuracy (as shown in Figure 3 – Figure Supplement 6) indicates that the spatially overlapping information in parcel- and voxel-space time-series in the hybrid decoder was complementary, rather than redundant [41]. The hybrid-space decoder, which achieved an accuracy exceeding 90% and robustly generalized to Day 2 across trained and untrained sequences, surpassed the performance of both parcel-space and voxel-space decoders and compared favorably to other neuroimaging-based finger movement decoding strategies [6, 24, 42-44].”

Methods (lines 636-647):

“Hybrid Spatial Approach. First, we evaluated the decoding performance of each individual brain region in accurately labeling finger keypresses from regional voxel-space (i.e. - all voxels within a brain region as defined by the Desikan-Killiany Atlas) activity. Brain regions were then ranked from 1 to 148 based on their decoding accuracy at the group level. In a stepwise manner, we then constructed a “hybrid-space” decoder by incrementally concatenating regional voxel-space activity of brain regions—starting with the top-ranked region—with whole-brain parcel-level features and assessed decoding accuracy. Subsequently, we added the regional voxel-space features of the second-ranked brain region and continued this process until decoding accuracy reached saturation. The optimal “hybrid-space” input feature set over the group included the 148 parcel-space features and regional voxel-space features from a total of 8 brain regions (bilateral superior frontal, middle frontal, pre-central and post-central; N = 1295 ± 20 features).”
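The stepwise construction quoted above can be illustrated with a minimal sketch. It is not the pipeline used in the study: the region names, the `toy_score` function and the `min_gain` stopping threshold are hypothetical stand-ins, and "saturation" is assumed here to mean that adding the next-ranked region no longer improves accuracy by at least `min_gain`.

```python
from typing import Callable, List, Sequence, Tuple


def build_hybrid_feature_set(
    ranked_regions: Sequence[str],
    score: Callable[[List[str]], float],
    min_gain: float = 0.001,
) -> Tuple[List[str], float]:
    """Add regional voxel-space features in rank order until accuracy saturates.

    `score` returns the decoding accuracy obtained when the voxel-space
    features of the given regions are concatenated with the parcel-space
    features (an empty list means parcel-space features only).
    """
    selected: List[str] = []
    best = score(selected)  # baseline: whole-brain parcel-space features only
    for region in ranked_regions:
        candidate = selected + [region]
        accuracy = score(candidate)
        if accuracy - best < min_gain:
            break  # assumed saturation criterion (hypothetical threshold)
        selected, best = candidate, accuracy
    return selected, best


def toy_score(regions: List[str]) -> float:
    """Hypothetical scoring function standing in for cross-validated accuracy."""
    gains = {"precentral_R": 0.05, "postcentral_R": 0.03, "superiorfrontal_R": 0.01}
    return 0.80 + sum(gains.get(region, 0.0) for region in regions)


# Regions are assumed to be pre-sorted by their individual decoding accuracy.
ranked = ["precentral_R", "postcentral_R", "superiorfrontal_R", "cuneus_L", "insula_L"]
selected, accuracy = build_hybrid_feature_set(ranked, toy_score)
print(selected, round(accuracy, 3))
```

In this toy run the loop stops at the fourth region because it adds nothing to the score, which mirrors the idea of stopping once accuracy no longer improves.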

(5) More controls are needed to show that their decoder approach is capturing a neural representation dedicated to context rather than independent representations of consecutive keypresses;

These controls have been implemented and are now reported in the manuscript:

Results (lines 318-328):

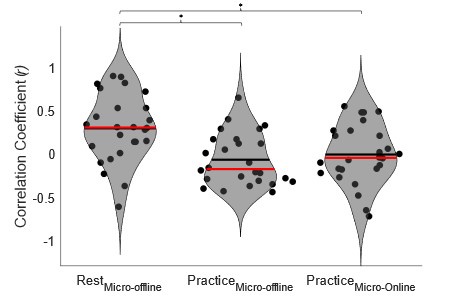

“Within-subject correlations were consistent with these group-level findings. The average correlation between offline contextualization and micro-offline gains within individuals was significantly greater than zero (Figure 5 – figure supplement 4, left; t = 3.87, p = 0.00035, df = 25, Cohen's d = 0.76) and stronger than correlations between online contextualization and either micro-online (Figure 5 – figure supplement 4, middle; t = 3.28, p = 0.0015, df = 25, Cohen's d = 1.2) or micro-offline gains (Figure 5 – figure supplement 4, right; t = 3.7021, p = 5.3013e-04, df = 25, Cohen's d = 0.69). These findings were not explained by behavioral changes of typing rhythm (t = -0.03, p = 0.976; Figure 5 – figure supplement 5), adjacent keypress transition times (R2 = 0.00507, F[1,3202] = 16.3; Figure 5 – figure supplement 6), or overall typing speed (between-subject; R2 = 0.028, p = 0.41; Figure 5 – figure supplement 7).”

Results (lines 385-390):

“Further, the 5-class classifier—which directly incorporated information about the sequence location context of each keypress into the decoding pipeline—improved decoding accuracy relative to the 4-class classifier (Figure 4C). Importantly, testing on Day 2 revealed specificity of this representational differentiation for the trained skill but not for the same keypresses performed during various unpracticed control sequences (Figure 5C).”

Discussion (lines 408-423):

“Offline contextualization consistently correlated with early learning gains across a range of decoding windows (50–250ms; Figure 5 – figure supplement 1). This result remained unchanged when measuring offline contextualization between the last and second sequence of consecutive trials, inconsistent with a possible confounding effect of pre-planning [30] (Figure 5 – figure supplement 2A). On the other hand, online contextualization did not predict learning (Figure 5 – figure supplement 3). Consistent with these results, the average within-subject correlation between offline contextualization and micro-offline gains was significantly stronger than within-subject correlations between online contextualization and either micro-online or micro-offline gains (Figure 5 – figure supplement 4).

Offline contextualization was not driven by trial-by-trial behavioral differences, including typing rhythm (Figure 5 – figure supplement 5) and adjacent keypress transition times (Figure 5 – figure supplement 6) nor by between-subject differences in overall typing speed (Figure 5 – figure supplement 7)—ruling out a reliance on differences in the temporal overlap of keypresses. Importantly, offline contextualization documented on Day 1 stabilized once a performance plateau was reached (trials 11-36), and was retained on Day 2, documenting overnight consolidation of the differentiated neural representations.”

(6) The need to show more convincingly that their data is not affected by head movements, e.g., by regressing out signal components that are correlated with the fiducial signal;

We now include data in Figure 3 – figure supplement 3D showing that head movement was minimal in all participants (mean of 1.159 mm ± 1.077 SD). Further, the requested additional control analyses have been carried out and are reported in the revised manuscript:

Results (lines 204-211):

“Testing the keypress state (4-class) hybrid decoder performance on Day 1 after randomly shuffling keypress labels for held-out test data resulted in a performance drop approaching expected chance levels (22.12% ± SD 9.1%; Figure 3 – figure supplement 3C). An alternate decoder trained on ICA components labeled as movement or physiological artefacts (e.g. – head movement, ECG, eye movements and blinks; Figure 3 – figure supplement 3A, D) and removed from the original input feature set during the pre-processing stage approached chance-level performance (Figure 4 – figure supplement 3), indicating that the 4-class hybrid decoder results were not driven by task-related artefacts.”

Results (lines 261-268):

“As expected, the 5-class hybrid-space decoder performance approached chance levels when tested with randomly shuffled keypress labels (18.41% ± SD 7.4% for Day 1 data; Figure 4 – figure supplement 3C). Task-related eye movements did not explain these results since an alternate 5-class hybrid decoder constructed from three eye movement features (gaze position at the KeyDown event, gaze position 200ms later, and peak eye movement velocity within this window; Figure 4 – figure supplement 3A) performed at chance levels (cross-validated test accuracy = 0.2181; Figure 4 – figure supplement 3B, C).”
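The logic of the shuffled-label control can be sketched as follows. This is an illustration under stated assumptions, not the analysis code from the study: the function names are hypothetical, the toy data assume five equally frequent classes, and the "decoder" is assumed to be perfect before shuffling so that any remaining accuracy reflects chance alone.

```python
import random
from collections import Counter


def shuffled_label_accuracy(predictions, labels, n_permutations=200, seed=0):
    """Mean accuracy of fixed predictions against randomly permuted labels."""
    rng = random.Random(seed)
    shuffled = list(labels)
    total = 0.0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)  # break the link between features and labels
        hits = sum(p == y for p, y in zip(predictions, shuffled))
        total += hits / len(shuffled)
    return total / n_permutations


def expected_chance(predictions, labels):
    """Analytic expectation of accuracy under a random label permutation."""
    n = len(labels)
    pred_freq = Counter(predictions)
    label_freq = Counter(labels)
    return sum(pred_freq[c] * label_freq[c] for c in label_freq) / (n * n)


# Toy data: 5 balanced classes and a decoder that is perfect before shuffling.
labels = [k % 5 for k in range(1000)]
predictions = list(labels)

chance = expected_chance(predictions, labels)
accuracy = shuffled_label_accuracy(predictions, labels)
print(round(chance, 3), round(accuracy, 3))
```

With balanced classes the expectation equals one over the number of classes. When class frequencies are unequal, the expected shuffled accuracy departs from that value, which is why the analytic expectation is computed from the observed frequencies rather than hard-coded.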

Discussion (Lines 362-368):

“Task-related movements—which also express in lower frequency ranges—did not explain these results given the near chance-level performance of alternative decoders trained on (a) artefact-related ICA components removed during MEG preprocessing (Figure 3 – figure supplement 3A-C) and on (b) task-related eye movement features (Figure 4 – figure supplement 3B, C). This explanation is also inconsistent with the minimal average head motion of 1.159 mm (± 1.077 SD) across the MEG recording (Figure 3 – figure supplement 3D).”

(7) The offline neural representation analysis as executed is a bit odd, since it seems to be based on comparing the last key press to the first key press of the next sequence, rather than focus on the inter-sequence interval

While we previously evaluated replay of skill sequences during rest intervals, identification of how offline reactivation patterns of a single keypress state representation evolve with learning presents non-trivial challenges. First, replay events tend to occur in clusters with irregular temporal spacing as previously shown by our group and others. Second, replay of experienced sequences is intermixed with replay of sequences that have never been experienced but are possible. Finally, and perhaps the most significant issue, replay is temporally compressed up to 20x with respect to the behavior [6]. That means our decoders would need to accurately evaluate spatial pattern changes related to individual keypresses over much smaller time windows (i.e. - less than 10 ms) than evaluated here. This future work, which is undoubtedly of great interest to our research group, will require more substantial tool development before we can apply these tools to this question. We now articulate this future direction in the Discussion:

Discussion (lines 423-427):

“A possible neural mechanism supporting contextualization could be the emergence and stabilization of conjunctive “what–where” representations of procedural memories [64] with the corresponding modulation of neuronal population dynamics [65, 66] during early learning. Exploring the link between contextualization and neural replay could provide additional insights into this issue [6, 12, 13, 15].”

(8) And this analysis could be confounded by the fact that they are comparing the last element in a sequence vs the first movement in a new one.

We have now addressed this control analysis in the revised manuscript:

Results (Lines 310-316)

“Offline contextualization strongly correlated with cumulative micro-offline gains (r = 0.903, R2 = 0.816, p < 0.001; Figure 5 – figure supplement 1A, inset) across decoder window durations ranging from 50 to 250ms (Figure 5 – figure supplement 1B, C). The offline contextualization between the final sequence of each trial and the second sequence of the subsequent trial (excluding the first sequence) yielded comparable results. This indicates that pre-planning at the start of each practice trial did not directly influence the offline contextualization measure [30] (Figure 5 – figure supplement 2A, 1st vs. 2nd Sequence approaches).”

Discussion (lines 408-416):

“Offline contextualization consistently correlated with early learning gains across a range of decoding windows (50–250ms; Figure 5 – figure supplement 1). This result remained unchanged when measuring offline contextualization between the last and second sequence of consecutive trials, inconsistent with a possible confounding effect of pre-planning [30] (Figure 5 – figure supplement 2A). On the other hand, online contextualization did not predict learning (Figure 5 – figure supplement 3). Consistent with these results the average within-subject correlation between offline contextualization and micro-offline gains was significantly stronger than within-subject correlations between online contextualization and either micro-online or micro-offline gains (Figure 5 – figure supplement 4).”

It also seems to be the case that many analyses suggested by the reviewers in the first round of revisions that could have helped strengthen the manuscript have not been included (they are only in the rebuttal). Moreover, some of the control analyses mentioned in the rebuttal seem not to be described anywhere, neither in the manuscript, nor in the rebuttal itself; please double check that.

All suggested analyses carried out and mentioned are now in the revised manuscript.

eLife Assessment

This valuable study investigates how the neural representation of individual finger movements changes during the early period of sequence learning. By combining a new method for extracting features from human magnetoencephalography data and decoding analyses, the authors provide incomplete evidence of an early, swift change in the brain regions correlated with sequence learning…

We have now included all the requested control analyses supporting “an early, swift change in the brain regions correlated with sequence learning”:

The addition of more control analyses to rule out that head movement artefacts influence the findings,

We now include data in Figure 3 – figure supplement 3D showing that head movement was minimal in all participants (mean of 1.159 mm ± 1.077 SD). Further, we have implemented the requested additional control analyses addressing this issue:

Results (lines 207-211):

“An alternate decoder trained on ICA components labeled as movement or physiological artefacts (e.g. – head movement, ECG, eye movements and blinks; Figure 3 – figure supplement 3A, D) and removed from the original input feature set during the pre-processing stage approached chance-level performance (Figure 4 – figure supplement 3), indicating that the 4-class hybrid decoder results were not driven by task-related artefacts.”

Results (lines 261-268):

“As expected, the 5-class hybrid-space decoder performance approached chance levels when tested with randomly shuffled keypress labels (18.41% ± SD 7.4% for Day 1 data; Figure 4 – figure supplement 3C). Task-related eye movements did not explain these results since an alternate 5-class hybrid decoder constructed from three eye movement features (gaze position at the KeyDown event, gaze position 200ms later, and peak eye movement velocity within this window; Figure 4 – figure supplement 3A) performed at chance levels (cross-validated test accuracy = 0.2181; Figure 4 – figure supplement 3B, C).”

Discussion (Lines 362-368):

“Task-related movements—which also express in lower frequency ranges—did not explain these results given the near chance-level performance of alternative decoders trained on (a) artefact-related ICA components removed during MEG preprocessing (Figure 3 – figure supplement 3A-C) and on (b) task-related eye movement features (Figure 4 – figure supplement 3B, C). This explanation is also inconsistent with the minimal average head motion of 1.159 mm (± 1.077 SD) across the MEG recording (Figure 3 – figure supplement 3D).”

and to further explain the proposal of offline contextualization during short rest periods as the basis for improvement in performance would strengthen the manuscript.

We have edited the manuscript to clarify that the degree of representational differentiation (contextualization) parallels skill learning. We have no evidence at this point to indicate that “offline contextualization during short rest periods is the basis for improvement in performance”. The following areas of the revised manuscript now clarify this point:

Summary (Lines 455-458):

“In summary, individual sequence action representations contextualize during early learning of a new skill and the degree of differentiation parallels skill gains. Differentiation of the neural representations developed during rest intervals of early learning to a larger extent than during practice in parallel with rapid consolidation of skill.”

Additional control analyses are also provided supporting a link between offline contextualization and early learning:

Results (lines 302-318):

“The Euclidian distance between neural representations of IndexOP1 (i.e. - index finger keypress at ordinal position 1 of the sequence) and IndexOP5 (i.e. - index finger keypress at ordinal position 5 of the sequence) increased progressively during early learning (Figure 5A)—predominantly during rest intervals (offline contextualization) rather than during practice (online) (t = 4.84, p < 0.001, df = 25, Cohen's d = 1.2; Figure 5B; Figure 5 – figure supplement 1A). An alternative online contextualization determination equaling the time interval between online and offline comparisons (Trial-based; 10 seconds between IndexOP1 and IndexOP5 observations in both cases) rendered a similar result (Figure 5 – figure supplement 2B).

Offline contextualization strongly correlated with cumulative micro-offline gains (r = 0.903, R2 = 0.816, p < 0.001; Figure 5 – figure supplement 1A, inset) across decoder window durations ranging from 50 to 250ms (Figure 5 – figure supplement 1B, C). The offline contextualization between the final sequence of each trial and the second sequence of the subsequent trial (excluding the first sequence) yielded comparable results. This indicates that pre-planning at the start of each practice trial did not directly influence the offline contextualization measure [30] (Figure 5 – figure supplement 2A, 1st vs. 2nd Sequence approaches). Conversely, online contextualization (using either measurement approach) did not explain early online learning gains (i.e. – Figure 5 – figure supplement 3).”
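The distance measure referred to in this passage can be illustrated with a short sketch. It assumes that each keypress observation has already been reduced to a low-dimensional feature vector; the function names and the toy vectors are hypothetical. Which pairs of observations are compared (within a practice trial for the online measure, or across a rest interval for the offline measure) is left to the caller and is not reproduced here.

```python
import math


def euclidean_distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contextualization_per_trial(op1_by_trial, op5_by_trial):
    """Distance between the IndexOP1 and IndexOP5 representations for each trial."""
    return [euclidean_distance(a, b) for a, b in zip(op1_by_trial, op5_by_trial)]


# Toy 2-D representations for three trials (illustrative values only).
op1 = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
op5 = [[0.3, 0.4], [0.6, 0.8], [0.9, 1.2]]

distances = contextualization_per_trial(op1, op5)
print([round(d, 3) for d in distances])
```

In this toy example the distance grows from trial to trial, which is the pattern described as progressive differentiation.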

Public Reviews:

Reviewer #1 (Public review):

Summary:

This study addresses the issue of rapid skill learning and whether individual sequence elements (here: finger presses) are differentially represented in human MEG data. The authors use a decoding approach to classify individual finger elements and accomplish an accuracy of around 94%. A relevant finding is that the neural representations of individual finger elements dynamically change over the course of learning. This would be highly relevant for any attempts to develop better brain machine interfaces - one now can decode individual elements within a sequence with high precision, but these representations are not static but develop over the course of learning.

Strengths:

The work follows a large body of work from the same group on the behavioural and neural foundations of sequence learning. The behavioural task is well established and neatly designed to allow for tracking learning and how individual sequence elements contribute. The inclusion of short offline rest periods between learning epochs has been influential because it has revealed that a lot, if not most of the gains in behaviour (ie speed of finger movements) occur in these so-called micro-offline rest periods.

The authors use a range of new decoding techniques, and exhaustively interrogate their data in different ways, using different decoding approaches. Regardless of the approach, impressively high decoding accuracies are observed, but when using a hybrid approach that combines the MEG data in different ways, the authors observe decoding accuracies of individual sequence elements from the MEG data of up to 94%.

Weaknesses:

A formal analysis and quantification of how head movement may have contributed to the results should be included in the paper or supplemental material. The type of correlated head movements coming from vigorous key presses aren't necessarily visible to the naked eye, and even if arms etc are restricted, this will not preclude shoulder, neck or head movement necessarily; if ICA was conducted, for example, the authors are in the position to show the components that relate to such movement; but eye-balling the data would not seem sufficient. The related issue of eye movements is addressed via classifier analysis. A formal analysis which directly accounts for finger/eye movements in the same analysis as the main result (ie any variance related to these factors) should be presented.

We now present additional data related to head (Figure 3 – figure supplement 3; note that average measured head movement across participants was 1.159 mm ± 1.077 SD) and eye movements (Figure 4 – figure supplement 3) and have implemented the requested control analyses addressing this issue. They are reported in the revised manuscript in the following locations: Results (lines 207-211), Results (lines 261-268), Discussion (Lines 362-368).

This reviewer recommends inclusion of a formal analysis showing that the intra- vs. inter-parcel features are indeed completely independent. For example, the authors state that the inter-parcel features reflect "lower spatially resolved whole-brain activity patterns or global brain dynamics". A formal quantitative demonstration that the signals indeed show "complete independence" (as claimed by the authors) and are orthogonal would be helpful.

Please note that we never claim in the manuscript that the parcel-space and regional voxel-space features show “complete independence”. More importantly, input feature orthogonality is not a requirement for the machine learning-based decoding methods utilized in the present study while non-redundancy is [7] (a requirement satisfied by our data, see below). Finally, our results show that the hybrid-space decoder outperformed all other methods even after input features were fully orthogonalized with LDA (the procedure used in all contextualization analyses) or PCA dimensionality reduction procedures prior to the classification step (Figure 3 – figure supplement 2).

Relevant to this issue, please note that if spatially overlapping parcel- and voxel-space time-series only provided redundant information, inclusion of both as input features should increase model over-fitting to the training dataset and decrease overall cross-validated test accuracy [8]. In the present study, however, we see the opposite effect on decoder performance. First, Figure 3 – figure supplement 1 & 2 clearly show that decoders constructed from hybrid-space features outperform the other input feature (sensor-, whole-brain parcel- and whole-brain voxel-) spaces in every case (e.g. – wideband, all narrowband frequency ranges, and even after the input space is fully orthogonalized through dimensionality reduction procedures prior to the decoding step). Furthermore, Figure 3 – figure supplement 6 shows that hybrid-space decoder performance suffers when parcel time-series that spatially overlap with the included regional voxel-spaces are removed from the input feature set.

We state in the Discussion (lines 353-356)

“The observation of increased cross-validated test accuracy (as shown in Figure 3 – Figure Supplement 6) indicates that the spatially overlapping information in parcel- and voxel-space time-series in the hybrid decoder was complementary, rather than redundant [41].”

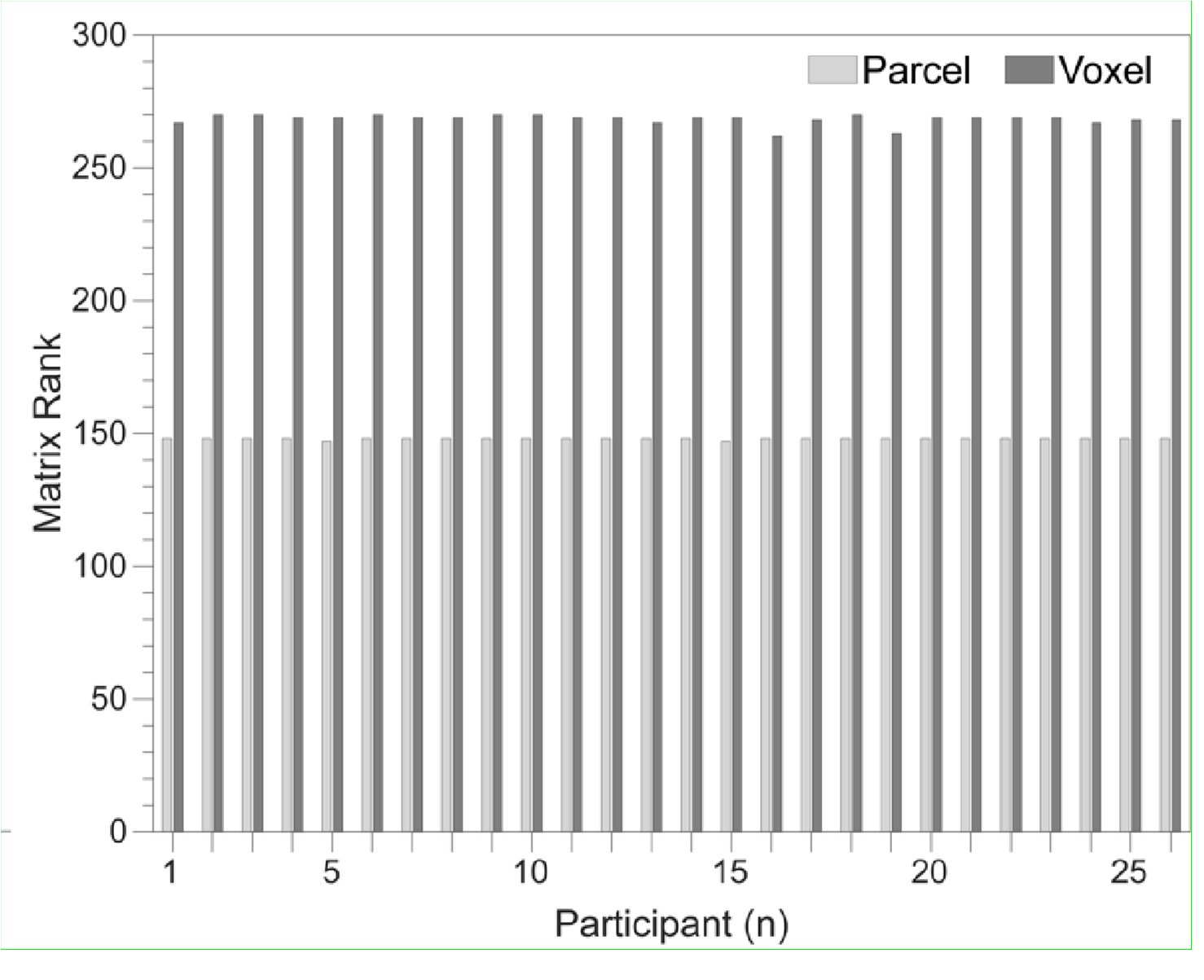

To gain insight into the complementary information contributed by the two spatial scales to the hybrid-space decoder, we first independently computed the matrix rank for whole-brain parcel- and voxel-space input features for each participant (shown in Author response image 1). The results indicate that whole-brain parcel-space input features are full rank (rank = 148) for all participants (i.e. - MEG activity is orthogonal between all parcels). The matrix rank of voxel-space input features (rank = 267 ± 17 SD) exceeded the parcel-space rank for all participants and approached the number of useable MEG sensor channels (n = 272). Thus, voxel-space features provide both additional and complementary information to representations at the parcel-space scale.

Author response image 1.

Matrix rank computed for whole-brain parcel- and voxel-space time-series in individual subjects across the training run. The results indicate that whole-brain parcel-space input features are full rank (rank = 148) for all participants (i.e. - MEG activity is orthogonal between all parcels). The matrix rank of voxel-space input features (rank = 267 ± 17 SD), on the other hand, approached the number of useable MEG sensor channels (n = 272). Although not full rank, the voxel-space rank exceeded the parcel-space rank for all participants. Thus, some voxel-space features provide additional orthogonal information to representations at the parcel-space scale. An expression of this is shown in the correlation distribution between parcel and constituent voxel time-series in Figure 2—figure Supplement 2.
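The rank comparison described above can be illustrated with a toy example. The sketch below requires NumPy and uses deliberately small, hypothetical dimensions (8 sensors, 20 voxels, 4 parcels) together with a random linear map standing in for source reconstruction; it is not the analysis run on the MEG data. It shows why the rank of source-space features derived linearly from sensor data cannot exceed the number of sensor channels, and why a smaller set of parcel averages can still be full rank.

```python
import numpy as np

rng = np.random.default_rng(0)
n_sensors, n_voxels, n_parcels, n_samples = 8, 20, 4, 200  # toy dimensions

# Sensor time-series and a random linear map standing in for source
# reconstruction (an assumption; the real inverse operator is not random).
sensors = rng.standard_normal((n_sensors, n_samples))
inverse_operator = rng.standard_normal((n_voxels, n_sensors))
voxels = inverse_operator @ sensors  # voxel-space time-series

# Parcel time-series as the mean of the voxels assigned to each parcel.
voxels_per_parcel = n_voxels // n_parcels
parcel_of_voxel = np.repeat(np.arange(n_parcels), voxels_per_parcel)
averaging = np.zeros((n_parcels, n_voxels))
averaging[parcel_of_voxel, np.arange(n_voxels)] = 1.0 / voxels_per_parcel
parcels = averaging @ voxels  # parcel-space time-series

voxel_rank = int(np.linalg.matrix_rank(voxels))
parcel_rank = int(np.linalg.matrix_rank(parcels))
print(voxel_rank, parcel_rank)
```

In this toy setting the voxel-space rank is capped by the sensor count while the parcel-space features are full rank, so the voxel space carries dimensions that the parcel averages do not span.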

Figure 2—figure Supplement 2 in the revised manuscript now shows that the degree of dependence between the two spatial scales varies over the regional voxel-space. That is, some voxels within a given parcel correlate strongly with the time-series of the parcel they belong to, while others do not. This finding is consistent with a documented increase in correlational structure of neural activity across spatial scales that does not reflect perfect dependency or orthogonality [9]. Notably, the regional voxel-spaces included in the hybrid-space decoder are significantly less correlated with the averaged parcel-space time-series than excluded voxels. We now point readers to this new figure in the results.

Taken together, these results indicate that the multi-scale information in the hybrid feature set is complementary rather than orthogonal. This is consistent with the idea that hybrid-space features better represent multi-scale temporospatial dynamics reported to be a fundamental characteristic of how the brain stores and adapts memories, and generates behavior across species [9].

Reviewer #2 (Public review):

Summary:

The current paper consists of two parts. The first part is the rigorous feature optimization of the MEG signal to decode individual finger identity performed in a sequence (4-1-3-2-4; 1~4 corresponds to little~index fingers of the left hand). By optimizing various parameters for the MEG signal, in terms of (i) reconstructed source activity in voxel- and parcel-level resolution and their combination, (ii) frequency bands, and (iii) time window relative to press onset for each finger movement, as well as the choice of decoders, the resultant "hybrid decoder" achieved extremely high decoding accuracy (~95%). This part seems driven almost by pure engineering interest in gaining as high decoding accuracy as possible.

In the second part of the paper, armed with the successful 'hybrid decoder,' the authors asked more scientific questions about how neural representation of individual finger movement that is embedded in a sequence, changes during a very early period of skill learning and whether and how such representational change can predict skill learning. They assessed the difference in MEG feature patterns between the first and the last press 4 in sequence 41324 at each training trial and found that the pattern differentiation progressively increased over the course of early learning trials. Additionally, they found that this pattern differentiation specifically occurred during the rest period rather than during the practice trial. With a significant correlation between the trial-by-trial profile of this pattern differentiation and that for accumulation of offline learning, the authors argue that such "contextualization" of finger movement in a sequence (e.g., what-where association) underlies the early improvement of sequential skill. This is an important and timely topic for the field of motor learning and beyond.

Strengths:

Each part has its own strength. For the first part, the use of temporally rich neural information (MEG signal) has a significant advantage over previous studies testing sequential representations using fMRI. This allowed the authors to examine the earliest period (= the first few minutes of training) of skill learning with finer temporal resolution. Through the optimization of MEG feature extraction, the current study achieved extremely high decoding accuracy (approx. 94%) compared to previous works. For the second part, the finding of the early "contextualization" of the finger movement in a sequence and its correlation to early (offline) skill improvement is interesting and important. The comparison between "online" and "offline" pattern distance is a neat idea.

Weaknesses:

Despite the strengths raised, the specific goal for each part of the current paper, i.e., achieving high decoding accuracy and answering the scientific question of early skill learning, seems not to harmonize with each other very well. In short, the current approach, which is solely optimized for achieving high decoding accuracy, does not provide enough support and interpretability for the paper's interesting scientific claim. This reminds me of the accuracy-explainability tradeoff in machine learning studies (e.g., Linardatos et al., 2020). More details follow.

There are a number of different neural processes occurring before and after a key press, such as planning of upcoming movement and ahead around premotor/parietal cortices, motor command generation in primary motor cortex, sensory feedback related processes in sensory cortices, and performance monitoring/evaluation around the prefrontal area. Some of these may show learning-dependent change and others may not.

In this paper, the focus as stated in the Introduction was to evaluate “the millisecond-level differentiation of discrete action representations during learning”, a proposal that first required the development of more accurate computational tools. Our first step, reported here, was to develop that tool. With that in hand, we then proceeded to test if neural representations differentiated during early skill learning. Our results showed they did. Addressing the question the Reviewer asks is part of exciting future work, now possible based on the results presented in this paper. We acknowledge this issue in the revised Discussion:

Discussion (Lines 428-434):

“In this study, classifiers were trained on MEG activity recorded during or immediately after each keypress, emphasizing neural representations related to action execution, memory consolidation and recall over those related to planning. An important direction for future research is determining whether separate decoders can be developed to distinguish the representations or networks separately supporting these processes. Ongoing work in our lab is addressing this question. The present accuracy results across varied decoding window durations and alignment with each keypress action support the feasibility of this approach (Figure 3—figure supplement 5).”

Given the use of whole-brain MEG features with a wide time window (up to ~200 ms after each key press) under the situation of 3~4 Hz (i.e., 250~330 ms press interval) typing speed, these different processes in different brain regions could have contributed to the expression of the "contextualization," making it difficult to interpret what really contributed to the "contextualization" and whether it is learning related. Critically, the majority of data used for decoder training has the chance of such potential overlap of signal, as the typing speed almost reached a plateau already at the end of the 11th trial and stayed until the 36th trial. Thus, the decoder could have relied on such overlapping features related to the future presses. If that is the case, a gradual increase in "contextualization" (pattern separation) during earlier trials makes sense, simply because the temporal overlap of the MEG feature was insufficient for the earlier trials due to slower typing speed. Direct ways to address the above concern, at the cost of some decoding accuracy, would be either to use a shorter temporal window for the MEG feature or to train the model with the early learning period data only (trials 1 through 11) and see whether the main results are unaffected.

We now include additional analyses carried out with decoding time windows ranging from 50 to 250ms in duration, which have been added to the revised manuscript as follows:

Results (lines 258-261):

“The improved decoding accuracy is supported by greater differentiation in neural representations of the index finger keypresses performed at positions 1 and 5 of the sequence (Figure 4A), and by the trial-by-trial increase in 2-class decoding accuracy over early learning (Figure 4C) across different decoder window durations (Figure 4 – figure supplement 2).”

Results (lines 310-312):

“Offline contextualization strongly correlated with cumulative micro-offline gains (r = 0.903, R2 = 0.816, p < 0.001; Figure 5 – figure supplement 1A, inset) across decoder window durations ranging from 50 to 250ms (Figure 5 – figure supplement 1B, C).”

Discussion (lines 382-385):

“This was further supported by the progressive differentiation of neural representations of the index finger keypress (Figure 4A) and by the robust trial-by trial increase in 2-class decoding accuracy across time windows ranging between 50 and 250ms (Figure 4C; Figure 4 – figure supplement 2).”

Discussion (lines 408-9):

“Offline contextualization consistently correlated with early learning gains across a range of decoding windows (50–250ms; Figure 5 – figure supplement 1).”

Several new control analyses are also provided addressing the question of overlapping keypresses:

Reviewer #3 (Public review):

Summary:

One goal of this paper is to introduce a new approach for highly accurate decoding of finger movements from human magnetoencephalography data via dimension reduction of a "multi-scale, hybrid" feature space. Following this decoding approach, the authors aim to show that early skill learning involves "contextualization" of the neural coding of individual movements, relative to their position in a sequence of consecutive movements.

Furthermore, they aim to show that this "contextualization" develops primarily during short rest periods interspersed with skill training and correlates with a performance metric which the authors interpret as an indicator of offline learning.

Strengths:

A strength of the paper is the innovative decoding approach, which achieves impressive decoding accuracies via dimension reduction of a "multi-scale, hybrid space". This hybrid-space approach follows the neurobiologically plausible idea of concurrent distribution of neural coding across local circuits as well as large-scale networks. A further strength of the study is the large number of tested dimension reduction techniques and classifiers.

Weaknesses:

A clear weakness of the paper lies in the authors' conclusions regarding "contextualization". Several potential confounds, which partly arise from the experimental design (mainly the use of a single sequence) and which are described below, question the neurobiological implications proposed by the authors and provide a simpler explanation of the results. Furthermore, the paper follows the assumption that short breaks result in offline skill learning, while recent evidence, described below, casts doubt on this assumption.

Please, see below for detailed response to each of these points.

Specifically: The authors interpret the ordinal position information captured by their decoding approach as a reflection of neural coding dedicated to the local context of a movement (Figure 4). One way to dissociate ordinal position information from information about the moving effectors is to train a classifier on one sequence and test the classifier on other sequences that require the same movements, but in different positions (Kornysheva et al., Neuron 2019). In the present study, however, participants trained to repeat a single sequence (4-1-3-2-4).
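
The cross-sequence test described above can be illustrated with a minimal sketch on synthetic data. It does not reproduce the analysis pipeline of Kornysheva et al. or of the present study: the nearest-centroid classifier, the feature dimensionality, the noise level, and the `position_gain` parameter are all assumptions made for illustration. The training sequence (4-1-3-2-4) and the test sequence (3-4-2-3-1, one of the Day 2 control sequences) are taken from the manuscript.

```python
import numpy as np

TRAIN_SEQ = (4, 1, 3, 2, 4)  # trained sequence from the manuscript
TEST_SEQ = (3, 4, 2, 3, 1)   # a Day 2 control sequence; no finger shares a position with TRAIN_SEQ


def simulate_trials(sequence, n_reps, finger_patterns, position_patterns,
                    position_gain, noise_sd, rng):
    """Synthetic keypress features: finger pattern + scaled position pattern + noise."""
    X, y = [], []
    for _ in range(n_reps):
        for pos, finger in enumerate(sequence):
            signal = finger_patterns[finger] + position_gain * position_patterns[pos]
            X.append(signal + rng.normal(0.0, noise_sd, signal.shape))
            y.append(pos)  # label is the ordinal position, not the finger
    return np.array(X), np.array(y)


def cross_sequence_accuracy(position_gain, seed=0, n_features=50, noise_sd=1.0):
    """Train an ordinal-position classifier on TRAIN_SEQ and test it on TEST_SEQ."""
    rng = np.random.default_rng(seed)
    finger_patterns = {f: rng.normal(size=n_features) for f in (1, 2, 3, 4)}
    position_patterns = rng.normal(size=(5, n_features))
    X_tr, y_tr = simulate_trials(TRAIN_SEQ, 40, finger_patterns, position_patterns,
                                 position_gain, noise_sd, rng)
    X_te, y_te = simulate_trials(TEST_SEQ, 40, finger_patterns, position_patterns,
                                 position_gain, noise_sd, rng)
    # Nearest-centroid classifier (a stand-in; any classifier could be used here).
    centroids = np.stack([X_tr[y_tr == p].mean(axis=0) for p in range(5)])
    dists = ((X_te[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return float((dists.argmin(axis=1) == y_te).mean())


acc_finger_only = cross_sequence_accuracy(position_gain=0.0)    # no position code
acc_with_position = cross_sequence_accuracy(position_gain=3.0)  # strong position code
```

In this toy setting, a classifier trained on position labels transfers to the second sequence only when the simulated signal contains an effector-independent position component. When the signal contains finger information alone, the classifier follows the finger rather than the rank, and cross-sequence accuracy falls below the 20% chance level.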

A crucial difference between our present study and the elegant study by Kornysheva et al. (Neuron 2019) highlighted by the Reviewer is that ours is a learning study, whereas theirs is not. Kornysheva et al. included an initial, separate behavioral training session (i.e., performed outside of the MEG) during which participants learned associations between fractal image patterns and different keypress sequences. Then, in a separate, later MEG session, after the stimulus-response associations had already been learned in the first session, participants were tasked with recalling the learned sequences in response to a presented visual cue (i.e., the paired fractal pattern).

Our rationale for not including multiple sequences in the same Day 1 training session of our study design was that it would lead to prominent interference effects, as widely reported in the literature [10-12]. Thus, while we had to take the issue of interference into consideration for our design, the Kornysheva et al. study did not. While Kornysheva et al. aimed to “dissociate ordinal position information from information about the moving effectors”, we tested various untrained sequences on Day 2 allowing us to determine that the contextualization result was specific to the trained sequence. By using this approach, we avoided interference effects on the learning of the primary skill caused by simultaneous acquisition of a second skill.

The revised manuscript states our findings related to the Day 2 Control data in the following locations:

Results (lines 117-122):

“On the following day, participants were retested on performance of the same sequence (4-1-3-2-4) over 9 trials (Day 2 Retest), as well as on the single-trial performance of 9 different untrained control sequences (Day 2 Controls: 2-1-3-4-2, 4-2-4-3-1, 3-4-2-3-1, 1-4-3-4-2, 3-2-4-3-1, 1-4-2-3-1, 3-2-4-2-1, 3-2-1-4-2, and 4-2-3-1-4). As expected, an upward shift in performance of the trained sequence (0.68 ± SD 0.56 keypresses/s; t = 7.21, p < 0.001) was observed during Day 2 Retest, indicative of an overnight skill consolidation effect (Figure 1 – figure supplement 1A).”

Results (lines 212-219):

“Utilizing the highest performing decoders that included LDA-based manifold extraction, we assessed the robustness of hybrid-space decoding over multiple sessions by applying it to data collected on the following day during the Day 2 Retest (9-trial retest of the trained sequence) and Day 2 Control (single-trial performance of 9 different untrained sequences) blocks. The decoding accuracy for Day 2 MEG data remained high (87.11% ± SD 8.54% for the trained sequence during Retest, and 79.44% ± SD 5.54% for the untrained Control sequences; Figure 3 – figure supplement 4). Thus, index finger classifiers constructed using the hybrid decoding approach robustly generalized from Day 1 to Day 2 across trained and untrained keypress sequences.”

Results (lines 269-273):

“On Day 2, incorporating contextual information into the hybrid-space decoder enhanced classification accuracy for the trained sequence only (improving from 87.11% for 4-class to 90.22% for 5-class), while performing at or below-chance levels for the Control sequences (≤ 30.22% ± SD 0.44%). Thus, the accuracy improvements resulting from inclusion of contextual information in the decoding framework were specific for the trained skill sequence.”

As a result, ordinal position information is potentially confounded by the fixed finger transitions around each of the two critical positions (first and fifth press). Across consecutive correct sequences, the first keypress in a given sequence was always preceded by a movement of the index finger (=last movement of the preceding sequence), and followed by a little finger movement. The last keypress, on the other hand, was always preceded by a ring finger movement, and followed by an index finger movement (=first movement of the next sequence). Figure 4 - supplement 2 shows that finger identity can be decoded with high accuracy (>70%) across a large time window around the time of the keypress, up to at least +/-100 ms (and likely beyond, given that decoding accuracy is still high at the boundaries of the window depicted in that figure). This time window approaches the keypress transition times in this study. Given that distinct finger transitions characterized the first and fifth keypress, the classifier could thus rely on persistent (or "lingering") information from the preceding finger movement, and/or "preparatory" information about the subsequent finger movement, in order to dissociate the first and fifth keypress.

Currently, the manuscript provides little evidence that the context information captured by the decoding approach is more than a by-product of temporally extended, and therefore overlapping, but independent neural representations of consecutive keypresses that are executed in close temporal proximity - rather than a neural representation dedicated to context.
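
The "mixing" scenario raised in this comment can be made concrete with a small simulation. This is a hypothetical sketch on synthetic data, not an analysis of the study's MEG recordings: the additive `overlap` weight, the random finger patterns, the noise level, and the nearest-centroid classifier are all assumptions. The simulated signal contains no position code at all; each index finger keypress (finger 4, at positions 1 and 5 of 4-1-3-2-4) carries only its own finger pattern plus a weighted contribution from the preceding and following keypresses.

```python
import numpy as np

SEQUENCE = (4, 1, 3, 2, 4)  # trained sequence; finger 4 occupies positions 1 and 5


def simulate_index_presses(overlap, n_reps, patterns, noise_sd, rng):
    """Index finger presses during consecutive correct sequences, with neighbour leakage."""
    X, y = [], []
    n = len(SEQUENCE)
    for _ in range(n_reps):
        for pos in (0, n - 1):  # first and fifth keypress (0-based indices)
            prev_finger = SEQUENCE[(pos - 1) % n]  # wraps around to the previous sequence
            next_finger = SEQUENCE[(pos + 1) % n]  # wraps around to the next sequence
            signal = patterns[SEQUENCE[pos]] + overlap * (
                patterns[prev_finger] + patterns[next_finger])
            X.append(signal + rng.normal(0.0, noise_sd, signal.shape))
            y.append(pos)
    return np.array(X), np.array(y)


def two_class_accuracy(overlap, seed=0, n_features=100, noise_sd=1.0):
    """Decode first vs. fifth keypress when the signal holds finger information only."""
    rng = np.random.default_rng(seed)
    patterns = {f: rng.normal(size=n_features) for f in (1, 2, 3, 4)}
    X_tr, y_tr = simulate_index_presses(overlap, 200, patterns, noise_sd, rng)
    X_te, y_te = simulate_index_presses(overlap, 200, patterns, noise_sd, rng)
    last = len(SEQUENCE) - 1
    c_first = X_tr[y_tr == 0].mean(axis=0)
    c_last = X_tr[y_tr == last].mean(axis=0)
    d_first = ((X_te - c_first) ** 2).sum(axis=1)
    d_last = ((X_te - c_last) ** 2).sum(axis=1)
    pred = np.where(d_first < d_last, 0, last)  # nearest-centroid decision
    return float((pred == y_te).mean())


acc_no_overlap = two_class_accuracy(overlap=0.0)    # isolated keypress representations
acc_with_overlap = two_class_accuracy(overlap=0.5)  # leakage from neighbouring keypresses
```

Under these assumptions, the two index finger keypresses are indistinguishable when representations do not overlap, and become highly separable once neighbouring keypresses leak into the decoding window, because the neighbours differ between the two positions (index and little finger around position 1, ring and index finger around position 5). The sketch shows only that such overlap would be sufficient in principle to produce above-chance 2-class decoding; it says nothing about whether overlap accounts for the reported results.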

During the review process, the authors pointed out that a "mixing" of temporally overlapping information from consecutive keypresses, as described above, should result in systematic misclassifications and therefore be detectable in the confusion matrices in Figures 3C and 4B, which indeed do not provide any evidence that consecutive keypresses are systematically confused. However, such absence of evidence (of systematic misclassification) should be interpreted with caution, and, of course, provides no evidence of absence. The authors also pointed out that such "mixing" would hamper the discriminability of the two ordinal positions of the index finger, given that "ordinal position 5" is systematically followed by "ordinal position 1". This is a valid point which, however, cannot rule out that "contextualization" nevertheless reflects the described "mixing".

The revised manuscript contains several control analyses which rule out this potential confound.

Results (lines 318-328):

“Within-subject correlations were consistent with these group-level findings. The average correlation between offline contextualization and micro-offline gains within individuals was significantly greater than zero (Figure 5 – figure supplement 4, left; t = 3.87, p = 0.00035, df = 25, Cohen's d = 0.76) and stronger than correlations between online contextualization and either micro-online (Figure 5 – figure supplement 4, middle; t = 3.28, p = 0.0015, df = 25, Cohen's d = 1.2) or micro-offline gains (Figure 5 – figure supplement 4, right; t = 3.7021, p = 5.3013e-04, df = 25, Cohen's d = 0.69). These findings were not explained by behavioral changes of typing rhythm (t = -0.03, p = 0.976; Figure 5 – figure supplement 5), adjacent keypress transition times (R2 = 0.00507, F[1,3202] = 16.3; Figure 5 – figure supplement 6), or overall typing speed (between-subject; R2 = 0.028, p = 0.41; Figure 5 – figure supplement 7).”

Results (lines 385-390):

“Further, the 5-class classifier—which directly incorporated information about the sequence location context of each keypress into the decoding pipeline—improved decoding accuracy relative to the 4-class classifier (Figure 4C). Importantly, testing on Day 2 revealed specificity of this representational differentiation for the trained skill but not for the same keypresses performed during various unpracticed control sequences (Figure 5C).”

Discussion (lines 408-423):

“Offline contextualization consistently correlated with early learning gains across a range of decoding windows (50–250ms; Figure 5 – figure supplement 1). This result remained unchanged when measuring offline contextualization between the last and second sequence of consecutive trials, inconsistent with a possible confounding effect of pre-planning [30] (Figure 5 – figure supplement 2A). On the other hand, online contextualization did not predict learning (Figure 5 – figure supplement 3). Consistent with these results, the average within-subject correlation between offline contextualization and micro-offline gains was significantly stronger than within-subject correlations between online contextualization and either micro-online or micro-offline gains (Figure 5 – figure supplement 4).

Offline contextualization was not driven by trial-by-trial behavioral differences, including typing rhythm (Figure 5 – figure supplement 5) and adjacent keypress transition times (Figure 5 – figure supplement 6) nor by between-subject differences in overall typing speed (Figure 5 – figure supplement 7)—ruling out a reliance on differences in the temporal overlap of keypresses. Importantly, offline contextualization documented on Day 1 stabilized once a performance plateau was reached (trials 11-36), and was retained on Day 2, documenting overnight consolidation of the differentiated neural representations.”

During the review process, the authors responded to my concern that training of a single sequence introduces the potential confound of "mixing" described above, which could have been avoided by training on several sequences, as in Kornysheva et al. (Neuron 2019), by arguing that Day 2 in their study did include control sequences. However, the authors' findings regarding these control sequences are fundamentally different from the findings in Kornysheva et al. (2019), and do not provide any indication of effector-independent ordinal information in the described contextualization - but, actually, the contrary. In Kornysheva et al. (Neuron 2019), ordinal, or positional, information refers purely to the rank of a movement in a sequence. In line with the idea of competitive queuing, Kornysheva et al. (2019) have shown that humans prepare for a motor sequence via a simultaneous representation of several of the upcoming movements, weighted by their rank in the sequence. Importantly, they could show that this gradient carries information that is largely devoid of information about the order of specific effectors involved in a sequence, or their timing, in line with competitive queuing. They showed this by training a classifier to discriminate between the five consecutive movements that constituted one specific sequence of finger movements (five classes: 1st, 2nd, 3rd, 4th, 5th movement in the sequence) and then testing whether that classifier could identify the rank (1st, 2nd, 3rd, etc) of movements in another sequence, in which the fingers moved in a different order, and with different timings. Importantly, this approach demonstrated that the graded representations observed during preparation were largely maintained after this cross decoding, indicating that the sequence was represented via ordinal position information that was largely devoid of information about the specific effectors or timings involved in sequence execution. 
This result differs completely from the findings in the current manuscript. Dash et al. report a drop in detected ordinal position information (degree of contextualization in Figure 5C) when testing for contextualization in their novel, untrained sequences on Day 2, indicating that context and ordinal information as defined in Dash et al. is not at all devoid of information about the specific effectors involved in a sequence. In this regard, a main concern in my public review, as well as in the second reviewer's public review, is that Dash et al. cannot tell apart, by design, whether there is truly contextualization in the neural representation of a sequence (which they claim), or whether their results regarding "contextualization" are explained by what they call "mixing" in their author response, i.e., an overlap of representations of consecutive movements, which Reviewer 2 and I suggested as an alternative explanation.