Towards biologically plausible phosphene simulation for the differentiable optimization of visual cortical prostheses

Curation statements for this article:-

Curated by eLife

eLife assessment

This simulation work with open source code will be of interest to those developing visual prostheses and demonstrates useful improvements over past visual prosthesis simulations. While the authors provide compelling evidence to support the generation of individual phosphenes and integration into deep-learning algorithms, the assumptions beyond individual phosphenes and the overall validation process are inadequate to support the claim of fitting the needs of cortical neuroprosthetic vision development.


Abstract

Blindness affects millions of people around the world. A promising solution for restoring a form of vision in some individuals is offered by cortical visual prostheses, which bypass part of the impaired visual pathway by converting camera input to electrical stimulation of the visual system. The artificially induced visual percept (a pattern of localized light flashes, or ‘phosphenes’) has limited resolution, and a great portion of the field’s research is devoted to optimizing the efficacy, efficiency, and practical usefulness of the encoding of visual information. A commonly exploited method is non-invasive functional evaluation in sighted subjects or with computational models by using simulated prosthetic vision (SPV) pipelines. An important challenge in this approach is to balance enhanced perceptual realism, biological plausibility, and real-time performance in the simulation of cortical prosthetic vision. We present a biologically plausible, PyTorch-based phosphene simulator that can run in real time and uses differentiable operations to allow for gradient-based computational optimization of phosphene encoding models. The simulator integrates a wide range of clinical results with neurophysiological evidence in humans and non-human primates. The pipeline includes a model of the retinotopic organization and cortical magnification of the visual cortex. Moreover, the quantitative effects of stimulation parameters and temporal dynamics on phosphene characteristics are incorporated. Our results demonstrate the simulator’s suitability for both computational applications such as end-to-end deep learning-based prosthetic vision optimization as well as behavioral experiments. The modular and open-source software provides a flexible simulation framework for computational, clinical, and behavioral neuroscientists working on visual neuroprosthetics.

Author Response

Reviewer #1 (Public Review):

The authors present a PyTorch-based simulator for prosthetic vision. The model takes in the anatomical location of a visual cortical prosthesis as well as a series of electrical stimuli to be applied to each electrode, and outputs the resulting phosphenes. To demonstrate the usefulness of the simulator, the paper reproduces psychometric curves from the literature and uses the simulator in the loop to learn optimized stimuli.

One of the major strengths of the paper is its modeling work - the authors make good use of existing knowledge about retinotopic maps and psychometric curves that describe phosphene appearance in response to single-electrode stimulation. Using PyTorch as a backbone is another strength, as it allows for GPU integration and seamless integration with common deep learning models. This work is likely to be impactful for the field of sight restoration.

- However, one of the major weaknesses of the paper is its model validation - while some results seem to be presented for data the model was fit on (as opposed to held-out test data), other results lack quantitative metrics and a comparison to a baseline ("null hypothesis") model. On the one hand, it appears that the data presented in Figs. 3-5 was used to fit some of the open parameters of the model, as mentioned in Subsection G of the Methods. Hence it is misleading to present these as model "predictions", which are typically presented for held-out test data to demonstrate a model's ability to generalize. Instead, this is more of a descriptive model than a predictive one, and its ability to generalize to new patients remains yet to be demonstrated.

We agree that the original presentation of the model fits might give rise to unwanted confusion. In the revision, we have adapted the fit of the thresholding mechanism to include a 3-fold cross-validation, where part of the data was excluded during the fitting and used as a test set to calculate the model’s performance. The results of the cross-validation are now presented in panel D of Figure 3. Fitting the brightness and temporal dynamics parameters using cross-validation was not feasible due to the limited amount of quantitative data describing temporal dynamics and phosphene size and brightness for intracortical electrodes. To avoid confusion, we have adapted the corresponding text and figure captions to specify that we are using a fit as a description of the data.
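To make the held-out evaluation concrete, the following is a minimal sketch of a 3-fold cross-validation of a psychometric threshold fit. It is not the fitting procedure used for the simulator: the logistic detection curve, the grid search, the charge units (nC), and the synthetic data are all assumptions made purely for illustration.

```python
import math
import random

def p_detect(charge, q50, slope):
    """Logistic psychometric curve (illustrative stand-in model):
    probability of perceiving a phosphene at a given charge per phase."""
    return 1.0 / (1.0 + math.exp(-(charge - q50) / slope))

def neg_log_likelihood(data, q50, slope):
    """Summed Bernoulli negative log-likelihood over (charge, seen) pairs."""
    eps = 1e-9
    total = 0.0
    for charge, seen in data:
        p = min(max(p_detect(charge, q50, slope), eps), 1.0 - eps)
        total -= math.log(p) if seen else math.log(1.0 - p)
    return total

def fit(data):
    """Coarse grid search for the (q50, slope) pair with the lowest loss."""
    grid_q50 = [q * 0.5 for q in range(2, 81)]    # 1.0 .. 40.0 (hypothetical nC)
    grid_slope = [s * 0.5 for s in range(1, 21)]  # 0.5 .. 10.0
    return min(((q, s) for q in grid_q50 for s in grid_slope),
               key=lambda qs: neg_log_likelihood(data, qs[0], qs[1]))

def cross_validate(data, k=3, seed=0):
    """Fit on k-1 folds, report mean log-loss on the held-out fold."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    folds = [shuffled[i::k] for i in range(k)]
    scores = []
    for i in range(k):
        test = folds[i]
        train = [d for j, fold in enumerate(folds) if j != i for d in fold]
        q50, slope = fit(train)
        scores.append(neg_log_likelihood(test, q50, slope) / len(test))
    return scores

# Synthetic detection trials (NOT clinical data): true q50 = 20, slope = 3.
rng = random.Random(42)
data = []
for _ in range(300):
    charge = rng.uniform(0.0, 40.0)
    data.append((charge, rng.random() < p_detect(charge, 20.0, 3.0)))

scores = cross_validate(data)
print("held-out log-loss per fold:", scores)
```

A held-out log-loss well below ln 2 (the value obtained by always predicting 0.5) indicates that the fitted curve generalizes to trials it was not fitted on, which is the distinction between a descriptive fit and a prediction that the reviewer asked for.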

We note that the goal of the simulator is not to provide a single set of parameters that describes precise phosphene perception for all patients but that it could also be used to capture variability among patients. Indeed, the model can be tailored to new patients based on a small data set. Figure 3-figure supplement 1 exemplifies how our simulator can be tailored to several data sets collected from patients with surface electrodes. Future clinical experiments might be used to verify how well the simulator can be tailored to the data of other patients.

Specifically, we have made the following changes to the manuscript:

Caption Figure 2: the fitted peak brightness levels reproduced by our model

Caption Figure 3: The model's probability of phosphene perception is visualized as a function of charge per phase

Caption Figure 3: Predicted probabilities in panel (d) are the results of a 3-fold cross-validation on held-out test data.

Line 250: we included biologically inspired methods to model the perceptual effects of different stimulation parameters

Line 271: Each frame, the simulator maps electrical stimulation parameters (stimulation current, pulse width and frequency) to an estimated phosphene perception

Lines 335-336: such that 95% of the Gaussian falls within the fitted phosphene size.

Line 469-470: Figure 4 displays the simulator's fit on the temporal dynamics found in a previous published study by Schmidt et al. (1996).

Lines 922-925: Notably, the trade-off between model complexity and accurate psychophysical fits or predictions is a recurrent theme in the validation of the components implemented in our simulator.

- On the other hand, the results presented in Fig. 8 as part of the end-to-end learning process are not accompanied by any sort of quantitative metrics or comparison to a baseline model.

We now realize that the presentation of the end-to-end results might have given the impression that we present novel image processing strategies. However, the development of a novel image processing strategy is outside the scope of the study. Instead, the study aims to provide an improved simulation which can be used for more realistic assessment of different stimulation protocols. The simulator needs to fit experimental data, and it should run fast (so it can be used in behavioral experiments). Importantly, as demonstrated in our end-to-end experiments, the model can be used in differentiable programming pipelines (so it can be used in computational optimization experiments), which is a valuable contribution in itself because it lends itself to many machine learning approaches which can improve the realism of the simulation.
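What a differentiable simulation step makes possible can be shown with a toy example: if the mapping from stimulation to percept is differentiable, a stimulation parameter can be tuned by gradient descent on a perceptual loss. The sketch below is an assumption-laden illustration, not the simulator's model: the sigmoid brightness curve, its constants, and the hand-derived gradient are hypothetical (in a PyTorch pipeline the gradient would come from automatic differentiation).

```python
import math

# Hypothetical constants of a toy current-to-brightness curve (in microamperes).
HALF_POINT_UA = 50.0
SLOPE_UA = 10.0

def brightness(current_ua):
    """Toy differentiable mapping from stimulation current to brightness in [0, 1]."""
    return 1.0 / (1.0 + math.exp(-(current_ua - HALF_POINT_UA) / SLOPE_UA))

def loss_and_grad(current_ua, target):
    """Squared error to a target brightness and its derivative w.r.t. current."""
    b = brightness(current_ua)
    loss = (b - target) ** 2
    # Chain rule: dL/dI = 2 (b - target) * db/dI, with db/dI = b (1 - b) / slope.
    grad = 2.0 * (b - target) * b * (1.0 - b) / SLOPE_UA
    return loss, grad

def optimize_current(target, start_ua=30.0, learning_rate=200.0, steps=500):
    """Plain gradient descent on the stimulation current."""
    current = start_ua
    for _ in range(steps):
        _, grad = loss_and_grad(current, target)
        current -= learning_rate * grad
    return current

optimized_current = optimize_current(target=0.8)
print(optimized_current, brightness(optimized_current))
```

The same principle scales to an encoder network with many parameters, where the loss is computed on the simulated phosphene image rather than on a single brightness value.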

We have rephrased our study aims in the discussion to improve clarity.

Lines 275-279: In the sections below, we discuss the different components of the simulator model, followed by a description of some showcase experiments that assess the ability to fit recent clinical data and the practical usability of our simulator in simulation experiments

Lines 810-814: Computational optimization approaches can also aid in the development of safe stimulation protocols, because they allow a faster exploration of the large parameter space and enable task-driven optimization of image processing strategies (Granley et al., 2022; Fauvel et al., 2022; White et al., 2019; Küçükoglü et al. 2022; de Ruyter van Steveninck et al., 2022; Ghaffari et al., 2021).

Lines 814-819: Ultimately, the development of task-relevant scene-processing algorithms will likely benefit both from computational optimization experiments as well as exploratory SPV studies with human observers. With the presented simulator we aim to contribute a flexible toolkit for such experiments.

Lines 842-853: Eventually, the functional quality of the artificial vision will not only depend on the correspondence between the visual environment and the phosphene encoding, but also on the implant recipient's ability to extract that information into a usable percept. The functional quality of end-to-end generated phosphene encodings in daily life tasks will need to be evaluated in future experiments. Regardless of the implementation, it will always be important to include human observers (both sighted experimental subjects and actual prosthetic implant users) in the optimization cycle to ensure subjective interpretability for the end user (Fauvel et al., 2022; Beyeler & Sanchez-Garcia, 2022).

- The results seem to assume that all phosphenes are small Gaussian blobs, and that these phosphenes combine linearly when multiple electrodes are stimulated. Both assumptions are frequently challenged by the field. For all these reasons, it is challenging to assess the potential and practical utility of this approach as well as get a sense of its limitations.

The reviewer raises a valid point and a similar point was raised by a different reviewer (our response is duplicated). As pointed out in the discussion, many aspects about multi-electrode phosphene perception are still unclear. On the one hand, the literature is in agreement that there is some degree of predictability: some papers explicitly state that phosphenes produced by multiple patterns are generally additive (Dobelle & Mladejovsky, 1974), that the locations are predictable (Bosking et al., 2018) and that multi-electrode stimulation can be used to generate complex, interpretable patterns of phosphenes (Chen et al., 2020, Fernández et al., 2021). On the other hand, however, in some cases, the stimulation of multiple electrodes is reported to lead to brighter phosphenes (Fernández et al., 2021), fused or displaced phosphenes (Schmidt et al., 1996, Bak et al., 1990) or unpredicted phosphene patterns (Fernández et al., 2021). It is likely that the probability of these interference patterns decreases when the distance between the stimulated electrodes increases. An empirical finding is that the critical distance for intracortical stimulation is approximately 1 mm (Ghose & Maunsell, 2012).

We note that our simulator is not restricted to the simulation of linearly combined Gaussian blobs. Some irregularities, such as elongated phosphene shapes were already supported in the previous version of our software. Furthermore, we added a supplementary figure that displays a possible approach to simulate some of the more complex electrode interactions that are reported in the literature, with only minor adaptations to the code. Our study thereby aims to present a flexible simulation toolkit that can be adapted to the needs of the user.

Adjustments:

Added Figure 1-figure supplement 3 on irregular phosphene percepts.

Lines 957-970: Furthermore, in contrast to the assumptions of our model, interactions between simultaneous stimulation of multiple electrodes can have an effect on the phosphene size and sometimes lead to unexpected percepts (Fernández et al., 2021, Dobelle & Mladejovsky 1974, Bak et al., 1990). Although our software supports basic exploratory experimentation of non-linear interactions (see Figure 1-figure supplement 3), by default, our simulator assumes independence between electrodes. Multi-phosphene percepts are modeled using linear summation of the independent percepts. These assumptions seem to hold for intracortical electrodes separated by more than 1 mm (Ghose & Maunsell, 2012), but may underestimate the complexities observed when electrodes are nearer. Further clinical and theoretical modeling work could help to improve our understanding of these non-linear dynamics.
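The default independence assumption described above can be sketched in a few lines: each electrode contributes its own Gaussian blob, and the multi-electrode percept is the pixel-wise sum. This is a simplified illustration in plain Python rather than the simulator's PyTorch code; the function names, the image size, and the phosphene parameters are hypothetical.

```python
import math

def gaussian_blob(size, cx, cy, sigma, peak):
    """Image (list of rows) containing one isotropic Gaussian phosphene."""
    return [[peak * math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))
             for x in range(size)]
            for y in range(size)]

def render(size, phosphenes):
    """Linear summation of independent percepts.

    `phosphenes` is a list of (cx, cy, sigma, peak) tuples in pixel units.
    No interaction term is applied, so nearby phosphenes simply add up.
    """
    image = [[0.0] * size for _ in range(size)]
    for cx, cy, sigma, peak in phosphenes:
        blob = gaussian_blob(size, cx, cy, sigma, peak)
        for y in range(size):
            for x in range(size):
                image[y][x] += blob[y][x]
    return image

# Two well-separated phosphenes and one that overlaps the second.
example = [(8, 8, 2.0, 0.7), (20, 20, 3.0, 0.4), (22, 20, 3.0, 0.4)]
image = render(32, example)
print(max(max(row) for row in image))
```

Under this scheme, overlapping phosphenes merge into a single brighter region only through addition. A non-linear interaction (for example fusion or displacement of neighbouring phosphenes) would have to be introduced as an explicit extra step before or after the summation.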

- Another weakness of the paper is the term "biologically plausible", which appears throughout the manuscript but is not clearly defined. In its current form, it is not clear what makes this simulator "biologically plausible" - it certainly contains a retinotopic map and is fit on psychophysical data, but it does not seem to contain any other "biological" detail.

We thank the reviewer for the remark. We improved our description of what makes the simulator “biologically plausible” in the introduction (line 78): “Biological plausibility, in our work's context, points to the simulation's ability to capture essential biological features of the visual system in a manner consistent with empirical findings: our simulator integrates quantitative findings and models from the literature on cortical stimulation in V1 [...]”. In addition, we mention in the discussion (lines 611 - 621): “The aim of this study is to present a biologically plausible phosphene simulator, which takes realistic ranges of stimulation parameters, and generates a phenomenologically accurate representation of phosphene vision using differentiable functions. In order to achieve this, we have modeled and incorporated an extensive body of work regarding the psychophysics of phosphene perception. From the results presented in section H, we observe that our simulator is able to produce phosphene percepts that match the descriptions of phosphene vision that were gathered in basic and clinical visual neuroprosthetics studies over the past decades.”

- In fact, for the most part the paper seems to ignore the fact that implanting a prosthesis in one cerebral hemisphere will produce phosphenes that are restricted to one half of the visual field. Yet Figures 6 and 8 present phosphenes that seemingly appear in both hemifields. I do not find this very "biologically plausible".

We agree with the reviewer that contemporary experiments with implantable electrodes usually test electrodes in a single hemisphere. However, future clinically useful approaches should use bilaterally implanted electrode arrays. Our simulator can present phosphene locations in either one or both hemifields.

We have made the following textual changes:

Fig. 1 caption: Example renderings after initializing the simulator with four 10 × 10 electrode arrays (indicated with roman numerals) placed in the right hemisphere (electrode spacing: 4 mm, in correspondence with the commonly used 'Utah array' (Maynard et al., 1997)).

Line 518-525: The simulator is initialized with 1000 possible phosphenes in both hemifields, covering a field of view of 16 degrees of visual angle. Note that the simulated electrode density and placement differs from current prototype implants and the simulation can be considered to be an ambitious scenario from a surgical point of view, given the folding of the visual cortex and the part of the retinotopic map in V1 that is buried in the calcarine sulcus.

Line 546-547: with the same phosphene coverage as the previously described experiment

Reviewer #2 (Public Review):

Van der Grinten and De Ruyter van Steveninck et al. present a design for simulating cortical-visual-prosthesis phosphenes that emphasizes features important for optimizing the use of such prostheses. The characteristics of simulated individual phosphenes were shown to agree well with data published from the use of cortical visual prostheses in humans. By ensuring that functions used to generate the simulations were differentiable, the authors permitted and demonstrated integration of the simulations into deep-learning algorithms. In concept, such algorithms could thereby identify parameters for translating images or videos into stimulation sequences that would be most effective for artificial vision. There are, however, limitations to the simulation that will limit its applicability to current prostheses.

The verification of how phosphenes are simulated for individual electrodes is very compelling. Visual-prosthesis simulations often do ignore the physiologic foundation underlying the generation of phosphenes. The authors' simulation takes into account how stimulation parameters contribute to phosphene appearance and show how that relationship can fit data from actual implanted volunteers. This provides an excellent foundation for determining optimal stimulation parameters with reasonable confidence in how parameter selections will affect individual-electrode phosphenes.

We thank the reviewer for these supportive comments.

Issues with the applicability and reliability of the simulation are detailed below:

- The utility of this simulation design, as described, unfortunately breaks down beyond the scope of individual electrodes. To model the simultaneous activation of multiple electrodes, the authors' design linearly adds individual-electrode phosphenes together. This produces relatively clean collections of dots that one could think of as pixels in a crude digital display. Modeling phosphenes in such a way assumes that each electrode and the network it activates operate independently of other electrodes and their neuronal targets. Unfortunately, as the authors acknowledge and as noted in the studies they used to fit and verify individual-electrode phosphene characteristics, simultaneous stimulation of multiple electrodes often obscures features of individual-electrode phosphenes and can produce unexpected phosphene patterns. This simulation does not reflect these nonlinearities in how electrode activations combine. Nonlinearities in electrode combinations can be as subtle as the phosphenes becoming brighter while still remaining distinct, or as problematic as generating only a single small phosphene that is indistinguishable from the activation of a subset of the electrodes activated, or that of a single electrode.

If a visual prosthesis happens to generate some phosphenes that can be elicited independently, a simulator of this type could perhaps be used by processing stimulation from independent groups of electrodes and adding their phosphenes together in the visual field.

The reviewer raises a valid point and a similar point was raised by a different reviewer (our response is duplicated). As pointed out in the discussion, many aspects about multi-electrode phosphene perception are still unclear. On the one hand, the literature is in agreement that there is some degree of predictability: some papers explicitly state that phosphenes produced by multiple patterns are generally additive (Dobelle & Mladejovsky, 1974), that the locations are predictable (Bosking et al., 2018) and that multi-electrode stimulation can be used to generate complex, interpretable patterns of phosphenes (Chen et al., 2020, Fernández et al., 2021). On the other hand, however, in some cases, the stimulation of multiple electrodes is reported to lead to brighter phosphenes (Fernández et al., 2021), fused or displaced phosphenes (Schmidt et al., 1996, Bak et al., 1990) or unpredicted phosphene patterns (Fernández et al., 2021). It is likely that the probability of these interference patterns decreases when the distance between the stimulated electrodes increases. An empirical finding is that the critical distance for intracortical stimulation is approximately 1 mm (Ghose & Maunsell, 2012).

We note that our simulator is not restricted to the simulation of linearly combined Gaussian blobs. Some irregularities, such as elongated phosphene shapes were already supported in the previous version of our software. Furthermore, we added a supplementary figure that displays a possible approach to simulate some of the more complex electrode interactions that are reported in the literature, with only minor adaptations to the code. Our study thereby aims to present a flexible simulation toolkit that can be adapted to the needs of the user.

Adjustments:

Lines 957-970: Furthermore, in contrast to the assumptions of our model, interactions between simultaneous stimulation of multiple electrodes can have an effect on the phosphene size and sometimes lead to unexpected percepts (Fernández et al., 2021, Dobelle & Mladejovsky 1974, Bak et al., 1990). Although our software supports basic exploratory experimentation of non-linear interactions (see Figure 1-figure supplement 3), by default, our simulator assumes independence between electrodes. Multi-phosphene percepts are modeled using linear summation of the independent percepts. These assumptions seem to hold for intracortical electrodes separated by more than 1 mm (Ghose & Maunsell, 2012), but may underestimate the complexities observed when electrodes are nearer. Further clinical and theoretical modeling work could help to improve our understanding of these non-linear dynamics.

Added Figure 1-figure supplement 3 on irregular phosphene percepts.

- Verification of how the simulation renders individual phosphenes based on stimulation parameters is an important step in confirming agreement between the simulation and the function of implanted devices. That verification was well demonstrated. The end use of a visual-prosthesis simulation, however, would likely not be optimizing just the appearance of phosphenes, but predicting and optimizing functional performance in visual tasks. Investigating whether this simulator can suggest visual-task performance, either with sighted volunteers or a decoder model, that is similar to published task performance from visual-prosthesis implantees would be a necessary step for true validation.

We agree with the reviewer that it will be vital to investigate the utility of the simulator in tasks. However, the literature on the performance of users of a cortical prosthesis in visually-guided tasks is scarce, making it difficult to compare task performance between simulated versus real prosthetic vision.

Secondly, the main objective of the current study is to propose a simulator that emulates the sensory / perceptual experience, i.e. the low-level perceptual correspondence. Once more behavioral data from prosthetic users become available, studies can use the simulator to make these comparisons.

Regarding the comparison to simulated prosthetic vision in sighted volunteers, there are some fundamental limitations. For instance, sighted subjects are exposed for a shorter duration to the (simulated) artificial percept and lack the experience and training that prosthesis users get. Furthermore, sighted subjects may be unfamiliar with compensation strategies that blind individuals have developed. It will therefore be important to conduct clinical experiments.

To convey more clearly that our experiments are performed to verify the practical usability in future behavioral experiments, we have incorporated the following textual adjustments:

Lines 275-279: In the sections below, we discuss the different components of the simulator model, followed by a description of some showcase experiments that assess the ability to fit recent clinical data and the practical usability of our simulator in simulation experiments.

Lines 842-853: Eventually, the functional quality of the artificial vision will not only depend on the correspondence between the visual environment and the phosphene encoding, but also on the implant recipient's ability to extract that information into a usable percept. The functional quality of end-to-end generated phosphene encodings in daily life tasks will need to be evaluated in future experiments. Regardless of the implementation, it will always be important to include human observers (both sighted experimental subjects and actual prosthetic implant users) in the optimization cycle to ensure subjective interpretability for the end user (Fauvel et al., 2022; Beyeler & Sanchez-Garcia, 2022).

- A feature of this simulation is being able to convert stimulation of V1 to phosphenes in the visual field. If used, this feature would likely only be able to simulate a subset of phosphenes generated by a prosthesis. Much of V1 is buried within the calcarine sulcus, and electrode placement within the calcarine sulcus is not currently feasible. As a result, stimulation of visual cortex typically involves combinations of the limited portions of V1 that lie outside the sulcus and higher visual areas, such as V2.

We agree that some areas (most notably the calcarine sulcus) are difficult to access in a surgical implantation procedure. A realistic simulation of state-of-the-art cortical stimulation should only partially cover the visual field with phosphenes. However, it may be predicted that some of these challenges will be addressed by new technologies. We chose to make the simulator as generally applicable as possible, and users of the simulator can decide which phosphene locations are simulated. To demonstrate that our simulator can be flexibly initialized to simulate specific implantation locations using third-party software, we have now added a supplementary figure (Figure 1-figure supplement 1) that displays a demonstration of an electrode grid placement on a 3D brain model, generating the phosphene locations from receptive field maps. However, the simulator is general and can also be used to guide future strategies that aim to, e.g., cover the entire field with electrodes or compare performance between upper and lower hemifields.

Reviewer #3 (Public Review):

The authors are presenting a new simulation for artificial vision that incorporates many recent advances in our understanding of the neural response to electrical stimulation, specifically within the field of visual prosthetics. The authors succeed in integrating multiple results from other researchers on aspects of V1 response to electrical stimulation to create a system that more accurately models V1 activation in a visual prosthesis than other simulators. The authors then attempt to demonstrate the value of such a system by adding a decoding stage and using machine-learning techniques to optimize the system to various configurations.

- While there is merit to being able to apply various constraints (such as maximum current levels) and have the system attempt to find a solution that maximizes recoverable information, the interpretability of such encodings to a hypothetical recipient of such a system is not addressed. The authors demonstrate that they are able to recapitulate various standard encodings through this automated mechanism, but the advantages to using it as opposed to mechanisms that directly detect and encode, e.g., edges, are insufficiently justified.

We thank the reviewer for this constructive remark. Our simulator is designed for more realistic assessment of different stimulation protocols in behavioral experiments or in computational optimization experiments. The presented end-to-end experiments are a demonstration of the practical usability of our simulator in computational experiments, building on a previously existing line of research. In fact, our simulator is compatible with any arbitrary encoding strategy.

As our paper is focused on the development of a novel tool for this existing line of research, we do not aim to make claims about the functional quality of end-to-end encoders compared to alternative encoding methods (such as edge detection). That said, we agree with the reviewer that it is useful to discuss the benefits of end-to-end optimization compared to, e.g., edge detection.

We have incorporated several textual changes to give a more nuanced overview and to acknowledge that many benefits remain to be tested. Furthermore, we have restated our study aims more clearly in the discussion to clarify the distinction between the goals of the current paper and the various encoding strategies that remain to be tested.

Lines 275-279: In the sections below, we discuss the different components of the simulator model, followed by a description of some showcase experiments that assess the ability to fit recent clinical data and the practical usability of our simulator in simulation experiments

Lines 810-814: Computational optimization approaches can also aid in the development of safe stimulation protocols, because they allow a faster exploration of the large parameter space and enable task-driven optimization of image processing strategies (Granley et al., 2022; Fauvel et al., 2022; White et al., 2019; Küçükoglü et al. 2022; de Ruyter van Steveninck, Güçlü et al., 2022; Ghaffari et al., 2021).

Lines 842-853: Eventually, the functional quality of the artificial vision will not only depend on the correspondence between the visual environment and the phosphene encoding, but also on the implant recipient's ability to extract that information into a usable percept. The functional quality of end-to-end generated phosphene encodings in daily life tasks will need to be evaluated in future experiments. Regardless of the implementation, it will always be important to include human observers (both sighted experimental subjects and actual prosthetic implant users) in the optimization cycle to ensure subjective interpretability for the end user (Fauvel et al., 2022; Beyeler & Sanchez-Garcia, 2022).

- The authors make a few mistakes in their interpretation of biological mechanisms, and the introduction lacks appropriate depth of review of existing literature, giving the reader the mistaken impression that this simulator is the only attempt ever made at biologically plausible simulation, rather than merely the most recent refinement that builds on decades of work across the field.

We thank the reviewer for this insight. We have improved the coverage of the previous literature to give credit where credit is due, and to address the long history of simulated phosphene vision.

Textual changes:

Lines 64-70: Although the aforementioned SPV literature has provided us with major fundamental insights, the perceptual realism of electrically generated phosphenes and some aspects of the biological plausibility of the simulations can be further improved by integrating existing knowledge of phosphene vision and its underlying physiology.

Lines 164-190: The aforementioned studies used varying degrees of simplification of phosphene vision in their simulations. For instance, many included equally-sized phosphenes that were uniformly distributed over the visual field (informally referred to as the ‘scoreboard model’). Furthermore, most studies assumed either full control over phosphene brightness or used binary levels of brightness (e.g. 'on' / 'off'), but did not provide a description of the associated electrical stimulation parameters. Several studies have explicitly made steps towards more realistic phosphene simulations, by taking into account cortical magnification or using visuotopic maps (Fehervari et al., 2010; Li et al., 2013; Srivastava et al., 2009; Paraskevoudi et al., 2021), simulating noise and electrode dropout (Dagnelie et al., 2007), or using varying levels of brightness (Vergnieux et al., 2017; Sanchez-Garcia et al., 2022; Parikh et al., 2013). However, no phosphene simulations have modeled temporal dynamics or provided a description of the parameters used for electrical stimulation. Some recent studies developed descriptive models of the phosphene size or brightness as a function of the stimulation parameters (Winawer et al., 2016; Bosking et al., 2017). Another very recent study has developed a deep-learning based model for predicting a realistic phosphene percept for single stimulating electrodes (Granley et al., 2022). These studies have made important contributions to improve our understanding of the effects of different stimulation parameters. The present work builds on these previous insights to provide a full simulation model that can be used for the functional evaluation of cortical visual prosthetic systems.

Lines 137-140: Due to the cortical magnification (the foveal information is represented by a relatively large surface area in the visual cortex as a result of variation of retinal RF size) the size of the phosphene increases with its eccentricity (Winawer & Parvizi, 2016, Bosking et al., 2017).
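The relationship between eccentricity and phosphene size can be illustrated numerically. The sketch below is not taken from the manuscript: it assumes an inverse-linear cortical magnification of the form M(e) = k / (e + a), with illustrative constants k = 17.3 mm and a = 0.75 degrees, and a hypothetical fixed spread of cortical activation (`spread_mm`). The function names are likewise assumptions made for this example.

```python
def cortical_magnification(ecc_deg: float, k: float = 17.3, a: float = 0.75) -> float:
    """Millimetres of cortex per degree of visual field at a given eccentricity.

    Assumed inverse-linear form M(e) = k / (e + a); k and a are illustrative.
    """
    if ecc_deg < 0:
        raise ValueError("eccentricity must be non-negative")
    return k / (ecc_deg + a)


def phosphene_size_deg(ecc_deg: float, spread_mm: float = 1.0) -> float:
    """Approximate phosphene diameter in degrees of visual angle.

    A fixed spread of activated cortex (spread_mm, hypothetical) covers more
    visual angle where the magnification is low, i.e. in the periphery.
    """
    return spread_mm / cortical_magnification(ecc_deg)


if __name__ == "__main__":
    for ecc in (0.0, 2.0, 10.0, 30.0):
        print(f"eccentricity {ecc:5.1f} deg -> size {phosphene_size_deg(ecc):.3f} deg")
```

Under these assumptions the same cortical activation yields a larger phosphene at larger eccentricities, which is the qualitative effect described in the quoted passage.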

Lines 883-893: Even after loss of vision, the brain integrates eye movements for the localization of visual stimuli (Reuschel et al., 2012), and in cortical prostheses the position of the artificially induced percept will shift along with eye movements (Brindley & Lewin, 1968; Schmidt et al., 1996). Therefore, in prostheses with a head-mounted camera, misalignment between the camera orientation and the pupillary axes can induce localization problems (Caspi et al., 2018; Paraskevoudi & Pezaris, 2019; Sabbah et al., 2014; Schmidt et al., 1996). Previous SPV studies have demonstrated that eye-tracking can be implemented to simulate the gaze-coupled perception of phosphenes (Cha et al., 1992; Sommerhalder et al., 2004; Dagnelie et al., 2006; McIntosh et al., 2013; Paraskevoudi & Pezaris, 2021; Rassia & Pezaris, 2018; Titchener et al., 2018; Srivastava et al., 2009).

- The authors have importantly not included gaze position compensation which adds more complexity than the authors suggest it would, and also means the simulator lacks a basic, fundamental feature that strongly limits its utility.

We agree with the reviewer that the inclusion of gaze position to simulate gaze-centered phosphene locations is an important requirement for a realistic simulation. We have made several textual adjustments to section M1 to improve the clarity of the explanation and we have added several references to address the simulation literature that took eye movements into account.

In addition, we included a link to some demonstration videos in which we illustrate that the simulator can be used for gaze-centered phosphene simulation. The simulation models the phosphene locations based on the gaze direction, and updates the input with changes in the gaze direction. The stimulation pattern is chosen to encode the visual environment at the location where the gaze is directed. Gaze-contingent processing has been implemented in prior simulation studies (for instance: Paraskevoudi et al., 2021; Rassia et al., 2018; Titchener et al., 2018) and even in the clinical setting with users of the Argus II implant (Caspi et al., 2018). From a modeling perspective, it is relatively straightforward to simulate gaze-centered phosphene locations and gaze-contingent image processing (our code will be made publicly available). At the same time, however, seen from a clinical and hardware engineering perspective, the implementation of eye-tracking in a prosthetic system for blind individuals might come with additional complexities. This is now acknowledged explicitly in the manuscript.

Textual adjustment:

Lines 883-910: Even after loss of vision, the brain integrates eye movements for the localization of visual stimuli (Reuschel et al., 2012), and in cortical prostheses the position of the artificially induced percept will shift along with eye movements (Brindley & Lewin, 1968; Schmidt et al., 1996). Therefore, in prostheses with a head-mounted camera, misalignment between the camera orientation and the pupillary axes can induce localization problems (Caspi et al., 2018; Paraskevoudi & Pezaris, 2019; Sabbah et al., 2014; Schmidt et al., 1996). Previous SPV studies have demonstrated that eye-tracking can be implemented to simulate the gaze-coupled perception of phosphenes (Cha et al., 1992; Sommerhalder et al., 2004; Dagnelie et al., 2006; McIntosh et al., 2013; Paraskevoudi et al., 2021; Rassia et al., 2018; Titchener et al., 2018; Srivastava et al., 2009). Note that some of the cited studies implemented a simulation condition where not only the simulated phosphene locations, but also the stimulation protocol depended on the gaze direction. More specifically, instead of representing the head-centered camera input, the stimulation pattern was chosen to encode the external environment at the location where the gaze was directed. While further research is required, there is some preliminary evidence that such gaze-contingent image processing can improve the functional and subjective quality of prosthetic vision (Caspi et al., 2018; Paraskevoudi et al., 2021; Rassia et al., 2018; Titchener et al., 2018). Some example videos of gaze-contingent simulated prosthetic vision can be retrieved from our repository (https://github.com/neuralcodinglab/dynaphos/blob/main/examples/). Note that an eye-tracker will be required to produce gaze-contingent image processing in visual prostheses and there might be unforeseen complexities in the clinical implementation thereof. The study of oculomotor behavior in blind individuals (with or without a visual prosthesis) is still an ongoing line of research (Caspi et al., 2018; Kwon et al., 2013; Sabbah et al., 2014; Hafed et al., 2016).
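To make the idea of gaze-contingent image processing concrete, the following minimal sketch selects the part of a camera frame around the current gaze position, so that the stimulation pattern would encode the region the user is looking at rather than the center of the head-mounted camera image. It is an illustration only and does not reproduce the code in the repository: the function name `gaze_contingent_crop`, the list-of-rows frame representation, and the border-clamping behavior are all assumptions.

```python
def gaze_contingent_crop(frame, gaze_xy, size):
    """Return a size x size window of `frame` centered on the gaze position.

    frame   : list of rows (each row a list of pixel values)
    gaze_xy : (x, y) gaze position in pixel coordinates of the camera frame
    size    : side length of the square window passed on to the encoder

    The window is shifted inward when the gaze is near the image border,
    so the output always has the requested size (an assumed design choice).
    """
    height, width = len(frame), len(frame[0])
    if size > height or size > width:
        raise ValueError("window is larger than the frame")
    gaze_x, gaze_y = gaze_xy
    half = size // 2
    # Clamp the top-left corner so the window stays inside the frame.
    left = min(max(int(round(gaze_x)) - half, 0), width - size)
    top = min(max(int(round(gaze_y)) - half, 0), height - size)
    return [row[left:left + size] for row in frame[top:top + size]]


if __name__ == "__main__":
    # Toy 6 x 8 frame whose pixel value encodes its own position (10*row + col).
    frame = [[10 * r + c for c in range(8)] for r in range(6)]
    for row in gaze_contingent_crop(frame, gaze_xy=(4, 3), size=4):
        print(row)
```

In a full pipeline the cropped window would be handed to the encoder on every frame, with `gaze_xy` supplied by an eye-tracker; the clinical complexities of obtaining that signal are outside the scope of this sketch.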

- Finally, the computational capacity required to run the described system is substantial and is not one that would plausibly be used as part of an actual device, suggesting that there may be difficulties with converting results from this simulator to an implantable system.

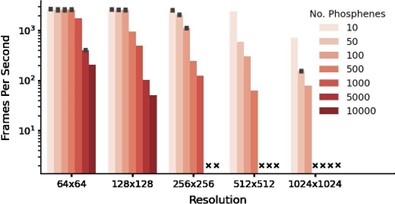

The software runs in real time with affordable, consumer-grade hardware. In Author response image 1 we present the results of performance testing with a 2016 model MSI GeForce GTX 1080 (priced around €600).

Author response image 1.

Note that the GPU is used only for the computation and rendering of the phosphene representations from given electrode stimulation patterns, which will never be part of any prosthetic device. The choice of encoder to generate the stimulation patterns will determine the required processing capacity that needs to be included in the prosthetic system, which is unrelated to the simulator’s requirements.

The following addition was made to the text:

- Lines 488-492: Notably, even on a consumer-grade GPU (e.g. a 2016 model GeForce GTX 1080) the simulator still reaches real-time processing speeds (>100 fps) for simulations with 1000 phosphenes at 256x256 resolution.

- With all of that said, the results do represent an advance, and one that could have wider impact if the authors were to reduce the computational requirements, and add gaze correction.

We appreciate the kind compliment from the reviewer and sincerely hope that our revised manuscript meets their expectations. Their feedback has been critical to reshape and improve this work.

-

eLife assessment

This simulation work with open source code will be of interest to those developing visual prostheses and demonstrates useful improvements over past visual prosthesis simulations. While the authors provide compelling evidence to support the generation of individual phosphenes and integration into deep-learning algorithms, the assumptions beyond individual phosphenes and the overall validation process are inadequate to support the claim of fitting the needs of cortical neuroprosthetic vision development.

-

Reviewer #1 (Public Review):

The authors present a PyTorch-based simulator for prosthetic vision. The model takes in the anatomical location of a visual cortical prostheses as well as a series of electrical stimuli to be applied to each electrode, and outputs the resulting phosphenes. To demonstrate the usefulness of the simulator, the paper reproduces psychometric curves from the literature and uses the simulator in the loop to learn optimized stimuli.

One of the major strengths of the paper is its modeling work - the authors make good use of existing knowledge about retinotopic maps and psychometric curves that describe phosphene appearance in response to single-electrode stimulation. Using PyTorch as a backbone is another strength, as it allows for GPU integration and seamless integration with common deep learning models. This work is likely to be impactful for the field of sight restoration.

However, one of the major weaknesses of the paper is its model validation - while some results seem to be presented for data the model was fit on (as opposed to held-out test data), other results lack quantitative metrics and a comparison to a baseline ("null hypothesis") model.

- On the one hand, it appears that the data presented in Figs. 3-5 was used to fit some of the open parameters of the model, as mentioned in Subsection G of the Methods. Hence it is misleading to present these as model "predictions", which are typically presented for held-out test data to demonstrate a model's ability to generalize. Instead, this is more of a descriptive model than a predictive one, and its ability to generalize to new patients remains yet to be demonstrated.

- On the other hand, the results presented in Fig. 8 as part of the end-to-end learning process are not accompanied by any sort of quantitative metrics or comparison to a baseline model. The results seem to assume that all phosphenes are small Gaussian blobs, and that these phosphenes combine linearly when multiple electrodes are stimulated. Both assumptions are frequently challenged by the field. For all these reasons, it is challenging to assess the potential and practical utility of this approach as well as get a sense of its limitations.

Another weakness of the paper is the term "biologically plausible", which appears throughout the manuscript but is not clearly defined. In its current form, it is not clear what makes this simulator "biologically plausible" - it certainly contains a retinotopic map and is fit on psychophysical data, but it does not seem to contain any other "biological" detail. In fact, for the most part the paper seems to ignore the fact that implanting a prosthesis in one cerebral hemisphere will produce phosphenes that are restricted to one half of the visual field. Yet Figures 6 and 8 present phosphenes that seemingly appear in both hemifields. I do not find this very "biologically plausible".

-

Reviewer #2 (Public Review):

Van der Grinten and De Ruyter van Steveninck et al. present a design for simulating cortical-visual-prosthesis phosphenes that emphasizes features important for optimizing the use of such prostheses. The characteristics of simulated individual phosphenes were shown to agree well with data published from the use of cortical visual prostheses in humans. By ensuring that functions used to generate the simulations were differentiable, the authors permitted and demonstrated integration of the simulations into deep-learning algorithms. In concept, such algorithms could thereby identify parameters for translating images or videos into stimulation sequences that would be most effective for artificial vision. There are, however, limitations to the simulation that will limit its applicability to current prostheses.

The verification of how phosphenes are simulated for individual electrodes is very compelling. Visual-prosthesis simulations often do ignore the physiologic foundation underlying the generation of phosphenes. The authors' simulation takes into account how stimulation parameters contribute to phosphene appearance and show how that relationship can fit data from actual implanted volunteers. This provides an excellent foundation for determining optimal stimulation parameters with reasonable confidence in how parameter selections will affect individual-electrode phosphenes.

Issues with the applicability and reliability of the simulation are detailed below:

- The utility of this simulation design, as described, unfortunately breaks down beyond the scope of individual electrodes. To model the simultaneous activation of multiple electrodes, the authors' design linearly adds individual-electrode phosphenes together. This produces relatively clean collections of dots that one could think of as pixels in a crude digital display. Modeling phosphenes in such a way assumes that each electrode and the network it activates operate independently of other electrodes and their neuronal targets. Unfortunately, as the authors acknowledge and as noted in the studies they used to fit and verify individual-electrode phosphene characteristics, simultaneous stimulation of multiple electrodes often obscures features of individual-electrode phosphenes and can produce unexpected phosphene patterns. This simulation does not reflect these nonlinearities in how electrode activations combine. Nonlinearities in electrode combinations can be as subtle as the phosphenes becoming brighter while still remaining distinct, or as problematic as generating only a single small phosphene that is indistinguishable from the activation of a subset of the electrodes activated, or that of a single electrode.

If a visual prosthesis happens to generate some phosphenes that can be elicited independently, a simulator of this type could perhaps be used by processing stimulation from independent groups of electrodes and adding their phosphenes together in the visual field.
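The linear-combination assumption discussed here can be stated compactly in code. The sketch below is a simplified illustration, not the simulator's implementation: it assumes isotropic Gaussian phosphenes on a small pixel grid, and the names `gaussian_phosphene` and `render_linear` are hypothetical. Each electrode contributes an independent blob, and the blobs are summed pixel by pixel.

```python
import math


def gaussian_phosphene(size, center_xy, sigma, brightness):
    """Render one isotropic Gaussian blob on a size x size grid (list of rows)."""
    cx, cy = center_xy
    return [
        [
            brightness * math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))
            for x in range(size)
        ]
        for y in range(size)
    ]


def render_linear(size, phosphenes):
    """Sum individual-electrode phosphenes pixel by pixel.

    phosphenes : iterable of (center_xy, sigma, brightness) tuples.
    No interaction term is included: each electrode is treated as independent,
    which is exactly the assumption questioned in the review.
    """
    image = [[0.0] * size for _ in range(size)]
    for center_xy, sigma, brightness in phosphenes:
        blob = gaussian_phosphene(size, center_xy, sigma, brightness)
        for y in range(size):
            for x in range(size):
                image[y][x] += blob[y][x]
    return image


if __name__ == "__main__":
    first = ((4, 4), 1.5, 1.0)
    second = ((10, 9), 2.0, 0.5)
    combined = render_linear(16, [first, second])
    print(f"peak of combined percept: {max(max(row) for row in combined):.3f}")
```

Because the rendering is a plain sum, the percept for any set of electrodes is fully determined by the single-electrode percepts. Capturing the interactions described above would require an additional, data-driven combining rule, which this sketch deliberately omits.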

Verification of how the simulation renders individual phosphenes based on stimulation parameters is an important step in confirming agreement between the simulation and the function of implanted devices. That verification was well demonstrated. The end use of a visual-prosthesis simulation, however, would likely not be optimizing just the appearance of phosphenes, but predicting and optimizing functional performance in visual tasks. Investigating whether this simulator can suggest visual-task performance, either with sighted volunteers or a decoder model, that is similar to published task performance from visual-prosthesis implantees would be a necessary step for true validation.

A feature of this simulation is being able to convert stimulation of V1 to phosphenes in the visual field. If used, this feature would likely only be able to simulate a subset of phosphenes generated by a prosthesis. Much of V1 is buried within the calcarine sulcus, and electrode placement within the calcarine sulcus is not currently feasible. As a result, stimulation of visual cortex typically involves combinations of the limited portions of V1 that lie outside the sulcus and higher visual areas, such as V2.

-

Reviewer #3 (Public Review):

The authors are presenting a new simulation for artificial vision that incorporates many recent advances in our understanding of the neural response to electrical stimulation, specifically within the field of visual prosthetics. The authors succeed in integrating multiple results from other researchers on aspects of V1 response to electrical stimulation to create a system that more accurately models V1 activation in a visual prosthesis than other simulators. The authors then attempt to demonstrate the value of such a system by adding a decoding stage and using machine-learning techniques to optimize the system to various configurations. While there is merit to being able to apply various constraints (such as maximum current levels) and have the system attempt to find a solution that maximizes recoverable information, the interpretability of such encodings to a hypothetical recipient of such a system is not addressed. The authors demonstrate that they are able to recapitulate various standard encodings through this automated mechanism, but the advantages to using it as opposed to mechanisms that directly detect and encode, e.g., edges, are insufficiently justified. The authors make a few mistakes in their interpretation of biological mechanisms, and the introduction lacks appropriate depth of review of existing literature, giving the reader the mistaken impression that this simulator is the only attempt ever made at biologically plausible simulation, rather than merely the most recent refinement that builds on decades of work across the field. The authors have importantly not included gaze position compensation which adds more complexity than the authors suggest it would, and also means the simulator lacks a basic, fundamental feature that strongly limits its utility. Finally, the computational capacity required to run the described system is substantial and is not one that would plausibly be used as part of an actual device, suggesting that there may be difficulties with converting results from this simulator to an implantable system. With all of that said, the results do represent an advance, and one that could have wider impact if the authors were to reduce the computational requirements, and add gaze correction.

-