Interpersonal alignment of neural evidence accumulation to social exchange of confidence

Curation statements for this article:-

Curated by eLife

eLife assessment

In this important study, Esmaily and colleagues investigate the "confidence matching" between two agents and present a useful exploration of its computational and physiological correlates. Further analyses would be helpful to provide a tighter link between fluctuations of confidence, pupil size, EEG response, and computational variables, to delineate the causal relations between these quantities, which are nevertheless incompletely documented at present.

Abstract

Private, subjective beliefs about uncertainty have been found to have idiosyncratic computational and neural substrates, yet humans share such beliefs seamlessly and cooperate successfully. Bringing together decision making under uncertainty and interpersonal alignment in communication, in a discovery plus pre-registered replication design, we examined the neuro-computational basis of the relationship between privately held and socially shared uncertainty. Examining confidence-speed-accuracy trade-off in uncertainty-ridden perceptual decisions under social vs isolated context, we found that shared (i.e. reported confidence) and subjective (inferred from pupillometry) uncertainty dynamically followed social information. An attractor neural network model incorporating social information as top-down additive input captured the observed behavior and demonstrated the emergence of social alignment in virtual dyadic simulations. Electroencephalography showed that social exchange of confidence modulated the neural signature of perceptual evidence accumulation in the central parietal cortex. Our findings offer a neural population model for interpersonal alignment of shared beliefs.

Article activity feed

Author Response

Reviewer #1 (Public Review):

Esmaily and colleagues report two experimental studies in which participants make simple perceptual decisions, either in isolation or in the context of a joint decision-making procedure. In this "social" condition, participants are paired with a partner (in fact, a computer), they learn the decision and confidence of the partner after making their own decision, and the joint decision is made on the basis of the most confident decision between the participant and the partner. The authors found that participants' confidence, response times, pupil dilation, and CPP (i.e. the increase of centro-parietal EEG over time during the decision process) are all affected by the overall confidence of the partner, which was manipulated across blocks in the experiments. They describe a computational model in which decisions result from a competition between two accumulators, and in which the confidence of the partner would be an input to the activity of both accumulators. This model qualitatively produced the variation in confidence and RTs across blocks.

The major strength of this work is that it puts together many ingredients (behavioral data, pupil and EEG signals, computational analysis) to build a picture of how the confidence of a partner, in the context of joint decision-making, would influence our own decision process and confidence evaluations. Many of these effects are well described already in the literature, but putting them all together remains a challenge.

We are grateful for this positive assessment.

However, the construction is fragile in many places: the causal links between the different variables are not firmly established, and it is not clear how pupil and EEG signals mediate the effect of the partner's confidence on the participant's behavior.

We have modified the language of the manuscript to avoid the implication of a causal link.

Finally, one limitation of this setting is that the situation being studied is very specific, with a joint decision that is not the result of an agreement between partners, but the automatic selection of the most confident decisions. Thus, whether the phenomenon of confidence matching also occurs outside of this very specific setting is unclear.

We have now acknowledged this caveat in the discussion in line 485 to 504. The final paragraph of the discussion now reads as follows:

“Finally, one limitation of our experimental setup is that the situation being studied is confined to the design choices made by the experimenters. These choices were made in order to operationalize the problem of social interaction within the psychophysics laboratory. For example, the joint decisions were not made through verbal agreement (Bahrami et al., 2010, 2012). Instead, following a number of previous works (Bang et al., 2017, 2020), joint decisions were automatically assigned to the most confident choice. In addition, the partner’s confidence and choice were random variables drawn from a distribution prespecified by the experimenter and therefore, by design, unresponsive to the participant’s behaviour. In this sense, one may argue that the interaction partner’s behaviour was not “natural” since they did not react to the participant's confidence communications (note however that the partner’s confidence and accuracy were not entirely random but matched carefully to the participant’s behavior prerecorded in the individual session). How much of the findings are specific to this experimental setting and whether the behavior observed here would transfer to real-life settings is an open question. For example, it is plausible that participants may show some behavioral reaction to a human partner’s response time variations since there is some evidence indicating that for binary choices such as those studied here, response times also systematically communicate uncertainty to others (Patel et al., 2012). Future studies could examine the degree to which the results might be paradigm-specific.”

Reviewer #2 (Public Review):

This study is impressive in several ways and will be of interest to behavioral and brain scientists working on diverse topics.

First, from a theoretical point of view, it very convincingly integrates several lines of research (confidence, interpersonal alignment, psychophysical, and neural evidence accumulation) into a mechanistic computational framework that explains the existing data and makes novel predictions that can inspire further research. It is impressive to read that the corresponding model can account for rather non-intuitive findings, such as that information about high confidence by your collaborators means people are faster but not more accurate in their judgements.

Second, from a methodical point of view, it combines several sophisticated approaches (psychophysical measurements, psychophysical and neural modelling, electrophysiological and pupil measurements) in a manner that draws on their complementary strengths and that is most compelling (but see further below for some open questions). The appeal of the study in that respect is that it combines these methods in creative ways that allow it to answer its specific questions in a much more convincing manner than if it had used just either of these approaches alone.

Third, from a computational point of view, it proposes several interesting ways by which biologically realistic models of perceptual decision-making can incorporate socially communicated information about others' confidence, to explain and predict the effects of such interpersonal alignment on behavior, confidence, and neural measurements of the processes related to both. It is nice to see that explicit model comparison favors one of these ways (top-down driving inputs to the competing accumulators) over others that may a priori have seemed more plausible but mechanistically less interesting and impactful (e.g., effects on response boundaries, no-decision times, or evidence accumulation).

Fourth, the manuscript is very well written and provides just the right amount of theoretical introduction and balanced discussion for the reader to understand the approach, the conclusions, and the strengths and limitations.

Finally, the manuscript takes open science practices seriously and employed preregistration, a replication sample, and data sharing in line with good scientific practice.

We are grateful to the reviewer for their positive assessment of our work.

Having said all these positive things, there are some points where the manuscript is unclear or leaves some open questions. While the conclusions of the manuscript are not overstated, there are unclarities in the conceptual interpretation, the descriptions of the methods, some procedures of the methods themselves, and the interpretation of the results that make the reader wonder just how reliable and trustworthy some of the many findings are that together provide this integrated perspective.

We hope that our modifications and revisions in response to the criticisms listed below will be satisfactory. To avoid redundancies, we have combined each numbered comment with the corresponding recommendation for the Authors.

First, the study employs rather small sample sizes of N=12 and N=15 and some of the effects are rather weak (e.g., the non-significant CPP effects in study 1). This is somewhat ameliorated by the fact that a replication sample was used, but the robustness of the findings and their replicability in larger samples can be questioned.

Our study brings together questions from two distinct fields of neuroscience: perceptual decision making and social neuroscience. Each of these two fields has its own traditions and practical common sense. Typically, studies in perceptual decision making employ a small number of extensively trained participants (approximately 6 to 10 individuals). Social neuroscience studies, on the other hand, recruit larger samples (often more than 20 participants) without extensive training protocols. We therefore needed to strike a balance in this trade-off between number of participants and number of data points (e.g. trials) obtained from each participant. Note, for example, that each of our participants underwent around 4000 training trials. Strikingly, our initial study (N=12) yielded robust results that showed the hypothesized effects nearly completely, supporting the adequacy of our power estimate. Nevertheless, we decided to replicate the findings because, like the reviewer, we believe in the importance of adequate sampling. We increased our sample size to N=15 participants to enhance the reliability of our findings. We acknowledge the limitation of generalizing to larger samples, which we have now discussed in our revised manuscript, and we have included a cautionary note regarding further generalizations.

To complement our results and add a measure of their reliability, here we provide the results of a power analysis that we applied to the data from study 1 (i.e. the discovery phase). These results demonstrate that the sample size of study 2 (i.e. replication) was adequate when conditioned on the results from study 1 (see table and graph pasted below). The results showed that N=13 would be an adequate sample size for 80% power for behavioural and eye-tracking measurements. Power analysis for the EEG measurements indicated that we needed N=17. Combining these power analyses, our sample size of N=15 for Study 2 was therefore reasonably justified.

We have now added a section to the discussion (Lines 790-805) that communicates these issues as follows:

“Our study brings together questions from two distinct fields of neuroscience: perceptual decision making and social neuroscience. Each of these two fields has its own traditions and practical common sense. Typically, studies in perceptual decision making employ a small number of extensively trained participants (approximately 6 to 10 individuals). Social neuroscience studies, on the other hand, recruit larger samples (often more than 20 participants) without extensive training protocols. We therefore needed to strike a balance in this trade-off between number of participants and number of data points (e.g. trials) obtained from each participant. Note, for example, that each of our participants underwent around 4000 training trials. Importantly, our initial study (N=12) yielded robust results that showed the hypothesized effects nearly completely, supporting the adequacy of our power estimate. However, we decided to replicate the findings in a new sample with N=15 participants to enhance the reliability of our findings and examine our hypothesis in a stringent discovery-replication design. In Figure 4-figure supplement 5, we provide the results of a power analysis that we applied to the data from study 1 (i.e. the discovery phase). These results demonstrate that the sample size of study 2 (i.e. replication) was adequate when conditioned on the results from study 1.”

We conducted Monte Carlo simulations to determine the sample size required to achieve sufficient statistical power (80%) (Szucs & Ioannidis, 2017). In these simulations, we utilized the data from study 1. For each sample size (N, x-axis), we randomly selected N participants, with replacement, from our 12 participants in study 1. Subsequently, we applied the same GLMM model used in the main text to assess the dependency of EEG signal slopes on social conditions (HCA vs LCA). To obtain an accurate estimate, we repeated the random sampling process 1000 times for each given sample size (N). Consequently, for a given sample size, we performed 1000 statistical tests using these randomly generated datasets. The proportion of statistically significant tests among these 1000 tests represents the statistical power (y-axis). We gradually increased the sample size until achieving an 80% power threshold, as illustrated in the figure. The number indicated by the red circle on the x-axis of this graph represents the designated sample size.
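The resampling logic described above can be illustrated with a minimal Python sketch. It is not the analysis code used for the study: the GLMM is replaced here by a simple one-sample t-test on one hypothetical effect value per participant (for example, an HCA minus LCA slope difference), and the function names, the critical-value table, and the handling of degenerate resamples are all assumptions made for illustration.

```python
import math
import random
import statistics

# Two-sided 5% critical values of Student's t for df = 1..20 (standard table).
T_CRIT = [12.706, 4.303, 3.182, 2.776, 2.571, 2.447, 2.365, 2.306, 2.262, 2.228,
          2.201, 2.179, 2.160, 2.145, 2.131, 2.120, 2.110, 2.101, 2.093, 2.086]


def is_significant(effects):
    """One-sample t-test against zero (a stand-in for the GLMM in the text)."""
    n = len(effects)
    sd = statistics.stdev(effects)
    if sd == 0:
        # Degenerate resample (all values identical): counted as not significant.
        return False
    t = statistics.mean(effects) / (sd / math.sqrt(n))
    return abs(t) > T_CRIT[n - 2]  # df = n - 1 is stored at index n - 2


def bootstrap_power(effects, n, n_resamples=1000, rng=None):
    """Proportion of significant tests over resamples of size n, drawn with replacement."""
    rng = rng or random.Random(0)
    hits = 0
    for _ in range(n_resamples):
        sample = rng.choices(effects, k=n)  # sampling with replacement
        hits += is_significant(sample)
    return hits / n_resamples


def required_sample_size(effects, target=0.8, max_n=21, n_resamples=1000, seed=0):
    """Smallest n (2..max_n) whose estimated power reaches the target, else None."""
    for n in range(2, max_n + 1):
        power = bootstrap_power(effects, n, n_resamples, random.Random(seed))
        if power >= target:
            return n
    return None
```

Calling `required_sample_size(effects)` on a list of per-participant effects from the discovery sample returns the smallest sample size at which the estimated power reaches 80%, which corresponds to the point marked on the x-axis of the power curve. With a mixed model in place of the t-test, only `is_significant` would need to change.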

Second, the manuscript interprets the effects of low-confidence partners as an impact of the partner's communicated "beliefs about uncertainty". However, it appears that the experimental setup also leads to greater outcome uncertainty (because the trial outcome is determined by the joint performance of both partners, which is normally reduced for low-confidence partners) and response uncertainty (because subjects need to consider not only their own confidence but also how that will impact on the low-confidence partner). While none of these other possible effects is conceptually unrelated to communicated confidence and the basic conclusions of the manuscript are therefore valid, the reader would like to understand to what degree the reported effects relate to slightly different types of uncertainty that can be elicited by communicated low confidence in this setup.

We appreciate the reviewer’s advice to remain cautious about the possible sources of uncertainty in our experiment. In the Discussion (lines 790-801) we have now added the following paragraph.

“We have interpreted our findings to indicate that social information, i.e. partner’s confidence, impacts the participants' beliefs about uncertainty. It is important to underscore here that, similar to real life, there are other sources of uncertainty in our experimental setup that could affect the participants' belief. For example, under joint conditions, the group choice is determined through the comparison of the choices and confidences of the partners. As a result, the participant has a more complex task of matching their response not only with their perceptual experience but also coordinating it with the partner to achieve the best possible outcome. For the same reason, there is greater outcome uncertainty under joint vs individual conditions. Of course, these other sources of uncertainty are conceptually related to communicated confidence but our experimental design aimed to remove them, as much as possible, by comparing the impact of social information under high vs low confidence of the partner.”

In addition to the above, we would like to clarify one point here with specific respect to the comment. Note that the computer-generated partner’s accuracy was identical under high and low confidence. In addition, our behavioral findings did not show any difference in accuracy under HCA and LCA conditions. As a consequence, the argument that “the trial outcome is determined by the joint performance of both partners, which is normally reduced for low-confidence partners” is not valid because the low-confidence partner’s performance is identical to that of the high-confidence partner. It is possible, of course, that we have misunderstood the reviewer’s point here and we would be happy to discuss this further if necessary.

Third, the methods used for measurement, signal processing, and statistical inference in the pupil analysis are questionable. For a start, the methods do not give enough details as to how the stimuli were calibrated in terms of luminance etc so that the pupil signals are interpretable.

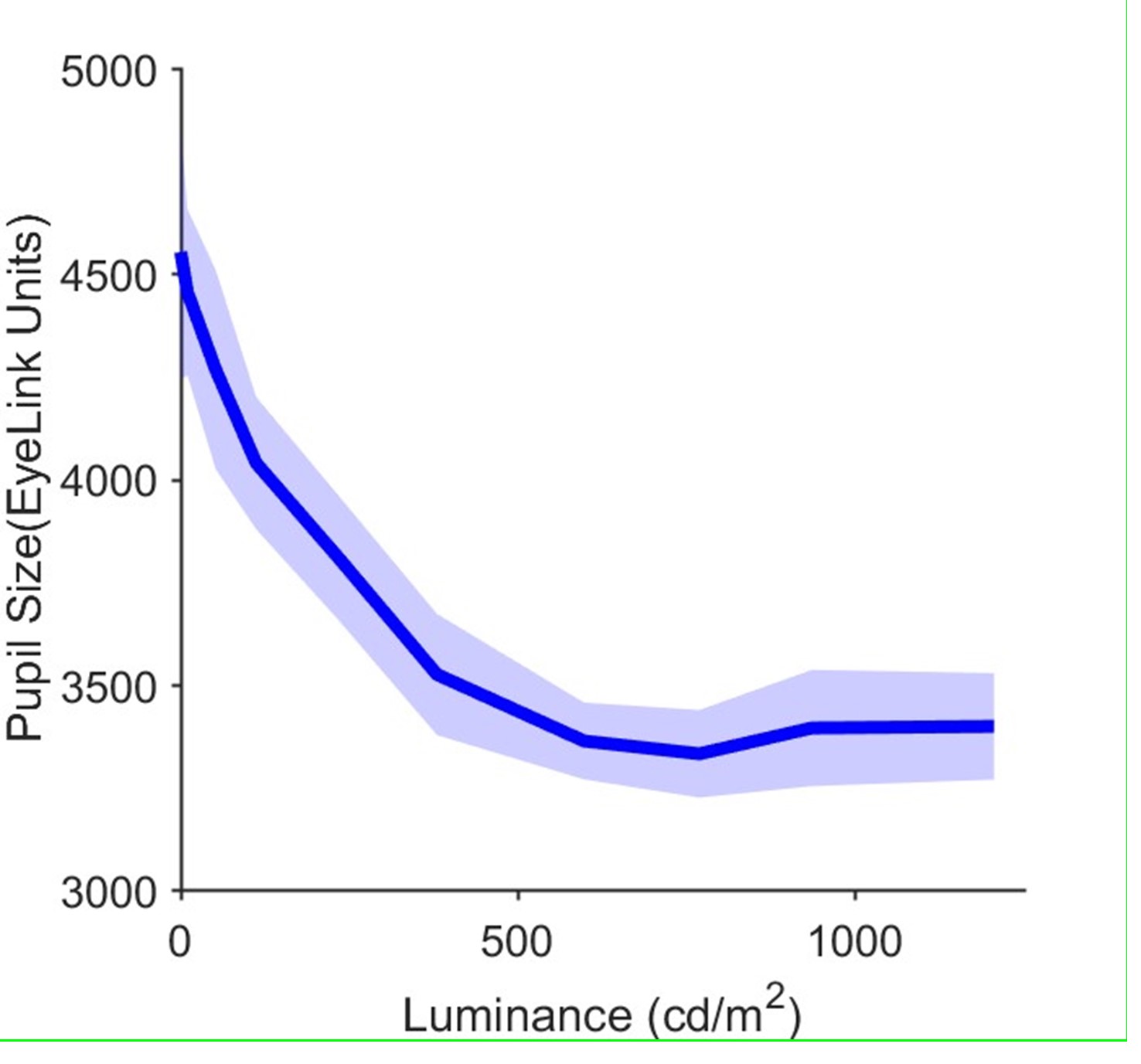

Here we provide in Author response image 1 the calibration plot for our eye tracking setup, describing the relationship between pupil size and display luminance. Luminance of the random dot motion stimuli (i.e. white dots on black background) was Cd/m2 and, importantly, identical across the two critical social conditions. We hope that this additional detail satisfies the reviewer’s concern. For the purpose of brevity, we have decided against adding this part to the manuscript and supplementary material.

Author response image 1.

Calibration plot for the experimental setup. Average pupil size (arbitrary units from eyelink device) is plotted against display luminance. The plot is obtained by presenting the participant with uniform full screen displays with 10 different luminance levels covering the entire range of the monitor RGB values (0 to 255) whose luminance was separately measured with a photometer. Each display lasted 10 seconds. Error bars are standard deviation between sessions.

Moreover, while the authors state that the traces were normalized to a value of 0 at the start of the ITI period, the data displayed in Figure 2 do not show this normalization but different non-zero values. Are these data not normalized, or was a different procedure used? Finally, the authors analyze the pupil signal averaged across a wide temporal ITI interval that may contain stimulus-locked responses (there is not enough information in the manuscript to clearly determine which temporal interval was chosen and averaged across, and how it was made sure that this signal was not contaminated by stimulus effects).

We have now added the following details to the Methods section in line 1106-1135.

“In both studies, eye movements were recorded by an EyeLink 1000 (SR Research) device with a sampling rate of 1000Hz which was controlled by a dedicated host PC. The device was set in a desktop and pupil-corneal reflection mode while data from the left eye was recorded. At the beginning of each block, the system was recalibrated and then validated by a 9-point schema presented on the screen. For one subject, a 3-point schema was used due to repetitive calibration difficulty. Having reached a detection error of less than 0.5°, the participants proceeded to the main task. Acquired eye data for pupil size were used for further analysis. Data of one subject in the first study was removed from further analysis due to storage failure.

Pupil data were divided into separate epochs and data from the inter-trial interval (ITI) were selected for analysis. The ITI was defined as the time between the offset of the trial (t) feedback screen and the stimulus presentation of trial (t+1). Then, blinks and jitters were detected and removed using linear interpolation. Values of pupil size before and after the blink were used for this interpolation. Data were also band-pass filtered using a Butterworth filter (second order, [0.01, 6] Hz)[50]. The pupil data were z-scored and then baseline corrected by removing the average of the signal in the [-1000 0] ms interval (before ITI onset). For the statistical analysis (GLMM) in Figure 2, we used the average of the pupil signal in the ITI period. Therefore, no pupil value is contaminated by the upcoming stimuli. Importantly, trials with ITI>3s were excluded from analysis (365 out of 8800 for study 1 and 128 out of 6000 for study 2. Also see table S7 and Selection criteria for data analysis in Supplementary Materials).”
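As a rough illustration of the pupil preprocessing steps quoted above, the following Python sketch covers blink interpolation, z-scoring, baseline correction, and averaging over the ITI. It is only a sketch under stated assumptions, not the authors' pipeline: blinks are assumed to be marked as `None`, the Butterworth filtering step is deliberately omitted, z-scoring is done within a single epoch for simplicity, and all function names are hypothetical.

```python
import statistics


def interpolate_blinks(trace):
    """Fill runs of None (assumed blink markers) by linear interpolation
    between the valid samples just before and just after each run."""
    out = list(trace)
    n = len(out)
    i = 0
    while i < n:
        if out[i] is None:
            start = i
            while i < n and out[i] is None:
                i += 1
            left = out[start - 1] if start > 0 else None
            right = out[i] if i < n else None
            if left is None and right is None:
                raise ValueError("trace contains no valid samples")
            # At the edges of the trace, hold the nearest valid value.
            if left is None:
                left = right
            if right is None:
                right = left
            gap = i - start + 1  # steps from the left anchor to the right anchor
            for k in range(start, i):
                out[k] = left + (k - start + 1) / gap * (right - left)
        else:
            i += 1
    return out


def zscore(trace):
    """Standardize a trace to zero mean and unit (population) standard deviation."""
    mu = statistics.fmean(trace)
    sd = statistics.pstdev(trace)
    if sd == 0:
        raise ValueError("constant trace cannot be z-scored")
    return [(x - mu) / sd for x in trace]


def baseline_correct(trace, baseline_len):
    """Subtract the mean of the first baseline_len samples (the pre-ITI window)."""
    base = statistics.fmean(trace[:baseline_len])
    return [x - base for x in trace]


def iti_pupil_mean(trace, baseline_len):
    """Mean pupil signal over the ITI samples that follow the baseline window."""
    clean = baseline_correct(zscore(interpolate_blinks(trace)), baseline_len)
    return statistics.fmean(clean[baseline_len:])
```

At a 1000 Hz sampling rate, a 1000 ms baseline window corresponds to `baseline_len=1000` samples. In a full pipeline the band-pass filter would sit between interpolation and z-scoring, and z-scoring would more plausibly be applied per session than per epoch.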

Fourth, while the EEG analysis in general provides interesting data, the link to the well-established CPP signal is not entirely convincing. CPP signals are usually identified and analyzed in a response-locked fashion, to distinguish them from other types of stimulus-locked potentials. One crucial feature here is that the CPPs in the different conditions reach a similar level just prior to the response. This is either not the case here, or the data are not shown in a format that allows the reader to identify these crucial features of the CPP. It is therefore questionable whether the reported signals indeed fully correspond to this decision-linked signal.

Fifth, the authors present some effective connectivity analysis to identify the neural mechanisms underlying the possible top-down drive due to communicated confidence. It is completely unclear how they select the "prefrontal cortex" signals here that are used for the transfer entropy estimations, and it is in fact even unclear whether the signals they employ originate in this brain structure. In the absence of clear methodical details about how these signals were identified and why the authors think they originate in the prefrontal cortex, these conclusions cannot be maintained based on the data that are presented.

Sixth, the description of the model fitting procedures and the parameter settings are missing, leaving it unclear for the reader how the models were "calibrated" to the data. Moreover, for many parameters of the biophysical model, the authors seem to employ fixed parameter values that may have been picked based on any criteria. This leaves the impression that the authors may even have manually changed parameter values until they found a set of values that produced the desired effects. The model would be even more convincing if the authors could for every parameter give the procedures that were used for fitting it to the data, or the exact criteria that were used to fix the parameter to a specific value.

Seventh, on a related note, the reader wonders about some of the decisions the authors took in the specification of their model. For example, why was it assumed that the parameters of interest in the three competing models could only be modulated by the partner's confidence in a linear fashion? A non-linear modulation appears highly plausible, so extreme values of confidence may have much more pronounced effects. Moreover, why were the confidence computations assumed to be finished at the end of the stimulus presentation, given that for trials with RTs longer than the stimulus presentation, the sensory information almost certainly reverberated in the brain network and continued to be accumulated (in line with the known timing lags in cortical areas relative to objective stimulus onset)? It would help if these model specification choices were better justified and possibly even backed up with robustness checks.

Eighth, the fake interaction partners showed several properties that were highly unnatural (they did not react to the participant's confidence communications, and their response times were random and thus unrelated to confidence and accuracy). This calls into question how much the findings from this specific experimental setting would transfer to other real-life settings, and whether participants showed any behavioral reactions to the random response time variations as well (since several studies have shown that for binary choices like here, response times also systematically communicate uncertainty to others). Moreover, it is also unclear how the confidence convergence simulated in Figure 3d can conceptually apply to the data, given that the fake subjects did not react to the subject's communicated confidence as in the simulation.

Reviewer #1 (Public Review):

Esmaily and colleagues report two experimental studies in which participants make simple perceptual decisions, either in isolation or in the context of a joint decision-making procedure. In this "social" condition, participants are paired with a partner (in fact, a computer), they learn the decision and confidence of the partner after making their own decision, and the joint decision is made on the basis of the most confident decision between the participant and the partner. The authors found that participants' confidence, response times, pupil dilation, and CPP (i.e. the increase of centro-parietal EEG over time during the decision process) are all affected by the overall confidence of the partner, which was manipulated across blocks in the experiments. They describe a computational model in which decisions result from a competition between two accumulators, and in which the confidence of the partner would be an input to the activity of both accumulators. This model qualitatively produced the variation in confidence and RTs across blocks.

The major strength of this work is that it puts together many ingredients (behavioral data, pupil and EEG signals, computational analysis) to build a picture of how the confidence of a partner, in the context of joint decision-making, would influence our own decision process and confidence evaluations. Many of these effects are well described already in the literature, but putting them all together remains a challenge. However, the construction is fragile in many places: the causal links between the different variables are not firmly established, and it is not clear how pupil and EEG signals mediate the effect of the partner's confidence on the participant's behavior.

Finally, one limitation of this setting is that the situation being studied is very specific, with a joint decision that is not the result of an agreement between partners, but the automatic selection of the most confident decisions. Thus, whether the phenomenon of confidence matching also occurs outside of this very specific setting is unclear.

Reviewer #2 (Public Review):

This study is impressive in several ways and will be of interest to behavioral and brain scientists working on diverse topics.

First, from a theoretical point of view, it very convincingly integrates several lines of research (confidence, interpersonal alignment, psychophysical, and neural evidence accumulation) into a mechanistic computational framework that explains the existing data and makes novel predictions that can inspire further research. It is impressive to read that the corresponding model can account for rather non-intuitive findings, such as that information about high confidence by your collaborators means people are faster but not more accurate in their judgements.

Second, from a methodical point of view, it combines several sophisticated approaches (psychophysical measurements, psychophysical and neural modelling, electrophysiological and pupil measurements) in a manner that draws on their complementary strengths and that is most compelling (but see further below for some open questions). The appeal of the study in that respect is that it combines these methods in creative ways that allow it to answer its specific questions in a much more convincing manner than if it had used just either of these approaches alone.

Third, from a computational point of view, it proposes several interesting ways by which biologically realistic models of perceptual decision-making can incorporate socially communicated information about others' confidence, to explain and predict the effects of such interpersonal alignment on behavior, confidence, and neural measurements of the processes related to both. It is nice to see that explicit model comparison favors one of these ways (top-down driving inputs to the competing accumulators) over others that may a priori have seemed more plausible but mechanistically less interesting and impactful (e.g., effects on response boundaries, no-decision times, or evidence accumulation).

Fourth, the manuscript is very well written and provides just the right amount of theoretical introduction and balanced discussion for the reader to understand the approach, the conclusions, and the strengths and limitations.

Finally, the manuscript takes open science practices seriously and employed preregistration, a replication sample, and data sharing in line with good scientific practice.

Having said all these positive things, there are some points where the manuscript is unclear or leaves some open questions. While the conclusions of the manuscript are not overstated, there are unclarities in the conceptual interpretation, the descriptions of the methods, some procedures of the methods themselves, and the interpretation of the results that make the reader wonder just how reliable and trustworthy some of the many findings are that together provide this integrated perspective.

First, the study employs rather small sample sizes of N=12 and N=15 and some of the effects are rather weak (e.g., the non-significant CPP effects in study 1). This is somewhat ameliorated by the fact that a replication sample was used, but the robustness of the findings and their replicability in larger samples can be questioned.

Second, the manuscript interprets the effects of low-confidence partners as an impact of the partner's communicated "beliefs about uncertainty". However, it appears that the experimental setup also leads to greater outcome uncertainty (because the trial outcome is determined by the joint performance of both partners, which is normally reduced for low-confidence partners) and response uncertainty (because subjects need to consider not only their own confidence but also how that will impact on the low-confidence partner). While none of these other possible effects is conceptually unrelated to communicated confidence and the basic conclusions of the manuscript are therefore valid, the reader would like to understand to what degree the reported effects relate to slightly different types of uncertainty that can be elicited by communicated low confidence in this setup.

Third, the methods used for measurement, signal processing, and statistical inference in the pupil analysis are questionable. For a start, the methods do not give enough detail on how the stimuli were calibrated in terms of luminance, etc., so that the pupil signals are interpretable. Moreover, while the authors state that the traces were normalized to a value of 0 at the start of the ITI period, the data displayed in Figure 2 do not show this normalization but different non-zero values. Are these data not normalized, or was a different procedure used? Finally, the authors analyze the pupil signal averaged across a wide temporal ITI interval that may contain stimulus-locked responses (there is not enough information in the manuscript to clearly determine which temporal interval was chosen and averaged across, and how it was ensured that this signal was not contaminated by stimulus effects).
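
To make the normalization concern concrete, here is a minimal sketch of what a subtractive baseline at the first ITI sample would imply. The manuscript's actual procedure is not known; the function name `baseline_correct`, the single-sample baseline, and the synthetic data are all assumptions made for illustration.

```python
import numpy as np

def baseline_correct(traces, baseline_idx=0):
    """Subtract each trial's value at `baseline_idx` from that trial.

    traces: array of shape (n_trials, n_samples), one pupil trace per row.
    Assumption: a subtractive, single-sample baseline (hypothetical choice).
    """
    traces = np.asarray(traces, dtype=float)
    baseline = traces[:, [baseline_idx]]  # keep 2-D shape for broadcasting
    return traces - baseline

# Synthetic traces with an arbitrary non-zero offset (not real data).
rng = np.random.default_rng(0)
raw = 3.0 + rng.normal(scale=0.2, size=(5, 100))

corrected = baseline_correct(raw)
condition_mean = corrected.mean(axis=0)  # average trace across trials
```

Under this kind of normalization every trial, and therefore every condition average, is exactly 0 at the baseline sample, which is why condition traces that start at different non-zero values suggest that another procedure was used.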

Fourth, while the EEG analysis in general provides interesting data, the link to the well-established CPP signal is not entirely convincing. CPP signals are usually identified and analyzed in a response-locked fashion, to distinguish them from other types of stimulus-locked potentials. One crucial feature here is that the CPPs in the different conditions reach a similar level just prior to the response. This is either not the case here, or the data are not shown in a format that allows the reader to identify these crucial features of the CPP. It is therefore questionable whether the reported signals indeed fully correspond to this decision-linked signal.
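
The response-locked view requested here can be sketched as a simple re-alignment of stimulus-locked epochs. This is an illustrative sketch only: the function `response_lock`, the sample-based response times, and the idealized ramp signals are hypothetical and are not taken from the manuscript.

```python
import numpy as np

def response_lock(stim_locked, rt_samples, pre, post=0):
    """Re-align stimulus-locked epochs to each trial's response.

    stim_locked: (n_trials, n_samples), sample 0 = stimulus onset.
    rt_samples:  response time of each trial, in samples.
    Returns (n_trials, pre + post); samples outside the epoch are NaN.
    """
    stim_locked = np.asarray(stim_locked, dtype=float)
    n_trials, n_samples = stim_locked.shape
    out = np.full((n_trials, pre + post), np.nan)
    for i, rt in enumerate(rt_samples):
        start, stop = rt - pre, rt + post
        lo, hi = max(start, 0), min(stop, n_samples)
        if hi > lo:
            out[i, lo - start:hi - start] = stim_locked[i, lo:hi]
    return out

# Idealized accumulate-to-bound ramps: different slopes, same bound (1.0).
rts = np.array([10, 20])
n_samples = 30
ramps = np.arange(1, n_samples + 1)[None, :] / rts[:, None]

locked = response_lock(ramps, rts, pre=5)
pre_response_level = locked[:, -1]  # last sample before the response
```

In this toy case the two ramps differ at every stimulus-locked time point but coincide at the final pre-response sample, which is the signature the reviewer asks to see plotted for the CPP in each condition.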

Fifth, the authors present some effective connectivity analysis to identify the neural mechanisms underlying the possible top-down drive due to communicated confidence. It is completely unclear how they select the "prefrontal cortex" signals here that are used for the transfer entropy estimations, and it is in fact even unclear whether the signals they employ originate in this brain structure. In the absence of clear methodological details about how these signals were identified and why the authors think they originate in the prefrontal cortex, these conclusions cannot be maintained based on the data that are presented.

Sixth, descriptions of the model fitting procedures and the parameter settings are missing, leaving it unclear for the reader how the models were "calibrated" to the data. Moreover, for many parameters of the biophysical model, the authors seem to employ fixed parameter values that may have been picked based on any criteria. This leaves the impression that the authors may even have manually changed parameter values until they found a set of values that produced the desired effects. The model would be even more convincing if the authors could for every parameter give the procedures that were used for fitting it to the data, or the exact criteria that were used to fix the parameter to a specific value.

Seventh, on a related note, the reader wonders about some of the decisions the authors took in the specification of their model. For example, why was it assumed that the parameters of interest in the three competing models could only be modulated by the partner's confidence in a linear fashion? A non-linear modulation appears highly plausible, so extreme values of confidence may have much more pronounced effects. Moreover, why were the confidence computations assumed to be finished at the end of the stimulus presentation, given that for trials with RTs longer than the stimulus presentation, the sensory information almost certainly reverberated in the brain network and continued to be accumulated (in line with the known timing lags in cortical areas relative to objective stimulus onset)? It would help if these model specification choices were better justified and possibly even backed up with robustness checks.
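
One way to frame the linearity question as a robustness check is to nest the linear modulation inside a slightly more general family. The sketch below is purely illustrative: the function `social_drive`, its parameters, the signed power-law form, and the five-point confidence scale are assumptions, not the authors' model.

```python
import numpy as np

def social_drive(partner_conf, gain, exponent=1.0, midpoint=3.0):
    """Hypothetical top-down input as a function of partner confidence.

    exponent == 1 gives a linear modulation around `midpoint`;
    exponent > 1 makes extreme confidence values disproportionately strong.
    """
    d = np.asarray(partner_conf, dtype=float) - midpoint
    return gain * np.sign(d) * np.abs(d) ** exponent

conf_levels = np.array([1, 2, 3, 4, 5])  # assumed rating scale
linear = social_drive(conf_levels, gain=1.0, exponent=1.0)
nonlinear = social_drive(conf_levels, gain=1.0, exponent=2.0)
```

Because the linear case is the special case `exponent == 1`, fitting the exponent as a free parameter and comparing against the fixed-exponent model would indicate whether the linear assumption is actually supported by the data.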

Eighth, the fake interaction partners showed several properties that were highly unnatural (they did not react to the participant's confidence communications, and their response times were random and thus unrelated to confidence and accuracy). This raises the question of how much the findings from this specific experimental setting would transfer to other real-life settings, and whether participants showed any behavioral reactions to the random response time variations as well (since several studies have shown that for binary choices like here, response times also systematically communicate uncertainty to others). Moreover, it is also unclear how the confidence convergence simulated in Figure 3d can conceptually apply to the data, given that the fake partners did not react to the subject's communicated confidence as in the simulation.

-