Quantifying decision-making in dynamic, continuously evolving environments

Curation statements for this article:-

Curated by eLife

eLife assessment

The authors use a clever experimental design and approach to tackle an important set of questions in the field of decision-making. From this work, the authors have a number of intriguing results. However, questions remain regarding the extent to which a number of alternative models and interpretations, not considered in the paper, could account for the observed effects.

Abstract

During perceptual decision-making tasks, centroparietal electroencephalographic (EEG) potentials report an evidence accumulation-to-bound process that is time locked to trial onset. However, decisions in real-world environments are rarely confined to discrete trials; they instead unfold continuously, with accumulation of time-varying evidence being recency-weighted towards its immediate past. The neural mechanisms supporting recency-weighted continuous decision-making remain unclear. Here, we use a novel continuous task design to study how the centroparietal positivity (CPP) adapts to different environments that place different constraints on evidence accumulation. We show that adaptations in evidence weighting to these different environments are reflected in changes in the CPP. The CPP becomes more sensitive to fluctuations in sensory evidence when large shifts in evidence are less frequent, and the potential is primarily sensitive to fluctuations in decision-relevant (not decision-irrelevant) sensory input. A complementary triphasic component over occipito-parietal cortex encodes the sum of recently accumulated sensory evidence, and its magnitude covaries with parameters describing how different individuals integrate sensory evidence over time. A computational model based on leaky evidence accumulation suggests that these findings can be accounted for by a shift in decision threshold between different environments, which is also reflected in the magnitude of pre-decision EEG activity. Our findings reveal how adaptations in EEG responses reflect flexibility in evidence accumulation to the statistics of dynamic sensory environments.

Author Response

Reviewer #1:

This is a very timely paper that addresses an important and difficult-to-address question in the decision-making field - the degree to which information leakage can be strategically adapted to optimise decisions in a task-dependent fashion. The authors apply a sophisticated suite of analyses that are appropriate and yield a range of very interesting observations. The paper centres on analyses of one possible model that hinges on certain assumptions about the nature of the decision process for this task which raises questions about whether leak adjustments are the only possible explanation for the current data. I think the conclusions would be greatly strengthened if they were supported by the application and/or simulation of alternative model structures.

We thank the reviewer for this positive appraisal of our study. We entirely agree with their central comment about whether leak adjustments are the only (or even the best) explanation for the current data. We hope that the additional modelling sections that we have discussed in response to main comment 1 above have strengthened the paper. We have responded point-by-point to their public review, as this contained their main recommendations for revision.

The behavioural trends when comparing blocks with frequent versus rare response periods seem difficult to tally with a change in the leak. […] Are there other models that could reproduce such effects? For example, could a model in which the drift rate varies between Rare and Frequent trials do a similar or better job of explaining the data?

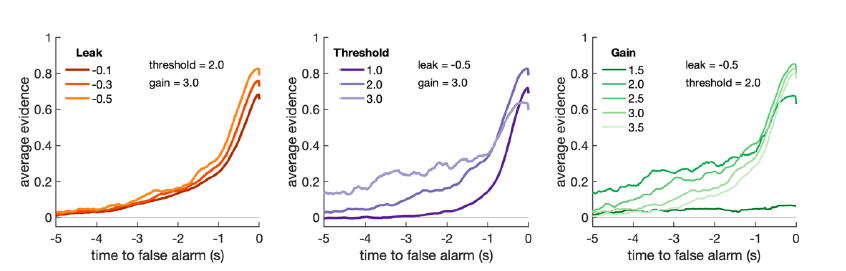

We can see why the reviewer has advocated for a possible change of drift rate (or ‘gain’ applied to sensory evidence) between conditions to explain our behavioural findings. We found, however, that changes in drift rate could elicit qualitatively similar changes in integration kernels to changes in decision threshold:

Author response image 1.

Changes in gain applied to incoming sensory evidence (A parameter in model) have similar effects on recovered integration kernels from Ornstein-Uhlenbeck simulation as changes in decision threshold.

The likely reason for this is that the overall probability of emitting a response at any point in the continuous decision process is determined by the ratio of accumulated evidence to decision threshold. A similar logic applies to effects on reaction times and detection probability (main figure 2): increasing sensory gain/decreasing decision threshold will lead to faster reaction times and increased detection probability during response periods.
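The ratio argument can be illustrated with a minimal sketch of a leaky accumulator. This is not the model code used in the paper: the function name `first_crossing`, the parameter values and the evidence stream are all hypothetical, and internal noise is omitted for simplicity. Under these assumptions the accumulated evidence scales linearly with gain, so doubling the gain and halving the threshold produce the same threshold-crossing time.

```python
import numpy as np

def first_crossing(evidence, gain, threshold, leak, dt=0.01):
    """Index of the first frame at which |x| reaches threshold, or None.

    Euler discretisation of dx = (-leak * x + gain * evidence) dt.
    Internal noise is left out (a simplifying assumption of this sketch).
    """
    x = 0.0
    for i, e in enumerate(evidence):
        x += dt * (-leak * x + gain * e)
        if abs(x) >= threshold:
            return i
    return None

rng = np.random.default_rng(0)
# Hypothetical evidence stream: noisy frames with a small positive mean.
evidence = rng.normal(loc=0.3, scale=0.5, size=5000)

t_base = first_crossing(evidence, gain=1.0, threshold=0.2, leak=2.0)
t_gain = first_crossing(evidence, gain=2.0, threshold=0.2, leak=2.0)    # doubled gain
t_thresh = first_crossing(evidence, gain=1.0, threshold=0.1, leak=2.0)  # halved threshold
```

With internal accumulator noise that is not scaled by the gain, the equivalence would only be approximate, which is one reason the two parameters can be hard to tell apart from behaviour alone.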

Both parameters may even have a similar effect on ‘false alarms’, because (as the reviewer notes below) false alarms in our paradigm are primarily being driven by the occurrence of stimulus changes as well as internal noise. In fact, the false alarm findings mean it is difficult to fully reconcile all of our behavioural findings in terms of changes in a single set of model parameters in the O-U process. It is possible that other changes not considered within our model (such as expectations of hazard rates of inter-response intervals leading to dynamic thresholds etc.) may have had a strong impact upon the resulting false alarm rates. A full exploration of different variations of the O-U model (with varying urgency signals, hazard rates, etc.) is beyond the scope of this paper.

For this reason, we have decided in our new modelling section to focus primarily on a single, well-established model (the O-U process) and explore how changes in leak and threshold affect task performance and the resulting integration kernels. We note that this is in line with the suggestion of reviewer #2, who focussed on similar behavioural findings to reviewer #1 but suggested that we look at decision threshold rather than drift rate as our primary focus.

This ties in to a related query about the nature of the task employed by the authors. Due to the very significant volatility of the stimulus, it seems likely that the participants are not solely making judgments about the presence/absence of coherent motion but also making judgments about its duration (because strong coherent motion frequently occurs in the inter-target intervals). If that is so, then could the Rare condition equate to less evidence because there is an increased probability that an extended period of coherent motion could be an outlier generated from the noise distribution? Note that a drift rate reduction would also be expected to result in fewer hits and slower reaction times, as observed.

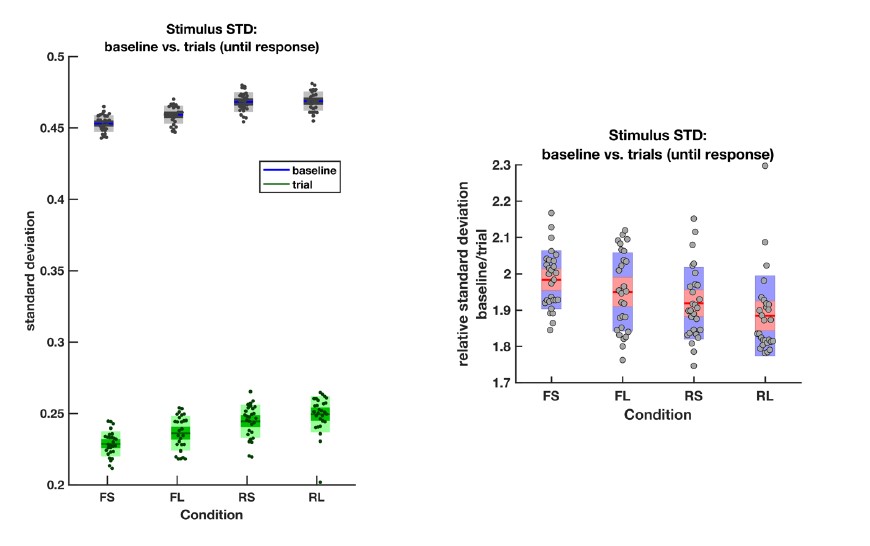

As mentioned above, the rare and frequent targets are indeed matched in terms of the ease with which they can be distinguished from the intervening noise intervals. To confirm this, we directly calculated the variance (across frames) of the motion coherence presented during baseline periods and response periods (until response) in all four conditions:

Author response image 2.

The average empirical standard deviation of the stimulus stream presented during each baseline period (‘baseline’) and response period (‘trial’), separated by each of the four conditions (F = frequent response periods, R = rare, L = long response periods, S = short). Data were averaged across all response/baseline periods within the stimuli presented to each participant (each dot = 1 participant). Note that the standard deviation shown here is the standard deviation of motion coherence across frames of sensory evidence. This is smaller than the standard deviation of the generative distribution of ‘step’-changes in the motion coherence (std = 0.5 for baseline and 0.3 for response periods), because motion coherence remains constant for a period after each ‘step’ occurs.
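One way to see how the across-frame standard deviation within a period can fall below the generative standard deviation is the sketch below. It is an illustration under stated assumptions, not the stimulus code: `step_hold_stream`, the geometric hold-duration rule, the period length and the mean hold duration are all hypothetical. Each period contains only a handful of held levels, so the within-period spread across frames tends to underestimate the spread of the distribution that generated the steps.

```python
import numpy as np

def step_hold_stream(n_frames, step_std, mean_hold, rng):
    """Piecewise-constant stream: draw a level from N(0, step_std), hold it, repeat."""
    frames = np.empty(n_frames)
    i = 0
    while i < n_frames:
        hold = int(rng.geometric(1.0 / mean_hold))  # hypothetical hold-duration rule
        frames[i:i + hold] = rng.normal(0.0, step_std)
        i += hold
    return frames

rng = np.random.default_rng(1)
generative_std = 0.5   # std of the 'step' distribution for baseline periods
period_frames = 300    # hypothetical period length, in frames
mean_hold = 60         # hypothetical mean hold duration, in frames

# Standard deviation across frames, computed separately for each simulated period.
period_stds = np.array([
    step_hold_stream(period_frames, generative_std, mean_hold, rng).std()
    for _ in range(500)
])
mean_empirical_std = period_stds.mean()
```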

Some adjustment of the language used when discussing FAs seems merited. If I have understood correctly, the sensory samples encountered by the participants during the inter-response intervals can at times favour a particular alternative just as strongly (or more strongly) than that encountered during the response interval itself. In that sense, the responses are not necessarily real false alarms because the physical evidence itself does not distinguish the target from the non-target. I don't think this invalidates the authors' approach but I think it should be acknowledged and considered in light of the comment above regarding the nature of the decision process employed on this task.

This is a good point. We hope that the reviewer will allow us to keep the term ‘false alarms’ in the paper, as it does conveniently distinguish responses during baseline periods from those during response periods, but we have sought to clarify the point that the reviewer makes when we first introduce the term.

“Indeed, participants would occasionally make ‘false alarms’ during baseline periods in which the structure of the preceding noise stream mistakenly convinced them they were in a response period (see Figure 4, below). Indeed, this means that a ‘false alarm’ in our paradigm has a slightly different meaning than in most psychophysics experiments; rather than it referring to participants responding when a stimulus was not present, we use the term to refer to participants responding when there was no shift in the mean signal from baseline.”

And:

“The fact that evidence integration kernels naturally arise from false alarms, in the same manner as from correct responses, demonstrates that false alarms were not due to motor noise or other spurious causes. Instead, false alarms were driven by participants treating noise fluctuations during baseline periods as sensory evidence to be integrated across time, and the physical evidence preceding ‘false alarms’ need not even distinguish targets from non-targets.”
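The idea that an integration kernel can emerge from responses to noise alone can be sketched as a response-locked average of the preceding evidence. The code below is a simplified illustration, not the analysis pipeline of the paper: the function names, the accumulator parameters and the frame-wise independent noise stream are assumptions made for this example.

```python
import numpy as np

def simulate_responses(evidence, leak, threshold, dt=0.01):
    """Leaky accumulator that responds and resets whenever |x| reaches threshold."""
    x, frames, signs = 0.0, [], []
    for i, e in enumerate(evidence):
        x += dt * (-leak * x + e)
        if abs(x) >= threshold:
            frames.append(i + 1)      # evidence[:i + 1] preceded this response
            signs.append(np.sign(x))  # direction of the response
            x = 0.0
    return np.array(frames), np.array(signs)

def integration_kernel(evidence, frames, signs, n_lags):
    """Mean signed evidence over the n_lags frames leading up to each response."""
    segments = [s * evidence[f - n_lags:f]
                for f, s in zip(frames, signs) if f >= n_lags]
    return np.mean(segments, axis=0)  # last element = frame closest to the response

rng = np.random.default_rng(2)
noise = rng.normal(0.0, 0.5, size=200_000)  # hypothetical baseline-like noise stream
frames, signs = simulate_responses(noise, leak=2.0, threshold=0.05)
kernel = integration_kernel(noise, frames, signs, n_lags=200)
```

In this toy setting every response is a ‘false alarm’ in the sense used above, yet the recovered kernel still rises towards the time of the response, because only noise sequences that happen to favour one direction drive the accumulator to threshold.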

The authors report that preparatory motor activity over central electrodes reached a larger decision threshold for RARE vs. FREQUENT response periods. It is not clear what identifies this signal as reflecting motor preparation. Did the authors consider using other effector-selective EEG signatures of motor preparation such as beta-band activity which has been used elsewhere to make inferences about decision bounds? Assuming that this central ERP signal does reflect the decision bounds, the observation that it has a larger amplitude at the response on Rare trials appears to directly contradict the kernel analyses which suggest no difference in the cumulative evidence required to trigger commitment.

Thanks for this comment. First, we note that this finding emerged from an agnostic time-domain analysis of the data time-locked to button presses, in which we observed that the negative-going potential was greater (more negative) in RARE vs. FREQUENT trials. We relate it to motor preparation simply because it precedes each button press; nonetheless, we note that Kelly and O’Connell (2013) found similar negative-going potentials at central sensors without applying a CSD transform (as in this study). Like them, we would relate this potential to either the well-established Bereitschaftspotential or the contingent negative variation (CNV).

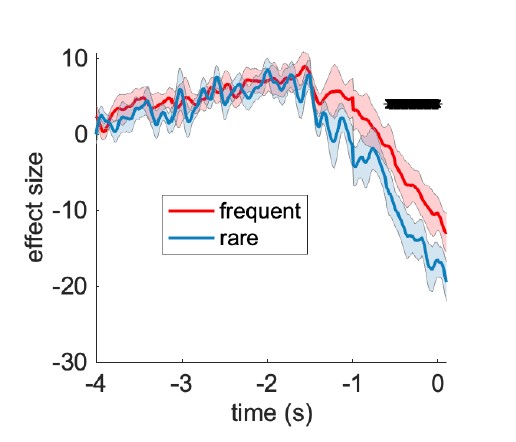

We agree that many other studies have focussed on beta-band activity as another measure of motor preparation, and to make inferences about decision bounds. To investigate this, we used a Morlet wavelet transform to examine the time-varying power estimate at a central frequency of 20Hz (wavelet factor 7). We repeated the convolutional GLM analysis on this time-varying power estimate.
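For readers unfamiliar with this step, a time-varying power estimate of this kind can be sketched as a convolution with a complex Morlet wavelet. The code below is a generic NumPy illustration rather than the toolbox routine used for the analysis: `morlet_power` is a hypothetical helper, the sampling rate and test signals are made up, and the ‘wavelet factor’ of 7 is interpreted here as the number of cycles that sets the width of the Gaussian envelope, which is an assumption.

```python
import numpy as np

def morlet_power(signal, sfreq, freq=20.0, n_cycles=7.0):
    """Time-varying power at `freq` via convolution with a complex Morlet wavelet."""
    sigma_t = n_cycles / (2.0 * np.pi * freq)         # s.d. of the Gaussian envelope (s)
    t = np.arange(-4 * sigma_t, 4 * sigma_t, 1.0 / sfreq)
    wavelet = np.exp(2j * np.pi * freq * t) * np.exp(-t**2 / (2 * sigma_t**2))
    wavelet /= np.sqrt(np.sum(np.abs(wavelet) ** 2))  # unit-energy normalisation
    analytic = np.convolve(signal, wavelet, mode="same")
    return np.abs(analytic) ** 2

sfreq = 250.0                                  # hypothetical sampling rate (Hz)
times = np.arange(0, 4, 1.0 / sfreq)
beta_like = np.sin(2 * np.pi * 20.0 * times)   # test signal inside the beta band
slow_wave = np.sin(2 * np.pi * 5.0 * times)    # test signal far from 20 Hz

power_beta = morlet_power(beta_like, sfreq)
power_slow = morlet_power(slow_wave, sfreq)
```

The resulting power time course has the same length as the input, so it can be entered into a regression against the stimulus stream in the same way as the time-domain signal.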

We first examined average beta desynchronisation at a central cluster of electrodes (CPz, CP1, CP2, C1, Cz, C2) in the run-up to correct button presses during response periods. We found that a reliable beta desynchronisation occurred, and, just as in the time-domain signal, this reached a greater threshold in the RARE trials than in the FREQUENT trials:

Author response image 3.

Beta desynchronisation prior to a correct response is greater over central electrodes in the RARE condition than in the FREQUENT condition.

We agree with the reviewer that this is likely indicative of a change in decision threshold between rare and frequent trials. We also note that our new computational modelling of the O-U process suggests that this in fact reconciles well with the behavioural findings (changes in integration kernels). We now mention this at the relevant point in the results section:

“As large changes in mean evidence are less frequent in the RARE condition, the increased neural response to |Δevidence| may reflect the increased statistical surprise associated with the same magnitude of change in evidence in this condition. In addition, when making a correct response, preparatory motor activity over central electrodes reached a larger decision threshold for RARE vs. FREQUENT response periods (Figure 7b; p=0.041, cluster-based permutation test). We found similar effects in beta-band desynchronisation prior, averaged over the same electrodes; beta desynchronisation was greater in RARE than FREQUENT response periods. As discussed in the computational modelling section above, this is consistent with the changes in integration kernels between these conditions as it may reflect a change in decision threshold (figure 2d, 3c/d). It is also consistent with the lower detection rates and slower reaction times when response periods are RARE (figure 2 b/c).”

We also investigated the lateralised response (left minus right beta-desynchronisation, contrasted on left minus right responses). We found, however, that we were unable to detect a reliable lateralised signal in either condition using these lateralised responses. We suspect that this is because we have far fewer response periods than conventional trial-based EEG experiments of decision making, and so we did not have sufficient SNR to reliably detect this signal. This is consistent with standard findings in the literature, which report that the magnitude of the lateralised signal is far smaller than the magnitude of the overall beta desynchronisation (e.g. Doyle et al., 2005).

P11, the "absolute sensory evidence" regressor elicited a triphasic potential over centroparietal electrodes. The first two phases of this component look to have an occipital focus. The third phase has a more centroparietal focus but appears markedly more posterior than the change in evidence component. This raises the question of whether it is safe to assume that they reflect the same process.

We agree. We have now referred to this as a ‘triphasic component over occipito-parietal cortex’ rather than centroparietal electrodes.

Reviewer #2:

Overall, the authors use a clever experimental design and approach to tackle an important set of questions in the field of decision-making. The manuscript is easy to follow with clear writing. The analyses are well thought-out and generally appropriate for the questions at hand. From these analyses, the authors have a number of intriguing results. So, there is considerable potential and merit in this work. That said, I have a number of important questions and concerns that largely revolve around putting all the pieces together. I describe these below.

Thanks to the reviewer for their positive appraisal of the manuscript; we are obviously pleased that they found our work to have considerable potential and merit. We seek to address the main comments from their public review and recommendations below.

- It is unclear to what extent the decision threshold is changing between subjects and conditions, how that might affect the empirical integration kernel, and how well these two factors can together explain the overall changes in behavior.

I would expect that less decay in RARE would have led to more false alarms, higher detection rates, and faster RTs unless the decision threshold also increased (or there was some other additional change to the decision process). The CPP for motor preparatory activity reported in Fig. 5 is also potentially consistent with a change in the decision threshold between RARE and FREQUENT. If the decision threshold is changing, how would that affect the empirical integration kernel? These are important questions on their own and also for interpreting the EEG changes.

This important comment, alongside the comments of reviewer 1 above, made us carefully consider the effects of changes in decision threshold on the evidence integration kernel via simulation. As discussed above (in response to ‘essential revisions for the authors’), we now include an entirely new section on how changes in decision threshold and leak may affect the evidence integration kernel, and be used to optimise performance across the different sensory environments. In particular, we agree with the reviewer that the motor preparatory activity that differs between RARE and FREQUENT is consistent with a change in decision threshold, and our simulations have suggested that our behavioural findings on evidence integration are also consistent with this change. These are detailed on pp.1-4 of the rebuttal, above.

- The authors find an interesting difference in the CPP for the FREQUENT vs RARE conditions where they also show differences in the decay time constant from the empirical integration kernel. As mentioned above, I'm wondering what else may be different between these conditions. Do the authors have any leverage in addressing whether the decision threshold differs? What about other factors that could be important for explaining the CPP difference between conditions? Big picture, the change in CPP becomes increasingly interesting the more tightly it can be tied to a particular change in the decision process.

We fully agree with the spirit of this comment, and we have tried to consider much more carefully what the influences of decision threshold and leak would be on our behavioural analyses. As discussed in the response to reviewer 1, we think that the negative-going potential at the time of responses (which is greater in RARE vs. FREQUENT, main figure 7b) and the equivalent changes in beta desynchronisation (see Reviewer Response Figure 5 above) both reflect a change in decision threshold between RARE and FREQUENT conditions. We have tried to make this link explicit in the revised results section:

“As large changes in mean evidence are less frequent in the RARE condition, the increased neural response to |Δevidence| may reflect the increased statistical surprise associated with the same magnitude of change in evidence in this condition. In addition, when making a correct response, preparatory motor activity over central electrodes reached a larger decision threshold for RARE vs. FREQUENT response periods (Figure 7b; p=0.041, cluster-based permutation test). We found similar effects in beta-band desynchronisation prior, averaged over the same electrodes; beta desynchronisation was greater in RARE than FREQUENT response periods. As discussed in the computational modelling section above, this is consistent with the changes in integration kernels between these conditions as it may reflect a change in decision threshold (figure 2d, 3c/d). It is also consistent with the lower detection rates and slower reaction times when response periods are RARE (figure 2 b/c).”

I'll note that I'm also somewhat skeptical of the statements by the authors that large shifts in evidence are less frequent in the RARE compared to FREQUENT conditions (despite the names) - a central part of their interpretation of the associated CPP change. The FREQUENT condition obviously has more frequent deviations from the baseline, but this is countered to some extent by the experimental design that has reduced the standard deviation of the coherence for these response periods. I think a calculation of overall across-time standard deviation of motion coherence between the RARE and FREQUENT conditions is needed to support these statements, and I couldn't find that calculation reported. The authors could easily do this, so I encourage them to check and report it.

See Author response image 2.

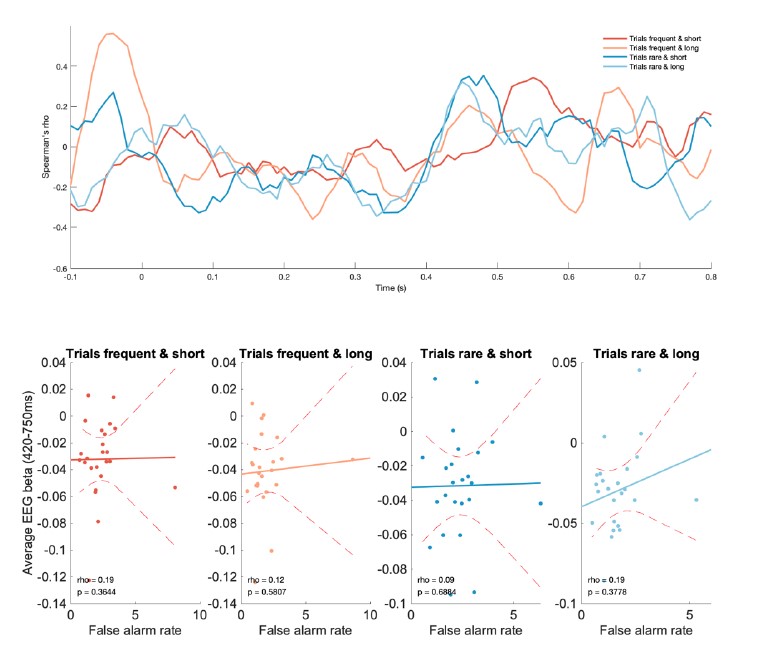

- The wide range of decay time constants between subjects and the correlation of this with another component of the CPP is also interesting. However, in trying to interpret this change in CPP, I'm wondering what else might be changing in the inter-subject behavior. For instance, it looks like there could be up to 4 fold changes in false alarm rates. Are there other changes as well? Do these correlate with the CPP? Similar to my point above, the changes in CPP across subjects become increasingly interesting the more tightly it can be tied to a particular difference in subject behavior. So, I would encourage the authors to examine this in more depth.

Thanks for the interesting suggestion. We explored whether there might be any interindividual correlation in this measure with the false alarm rate across participants, but found that there was no such correlation. (See Author response image 4; plotting conventions are as in main figure 9).

Author response image 4.

No evidence of between-subject correlations in CPP responses and false alarm rates, in any of the four conditions.

We hope instead that the extended discussion of how the integration kernel should be interpreted (in light of computational modelling) provides at least some increased interpretability of the between-subject effects that we report in figure 9.

Reviewer #3 (Public Review):

The main strength is in the task design which is novel and provides an interesting approach to studying continuous evidence accumulation. Because of the continuous nature of the task, the authors design new ways to look at behavioral and neural traces of evidence. The reverse-correlation method looking at the average of past coherence signals enables us to characterize the changes in signal leading to a decision bound and its neural correlate. By varying the frequency and length of the so-called response period, that the participants have to identify, the method potentially offers rich opportunities to the wider community to look at various aspects of decision-making under sensory uncertainty.

We are pleased that the reviewer agrees with our general approach as a novel way of characterising various aspects of decision-making under uncertainty.

The main weaknesses that I see lie within the description and rigor of the method. The authors refer multiple times to the time constant of the exponential fit to the signal before the decision but do not provide a rigorous method for its calculation and neither a description of the goodness of the fit. The variable names seem to change throughout the text which makes the argumentation confusing to the reader. The figure captions are incomplete and lack clarity.

We apologise that some of our original submission was difficult to follow in places, and we are very grateful to the reviewer for their thorough suggestions for how this could be improved. We address these in turn below, and we hope that this answers their questions, and has also led to a significant improvement in the description and rigour of the methodology.

Reviewer #1 (Public Review):

Ruesseler and colleagues combine careful paradigm design, psychophysical and EEG analyses to determine whether information leakage during decision formation is strategically adjusted to meet changing task demands. Participants made motion direction judgments that required monitoring a continuous stream of dot motion for 'response periods' characterised by a sustained period of coherent motion in a leftward or rightward direction. Coherence was modulated on a frame-to-frame basis throughout the task furnishing a parametric regressor that could be used to interrogate the longevity of sensory samples in the decision process and their influence on corresponding EEG signals. Participants completed the task under varying conditions of response period length and frequency. Psychophysical kernel analyses suggest that sensory samples had a more short-lived impact on the participants' choices when response periods were rare, suggestive of greater information leakage. When the stimulus perturbations were regressed against the EEG data, it highlighted a centro-parietal component that showed increased responsiveness to large shifts in evidence when those shifts were more rare, suggestive of a role in representing surprise. An additional triphasic component was found to correlate with the time constant of integration as estimated from the kernel analyses.

This is a very timely paper that addresses an important and difficult-to-address question in the decision-making field - the degree to which information leakage can be strategically adapted to optimise decisions in a task-dependent fashion. The authors apply a sophisticated suite of analyses that are appropriate and yield a range of very interesting observations. The paper centres on analyses of one possible model that hinges on certain assumptions about the nature of the decision process for this task which raises questions about whether leak adjustments are the only possible explanation for the current data. I think the conclusions would be greatly strengthened if they were supported by the application and/or simulation of alternative model structures.

The behavioural trends when comparing blocks with frequent versus rare response periods seem difficult to tally with a change in the leak. The greater leak should result in a reduction in the rate of false alarms yet no significant differences were observed between these two conditions. Meanwhile, false alarms did vary as a function of short/long target durations which did not show any leak effect in the psychophysical kernel analyses. Are there other models that could reproduce such effects? For example, could a model in which the drift rate varies between Rare and Frequent trials do a similar or better job of explaining the data? This ties in to a related query about the nature of the task employed by the authors. Due to the very significant volatility of the stimulus, it seems likely that the participants are not solely making judgments about the presence/absence of coherent motion but also making judgments about its duration (because strong coherent motion frequently occurs in the inter-target intervals). If that is so, then could the Rare condition equate to less evidence because there is an increased probability that an extended period of coherent motion could be an outlier generated from the noise distribution? Note that a drift rate reduction would also be expected to result in fewer hits and slower reaction times, as observed.

Some adjustment of the language used when discussing FAs seems merited. If I have understood correctly, the sensory samples encountered by the participants during the inter-response intervals can at times favour a particular alternative just as strongly (or more strongly) than that encountered during the response interval itself. In that sense, the responses are not necessarily real false alarms because the physical evidence itself does not distinguish the target from the non-target. I don't think this invalidates the authors' approach but I think it should be acknowledged and considered in light of the comment above regarding the nature of the decision process employed on this task.

The authors report that preparatory motor activity over central electrodes reached a larger decision threshold for RARE vs. FREQUENT response periods. It is not clear what identifies this signal as reflecting motor preparation. Did the authors consider using other effector-selective EEG signatures of motor preparation such as beta-band activity which has been used elsewhere to make inferences about decision bounds? Assuming that this central ERP signal does reflect the decision bounds, the observation that it has a larger amplitude at the response on Rare trials appears to directly contradict the kernel analyses which suggest no difference in the cumulative evidence required to trigger commitment.

P11, the "absolute sensory evidence" regressor elicited a triphasic potential over centroparietal electrodes. The first two phases of this component look to have an occipital focus. The third phase has a more centroparietal focus but appears markedly more posterior than the change in evidence component. This raises the question of whether it is safe to assume that they reflect the same process.

Reviewer #2 (Public Review):

In this manuscript, Ruesseler and colleagues use a continuous task to examine how neural correlates of decision-making change when subjects face conditions with different durations and frequencies of occurrence of signals embedded in noise. The authors develop a novel task where subjects must report the direction of relatively sustained (3 or 5 s) signal changes in average coherence of a random dot kinematogram that are intermittent among relatively transient noise fluctuations (<1 s) of motion coherence that is continuous. Subjects adjust their behavior to changes in the duration of signal events and the frequency of their occurrence. The authors estimate a decay time constant of leaky integration of evidence based on the average coherence leading up to decision responses. Interestingly, there is considerable inter-subject variability in decay time constants even under identical conditions. In addition, the average time constants are shorter when signal periods occur more frequently as opposed to when they are more rare. The authors use EEG to find that a component of the Centroparietal Positivity (CPP) regressed to the magnitude of changes in the noise coherence is larger in conditions when the signal periods occur less frequently. Using a control condition, the authors show that this component of the CPP is not simply based on surprise because it is smaller for changes in motion coherence in irrelevant directions with matched statistics as the changes in relevant directions. The authors also find that a different component of the CPP related to the magnitude of the motion coherence co-varies with the inter-subject variability in decay time constants estimated from behavior.

Overall, the authors use a clever experimental design and approach to tackle an important set of questions in the field of decision-making. The manuscript is easy to follow with clear writing. The analyses are well thought-out and generally appropriate for the questions at hand. From these analyses, the authors have a number of intriguing results. So, there is considerable potential and merit in this work. That said, I have a number of important questions and concerns that largely revolve around putting all the pieces together. I describe these below.

- Quite sensibly, the authors hypothesize that the "decay time constant" for past evidence and the "decision threshold" would be altered between the different task conditions. They find clear and compelling evidence of behavioral alterations with the conditions. They also have a method to estimate the decay time constant. However, it is unclear to what extent the decision threshold is changing between subjects and conditions, how that might affect the empirical integration kernel, and how well these two factors can together explain the overall changes in behavior.

To be more specific, the authors state that the lower false alarm rates and slower reaction times for the LONG condition are consistent with a more cautious response threshold for LONG. The empirical integration kernels lead to the suggestion that the decay time constant is not changing between SHORT and LONG, while it is changing between FREQUENT and RARE. Does the lack of change in false alarm rate between FREQUENT and RARE imply no change in the decision threshold? Is this consistent with the behavior shown in Figure 2? I would expect that less decay in RARE would have led to more false alarms, higher detection rates, and faster RTs unless the decision threshold also increased (or there was some other additional change to the decision process). The CPP for motor preparatory activity reported in Fig. 5 is also potentially consistent with a change in the decision threshold between RARE and FREQUENT. If the decision threshold is changing, how would that affect the empirical integration kernel? These are important questions on their own and also for interpreting the EEG changes.

- The authors find an interesting difference in the CPP for the FREQUENT vs RARE conditions where they also show differences in the decay time constant from the empirical integration kernel. As mentioned above, I'm wondering what else may be different between these conditions. Do the authors have any leverage in addressing whether the decision threshold differs? What about other factors that could be important for explaining the CPP difference between conditions? Big picture, the change in CPP becomes increasingly interesting the more tightly it can be tied to a particular change in the decision process.

I'll note that I'm also somewhat skeptical of the statements by the authors that large shifts in evidence are less frequent in the RARE compared to FREQUENT conditions (despite the names) - a central part of their interpretation of the associated CPP change. The FREQUENT condition obviously has more frequent deviations from the baseline, but this is countered to some extent by the experimental design that has reduced the standard deviation of the coherence for these response periods. I think a calculation of overall across-time standard deviation of motion coherence between the RARE and FREQUENT conditions is needed to support these statements, and I couldn't find that calculation reported. The authors could easily do this, so I encourage them to check and report it.

- The wide range of decay time constants between subjects and the correlation of this with another component of the CPP is also interesting. However, in trying to interpret this change in CPP, I'm wondering what else might be changing in the inter-subject behavior. For instance, it looks like there could be up to 4-fold changes in false alarm rates. Are there other changes as well? Do these correlate with the CPP? Similar to my point above, the changes in CPP across subjects become increasingly interesting the more tightly they can be tied to a particular difference in subject behavior. So, I would encourage the authors to examine this in more depth.
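
The across-time standard deviation comparison requested above (for RARE versus FREQUENT) could be run along the following lines. This is only a sketch: the real check would use the coherence traces actually presented to subjects, whereas here a hypothetical generator (`simulate_coherence`) with made-up frame counts, period counts, and standard deviations stands in for them. Only the final `across_time_sd` call reflects the calculation itself.

```python
import random
import statistics


def simulate_coherence(n_frames, n_periods, period_len,
                       baseline_sd, period_sd, period_mean, seed):
    """Hypothetical stand-in for a block's coherence trace.

    Zero-mean noise with evenly spaced 'response periods' whose mean is
    +/- period_mean. All parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)
    trace = [rng.gauss(0.0, baseline_sd) for _ in range(n_frames)]
    spacing = n_frames // n_periods
    for k in range(n_periods):
        start = k * spacing + (spacing - period_len) // 2
        sign = rng.choice([-1.0, 1.0])
        for t in range(start, start + period_len):
            trace[t] = rng.gauss(sign * period_mean, period_sd)
    # Coherence is bounded, so clamp every sample to [-1, 1].
    return [max(-1.0, min(1.0, c)) for c in trace]


def across_time_sd(trace):
    """Standard deviation of coherence over all frames of a block."""
    return statistics.pstdev(trace)


# Assumed block layouts: same length, more response periods in FREQUENT.
frequent = simulate_coherence(30000, 30, 300, 0.5, 0.3, 0.5, seed=1)
rare = simulate_coherence(30000, 10, 300, 0.5, 0.3, 0.5, seed=1)

print("FREQUENT SD:", across_time_sd(frequent))
print("RARE SD:    ", across_time_sd(rare))
```

Which condition comes out with the larger value depends entirely on the true stimulus statistics, which is why the calculation needs to be done on the actual traces rather than inferred from the condition names.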

-

Reviewer #3 (Public Review):

The authors design a novel continuous evidence accumulation task to look at neural and behavioral adaptations to continuously changing evidence. They focus in particular on the centroparietal EEG potential that has previously been linked with evidence accumulation. This paper provides a novel method and analysis to investigate evidence accumulation in a continuous task set-up.

I am not familiar with either the EEG or the evidence accumulation literature, and therefore cannot comment on the strength of the findings related to centroparietal EEG in evidence accumulation. I have therefore commented only on the coherence and details of the method and the clarity of the argumentation and results.

The main strength is in the task design which is novel and provides an interesting approach to studying continuous evidence accumulation. Because of the continuous nature of the task, the authors design new ways to look at behavioral and neural traces of evidence. The reverse-correlation method looking at the average of past coherence signals enables us to characterize the changes in signal leading to a decision bound and its neural correlate.
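
For readers unfamiliar with the reverse-correlation idea described above, it can be sketched as a response-triggered average of the coherence trace. This is an illustration under assumptions, not the authors' pipeline: the function name, the per-frame indexing, and the sign-flipping by response direction are all hypothetical choices.

```python
def response_triggered_average(trace, response_frames, directions, n_lags):
    """Average the coherence in the n_lags frames preceding each response.

    trace           : coherence value per frame
    response_frames : frame index at which each response occurred
    directions      : +1 / -1 per response, used to sign-flip the segment
                      so that evidence 'towards the choice' is positive
    Responses with fewer than n_lags preceding frames are skipped.
    """
    segments = []
    for frame, direction in zip(response_frames, directions):
        if frame >= n_lags:
            segment = trace[frame - n_lags:frame]
            segments.append([direction * c for c in segment])
    if not segments:
        raise ValueError("no response has enough preceding frames")
    n = len(segments)
    return [sum(seg[i] for seg in segments) / n for i in range(n_lags)]


# Toy example: a ramping trace and two responses in opposite directions.
toy_trace = [float(i) for i in range(10)]
kernel = response_triggered_average(toy_trace, [5, 8], [1, -1], n_lags=3)
print(kernel)
```

The last element of the returned list is the frame immediately before the response, so the kernel reads left to right from the more distant past towards the decision.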

By varying the frequency and length of the so-called response period that the participants have to identify, the method potentially offers rich opportunities to the wider community to look at various aspects of decision-making under sensory uncertainty.

The main weaknesses that I see lie in the description and rigor of the method. The authors refer multiple times to the time constant of the exponential fit to the signal before the decision, but they provide neither a rigorous method for its calculation nor a description of the goodness of fit. The variable names seem to change throughout the text, which makes the argumentation confusing to the reader. The figure captions are incomplete and lack clarity.
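
To make concrete what a documented time-constant estimate with a goodness-of-fit measure might look like, here is a minimal sketch. It assumes a kernel of the form A * exp(-lag / tau) and uses a log-linear least-squares fit with R² computed on the untransformed kernel; this is a stand-in technique and not necessarily the procedure used in the paper. The function names and the synthetic kernel are hypothetical.

```python
import math


def fit_exponential(lags, kernel):
    """Fit kernel ~ A * exp(-lag / tau) by least squares on log(kernel).

    Only strictly positive kernel values are used, since the log is
    undefined otherwise. Returns (A, tau).
    """
    pts = [(t, math.log(k)) for t, k in zip(lags, kernel) if k > 0]
    n = len(pts)
    mean_t = sum(t for t, _ in pts) / n
    mean_y = sum(y for _, y in pts) / n
    sxx = sum((t - mean_t) ** 2 for t, _ in pts)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in pts)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_t
    return math.exp(intercept), -1.0 / slope


def r_squared(lags, kernel, amplitude, tau):
    """Coefficient of determination of the fitted curve on the raw kernel."""
    pred = [amplitude * math.exp(-t / tau) for t in lags]
    mean_k = sum(kernel) / len(kernel)
    ss_res = sum((k - p) ** 2 for k, p in zip(kernel, pred))
    ss_tot = sum((k - mean_k) ** 2 for k in kernel)
    return 1.0 - ss_res / ss_tot


# Synthetic, noise-free kernel; lag = seconds before the response.
lags = [0.1 * i for i in range(50)]
kernel = [0.6 * math.exp(-t / 1.5) for t in lags]

amplitude, tau = fit_exponential(lags, kernel)
fit_quality = r_squared(lags, kernel, amplitude, tau)
print(amplitude, tau, fit_quality)
```

With real, noisy kernels a nonlinear fit on the raw values may be preferable, because the log transform overweights small kernel values; reporting R² (or a comparable measure) per subject and condition would address the concern either way.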

The authors claim that the method enables continuous analysis of decision-making and evidence accumulation, which is true. The analysis of the signals that come prior to the decision provides a rich opportunity to characterize the decision bound in this task. The behavioral and neural analyses globally lack clarity and description and thus do not strongly support the claims of the paper. The interpretation of the figures within the figure captions and the lack of a neutral and exhaustive description of what is being shown prevent the claims from being strongly supported.

The continuous nature of the task and the computation of those evidence kernels are valuable methods to look at evidence accumulation that could be of use within the community. However, due to the lack of rigor in the analysis and description of the method, it is hard to know whether the current dataset is under-exploited or whether the choice of the parameters for this set of experiments does not enable stronger claims.

-

Reviewer #1 (Public Review):

Ruesseler and colleagues combine careful paradigm design, psychophysical and EEG analyses to determine whether information leakage during decision formation is strategically adjusted to meet changing task demands. Participants made motion direction judgments that required monitoring a continuous stream of dot motion for 'response periods' characterised by a sustained period of coherent motion in a leftward or rightward direction. Coherence was modulated on a frame-to-frame basis throughout the task, furnishing a parametric regressor that could be used to interrogate the longevity of sensory samples in the decision process and their influence on corresponding EEG signals. Participants completed the task under varying conditions of response period length and frequency. Psychophysical kernel analyses suggest that sensory samples had a more short-lived impact on the participants' choices when response periods were rare, suggestive of greater information leakage. When the stimulus perturbations were regressed against the EEG data, the analysis highlighted a centro-parietal component that showed increased responsiveness to large shifts in evidence when those shifts were rarer, suggestive of a role in representing surprise. An additional triphasic component was found to correlate with the time constant of integration as estimated from the kernel analyses.

This is a very timely paper that addresses an important and difficult-to-address question in the decision-making field - the degree to which information leakage can be strategically adapted to optimise decisions in a task-dependent fashion. The authors apply a sophisticated suite of analyses that are appropriate and yield a range of very interesting observations. The paper centres on analyses of one possible model that hinges on certain assumptions about the nature of the decision process for this task which raises questions about whether leak adjustments are the only possible explanation for the current data. I think the conclusions would be greatly strengthened if they were supported by the application and/or simulation of alternative model structures.

The behavioural trends when comparing blocks with frequent versus rare response periods seem difficult to tally with a change in the leak. A greater leak should result in a reduction in the rate of false alarms, yet no significant differences were observed between these two conditions. Meanwhile, false alarms did vary as a function of short/long target durations, which did not show any leak effect in the psychophysical kernel analyses. Are there other models that could reproduce such effects? For example, could a model in which the drift rate varies between Rare and Frequent trials do a similar or better job of explaining the data? This ties in to a related query about the nature of the task employed by the authors. Due to the very significant volatility of the stimulus, it seems likely that the participants are not solely making judgments about the presence/absence of coherent motion but also making judgments about its duration (because strong coherent motion frequently occurs in the inter-target intervals). If that is so, then could the Rare condition equate to less evidence because there is an increased probability that an extended period of coherent motion could be an outlier generated from the noise distribution? Note that a drift rate reduction would also be expected to result in fewer hits and slower reaction times, as observed.
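
The expectation that leak and threshold jointly set the false alarm rate can be illustrated with a minimal leaky accumulator driven by pure noise. This is a sketch under stated assumptions, not the model fitted in the paper: the update rule (an Euler-Maruyama step of a leaky integrator), the reset to zero after each crossing, the parameter values, and the function name are all chosen for illustration. Because the input contains no signal, every threshold crossing counts as a false alarm.

```python
import random


def count_false_alarms(tau, threshold, n_steps=20000, dt=0.01,
                       noise_sd=1.0, seed=0):
    """Count threshold crossings of a leaky accumulator fed with noise only.

    tau       : decay time constant in seconds (smaller = more leak)
    threshold : symmetric decision bound on the accumulator state
    """
    rng = random.Random(seed)
    x = 0.0
    crossings = 0
    for _ in range(n_steps):
        # Leak pulls the state towards zero; noise is scaled by sqrt(dt).
        x += dt * (-x / tau) + (dt ** 0.5) * rng.gauss(0.0, noise_sd)
        if abs(x) >= threshold:
            crossings += 1
            x = 0.0  # reset after a response
    return crossings


# Same threshold, different leak.
strong_leak = count_false_alarms(tau=0.2, threshold=1.5)
weak_leak = count_false_alarms(tau=5.0, threshold=1.5)

# Same leak, different threshold.
low_bound = count_false_alarms(tau=1.0, threshold=1.0)
high_bound = count_false_alarms(tau=1.0, threshold=3.0)

print("strong vs weak leak:", strong_leak, weak_leak)
print("low vs high bound:  ", low_bound, high_bound)
```

In a model of this kind, a change in leak with no change in false alarms would need a compensating change elsewhere (for example in the bound or in the input gain), which is why simulating alternative model structures would help to discriminate between accounts.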

Some adjustment of the language used when discussing FAs seems merited. If I have understood correctly, the sensory samples encountered by the participants during the inter-response intervals can at times favour a particular alternative just as strongly (or more strongly) than that encountered during the response interval itself. In that sense, the responses are not necessarily real false alarms because the physical evidence itself does not distinguish the target from the non-target. I don't think this invalidates the authors' approach but I think it should be acknowledged and considered in light of the comment above regarding the nature of the decision process employed on this task.

The authors report that preparatory motor activity over central electrodes reached a larger decision threshold for RARE vs. FREQUENT response periods. It is not clear what identifies this signal as reflecting motor preparation. Did the authors consider using other effector-selective EEG signatures of motor preparation such as beta-band activity which has been used elsewhere to make inferences about decision bounds? Assuming that this central ERP signal does reflect the decision bounds, the observation that it has a larger amplitude at the response on Rare trials appears to directly contradict the kernel analyses which suggest no difference in the cumulative evidence required to trigger commitment.

P11, the "absolute sensory evidence" regressor elicited a triphasic potential over centroparietal electrodes. The first two phases of this component look to have an occipital focus. The third phase has a more centroparietal focus but appears markedly more posterior than the change in evidence component. This raises the question of whether it is safe to assume that they reflect the same process.

-
