Dopamine enhances model-free credit assignment through boosting of retrospective model-based inference

Curation statements for this article:

Curated by eLife

Evaluation Summary:

This paper provides behavioral and modeling evidence for the hypothesis that dopamine is involved in the interaction between distinct model-based and model-free control systems. The issue addressed is timely and clinically relevant, and will be of interest to a broad audience interested in dopamine, learning, choice and planning.

(This preprint has been reviewed by eLife. We include the public reviews from the reviewers here; the authors also receive private feedback with suggested changes to the manuscript. Reviewer #3 agreed to share their name with the authors.)

This article has been reviewed by the following group: eLife.

Abstract

Dopamine is implicated in representing model-free (MF) reward prediction errors as well as in influencing model-based (MB) credit assignment and choice. Putative cooperative interactions between MB and MF systems include guidance of MF credit assignment by MB inference. Here, we used a double-blind, placebo-controlled, within-subjects design to test the hypothesis that enhancing dopamine levels boosts the guidance of MF credit assignment by MB inference. In line with this, we found that levodopa enhanced guidance of MF credit assignment by MB inference, without impacting MF and MB influences directly. This drug effect correlated negatively with a dopamine-dependent change in purely MB credit assignment, possibly reflecting a trade-off between these two MB components of behavioural control. Our findings of a dopamine boost in MB inference guidance of MF learning highlight a novel dopaminergic influence on MB-MF cooperative interactions.


Author Response:

Reviewer #3 (Public Review):

This paper reports that levodopa administration to healthy volunteers enhances the guidance of model-free credit assignment (MFCA) by model-based (MB) inference without altering MF and MB learning per se. The issue addressed is fascinating, timely and clinically relevant; the experimental design and analysis strategy (reported previously) are complex, but sophisticated and clever; and the results are tantalizing. They suggest that levodopa boosts model-based instruction about what (unobserved or inferred) state the model-free system might learn about. As such, the paper substantiates the hypothesis that dopamine plays a role specifically in the interaction between distinct model-based and model-free systems. This is really a very valuable contribution, one that my lab and I expect many other labs had already picked up immediately after it appeared as a preprint.

Major strengths include the combination of pharmacology with a substantial sample size, clever theory-driven experimental design and application of advanced computational modeling. The key effect of levodopa on retroactive MF inference is not large, but it is substantiated by both model-agnostic and model-informed analyses, and the primary conclusion is therefore supported by the results.

The paper raises the following questions.

What putative neural mechanism led the authors to predict this selective modulation of the interaction? The introduction states that "Given DA's contribution to both MF and MB systems, we set out to examine whether this aspect of MB-MF cooperation is subject to DA influence." This is vague. For the hypothesis to be plausible, it would need to be grounded in some idea about how the effect would be implemented. Where exactly does dopamine act to elicit an effect on retroactive MB inference, but not MB learning per se? If the mechanism is a modulation of working memory and/or replay itself, then shouldn't that lead to boosting of both MB learning and MB influences on MF learning? Addressing this involves specifying the mechanistic basis of the hypothesis in the introduction, but the question also pertains to the discussion section. Hippocampal replay is invoked, but can the authors clarify why the prefrontal working memory (retrieval) mechanism invoked in the preceding paragraph would not suffice? In any case, wouldn't an effect of dopamine on replay also alter MB choice/planning?

In sum, we agree with this criticism and have now revised the relevant intro paragraph (p. 3/4).

We now discuss DAergic manipulation of replay in particular (p. 24). We infer that a component of an MB influence over choice comes from the way it trains a putative MF system (something explicitly modelled in Mattar & Daw, 2018, and in a new preprint from Antonov et al., 2021, referencing data from Eldar et al., 2020), and consider what happens if this is boosted by DA manipulations. The difference between the standard two-step task and the present task is that in our task there is extra work for the MB system, which must perform inference to resolve uncertainty for MFCA. We later suggest that the anticorrelation we found between the effect of DA on MB influence over choice and on MB guidance of MFCA arises from this extra work.

The broader questions raised about (prefrontal) working memory and (hippocampal) replay pertain to recent and ongoing work, and we feel they should be part of the discussion, which we have rewritten to describe more clearly the different possible mechanistic explanations and how they might be tested in the future (p. 23/24).

A second issue is that the critical drug effects seem somewhat marginally significant, and the key plots (e.g. Fig 3b and Fig 4b,c, but also other plots) do not visualize the relevant variability in the drug effect. I would recommend plotting differences between levodopa and placebo, allowing readers to appreciate the relevant individual variability in the drug effects.

We have now replotted the data in the new Figures 4 and 5 to reflect drug-related variability.

Third, I do wonder how to reconcile the lack of a drug x common reward effect (the lack of a dopamine effect on MF learning) as well as the lack of a drug effect on choice generalization with the long literature on dopamine and MF reinforcement and newer literature on dopamine effects on MB learning and inference. The authors mention this in the discussion, but do not provide an account. Can they elaborate on what makes these pure MB and MF metrics here less sensitive than in various other studies, and/or what are the implications of the lack of these effects for our understanding of dopamine's contributions to learning?

Regarding a lack of a drug effect on MF learning or control, we now elaborate on this on p. 22/23:

“With respect to our current task, and an established two-step task designed to dissociate MF and MB influences (Daw et al., 2011), there is as yet no compelling evidence for a direct impact of DA on MF learning or control (Deserno et al., 2015a; Kroemer et al., 2019; Sharp et al., 2016; Wunderlich et al., 2012). Our novel task and the two-step task share dynamically changing reward contingencies. As MF learning is by definition incremental, slowly accumulating reward value over extended time periods, it follows that dynamic reward schedules may reduce sensitivity to changes in MF processes (see Doll et al., 2016 for discussion). In line with this, experiments in humans indicate that value-based choices performed without feedback-based learning (for reviews see Maia & Frank, 2011; Collins and Frank, 2014), as well as learning in stable environments (Pessiglione et al., 2006), are susceptible to DA drug influences (or genetic proxies thereof), as expected under an MF RL account. Thus, the impact of DA-boosting agents may vary as a function of contextual task demands. This resonates with features of our pharmacological manipulation using levodopa, which acts primarily on presynaptic synthesis. Thus, rather than necessarily altering phasic DA release directly, levodopa affects baseline storage (Kumakura and Cumming, 2009), likely reflected in overall DA tone. DA tone is proposed to encode the average environmental reward rate (Mohebi et al., 2019; Niv et al., 2007), a putative environmental summary statistic that might in turn influence arbitration between behavioural control strategies according to environmental demands (Cools, 2019).”

As the reviewer also points out, we did not find an effect of levodopa on MB influences per se in the present task, and we now discuss this on p. 22:

“In this context, a primary drug effect on prefrontal DA might boost purely MB influences. However, we found no such influence at the group level, unlike that seen previously in tasks that used only a single measure of MB influences (Sharpe et al., 2017; Wunderlich et al., 2012). Our novel task systematically separates two MB processes: guidance of MFCA by MB inference and pure MB control. While only one of these, namely guidance of MFCA by MB inference, was sensitive to enhancement of DA levels at the group level, we did detect a negative correlation between the DA drug effects on MB guidance of MFCA and on pure MBCA. One explanation is that a DA-dependent enhancement of pure MB influences was masked by this boosting of the guidance of MFCA by MB inference. In this regard, our data are suggestive of between-subject heterogeneity in the effects of boosting DA on distinct aspects of MB influences.”

Another open question is why different task conditions (guidance of MFCA by MB inference vs. pure MB control) apparently differ in their sensitivity to the drug manipulation. We discuss this (p. 22), proposing that a cost-benefit trade-off might play an important role (Westbrook et al., 2020).

Fourth, the correlation between WM and the drug effect on preferential MBCA for the non-informative, but not the informative, destination is really quite small, and while I understand that WM should be associated with preferential MBCA under placebo, it is not clear why the authors specifically predict that WM predicts a dopa effect on this metric, rather than, for example, the metric taken under placebo.

Our initial reasoning was that MFCA based on reward at the non-informative destination should be particularly sensitive to WM, because the reward is no longer perceptually available once state uncertainty can be resolved by the MB system. However, we agree with the reviewer that this reasoning does not indicate why WM should specifically affect the drug-induced change. In light of this critique, we have removed this part from the abstract, introduction and main results, but still report the relation to WM as an exploratory analysis in Appendix 1 (p. 44/45, subheading “Drug effect on guidance of MFCA and working memory”, Appendix 1 - Figure 11), as suggested in the editor’s summary.

A fifth issue is that I am not quite convinced about the negative link between dopamine's effects on MBCA and on PMFCA. The rationale for including WM, informativeness as well as DA effects on MBCA in the model of DA effects on PMFCA wasn't clear to me. The reported correlation is statistically quite marginal, and given that it was probably not the first one tested and given the multiple factors involved, I am somewhat concerned about the degree to which this reflects overfitting. I also find the pattern of effects rather difficult to make sense of: in high WM individuals, the drug-effects on PMFCA and MBCA are negatively related for informative and non-informative destinations. In low WM individuals, the drug-effects on PMFCA and MBCA are negatively related for informative, but not non-informative destinations. It is unclear to me how this pattern leads to the conclusion that there is a tradeoff between PMFCA and MBCA. And even if so, why would this be the case? It would be relevant to report the simple effects, that is the pattern of correlations under placebo separately from those under ldopa.

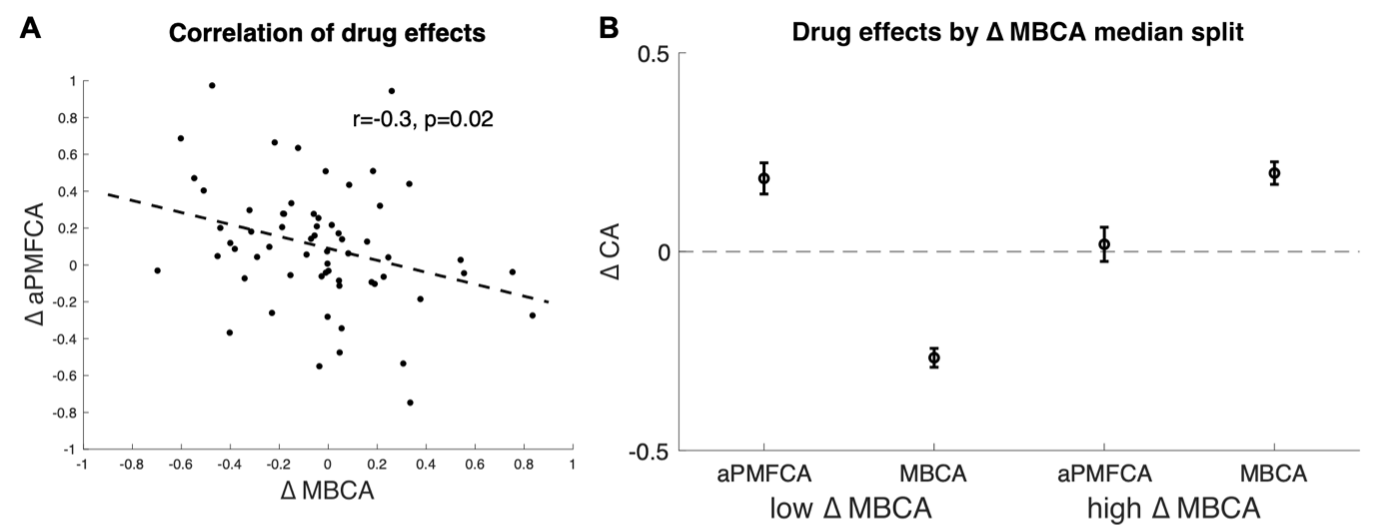

The reviewer’s critique is well taken. Because we had reported a working memory finding in the preceding section of the initial manuscript, we reasoned that it was necessary to include WM in this model as well. We still consider this analysis of inter-individual differences in drug effects across task conditions important, because it connects our current work to previous work linking DA to MB control. However, we now perform a simplified analysis that leaves out WM and instead averages PMFCA across informative and non-informative destinations, since we had no prior hypothesis that these conditions should differ (p. 19/20). This results in a significant negative correlation between the drug-related changes in average PMFCA and in MB control (Figure 6A; r=-.31, p=.02; Pearson r=-.30, p=.017; Spearman r=-.33, p=.009). In addition, we ran extended simulations to verify that this negative correlation does not result from correlations among model parameters (see Appendix 1 - Figure 10 for a control analysis verifying that the negative correlation survives controlling for parameter trade-offs).

Figure 6. Inter-individual differences in drug effects in MBCA and in preferential MFCA, averaged across informative and non-informative destinations (aPMFCA). A) Scatter plot of the drug effects (levodopa minus placebo; ∆ aPMFCA, ∆ MBCA). Dashed regression line and r Pearson correlation coefficient. B) Drug effects in credit assignment (∆ CA) based on a median split on ∆ MBCA. Error bars correspond to SEM reflecting variability between participants.

As suggested by the reviewer, we unpack this correlation further (p. 19/20) by taking the median of Δ MBCA (-0.019) and splitting the sample into lower- and higher-median groups. The higher-median group showed a positive (M= 0.197, t(30)= 4.934, p<.001) and the lower-median group a negative (M= -0.267, t(30)= -7.97, p<.001) drug effect on MBCA (Figure 6B). In a mixed-effects model (see Methods), we regressed aPMFCA on drug and a group indicator for the lower/higher-median Δ MBCA groups. This revealed a significant drug x Δ MBCA-split interaction (b=-0.17, t(120)=-2.05, p=0.042). In the negative Δ MBCA group (Figure 6B), a significantly positive drug effect on aPMFCA was detected (simple effect: b=.18, F(1,120)=10.35, p=.002), while in the positive Δ MBCA group the drug-dependent change in aPMFCA was not significant (Figure 6B, simple effect: b=.02, F(1,120)=0.10, p=.749).
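The median-split step described above can be sketched in a few lines. This is a minimal illustration only, not the authors' analysis code: it assumes that each participant's drug effect is computed as levodopa minus placebo (as in Figure 6), uses hypothetical helper names (`drug_effect`, `median_split`, `one_sample_t`) and toy numbers rather than study data, and reports only the one-sample t statistic against zero, omitting p-values and the mixed-effects model.

```python
import math
import statistics


def drug_effect(levodopa, placebo):
    """Per-participant drug effect (levodopa minus placebo), e.g. Delta MBCA."""
    return [l - p for l, p in zip(levodopa, placebo)]


def median_split(deltas):
    """Split participants at the median of their drug effects.

    Returns (median, lower_group, higher_group). Values equal to the median
    go to the lower group; this tie rule is a hypothetical convention.
    """
    med = statistics.median(deltas)
    lower = [d for d in deltas if d <= med]
    higher = [d for d in deltas if d > med]
    return med, lower, higher


def one_sample_t(values):
    """One-sample t statistic against zero, with its degrees of freedom."""
    n = len(values)
    se = statistics.stdev(values) / math.sqrt(n)
    return statistics.mean(values) / se, n - 1


# Hypothetical toy data for four participants (not data from the study).
placebo = [0.0, 0.0, 0.0, 0.0]
levodopa = [1.0, 2.0, -1.0, -2.0]

deltas = drug_effect(levodopa, placebo)
med, lower, higher = median_split(deltas)
t_high, df_high = one_sample_t(higher)
print(med, lower, higher)  # 0.0 [-1.0, -2.0] [1.0, 2.0]
print(round(t_high, 3), df_high)  # 3.0 1
```

With 62 participants and no ties at the median, such a split yields two groups of 31, which matches the t(30) degrees of freedom reported for each group.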

We have changed the respective section of the results accordingly (p. 19/20). Further, we have motivated this exploratory analysis more clearly in the introduction (p. 3/4) in terms of it providing a link to previous relevant studies (Deserno et al., 2015a; Groman et al., 2019; Sharp et al., 2016; Wunderlich et al., 2012). Lastly, we have endeavoured to improve the discussion on this (p. 21/22).

More generally, I would recommend that the authors refrain from putting too much emphasis on these between-subject correlations. A simple power calculation indicates that the sample size needed to detect a realistically small-to-medium between-subject effect (one that interacts with all kinds of within-subject factors) is in any case much larger than the sample size in this study.

We agree with this and have, as mentioned above, substantially adjusted the section on inter-individual differences. We have moved the WM analysis to Appendix 1 (p. 44/45, subheading “Drug effect on guidance of MFCA and working memory”, Appendix 1 - Figure 11) and greatly simplified the analysis of inter-individual differences in drug effects (see previous paragraph). We also mention the overall small to moderate effects in the limitations section (p. 25/26).

Another question is how worried we should be that the critical MB guidance of MFCA effect was not observed under placebo (Figure 3b). I realize that the computational model-based analyses do speak to this issue, but here I had some questions too. Are the results from the model-informed and model-agnostic analyses otherwise consistent? Model-agnostic analyses reveal a greater effect of levodopa on the informative destination for ghost-nominated than for ghost-rejected trials, and no effect for the non-informative destination. Conversely, model-informed analyses reveal a nomination effect of levodopa across informative and non-informative trials. This was not addressed, or am I missing something? Regarding the modeling, I am not the best person to evaluate the details of the model comparison, fitting and recovery procedures, but the question that does arise, and that I would make explicit in the current paper, is how this model space, the winning model and the modeling exercise differ (or not) from those in the previous paper by Moran et al. without levodopa administration.

A detailed response to this was provided in reply to point 6 as summarized by the editor; we provide a summary here as well.

Firstly, we clearly indicate discrepancies between our model-agnostic and computational modelling analyses, and acknowledge that such discrepancies may be expected when effects of interest are weak to moderate (p. 25/26, limitations).

Secondly, the results from the computational model are generally statistically stronger, which is not surprising given that they are based on influences from far more trials. We now include a discussion of this in more detail in the section on limitations (p. 25/26).

Thirdly, although the computational model uses a slightly different parameterization from that reported in Moran et al. (2019), it is a formal extension of that model, allowing the strength of effects for informative and uninformative destinations to differ. We now include a reference to this change in parameterization in the limitation section (p. 25/26), and include a more detailed description in Appendix 1 (p. 45-47).

Finally, to test if the current models support our main conclusion from Moran et al. (2019) that retrospective MB inference guides MFCA for both the informative and non-informative destinations, we reanalysed the Moran et al. (2019) data using the current novel models and found converging support, as we now report (Appendix 1 – Figure 8).

Finally, the general story that dopamine boosts model-based instruction about what the model-free system should learn is reminiscent of the previous work showing that prefrontal dopamine alters instruction biasing of reinforcement learning (Doll and Frank) and I would have thought this might deserve a little more attention, earlier on in the intro.

The reviewer is indeed correct and we now reference this line of work (Doll et al., 2009, 2011) in the intro (p. 4).

-



Reviewer #1 (Public Review):

This manuscript presents a study on the effects of dopamine on the interactions between model-based (MB) and model-free (MF) systems. Sixty-two subjects were tested on a variant of the classic two-step task after receiving 150 mg levodopa or placebo, in a double-blind fashion. The results show that dopamine has no effects on MF learning or MB inference. However, dopamine modulates interactions between MB and MF systems, such that MF credit assignment that requires retrospective MB inference is enhanced. Additional analyses using computational modeling confirm these basic behavioral findings. Finally, the authors show that some of the effects are related to working memory.

Strengths

1. The study addresses a timely and interesting question about interactions between MB and MF learning, and how dopamine contributes to this interaction. The experiment is well-designed and rigorous (within-subject, double-blind, placebo-controlled) and the sample is large, leading to robust results.

2. The experimental task is a real strength of the study. It offers a variety of conditions in which the effects of MF credit assignment and MB inference, and the effects of retrospective MB inference on MF credit assignment, can be isolated. In particular, the latter effect is evident in a higher probability of repeating the inferred selected choice option (GN) after a rewarded informative state, relative to the inferred non-selected option (GR). This difference shows a significant group difference (group by GN vs GR interaction). These findings are further backed up by a computational model, revealing no effect of dopamine on either MB or MF learning, but an effect on MF credit assignment guided by retrospective MB inference.

Weaknesses

1. The authors present evidence that dopamine enhances MF credit assignment guided by retrospective MB inference for rewards at informative locations (Figure 3B), but not for non-informative locations (Figure 3D), at least not in the model-agnostic analysis. It is somewhat unclear why dopamine would modulate MF credit assignment guided by retrospective MB inference only in some conditions (although it should be noted that the computational modeling results show no difference between informative and non-informative locations).

2. The authors show that individual differences in the effect of dopamine on MB inference are negatively correlated with the effects of retrospective MB inference on MF credit assignment (depending on working memory). This in itself is a surprising finding which the authors interpret as competition between these two forms of MB inference. However, they do not show an effect of dopamine on MB inference as such, which is also surprising given previous evidence.

3. The logic of the different sets of the analysis is complex. This makes reading the paper a bit complicated and sometimes hard to understand.

-


Reviewer #2 (Public Review):

In this work, Deserno, Moran and others have studied the effects of dopamine on the credit assignment problem in reinforcement learning (RL). In a placebo-controlled within-subject design, the authors tested the effects of levodopa on credit assignment using a task they introduced recently (Moran et al. 2019). There are two types of trials in this task: standard trials and uncertainty trials. In standard trials, participants perform a two-step decision task (similar to Daw et al., 2011, but with important differences), in which multiple choices might be presented as a pair in the first step. In uncertainty trials, participants witness the outcome of a "ghost" choice, and their knowledge of the task allows them to update the value of each choice option (i.e. credit assignment).

This study addresses an important and overlooked problem in the decision neuroscience literature. The pharmacological design and task are well-designed, the manuscript is well-written and analyses sound rigorous. However, I believe, based on these results, that the interpretation of data that dopamine influences credit assignment through MB inference (hence the title and abstract) is not justified.

I believe that the most important effect in this study is the one presented in Figures 3C and 3D, which the authors have called the "Clash condition". In this condition, the same chosen pair as on the preceding uncertainty trial is presented in a standard trial, and subjects are asked to choose between the two options. This is, I believe, the ultimate test trial type in the study, and there is no significant effect of drug in those trials. Looking at Table S2, it seems to me that the authors have done a very good job of increasing the within-subject power for that trial type (mixed-effects df for the clash trials is 4861; the df for the repeat/switch trials is 239 according to Table S2). Related to this point, the authors found no significant effect of DA on credit assignment in their computational modeling analysis.

- I don't think that the authors' statement of Cools' theory in terms of DA synthesis capacity is correct (page 17). I believe the main prediction of that theory, or at least of its recent form, is that the DA effect is baseline-dependent, which is actually quite consistent with what the authors found. Under this theory, WM span is a good "proxy" of baseline dopamine synthesis capacity. I suggest revising that part of the discussion.

- I believe that a note on the implications of this study for dopamine-related psychiatric disorders would be of interest to many readers.

-


Reviewer #3 (Public Review):

This paper reports that levodopa administration to healthy volunteers enhances the guidance of model-free credit assignment (MFCA) by model-based (MB) inference without altering MF and MB learning per se. The issue addressed is fascinating, timely and clinically relevant; the experimental design and analysis strategy (reported previously) are complex, but sophisticated and clever; and the results are tantalizing. They suggest that levodopa boosts model-based instruction about what (unobserved or inferred) state the model-free system might learn about. As such, the paper substantiates the hypothesis that dopamine plays a role specifically in the interaction between distinct model-based and model-free systems. This is really a very valuable contribution, one that my lab and I expect many other labs had already picked up immediately after it appeared as a preprint.

Major strengths include the combination of pharmacology with a substantial sample size, clever theory-driven experimental design and application of advanced computational modeling. The key effect of levodopa on retroactive MF inference is not large, but it is substantiated by both model-agnostic and model-informed analyses, and the primary conclusion is therefore supported by the results.

The paper raises the following questions.

What putative neural mechanism led the authors to predict this selective modulation of the interaction? The introduction states that "Given DA's contribution to both MF and MB systems, we set out to examine whether this aspect of MB-MF cooperation is subject to DA influence." This is vague. For the hypothesis to be plausible, it would need to be grounded in some idea about how the effect would be implemented. Where exactly does dopamine act to elicit an effect on retroactive MB inference, *but not* MB learning per se? If the mechanism is a modulation of working memory and/or replay itself, then shouldn't that lead to boosting of both MB learning and MB influences on MF learning? Addressing this involves specifying the mechanistic basis of the hypothesis in the introduction, but the question also pertains to the discussion section. Hippocampal replay is invoked, but can the authors clarify why the prefrontal working memory (retrieval) mechanism invoked in the preceding paragraph would not suffice? In any case, wouldn't an effect of dopamine on replay also alter MB choice/planning?

A second issue is that the critical drug effects seem somewhat marginally significant, and the key plots (e.g. Fig 3b and Fig 4b,c, but also other plots) do not visualize the relevant variability in the drug effect. I would recommend plotting differences between levodopa and placebo, allowing readers to appreciate the relevant individual variability in the drug effects.

Third, I do wonder how to reconcile the lack of a drug x common reward effect (the lack of a dopamine effect on MF learning) as well as the lack of a drug effect on choice generalization with the long literature on dopamine and MF reinforcement and newer literature on dopamine effects on MB learning and inference. The authors mention this in the discussion, but do not provide an account. Can they elaborate on what makes these pure MB and MF metrics here less sensitive than in various other studies, and/or what are the implications of the lack of these effects for our understanding of dopamine's contributions to learning?

Fourth, the correlation between WM and the drug effect on preferential MBCA for the non-informative, but not the informative, destination is really quite small, and while I understand that WM should be associated with preferential MBCA under placebo, it is not clear why the authors specifically predict that WM predicts a dopa effect on this metric, rather than, for example, the metric taken under placebo.

A fifth issue is that I am not quite convinced about the negative link between dopamine's effects on MBCA and on PMFCA. The rationale for including WM, informativeness as well as DA effects on MBCA in the model of DA effects on PMFCA wasn't clear to me. The reported correlation is statistically quite marginal, and given that it was probably not the first one tested and given the multiple factors involved, I am somewhat concerned about the degree to which this reflects overfitting. I also find the pattern of effects rather difficult to make sense of: in high WM individuals, the drug-effects on PMFCA and MBCA are negatively related for informative and non-informative destinations. In low WM individuals, the drug-effects on PMFCA and MBCA are negatively related for informative, but not non-informative destinations. It is unclear to me how this pattern leads to the conclusion that there is a tradeoff between PMFCA and MBCA. And even if so, why would this be the case? It would be relevant to report the simple effects, that is the pattern of correlations under placebo separately from those under ldopa.

More generally, I would recommend that the authors refrain from putting too much emphasis on these between-subject correlations. A simple power calculation indicates that the sample size needed to detect a realistically small-to-medium between-subject effect (one that interacts with all kinds of within-subject factors) is in any case much larger than the sample size in this study.

Another question is how worried we should be that the critical MB guidance of MFCA effect was not observed under placebo (Figure 3b). I realize that the computational model-based analyses do speak to this issue, but here I had some questions too. Are the results from the model-informed and model-agnostic analyses otherwise consistent? Model-agnostic analyses reveal a greater effect of levodopa on the informative destination for ghost-nominated than for ghost-rejected trials, and no effect for the non-informative destination. Conversely, model-informed analyses reveal a nomination effect of levodopa across informative and non-informative trials. This was not addressed, or am I missing something? Regarding the modeling, I am not the best person to evaluate the details of the model comparison, fitting and recovery procedures, but the question that does arise, and that I would make explicit in the current paper, is how this model space, the winning model and the modeling exercise differ (or not) from those in the previous paper by Moran et al. without levodopa administration.

Finally, the general story that dopamine boosts model-based instruction about what the model-free system should learn is reminiscent of the previous work showing that prefrontal dopamine alters instruction biasing of reinforcement learning (Doll and Frank) and I would have thought this might deserve a little more attention, earlier on in the intro.

-