Mapping Brain-Behavior Space Relationships Along the Psychosis Spectrum

Curation statements for this article:-

Curated by eLife

Evaluation Summary:

The authors assessed multivariate relations between a dimensionality-reduced symptom space and brain imaging features, using a large database of individuals with psychosis-spectrum disorders (PSD). Demonstrating both high stability and reproducibility of their approaches, this work showed a promise that diagnosis or treatment of PSD can benefit from a proposed data-driven brain-symptom mapping framework. It is therefore of broad potential interest across cognitive and translational neuroscience.

(This preprint has been reviewed by eLife. We include the public reviews from the reviewers here; the authors also receive private feedback with suggested changes to the manuscript. The reviewers remained anonymous to the authors.)

This article has been Reviewed by the following groups

Listed in

- Evaluated articles (eLife)

Abstract

Difficulties in advancing effective patient-specific therapies for psychiatric disorders highlight a need to develop a stable neurobiologically-grounded mapping between neural and symptom variation. This gap is particularly acute for psychosis-spectrum disorders (PSD). Here, in a sample of 436 cross-diagnostic PSD patients, we derived and replicated a dimensionality-reduced symptom space across hallmark psychopathology symptoms and cognitive deficits. In turn, these symptom axes mapped onto distinct, reproducible brain maps. Critically, we found that multivariate brain-behavior mapping techniques (e.g. canonical correlation analysis) do not produce stable results. Instead, we show that a univariate brain-behavioral space (BBS) can resolve stable individualized prediction. Finally, we show a proof-of-principle framework for relating personalized BBS metrics with molecular targets via serotonin and glutamate receptor manipulations and gene expression maps. Collectively, these results highlight a stable and data-driven BBS mapping across PSD, which offers an actionable path that can be iteratively optimized for personalized clinical biomarker endpoints.

Author Response:

Evaluation Summary:

The authors assessed multivariate relations between a dimensionality-reduced symptom space and brain imaging features, using a large database of individuals with psychosis-spectrum disorders (PSD). Demonstrating both high stability and reproducibility of their approaches, this work showed a promise that diagnosis or treatment of PSD can benefit from a proposed data-driven brain-symptom mapping framework. It is therefore of broad potential interest across cognitive and translational neuroscience.

We are very grateful for the positive feedback and the careful read of our paper. We would especially like to thank the Reviewers for taking the time to read this lengthy and complex manuscript and for providing their helpful and highly constructive feedback. Overall, we hope the Editor and the Reviewers will find that our responses address all the comments and that the requested changes and edits improved the paper.

Reviewer 1 (Public Review):

The paper assessed the relationship between a dimensionality-reduced symptom space and functional brain imaging features based on the large multicentric data of individuals with psychosis-spectrum disorders (PSD).

The strength of this study is that i) in every analysis, the authors provided high-level evidence of reproducibility in their findings, ii) the study included several control analyses to test other comparable alternatives or independent techniques (e.g., ICA, univariate vs. multivariate), and iii) correlating to independently acquired pharmacological neuroimaging and gene expression maps, the study highlighted neurobiological validity of their results.

Overall the study has originality and several important tips and guidance for behavior-brain mapping, although the paper contains heavy descriptions about data mining techniques such as several dimensionality reduction algorithms (e.g., PCA, ICA, and CCA) and prediction models.

We thank the Reviewer for their insightful comments and we appreciate the positive feedback. Regarding the descriptions of methods and analytical techniques, we have removed these descriptions out of the main Results text and figure captions. Detailed descriptions are still provided in the Methods, so that they do not detract from the core message of the paper but can still be referenced if a reader wishes to look up the details of these methods within the context of our analyses.

Although relatively minor, I also have a few points on weaknesses, including i) an incomplete description of how to distinguish the PSD effects from the normal spectrum, ii) a lack of overarching interpretation for principal components other than the 3rd one, and iii) somewhat expected results in the stability of PC and relevant indices.

We are very appreciative of the constructive feedback and feel that these revisions have strengthened our paper. We have addressed these points in the revision as follows:

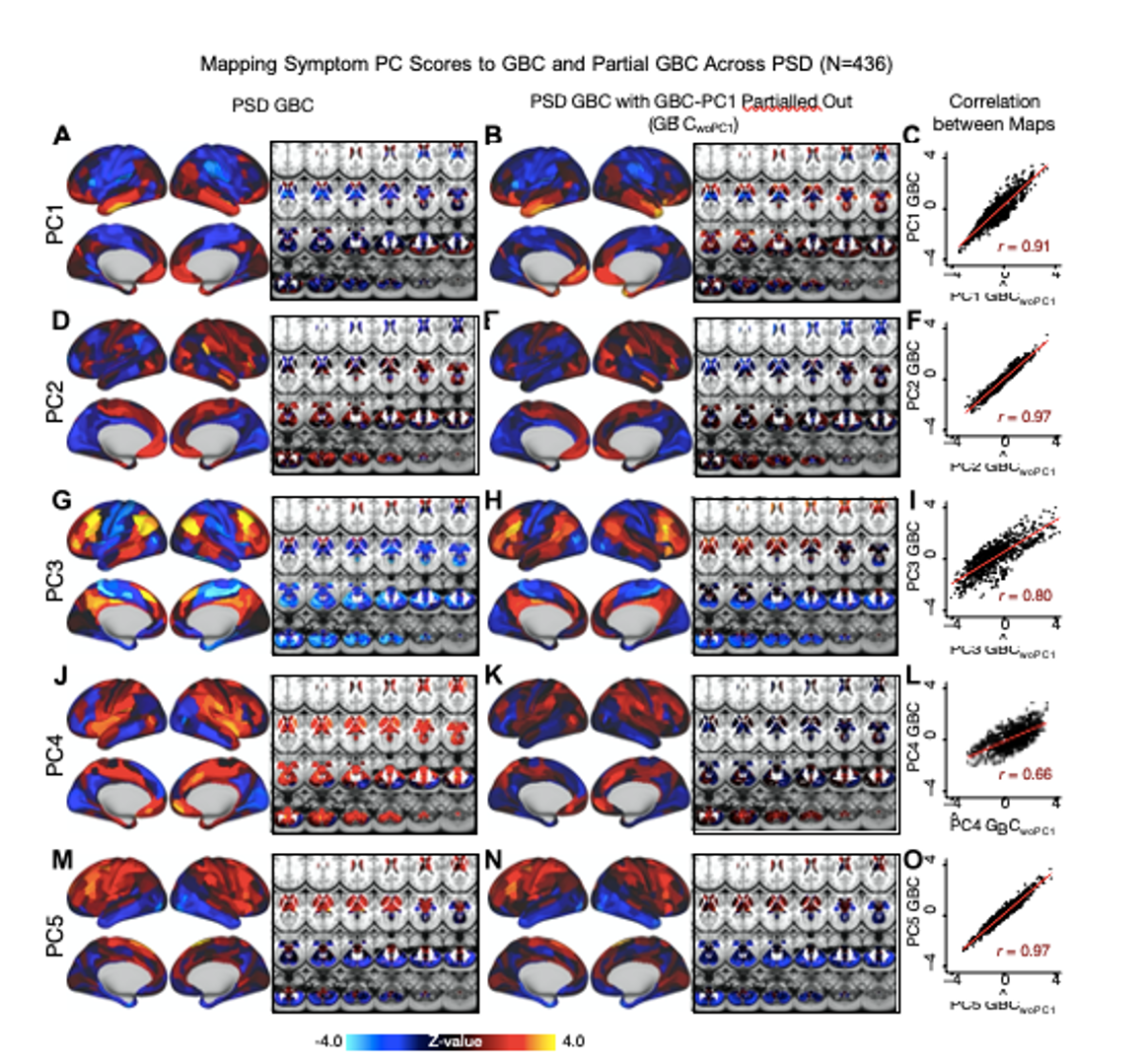

i) We are grateful to the Reviewer for bringing up this point, as it has allowed us to further explore the interesting observation we made regarding shared versus distinct neural variance in our data. It is important not to confuse the neural PCA (i.e. the independent neural features that can be detected in the PSD and healthy control samples) with the neuro-behavioral mapping. In other words, both PSD patients and healthy controls are human, and therefore both cohorts exhibit a number of neural functions that may have nothing to do with the symptom mapping in PSD patients, for instance basic regulatory functions such as control of cardiac and respiratory cycles, motor functions, vision, etc. We therefore hypothesized that there are more common than distinct neural features that are on average shared across humans irrespective of their psychopathology status. Consequently, there may only be a ‘residual’ symptom-relevant neural variance. Therefore, in the manuscript we bring up the possibility that a substantial proportion of neural variance may not be clinically relevant. If this is in fact true, then removing the shared neural variance between PSD and CON should not drastically affect the reported symptom-neural univariate mapping solution, because this common variance does not map to clinical features and is therefore statistically orthogonal.

We have now verified this hypothesis quantitatively and have added extensive analyses to highlight this important observation made by the Reviewer. We first conducted a PCA using the parcellated GBC data from all 436 PSD and 202 CON (a matrix with dimensions 638 subjects x 718 parcels). We will refer to this as the GBC-PCA to avoid confusion with the symptom/behavioral PCA described elsewhere in the manuscript. This GBC-PCA resulted in 637 independent GBC-PCs. Since PCs are orthogonal to each other, we then partialled out the variance attributable to GBC-PC1 from the PSD data by reconstructing the PSD GBC matrix using only scores and coefficients from the remaining 636 GBC-PCs ($\widehat{GBC}_{woPC1}$). We then reran the univariate regression as described in Fig. 3, using the same five symptom PC scores across 436 PSD. The results are shown in Fig. S21 and reproduced below. Removing the first PC of shared neural variance (which accounted for about 15.8% of the total GBC variance across CON and PSD) from PSD data attenuated the statistics slightly (not unexpected, as the variance was by definition reduced) but otherwise did not strongly affect the univariate mapping solution.
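For illustration, the partialling-out procedure described above can be sketched in NumPy. This is a minimal sketch on synthetic random data, not the authors' code: the array names (`gbc_psd`, `gbc_con`, `symptom_scores`) and the helper functions are hypothetical, only the matrix dimensions are taken from the text, and fitting one symptom PC at a time is an assumption about how the univariate regression is set up.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-ins; only the shapes follow the text (436 PSD, 202 CON, 718 parcels).
n_psd, n_con, n_parcels, n_sym_pcs = 436, 202, 718, 5
gbc_psd = rng.standard_normal((n_psd, n_parcels))
gbc_con = rng.standard_normal((n_con, n_parcels))
symptom_scores = rng.standard_normal((n_psd, n_sym_pcs))  # hypothetical symptom PC scores


def remove_leading_pcs(gbc_all, n_remove):
    """Reconstruct the data from all PCs except the first n_remove."""
    mean = gbc_all.mean(axis=0)
    centered = gbc_all - mean
    # SVD-based PCA: u * s are the PC scores, rows of vt are the PC coefficients.
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    s_kept = s.copy()
    s_kept[:n_remove] = 0.0  # drop the leading components
    return (u * s_kept) @ vt + mean, s


def univariate_betas(gbc, scores):
    """OLS slope of each parcel's GBC on each symptom score, one score at a time."""
    gbc_c = gbc - gbc.mean(axis=0)
    betas = np.empty((scores.shape[1], gbc.shape[1]))
    for k in range(scores.shape[1]):
        x = scores[:, k] - scores[:, k].mean()
        betas[k] = x @ gbc_c / (x @ x)
    return betas


# PCA across PSD and CON together, then keep the PSD rows of the reconstruction.
gbc_all = np.vstack([gbc_psd, gbc_con])
gbc_wo_pc1_all, singular_values = remove_leading_pcs(gbc_all, n_remove=1)
gbc_wo_pc1_psd = gbc_wo_pc1_all[:n_psd]

betas_orig = univariate_betas(gbc_psd, symptom_scores)
betas_wo_pc1 = univariate_betas(gbc_wo_pc1_psd, symptom_scores)

# Correlation across parcels between original and reduced maps, one value per symptom PC.
map_similarity = np.array([
    np.corrcoef(betas_orig[k], betas_wo_pc1[k])[0, 1] for k in range(n_sym_pcs)
])
variance_explained_pc1 = singular_values[0] ** 2 / np.sum(singular_values ** 2)
```

Raising `n_remove` to 2, 3, or 4 gives the analogous comparison with more shared components removed. On random data the leading component carries little variance, so the values produced here say nothing about the reported 15.8%.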

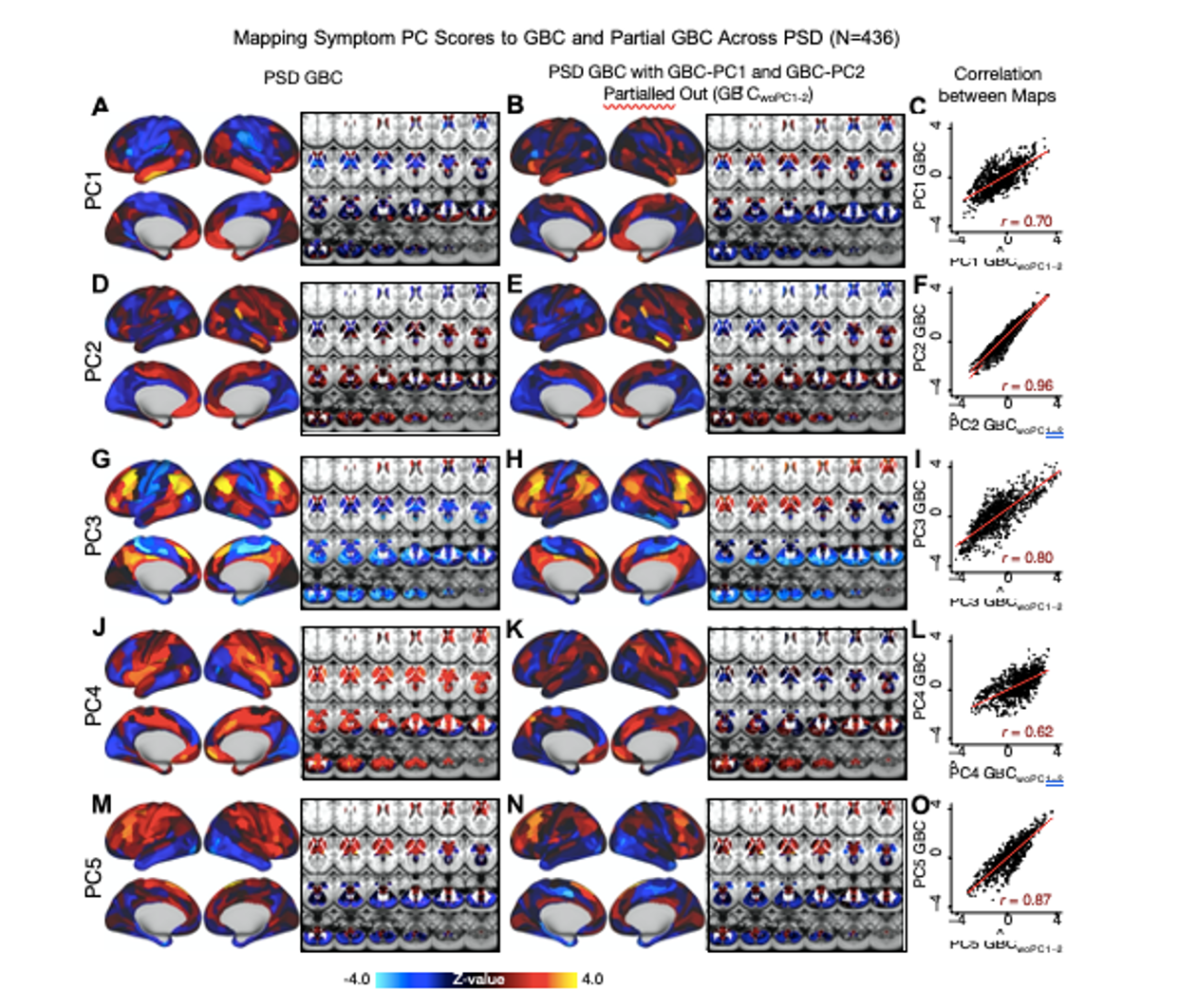

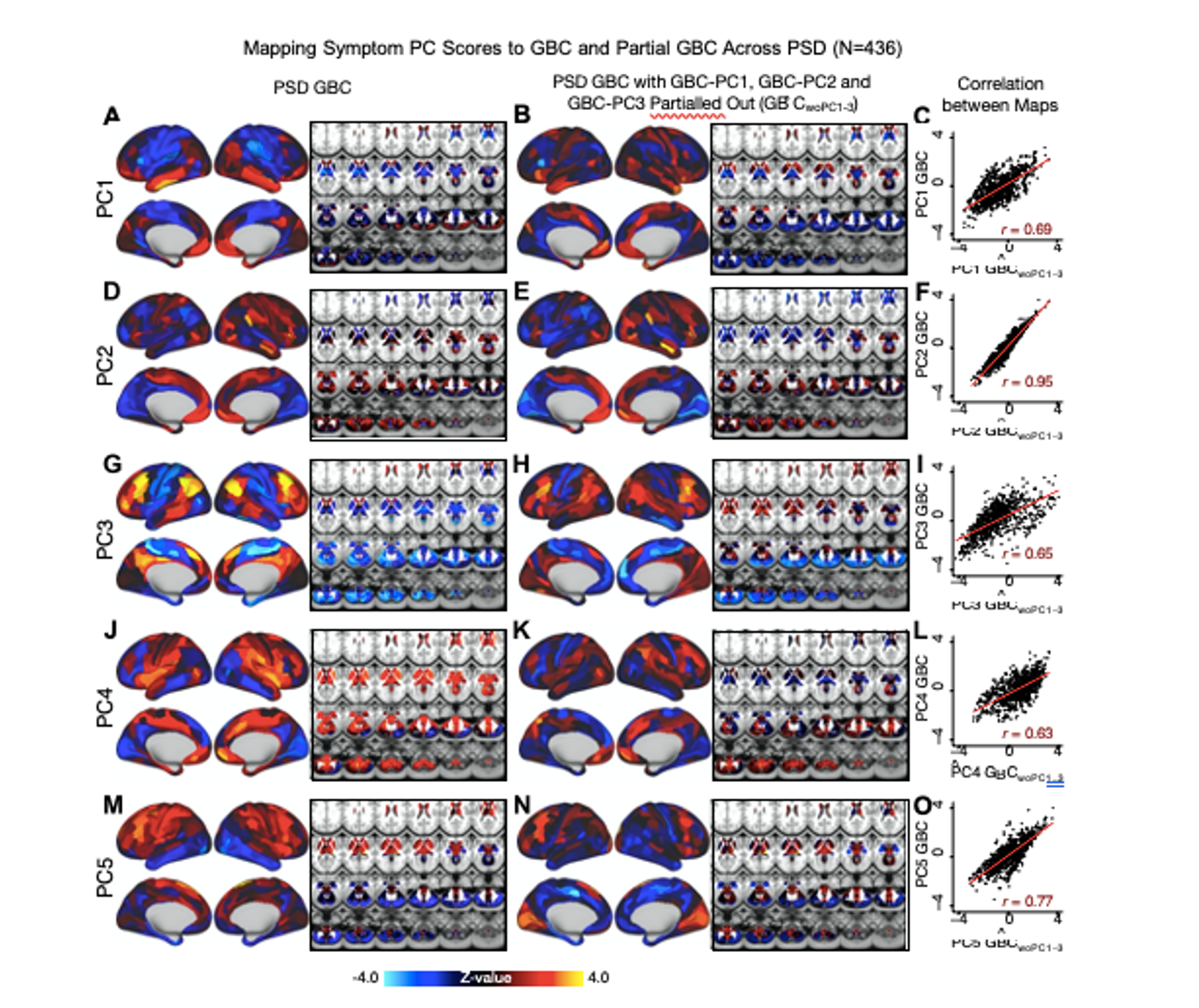

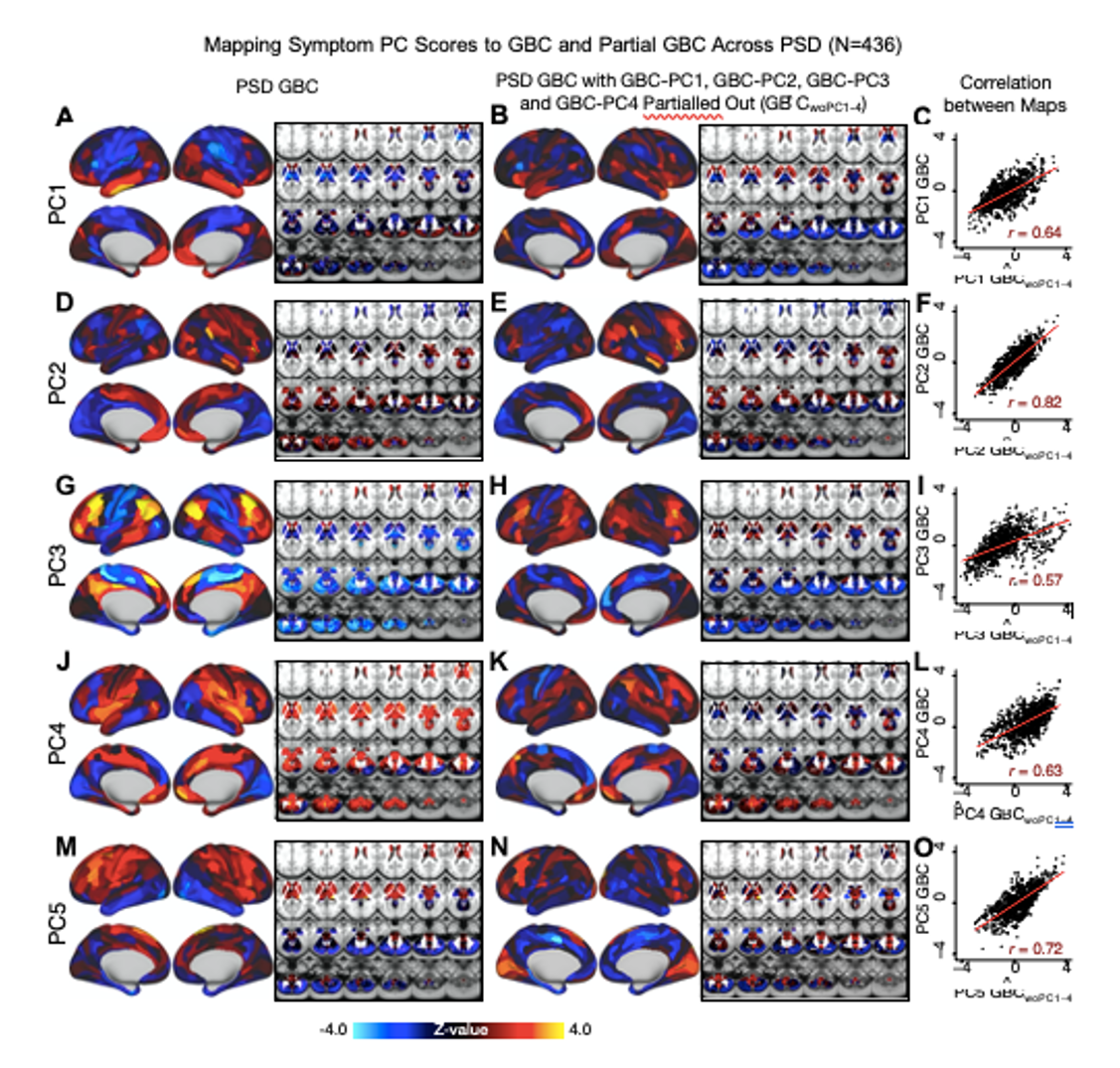

We next repeated the symptom-neural regression with the first 2 GBC-PCs partialled out of the PSD data (Fig. S22), with the first 3 PCs parsed out (Fig. S23), and with the first 4 neural PCs parsed out (Fig. S24). The symptom-neural maps remain fairly robust, although the similarity with the original $\beta_{PC}GBC$ maps does drop as more common neural variance is parsed out. These figures are also shown below:

Fig. S21. Comparison between the PSD $\beta_{PC}GBC$ maps computed using GBC and GBC with the first neural PC parsed out. If a substantial proportion of neural variance is not clinically relevant, then removing the shared neural variance between PSD and CON should not drastically affect the reported symptom-neural univariate mapping solution, because this common variance will not map to clinical features. We therefore performed a PCA on CON and PSD GBC to compute the shared neural variance (see Methods), and then parsed out the first GBC-PC from the PSD GBC data ($\widehat{GBC}_{woPC1}$). We then reran the univariate regression as described in Fig. 3, using the same five symptom PC scores across 436 PSD. (A) The $\beta_{PC1}GBC$ map, also shown in Fig. S10. (B) The first GBC-PC accounted for about 15.8% of the total GBC variance across CON and PSD. Removing GBC-PC1 from PSD data attenuated the $\beta_{PC1}GBC$ statistics slightly (not unexpected, as the variance was by definition reduced) but otherwise did not strongly affect the univariate mapping solution. (C) Correlation across 718 parcels between the two $\beta_{PC1}GBC$ maps shown in A and B. (D-O) The same results are shown for $\beta_{PC2}GBC$ to $\beta_{PC5}GBC$ maps.

Fig. S22. Comparison between the PSD $\beta_{PC}GBC$ maps computed using GBC and GBC with the first two neural PCs parsed out. We performed a PCA on CON and PSD GBC and then parsed out the first two GBC-PCs from the PSD GBC data ($\widehat{GBC}_{woPC1-2}$, see Methods). We then reran the univariate regression as described in Fig. 3, using the same five symptom PC scores across 436 PSD. (A) The $\beta_{PC1}GBC$ map, also shown in Fig. S10. (B) The second GBC-PC accounted for about 9.5% of the total GBC variance across CON and PSD. (C) Correlation across 718 parcels between the two $\beta_{PC1}GBC$ maps shown in A and B. (D-O) The same results are shown for $\beta_{PC2}GBC$ to $\beta_{PC5}GBC$ maps.

Fig. S23. Comparison between the PSD $\beta_{PC}GBC$ maps computed using GBC and GBC with the first three neural PCs parsed out. We performed a PCA on CON and PSD GBC and then parsed out the first three GBC-PCs from the PSD GBC data ($\widehat{GBC}_{woPC1-3}$, see Methods). We then reran the univariate regression as described in Fig. 3, using the same five symptom PC scores across 436 PSD. (A) The $\beta_{PC1}GBC$ map, also shown in Fig. S10. (B) The second GBC-PC accounted for about 9.5% of the total GBC variance across CON and PSD. (C) Correlation across 718 parcels between the two $\beta_{PC1}GBC$ maps shown in A and B. (D-O) The same results are shown for $\beta_{PC2}GBC$ to $\beta_{PC5}GBC$ maps.

Fig. S24. Comparison between the PSD $\beta_{PC}GBC$ maps computed using GBC and GBC with the first four neural PCs parsed out. We performed a PCA on CON and PSD GBC and then parsed out the first four GBC-PCs from the PSD GBC data ($\widehat{GBC}_{woPC1-4}$, see Methods). We then reran the univariate regression as described in Fig. 3, using the same five symptom PC scores across 436 PSD. (A) The $\beta_{PC1}GBC$ map, also shown in Fig. S10. (B) The second GBC-PC accounted for about 9.5% of the total GBC variance across CON and PSD. (C) Correlation across 718 parcels between the two $\beta_{PC1}GBC$ maps shown in A and B. (D-O) The same results are shown for $\beta_{PC2}GBC$ to $\beta_{PC5}GBC$ maps.

For comparison, we also computed the $\beta_{PC}GBC$ maps for control subjects, shown in Fig. S11. In support of the $\beta_{PC}GBC$ maps in PSD being circuit-relevant, we observed only mild associations between GBC and PC scores in healthy controls:

Results: All 5 PCs captured unique patterns of GBC variation across the PSD (Fig. S10), which were not observed in CON (Fig. S11). ... Discussion: On the contrary, this bi-directional “Psychosis Configuration” axis also showed strong negative variation along neural regions that map onto the sensory-motor and associative control regions, also strongly implicated in PSD (1, 2). The “bi-directionality” property of the PC symptom-neural maps may thus be desirable for identifying neural features that support individual patient selection. For instance, it may be possible that PC3 reflects residual untreated psychosis symptoms in this chronic PSD sample, which may reveal key treatment neural targets. In support of this circuit being symptom-relevant, it is notable that we observed a mild association between GBC and PC scores in the CON sample (Fig. S11).

ii) In our original submission we spotlighted PC3 because of its pattern of loadings on to hallmark symptoms of PSD, including strong positive loadings across Positive symptom items in the PANSS and, conversely, strong negative loadings on to most Negative items. It was necessary to fully examine this dimension in particular because these are key characteristics of the target psychiatric population, and we found that the focus on PC3 was innovative because it provided an opportunity to quantify a fully data-driven dimension of symptom variation that is highly characteristic of the PSD patient population. Additionally, this bi-directional axis captured shared variance from measures in other traditional symptom factors, such as the PANSS General factor and cognition. This is a powerful demonstration of how data-driven techniques such as PCA can reveal properties intrinsic to the structure of PSD-relevant symptom data, which may in turn improve the mapping of symptom-neural relationships. We refrained from explaining each of the five PCs in detail in the main text as we felt that it would further complicate an already dense manuscript. Instead, we opted to provide the interpretation and data from all analyses for all five PCs in the Supplement. However, in response to the Reviewers’ thoughtful feedback that more focus should be placed on other components, we have expanded the presentation and discussion of all five components (both regarding the symptom profiles and neural maps) in the main text:

Results: Because PC3 loads most strongly on to hallmark symptoms of PSD (including strong positive loadings across Positive symptom measures in the PANSS and strong negative loadings on to most Negative measures), we focus on this PC as an opportunity to quantify an innovative, fully data-driven dimension of symptom variation that is highly characteristic of the PSD patient population. Additionally, this bi-directional symptom axis captured shared variance from measures in other traditional symptom factors, such as the PANSS General factor and cognition. We found that the PC3 result provided a powerful empirical demonstration of how using a data-driven dimensionality-reduced solution (via PCA) can reveal novel patterns intrinsic to the structure of PSD psychopathology.

iii) We felt that demonstrating the stability of the PCA solution was extremely important, given that this degree of rigor has not previously been tested using broad behavioral measures across psychosis symptoms and cognition in a cross-diagnostic PSD sample. Additionally, we demonstrated reproducibility of the PCA solution using independent split-half samples. Furthermore, we derived stable neural maps using the PCA solution. In our original submission we showed that the CCA solution was not reproducible in our dataset. Following the Reviewers’ feedback, we computed the estimated sample sizes needed to sufficiently power our multivariate analyses for stable/reproducible solutions, using the methods in (3). These results are discussed in detail in our resubmitted manuscript and in our response to the Critiques section below.
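As a rough illustration of what a split-half reproducibility check on a PCA solution can look like, the sketch below splits subjects at random, fits a PCA in each half, and compares the loading vectors. It is a hedged example on synthetic data and not the authors' procedure: the number of measures (36), the three planted latent factors, the noise level, and all function names are assumptions made for the example. The data are only mean-centered; z-scoring would be a natural addition when measures are on different scales.

```python
import numpy as np

rng = np.random.default_rng(1)

# Synthetic symptom matrix: 436 subjects (as in the text) x 36 measures (placeholder),
# generated from three planted latent factors of decreasing strength plus noise.
n_subjects, n_measures, n_latent = 436, 36, 3
latent = rng.standard_normal((n_subjects, n_latent)) * np.array([4.0, 2.5, 1.5])
mixing = np.linalg.qr(rng.standard_normal((n_measures, n_latent)))[0].T  # orthonormal rows
symptoms = latent @ mixing + 0.5 * rng.standard_normal((n_subjects, n_measures))


def pca_loadings(data, n_components):
    """Unit-norm loading vectors of the leading principal components."""
    centered = data - data.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return vt[:n_components]


def split_half_similarity(data, n_components, rng):
    """Absolute cosine similarity between matched PC loadings from two random halves."""
    idx = rng.permutation(data.shape[0])
    half = data.shape[0] // 2
    load_a = pca_loadings(data[idx[:half]], n_components)
    load_b = pca_loadings(data[idx[half:]], n_components)
    # The sign of a principal component is arbitrary, hence the absolute value.
    return np.abs(np.sum(load_a * load_b, axis=1))


# Average over repeated random splits to reduce dependence on any single split.
n_splits = 20
similarities = np.array([
    split_half_similarity(symptoms, n_latent, rng) for _ in range(n_splits)
])
mean_similarity = similarities.mean(axis=0)
```

Values of `mean_similarity` near 1 indicate that the same axes are recovered in independent halves. Matching components by rank order, as done here, only works when the components are well separated in variance.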

Reviewer 2 (Public Review):

The work by Ji et al is an interesting and rather comprehensive analysis of the trend of developing data-driven methods for developing brain-symptom dimension biomarkers that bring a biological basis to the symptoms (across PANSS and cognitive features) that relate to psychotic disorders. To this end, the authors performed several interesting multivariate analyses to decompose the symptom/behavioural dimensions and functional connectivity data. To this end, the authors use data from individuals from a transdiagnostic group of individuals recruited by the BSNIP cohort and combine high-level methods in order to integrate both types of modalities. Conceptually there are several strengths to this paper that should be applauded. However, I do think that there are important aspects of this paper that need revision to improve readability and to better compare the methods to what is in the field and provide a balanced view relative to previous work with the same basic concepts that they are building their work around. Overall, I feel as though the work could advance our knowledge in the development of biomarkers or subject level identifiers for psychiatric disorders and potentially be elevated to the level of an individual "subject screener". While this is a noble goal, this will require more data and information in the future as a means to do this. This is certainly an important step forward in this regard.

We thank the Reviewer for their insightful and constructive comments about our manuscript. We have revised the text to make it easier to read and to clarify our results in the context of prior works in the field. We fully agree that a great deal more work needs to be completed before achieving single-subject level treatment selection, but we hope that our manuscript provides a helpful step towards this goal.

Strengths:

- Combined analysis of canonical psychosis symptoms and cognitive deficits across multiple traditional psychosis-related diagnoses offers one of the most comprehensive mappings of impairments experienced within PSD to brain features to date

- Cross-validation analyses and use of various datasets (diagnostic replication, pharmacological neuroimaging) is extremely impressive, well motivated, and thorough. In addition the authors use a large dataset and provide "out of sample" validity

- Medication status and dosage also accounted for

- Similarly, the extensive examination of both univariate and multivariate neuro-behavioural solutions from a methodological viewpoint, including the testing of multiple configurations of CCA (i.e. with different parcellation granularities), offers very strong support for the selected symptom-to-neural mapping

- The plots of the obtained PC axes compared to those of standard clinical symptom aggregate scales provide a really elegant illustration of the differences and demonstrate clearly the value of data-driven symptom reduction over conventional categories

- The comparison of the obtained neuro-behavioural map for the "Psychosis configuration" symptom dimension to both pharmacological neuroimaging and neural gene expression maps highlights direct possible links with both underlying disorder mechanisms and possible avenues for treatment development and application

- The authors' explicit investigation of whether PSD and healthy controls share a major portion of neural variance (possibly present across all people) has strong implications for future brain-behaviour mapping studies, and provides a starting point for narrowing the neural feature space to just the subset of features showing symptom-relevant variance in PSD

We are very grateful for the positive feedback. We would like to thank the Reviewers for taking the time to read this admittedly dense manuscript and for providing their helpful critique.

Critiques:

- Overall I found the paper very hard to read. There are abbreviations everywhere for every concept that is introduced. The paper is methods heavy (which I am not opposed to and quite like). It is clear that the authors took a lot of care in thinking about the methods that were chosen. That said, I think that the organization would benefit from a more traditional Intro, Methods, Results, and Discussion formatting so that it would be easier to parse the Results. The figures are extremely dense and there are often terms that are coined or used that are undefined or poorly defined.

We appreciate the constructive feedback on how to reduce the dense content and on paying more attention to the frequency of abbreviations, which impacts readability. We implemented the strategies suggested by the Reviewer and have moved the Methods section after the Introduction to make the subsequent Results section easier to understand and contextualize. For clarity and length, we have moved methodological details previously in the Results and figure captions to the Methods (e.g. descriptions of dimensionality reduction and prediction techniques). This way, the Methods are now expanded for clarity without detracting from the readability of the core results of the paper. We have also simplified the text in places where there was room for more clarity. To make the numerous abbreviations easier to look up, we have also added a table to the Supplement (Supplementary Table S1).

- One thing I found conceptually difficult is the explicit comparison to the work in the Xia paper from the Satterthwaite group. Is this a fair comparison? The sample is extremely different as it is non-clinical and comes from the general population. Can it be suggested that the groups that are clinically defined here are comparable? Is this an appropriate comparison and standard to make? To suggest that the work in that paper is not reproducible is flawed in this light.

This is an extremely important point to clarify and we apologize that we did not make it sufficiently clear in the initial submission. Here we are not attempting to replicate the results of Xia et al., which we understand were derived in a sample fundamentally different from ours both demographically and clinically, and which tested very different questions. Rather, this paper is just one example out of a number of recent papers which employed multivariate methods (CCA) to tackle the mapping between neural and behavioral features. The key point here is that this approach does not produce reproducible results due to over-fitting, as demonstrated robustly in the present paper. It is very important to highlight that we did not single out any one paper when making this point. In fact, we do not mention the Xia paper explicitly anywhere and we were very careful to cite multiple papers in support of the multivariate over-fitting argument, which is now a well-known issue (4). Nevertheless, the Reviewers make an excellent point here and we acknowledge that while CCA was not reproducible in the present dataset, this does not explicitly imply that the results in the Xia et al. paper (or any other paper for that matter) are not reproducible by definition (i.e. until someone formally attempts to falsify them). We have made this point explicit in the revised paper, as shown below. Furthermore, in line with the provided feedback, we also applied the multivariate power calculator derived by Helmer et al. (3), which quantitatively illustrates the statistical point around CCA instability.

Results: Several recent studies have reported “latent” neuro-behavioral relationships using multivariate statistics (5–7), which would be preferable because they simultaneously solve for maximal covariation across neural and behavioral features. Concerns have emerged about whether such multivariate results will replicate, given the size of the feature space relative to the size of the clinical samples (4). Nevertheless, given the possibility of deriving a stable multivariate effect, here we tested if results improve with canonical correlation analysis (CCA) (8), which maximizes relationships between linear combinations of symptom (B) and neural features (N) across all PSD (Fig. 5A).

Discussion: Here we attempted to use multivariate solutions (i.e. CCA) to quantify symptom and neural feature co-variation. In principle, CCA is well-suited to address the brain-behavioral mapping problem. However, symptom-neural mapping using CCA across either parcel-level or network-level solutions in our sample was not reproducible even when using a low-dimensional symptom solution and parcellated neural data as a starting point. Therefore, while CCA (and related multivariate methods such as partial least squares) are theoretically appropriate and may be helped by regularization methods such as sparse CCA, in practice many available psychiatric neuroimaging datasets may not provide sufficient power to resolve stable multivariate symptom-neural solutions (3). A key pressing need for forthcoming studies will be to use multivariate power calculators to inform sample sizes needed for resolving stable symptom-neural geometries at the single subject level. Of note, though we were unable to derive a stable CCA in the present sample, this does not imply that the multivariate neuro-behavioral effect may not be reproducible with larger effect sizes and/or sample sizes. Critically, this does highlight the importance of power calculations prior to computing multivariate brain-behavioral solutions (3).
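The over-fitting concern can be made concrete with a small simulation. The sketch below is an illustration under stated assumptions, not a reproduction of the authors' analysis or of the power calculator in (3): it fits a plain, unregularized CCA (implemented here with QR and SVD) to pure-noise data and then applies the fitted weights to held-out subjects. The feature counts (100 neural, 5 symptom) are placeholders and the function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)


def fit_first_canonical_pair(x, y):
    """First pair of canonical weights and their in-sample canonical correlation."""
    xc = x - x.mean(axis=0)
    yc = y - y.mean(axis=0)
    # Orthonormal bases of the two centered feature spaces.
    qx, rx = np.linalg.qr(xc)
    qy, ry = np.linalg.qr(yc)
    # Singular values of qx.T @ qy are the canonical correlations.
    u, s, vt = np.linalg.svd(qx.T @ qy, full_matrices=False)
    wx = np.linalg.solve(rx, u[:, 0])  # map back to weights on the original features
    wy = np.linalg.solve(ry, vt[0])
    return wx, wy, s[0]


def projected_correlation(x, y, wx, wy):
    """Correlation between the two canonical variates for new data."""
    return np.corrcoef(x @ wx, y @ wy)[0, 1]


# Pure noise: there is no true neural-symptom relationship to find.
n_subjects, n_neural, n_symptom = 436, 100, 5
neural = rng.standard_normal((n_subjects, n_neural))
symptom = rng.standard_normal((n_subjects, n_symptom))

half = n_subjects // 2
wx, wy, r_train = fit_first_canonical_pair(neural[:half], symptom[:half])
r_test = projected_correlation(neural[half:], symptom[half:], wx, wy)
```

With many features relative to subjects, `r_train` is large even though the data contain no signal, while `r_test` stays near zero. This gap between in-sample and out-of-sample correlation is the kind of instability that sample-size calculations are meant to guard against.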

- Why was PCA selected for the analysis rather than ICA? Authors mention that PCA enables the discovery of orthogonal symptom dimensions, but don't elaborate on why this is expected to better capture behavioural variation within PSD compared to non-orthogonal dimensions. Given that symptom and/or cognitive items in conventional assessments are likely to be correlated in one way or another, allowing correlations to be present in the low-rank behavioural solution may better represent the original clinical profiles and drive more accurate brain-behaviour mapping. Moreover, as alluded to in the Discussion, employing an oblique rotation in the identification of dimensionality-reduced symptom axes may have actually resulted in a brain-behaviour space that is more generalizable to other psychiatric spectra. Why not use something more relevant to symptom/behaviour data like a factor analysis?

This is a very important point! We agree with the Reviewer that an oblique solution may better fit the data. For this reason, we performed an ICA as shown in the Supplement. We chose to show PCA for the main analyses here because it is a deterministic solution and the number of significant components could be computed via permutation testing. Importantly, certain components from the ICA solution in this sample were highly similar to the PCs shown in the main solution (Supplementary Note 1), as measured by comparing the subject behavioral scores (Fig. S4) and neural maps (Fig. S13). However, notably, certain components in the ICA and PCA solutions did not appear to have a one-to-one mapping (e.g. PCs 1-3 and ICs 1-3). The orthogonality of the PCA solution forces the resulting components to capture maximally separated, unique symptom variance, which in turn map robustly on to unique neural circuits. We observed that the data may be distributed in such a way that in the ICA highly correlated independent components emerge, which do not maximally separate the symptom variance associated with neural variance. We demonstrate this by plotting the relationship between parcel beta coefficients for the $\beta_{PC3}GBC$ map versus the $\beta_{IC2}GBC$ and $\beta_{IC3}GBC$ maps. The sigmoidal shape of the distribution indicates an improvement in the Z-statistics for the $\beta_{PC3}GBC$ map relative to the $\beta_{IC2}GBC$ and $\beta_{IC3}GBC$ maps. We have added this language to the main text Results:

Notably, independent component analysis (ICA), an alternative dimensionality reduction procedure which does not enforce component orthogonality, produced similar effects for this PSD sample (see Supplementary Note 1 & Fig. S4A). Certain pairs of components between the PCA and ICA solutions appear to be highly similar and exclusively mapped (IC5 and PC4; IC4 and PC5) (Fig. S4B). On the other hand, PCs 1-3 and ICs 1-3 do not exhibit a one-to-one mapping. For example, PC3 appears to correlate positively with IC2 and equally strongly negatively with IC3, suggesting that these two ICs are oblique to the PC and perhaps reflect symptom variation that is explained by a single PC. The orthogonality of the PCA solution forces the resulting components to capture maximally separated, unique symptom variance, which in turn map robustly on to unique neural circuits. We observed that the data may be distributed in such a way that in the ICA highly correlated independent components emerge, which do not maximally separate the symptom variance associated with neural variance. We demonstrate this by plotting the relationship between parcel beta coefficients for the $\beta_{PC3}GBC$ map versus the $\beta_{IC2}GBC$ and $\beta_{IC3}GBC$ maps (Fig. ??G). The sigmoidal shape of the distribution indicates an improvement in the Z-statistics for the $\beta_{PC3}GBC$ map relative to the $\beta_{IC2}GBC$ and $\beta_{IC3}GBC$ maps.
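One practical consequence of PCA being deterministic is that the number of components exceeding chance can be estimated by permutation. The sketch below shows one common scheme, offered as an assumption rather than as the authors' exact procedure: each measure is shuffled independently across subjects to destroy the covariance between measures, and the variance explained by each observed component is compared with its permutation null. The synthetic data (three planted factors across 36 placeholder measures) and the function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)

# Synthetic symptom data: 36 placeholder measures, 12 loading on each of 3 factors.
n_subjects, n_measures = 436, 36
block_loadings = np.repeat([1.0, 0.8, 0.6], n_measures // 3)
factor_of_measure = np.repeat([0, 1, 2], n_measures // 3)
factors = rng.standard_normal((n_subjects, 3))
symptoms = (factors[:, factor_of_measure] * block_loadings
            + rng.standard_normal((n_subjects, n_measures)))


def explained_variance_ratio(data):
    """Fraction of variance explained by each PC of the z-scored data."""
    z = (data - data.mean(axis=0)) / data.std(axis=0)
    s = np.linalg.svd(z, compute_uv=False)
    return s ** 2 / np.sum(s ** 2)


def count_significant_pcs(data, n_perm, rng, alpha=0.05):
    """Count leading PCs whose explained variance exceeds the permutation null."""
    observed = explained_variance_ratio(data)
    null = np.empty((n_perm, observed.size))
    for i in range(n_perm):
        # Shuffle every measure independently across subjects.
        permuted = np.column_stack([rng.permutation(col) for col in data.T])
        null[i] = explained_variance_ratio(permuted)
    p_values = (1 + np.sum(null >= observed, axis=0)) / (1 + n_perm)
    significant = p_values < alpha
    if significant.all():
        n_sig = observed.size
    else:
        n_sig = int(np.argmin(significant))  # index of the first non-significant PC
    return n_sig, p_values


n_sig, p_values = count_significant_pcs(symptoms, n_perm=200, rng=rng)
```

Because the same data always yield the same PCA solution, the only randomness in this test comes from the permutations. An ICA fit typically depends on its initialization, which makes an equivalent test less direct.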

Additionally, the Reviewer raises an important point, and we agree that orthogonal versus oblique solutions warrant further investigation especially with regards to other psychiatric spectra and/or other stages in disease progression. For example, oblique components may better capture dimensions of behavioral variation in prodromal individuals, as these individuals are in the early stages of exhibiting psychosis-relevant symptoms and may show early diverging of dimensions of behavioral variation. We elaborate on this further in the Discussion:

Another important aspect that will require further characterization is the possibility of oblique axes in the symptom-neural geometry. While orthogonal axes derived via PCA were appropriate here and similar to the ICA-derived axes in this solution, it is possible that oblique dimensions more clearly reflect the geometry of other psychiatric spectra and/or other stages in disease progression. For example, oblique components may better capture dimensions of neuro-behavioral variation in a sample of prodromal individuals, as these patients are exhibiting early-stage psychosis-like symptoms and may show signs of diverging along different trajectories.

Critically, these factors should constitute key extensions of an iteratively more robust model for individualized symptom-neural mapping across the PSD and other psychiatric spectra. Relatedly, it will be important to identify the ‘limits’ of a given BBS solution: namely, a PSD-derived effect may not generalize into the mood spectrum (i.e. both the symptom space and the resulting symptom-neural mapping are orthogonal). It will be important to evaluate if this framework can be used to initialize symptom-neural mapping across other mental health symptom spectra, such as mood/anxiety disorders.

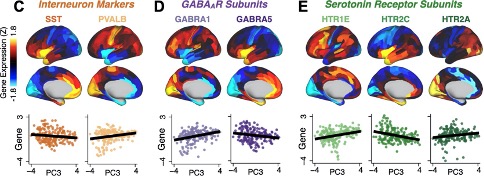

- The gene expression mapping section lacks some justification for why the 7 genes of interest were specifically chosen from among the numerous serotonin and GABA receptors and interneuron markers (relevant for PSD) available in the AHBA. Brief reference to the believed significance of the chosen genes in psychosis pathology would have helped to contextualize the observed relationship with the neuro-behavioural map.

We thank the Reviewer for providing this suggestion and agree that it will strengthen the section on gene expression analysis. Of note, we did justify the choice for these genes, but we appreciate the opportunity to expand on the neurobiology of selected genes and their relevance to PSD. We have made these edits to the text:

We focus here on serotonin receptor subunits (HTR1E, HTR2C, HTR2A), GABA receptor subunits (GABRA1, GABRA5), and the interneuron markers somatostatin (SST) and parvalbumin (PVALB). Serotonin agonists such as LSD have been shown to induce PSD-like symptoms in healthy adults (9) and the serotonin antagonism of “second-generation” antipsychotics is thought to contribute to their efficacy in targeting broad PSD symptoms (10–12). Abnormalities in GABAergic interneurons, which provide inhibitory control in neural circuits, may contribute to cognitive deficits in PSD (13–15) and additionally lead to downstream excitatory dysfunction that underlies other PSD symptoms (16, 17). In particular, a loss of prefrontal parvalbumin-expressing fast-spiking interneurons has been implicated in PSD (18–21).

- What the identified univariate neuro-behavioural mapping for PC3 ("psychosis configuration") actually means from an empirical or brain network perspective is not really ever discussed in detail. E.g., in Results, "a high positive PC3 score was associated with both reduced GBC across insular and superior dorsal cingulate cortices, thalamus, and anterior cerebellum and elevated GBC across precuneus, medial prefrontal, inferior parietal, superior temporal cortices and posterior lateral cerebellum." While the meaning and calculation of GBC can be gleaned from the Methods, a direct interpretation of the neuro-behavioural results in terms of the types of symptoms contributing to PC3 and relative hyper-/hypo-connectivity of the DMN compared to e.g. healthy controls could facilitate easier comparisons with the findings of past studies (since GBC does not seem to be a very commonly-used measure in the psychosis fMRI literature). Also important since GBC is a summary measure of the average connectivity of a region, and doesn't provide any specificity in terms of which regions in particular are more or less connected within a functional network (an inherent limitation of this measure which warrants further attention).

We acknowledge that GBC is a linear combination measure that by definition does not provide information on connectivity between any one specific pair of neural regions. However, as shown by highly robust and reproducible neurobehavioral maps, GBC seems to be suitable as a first-pass metric in the absence of a priori assumptions of how specific regional connectivity may map to the PC symptom dimensions, and it has been shown to be sensitive to altered patterns of overall neural connectivity in PSD cohorts (22–25) as well as in models of psychosis (9, 26). Moreover, it is an assumption-free method for dimensionality reduction of the neural connectivity matrix (which is a massive feature space). Furthermore, GBC provides neural maps (where each region can be represented by a value, in contrast to full functional connectivity matrices), which were necessary for quantifying the relationship with independent molecular benchmark maps (i.e. pharmacological maps and gene expression maps). We do acknowledge that there are limitations to the method, which we now discuss in the paper. Furthermore, we agree with the Reviewer that the specific regions implicated in these symptom-neural relationships warrant a more detailed investigation, and we plan to develop this further in future studies, such as with seed-based functional connectivity using regions implicated in PSD (e.g. thalamus (2, 27)) or restricted GBC (22), which can summarize connectivity information for a specific network or subset of neural regions. We have provided elaboration and clarification regarding this point in the Discussion:

Another improvement would be to optimize neural data reduction sensitivity for specific symptom variation (28). We chose to use GBC for our initial geometry characterizations as it is a principled and assumption-free data-reduction metric that captures (dys)connectivity across the whole brain and generates neural maps (where each region can be represented by a value, in contrast to full functional connectivity matrices) that are necessary for benchmarking against molecular imaging maps. However, GBC is a summary measure that by definition does not provide information regarding connectivity between specific pairs of neural regions, which may prove to be highly symptom-relevant and informative. Thus symptom-neural relationships should be further explored with higher-resolution metrics, such as restricted GBC (22) which can summarize connectivity information for a specific network or subset of neural regions, or seed-based FC using regions implicated in PSD (e.g. thalamus (2, 27)).
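For readers less familiar with GBC, the following is a minimal sketch of the computation as it is described here, i.e. the average connectivity of each region with all other regions. The function name, the use of Pearson correlation with a Fisher z-transform, and the synthetic data are illustrative assumptions and do not reproduce the preprocessing pipeline of the manuscript.

```python
import numpy as np


def global_brain_connectivity(timeseries):
    """Return one GBC value per parcel.

    timeseries: array of shape (n_timepoints, n_parcels).
    Assumption: connectivity is the Fisher z-transformed Pearson correlation.
    """
    r = np.corrcoef(timeseries, rowvar=False)   # parcel-by-parcel correlations
    np.fill_diagonal(r, 0.0)                    # ignore self-connections
    z = np.arctanh(np.clip(r, -0.999999, 0.999999))
    n_parcels = r.shape[0]
    return z.sum(axis=1) / (n_parcels - 1)      # mean over all other parcels


# Synthetic example: parcels 0-2 share a common signal, parcels 3-9 are noise.
rng = np.random.default_rng(0)
shared = rng.standard_normal((200, 1))
ts = rng.standard_normal((200, 10))
ts[:, :3] = shared + 0.1 * ts[:, :3]
gbc = global_brain_connectivity(ts)
print(np.round(gbc, 2))
```

The output is a single value per parcel, which is what allows the map to be compared with other parcel-wise maps. It also shows the limitation noted above: the value for a parcel does not reveal which specific partners drive it.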

- Possibly a nitpick, but while the inclusion of cognitive measures for PSD individuals is a main (self-)selling point of the paper, there's very limited focus on the "Cognitive functioning" component (PC2) of the PCA solution. Examining Fig. S8K, the GBC map for this cognitive component seems almost to be the inverse for that of the "Psychosis configuration" component (PC3) focused on in the rest of the paper. Since PC3 does not seem to have high loadings from any of the cognitive items, but it is known that psychosis spectrum individuals tend to exhibit cognitive deficits which also have strong predictive power for illness trajectory, some discussion of how multiple univariate neuro-behavioural features could feasibly be used in conjunction with one another could have been really interesting.

This is an important piece of feedback concerning the cognitive measure aspect of the study. As the Reviewer recognizes, cognition is a core element of PSD symptoms and the key reason for including this symptom in the model. Notably, the finding that one dimension captures a substantial proportion of cognitive performance-related variance, independent of other residual symptom axes, has not previously been reported and we fully agree that expanding on this effect is important and warrants further discussion. We would like to take two of the key points from the Reviewer’s feedback and expand further. First, we recognize that upon qualitative inspection PC2 and PC3 neural maps appear strongly anti-correlated. However, as demonstrated in Fig. S9O, PC2 and PC3 maps were only moderately anti-correlated (r=-0.47). For comparison, the PC2 map was highly anti-correlated with the BACS composite cognitive map (r=-0.81). This implies that the PC2 map in fact reflects unique neural circuit variance that is relevant for cognition, but is not necessarily an inverse of the PC3 map.

In other words, these data suggest that there are PSD patients with more (or less) severe cognitive deficits independent of any other symptom axis, which would be in line with the observation that these symptoms are not treatable with antipsychotic medication (and therefore should not correlate with symptoms that are treatable by such medications; i.e. PC3). We have now added these points into the revised paper:

Results: Fig. 1E highlights loading configurations of symptom measures forming each PC. To aid interpretation, we assigned a name for each PC based on its most strongly weighted symptom measures. This naming is qualitative but informed by the pattern of loadings of the original 36 symptom measures (Fig. 1). For example, PC1 was highly consistent with a general impairment dimension (i.e. “Global Functioning”); PC2 reflected more exclusively variation in cognition (i.e. “Cognitive Functioning”); PC3 indexed a complex configuration of psychosis-spectrum relevant items (i.e. “Psychosis Configuration”); PC4 generally captured variation in mood and anxiety related items (i.e. “Affective Valence”); finally, PC5 reflected variation in arousal and level of excitement (i.e. “Agitation/Excitation”). For instance, a generally impaired patient would have a highly negative PC1 score, which would reflect low performance on cognition and elevated scores on most other symptomatic items. Conversely, an individual with a high positive PC3 score would exhibit delusional, grandiose, and/or hallucinatory behavior, whereas a person with a negative PC3 score would exhibit motor retardation, social avoidance, and possibly a withdrawn affective state with blunted affect (29). Comprehensive loadings for all 5 PCs are shown in Fig. 3G. Fig. 1F highlights the mean of each of the 3 diagnostic groups (colored spheres) and healthy controls (black sphere) projected into a 3-dimensional orthogonal coordinate system for PCs 1,2 & 3 (x,y,z axes respectively; alternative views of the 3-dimensional coordinate system with all patients projected are shown in Fig. 3). Critically, PC axes were not parallel with traditional aggregate symptom scales. For instance, PC3 is angled at 45° to the dominant direction of PANSS Positive and Negative symptom variation (purple and blue arrows respectively in Fig. 1F). ... 
Because PC3 loads most strongly onto hallmark symptoms of PSD (including strong positive loadings across PANSS Positive symptom measures and strong negative loadings onto most Negative measures), we focus on this PC as an opportunity to quantify an innovative, fully data-driven dimension of symptom variation that is highly characteristic of the PSD patient population. Additionally, this bi-directional symptom axis captured shared variance from measures in other traditional symptom factors, such as the PANSS General factor and cognition. We found that the PC3 result provided a powerful empirical demonstration of how using a data-driven dimensionality-reduced solution (via PCA) can reveal novel patterns intrinsic to the structure of PSD psychopathology.
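As an illustration of the projection described in this excerpt, the sketch below fits a PCA on a patients-by-measures matrix and projects a second group into the same orthogonal coordinate system. The z-scoring step, the function names, the control group size, and the random data are assumptions made for the example; only the dimensions (436 patients, 36 measures, 5 PCs) come from the text.

```python
import numpy as np


def fit_pca(patient_scores, n_components=5):
    """Fit PCA on z-scored symptom measures (z-scoring is an assumption)."""
    mean = patient_scores.mean(axis=0)
    std = patient_scores.std(axis=0, ddof=1)
    z = (patient_scores - mean) / std
    # Rows of vt are orthonormal principal axes, ordered by variance explained.
    _, s, vt = np.linalg.svd(z, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    return mean, std, vt[:n_components], explained[:n_components]


def project(scores, mean, std, axes):
    """Project subjects onto principal axes fitted in another group."""
    return ((scores - mean) / std) @ axes.T


rng = np.random.default_rng(0)
patients = rng.standard_normal((436, 36))   # synthetic stand-in for symptom data
controls = rng.standard_normal((50, 36))    # hypothetical control group
mean, std, axes, explained = fit_pca(patients)
patient_pcs = project(patients, mean, std, axes)
control_pcs = project(controls, mean, std, axes)
print(patient_pcs.shape, control_pcs.shape)
```

Because the axes are orthonormal, patient scores on different PCs are uncorrelated by construction, whereas an aggregate scale such as a sum of items can point in any direction within this space.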

- Another nitpick, but the Y axes of Fig. 8C-E are not consistent, which causes some of the lines of best fit to be a bit misleading (e.g. GABRA1 appears to have a more strongly positive gene-PC relationship than HTR1E, when in reality the opposite is true.)

We had scaled each axis to best show the data in each plot, but we see how this is confusing and recognise the need to correct it. We have remade the plots with consistent axis labelling.

- The authors explain the apparent low reproducibility of their multivariate PSD neuro-behavioural solution using the argument that many psychiatric neuroimaging datasets are too small for multivariate analyses to be sufficiently powered. Applying an existing multivariate power analysis to their own data as empirical support for this idea would have made it even more compelling. The following paper suggests guidelines for sample sizes required for CCA/PLS as well as a multivariate calculator: Helmer, M., Warrington, S. D., Mohammadi-Nejad, A.-R., Ji, J. L., Howell, A., Rosand, B., Anticevic, A., Sotiropoulos, S. N., & Murray, J. D. (2020). On stability of Canonical Correlation Analysis and Partial Least Squares with application to brain-behavior associations (p. 2020.08.25.265546). https://doi.org/10.1101/2020.08.25.265546

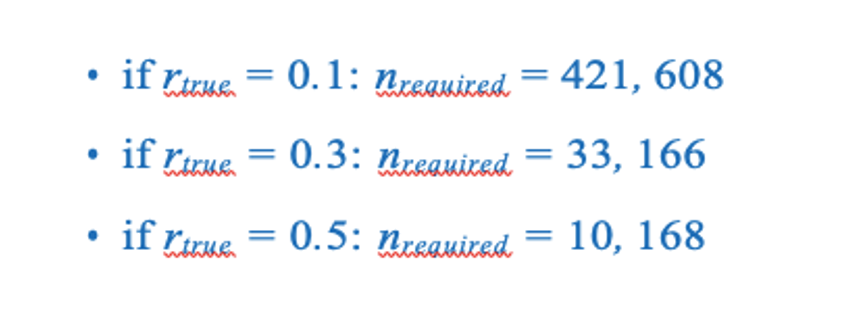

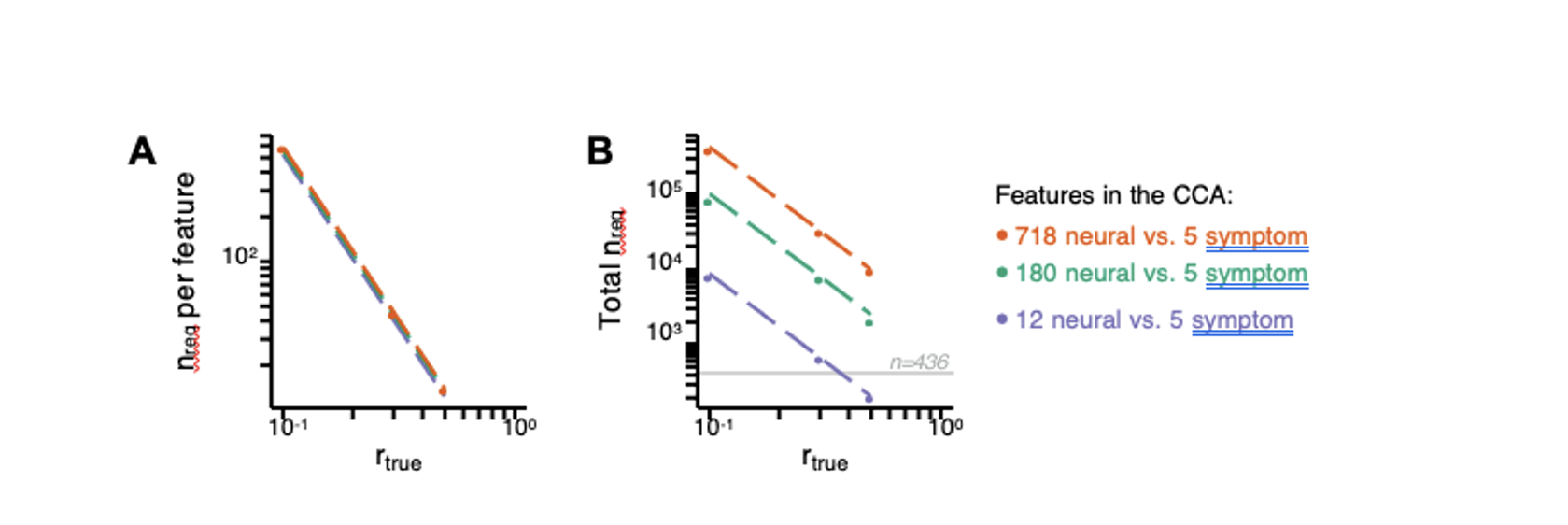

We deeply appreciate the Reviewer’s suggestion and the opportunity to incorporate the methods from the Helmer et al. paper. We now highlight the importance of having sufficiently powered samples for multivariate analyses in our other manuscript first-authored by our colleague Dr. Markus Helmer (3). Using the method described in the above paper (GEMMR version 0.1.2), we computed the estimated sample sizes required to power multivariate CCA analyses with 718 neural features and 5 behavioral (PC) features (i.e. the feature set used throughout the rest of the paper):

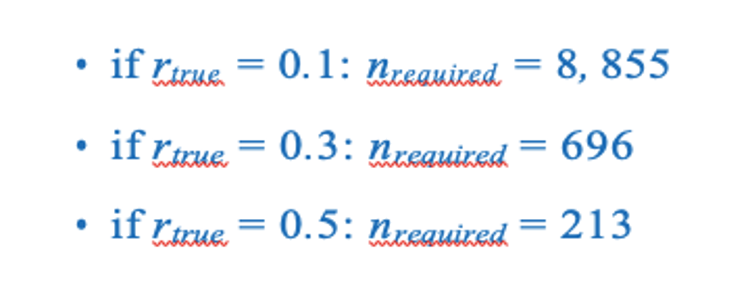

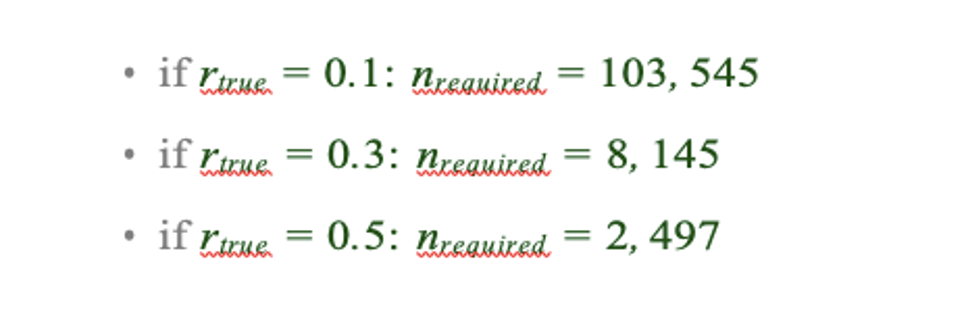

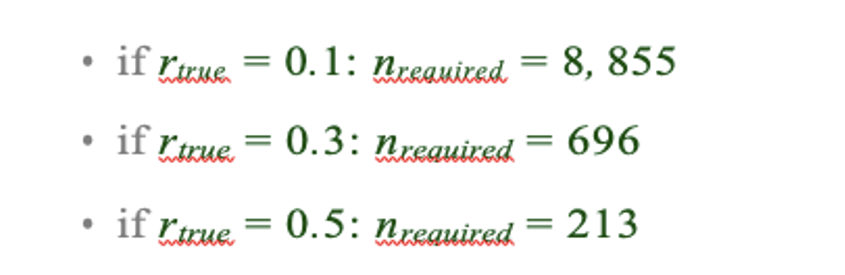

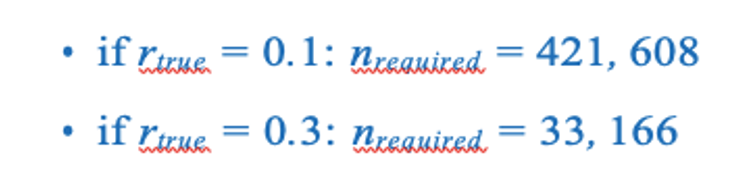

As argued in Helmer et al., rtrue is likely below 0.3 in many cases, thus the estimated sample size of 33k is likely a lower bound for the required sample size for sufficiently-powered CCA analyses using the 718+5 features leveraged throughout the univariate analyses in the present manuscript. This number is two orders of magnitude greater than our available sample (and at least one order of magnitude greater than any single existing clinical dataset). Even if rtrue is 0.5, a sample size of ∼10k would likely be required. We also computed the estimated sample sizes required for 180 neural features (symmetrized neural cortical parcels) and 5 symptom PC features, consistent with the CCA reported in our main text:

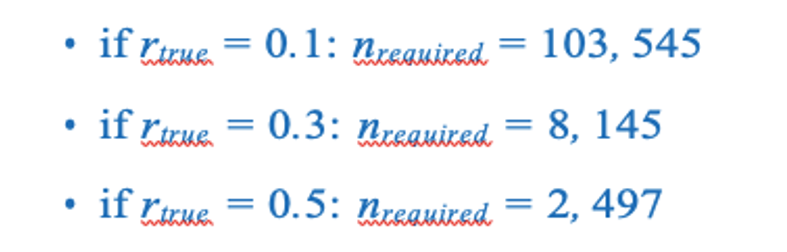

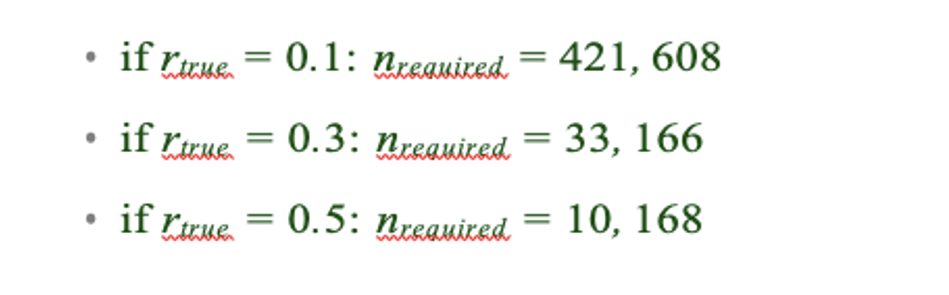

Assuming that rtrue is likely below 0.3, this minimal required sample size remains at least an order of magnitude greater than the size of our present sample, consistent with the finding that the CCA solution computed using these data was unstable. As a lower limit for the required sample size plausible using the feature sets reported in our paper, we additionally computed for comparison the estimated N needed with the smallest number of features explored in our analyses, i.e. 12 neural functional network features and 5 symptom PC features:

These required sample sizes are closer to the N=436 used in the present sample and to samples reported in the clinical neuroimaging literature. This is consistent with the observation that when using 12 neural and 5 symptom features (Fig. S15C) the detected canonical correlation for CV1 (r = 0.38) is much lower (and likely not inflated due to overfitting) and may be closer to the true effect, because with N=436 this effect is resolvable. This is in contrast to the CCA solution with 180 neural features and 5 symptom features, where the null CCA effect was already around r > 0.6 across all 5 CVs. This clearly highlights the inflation of the effect as the feature space grows. There is no a priori plausible reason to believe that the effect for the 180 vs. 5 feature mapping is literally double the effect for the 12 vs. 5 feature mapping, especially as the 12 features are networks derived from the 180 parcels (i.e. the effect should be comparable rather than 2x smaller). Consequently, if the true CCA effect with 180 vs. 5 features was actually in the more comparable range of r = 0.38, we would need >5,000 subjects to resolve a reproducible neuro-behavioral CCA map (an order of magnitude more than in the BSNIP sample). Moreover, to confidently detect effects if rtrue is actually less than 0.3, we would require a sample size of >8,145 subjects. We have added this to the Results section on our CCA results:

Next, we tested if the 180-parcel CCA solution is stable and reproducible, as done with PC-to-GBC univariate results. The CCA solution was robust when tested with k-fold and leave-site-out cross-validation (Fig. S16) likely because these methods use CCA loadings derived from the full sample. However, the CCA loadings did not replicate in non-overlapping split-half samples (Fig. 5L, see Supplementary Note 4). Moreover, a leave-one-subject-out cross-validation revealed that removing a single subject from the sample affected the CCA solution such that it did not generalize to the left-out subject (Fig. 5M). This is in contrast to the PCA-to-GBC univariate mapping, which was substantially more reproducible for all attempted cross-validations relative to the CCA approach. This is likely because substantially more power is needed to resolve a stable multivariate neuro-behavioral effect with this many features. Indeed, a multivariate power analysis using 180 neural features and 5 symptom features, and assuming a true canonical correlation of r = 0.3, suggests that a minimal sample size of N = 8145 is needed to sufficiently detect the effect (3), an order of magnitude greater than the available sample size. Therefore, we leverage the univariate neuro-behavioral result for subsequent subject-specific model optimization and comparisons to molecular neuroimaging maps.
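The inflation of canonical correlations with a growing feature space can be illustrated with a small simulation on pure noise. This is a sketch under stated assumptions: features are independent Gaussian variables (real neural features are correlated), the helper names are hypothetical, and the canonical correlations are computed with a standard QR/SVD approach rather than with the software used in the manuscript.

```python
import numpy as np


def canonical_correlations(X, Y):
    """Sample canonical correlations between X (n, p) and Y (n, q)."""
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    Qx, _ = np.linalg.qr(Xc)   # orthonormal basis for the column space of X
    Qy, _ = np.linalg.qr(Yc)   # orthonormal basis for the column space of Y
    s = np.linalg.svd(Qx.T @ Qy, compute_uv=False)
    return np.clip(s, 0.0, 1.0)


def null_first_cc(n_subjects, n_neural, n_symptom, n_repeats=20, seed=0):
    """Mean first canonical correlation when X and Y are truly unrelated."""
    rng = np.random.default_rng(seed)
    values = []
    for _ in range(n_repeats):
        X = rng.standard_normal((n_subjects, n_neural))
        Y = rng.standard_normal((n_subjects, n_symptom))
        values.append(canonical_correlations(X, Y)[0])
    return float(np.mean(values))


null_12 = null_first_cc(436, 12, 5)
null_180 = null_first_cc(436, 180, 5)
print(f"12 vs. 5 features:  null r = {null_12:.2f}")
print(f"180 vs. 5 features: null r = {null_180:.2f}")
```

With the sample size held at 436, the in-sample canonical correlation obtained from unrelated data is considerably larger with 180 neural features than with 12, which is why a large in-sample r alone is not evidence of a large true effect.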

Additionally, we added the following to Supplementary Note 4: Establishing the Reproducibility of the CCA Solution:

Here we outline the details of the split-half replication for the CCA solution. Specifically, the full patient sample was randomly split (referred to as “H1” and “H2” respectively), while preserving the proportion of patients in each diagnostic group. Then, CCA was performed independently for H1 and H2. While the loadings for behavioral PCs and original behavioral items are somewhat similar (mean r ≈ 0.5) between the two CCAs in each run, the neural loadings were not stable across H1 and H2 CCA solutions. Critically, CCA results did not perform well for leave-one-subject-out cross-validation (Fig. 5M). Here, one patient was held out while CCA was performed using all data from the remaining 435 patients. The loadings matrices Ψ and Θ from the CCA were then used to calculate the “predicted” neural and behavioral latent scores for all 5 CVs for the patient that was held out of the CCA solution. This process was repeated for every patient and the final result was evaluated for reproducibility. As described in the main text, this did not yield reproducible CCA effects (Fig. 5M). Of note, CCA may yield higher reproducibility if the neural feature space were to be further reduced. As noted, our approach was to first parcellate the BOLD signal and then use GBC as a data-driven method to yield a neuro-biologically and quantitatively interpretable neural data reduction, and we additionally symmetrized the result across hemispheres. Nevertheless, in sharp contrast to the PCA univariate feature selection approach, the CCA solutions were still not stable in the present sample size of N = 436. Indeed, a multivariate power analysis (3) estimates that the following sample sizes will be required to sufficiently power a CCA between 180 neural features and 5 symptom features, at different levels of true canonical correlation (rtrue):

To test if further neural feature space reduction may improve reproducibility, we also evaluated CCA solutions with neural GBC parcellated according to 12 brain-wide functional networks derived from the recent HCP driven network parcellation (30). Again, we computed the CCA for all 36 item-level symptoms as well as 5 PCs (Fig. S15). As with the parcel-level effects, the network-level CCA analysis produced significant results (for CV1 when using 36 item-level scores and for all 5 CVs when using the 5 PC-derived scores). Here the result produced much lower canonical correlations (∼0.3-0.5); however, these effects (for CV1) clearly exceeded the 95% confidence interval generated via random permutations, suggesting that they may reflect the true canonical correlation. We observed a similar result when we evaluated CCAs computed with neural GBC from 192 symmetrized subcortical parcels and 36 symptoms or 5 PCs (Fig. S14). In other words, data-reducing the neural signal to 12 functional networks likely averaged out parcel-level information that may carry symptom-relevant variance, but may be closer to capturing the true effect. Indeed, the power analysis suggests that the current sample size is closer to that needed to detect an effect with 12 + 5 features:

Note that we do not present a CCA conducted with parcels across the whole brain, as the number of variables would exceed the number of observations. However, the multivariate power analysis using 718 neural features and 5 symptom features estimates that the following sample sizes would be required to detect the following effects:

This analysis suggests that even the lowest bound of 10k samples exceeds the present available sample size by two orders of magnitude.

We have also added Fig. S19, illustrating these power analyses results:

Fig. S19. Multivariate power analysis for CCA. Sample sizes were calculated according to (3), see also https://gemmr.readthedocs.io/en/latest/. We computed the multivariate power analyses for three versions of CCA reported in this manuscript: i) 718 neural vs. 5 symptom features; ii) 180 neural vs. 5 symptom features; iii) 12 neural vs. 5 symptom features. (A) At different levels of features, the ratio of samples (i.e. subjects) required per feature to derive a stable CCA solution remains approximately the same across all values of rtrue. As discussed in (3), at rtrue = 0.3 the number of samples required per feature is about 40, which is much greater than the ratio of samples to features available in our dataset. (B) The total number of samples required (nreq) for a stable CCA solution given the total number of neural and symptom features used in our analyses, at different values of rtrue. In general these required sample sizes are much greater than the N=436 (light grey line) PSD in our present dataset, consistent with the finding that the CCA solutions computed using our data were unstable. Notably, the ‘12 vs. 5’ CCA assuming rtrue = 0.3 requires only 700 subjects, which is closest to the N=436 (horizontal grey line) used in the present sample. This may be in line with the observation of the CCA with 12 neural vs 5 symptom features (Fig. S15C) that the canonical correlation (r = 0.38 for CV1) clearly exceeds the 95% confidence interval, and may be closer to the true effect. However, to confidently detect effects in such an analysis (particularly if rtrue is actually less than 0.3), a larger sample would likely still be needed.
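The samples-per-feature ratio mentioned in panel A can be checked against the required sample sizes quoted in this response for rtrue = 0.3. The short calculation below only re-uses those quoted numbers (the 33k and 700 values are rounded in the text) and compares them with the ratio available at N = 436; the variable names are illustrative.

```python
# Required sample sizes at r_true = 0.3 as quoted in the response.
# Keys are (neural features, symptom features).
N_AVAILABLE = 436
required = {
    (718, 5): 33_000,   # rounded in the text ("33k")
    (180, 5): 8_145,
    (12, 5): 700,       # rounded in the text
}

# Required subjects per feature for each feature set.
samples_per_feature = {k: n_req / sum(k) for k, n_req in required.items()}

for (n_neural, n_symptom), ratio in samples_per_feature.items():
    available = N_AVAILABLE / (n_neural + n_symptom)
    print(
        f"{n_neural:>3} vs. {n_symptom}: "
        f"required {ratio:5.1f} samples/feature, available {available:5.1f}"
    )
```

In all three cases the required ratio is a little over 40 subjects per feature, while the available ratio only approaches that range for the 12 vs. 5 feature set.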

We also added the corresponding methods in the Methods section:

Multivariate CCA Power Analysis. Multivariate power analyses to estimate the minimum sample size needed to sufficiently power a CCA were computed using methods described in (3), using the Generative Modeling of Multivariate Relationships tool (gemmr, https://github.com/murraylab/gemmr (v0.1.2)). Briefly, a model was built by: 1) Generating synthetic datasets for the two input data matrices, by sampling from a multivariate normal distribution with a joint covariance matrix that was structured to encode CCA solutions with specified properties; 2) Performing CCAs on these synthetic datasets. Because the joint covariance matrix is known, the true values of estimated association strength, weights, scores, and loadings of the CCA, as well as the errors for these four metrics, can also be computed. In addition, statistical power that the estimated association strength is different from 0 is determined through permutation testing; 3) Varying parameters of the generative model (number of features, assumed true between-set correlation, within-set variance structure for both datasets) and determining in each case the required sample size Nreq such that statistical power reaches 90% and all of the above described error metrics fall to a target level of 10%; and 4) Fitting and validating a linear model to predict the required sample size Nreq from parameters of the generative model. This linear model was then used to calculate Nreq for CCA in three data scenarios: i) 718 neural vs. 5 symptom features; ii) 180 neural vs. 5 symptom features; iii) 12 neural vs. 5 symptom features.
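Steps 1 and 2 of this procedure can be sketched in a few lines of NumPy. This is a simplified illustration and does not use the gemmr API: it assumes identity within-set covariance and a single correlated pair of features at rtrue, whereas the procedure described above also varies the within-set variance structure. All function names are hypothetical.

```python
import numpy as np


def first_canonical_correlation(X, Y):
    """Largest sample canonical correlation between X (n, p) and Y (n, q)."""
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    Qx, _ = np.linalg.qr(Xc)
    Qy, _ = np.linalg.qr(Yc)
    return float(np.linalg.svd(Qx.T @ Qy, compute_uv=False)[0])


def sample_two_sets(n, px, py, r_true, rng):
    """Draw n samples whose joint covariance encodes one canonical pair.

    Assumption: identity within-set covariance; only the first feature of
    each set is correlated, with correlation r_true.
    """
    sigma = np.eye(px + py)
    sigma[0, px] = sigma[px, 0] = r_true
    chol = np.linalg.cholesky(sigma)
    data = rng.standard_normal((n, px + py)) @ chol.T
    return data[:, :px], data[:, px:]


rng = np.random.default_rng(0)
r_true = 0.3
estimates = {}
for n in (436, 8145):
    X, Y = sample_two_sets(n, px=180, py=5, r_true=r_true, rng=rng)
    estimates[n] = first_canonical_correlation(X, Y)
    print(f"n = {n:>5}: estimated r = {estimates[n]:.2f} (true r = {r_true})")
```

Because the true canonical correlation is known by construction, the estimation error can be read off directly: at n = 436 the estimate is strongly inflated, while at the larger sample size it lies much closer to the true value.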

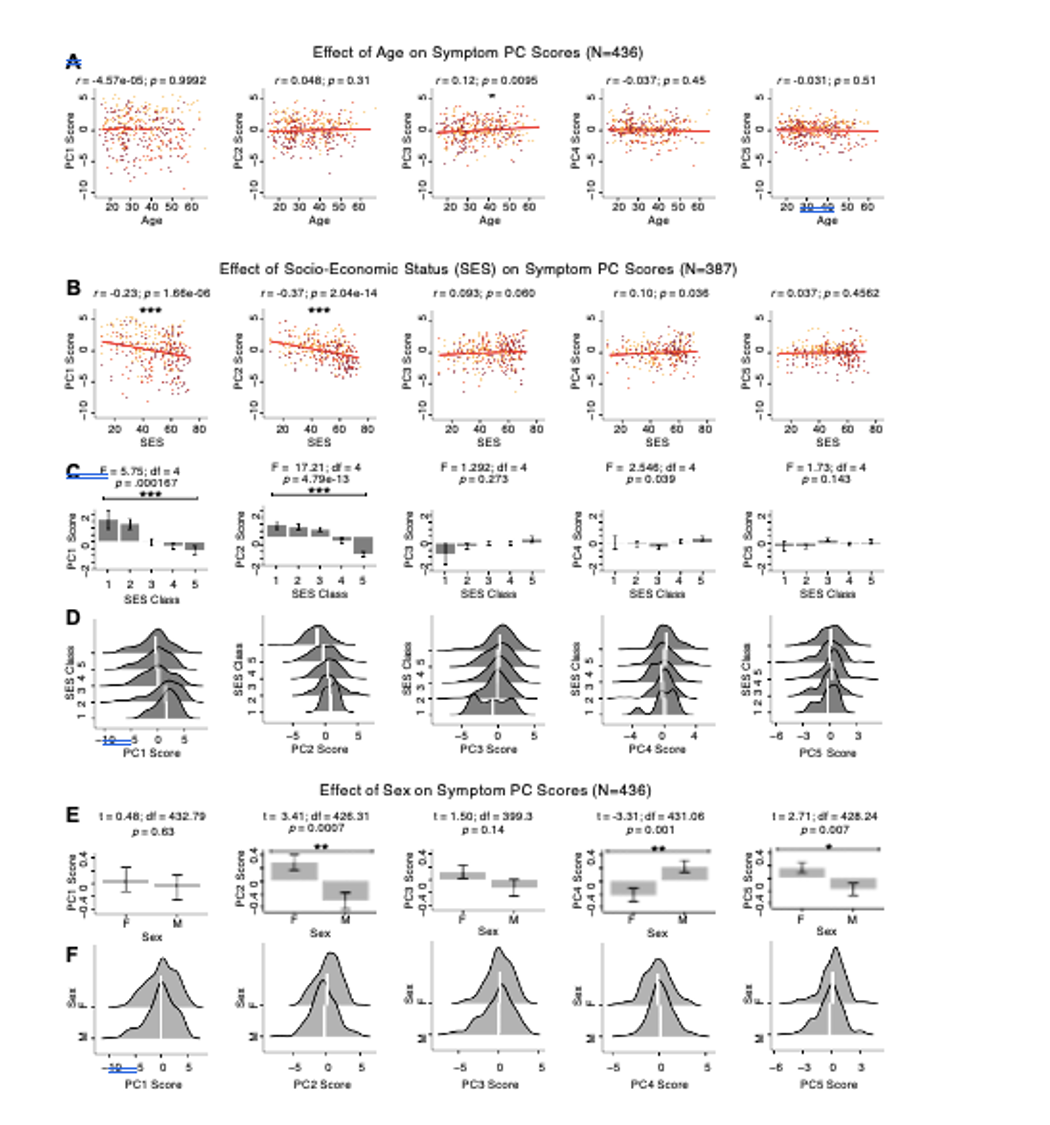

- Given the relatively even distribution of males and females in the dataset, some examination of sex effects on symptom dimension loadings or neuro-behavioural maps would have been interesting (other demographic characteristics like age and SES are summarized for subjects but also not investigated). I think this is a missed opportunity.

We have now provided additional analyses for the core PCA and univariate GBC mapping results, testing for effects of age, sex, and SES in Fig. S8. Briefly, we observed a significant positive relationship between age and PC3 scores, which may be because older patients (who presumably have been ill for a longer time) exhibit more severe symptoms along the positive PC3 – Psychosis Configuration dimension. We also observed a significant negative relationship between Hollingshead index of SES and PC1 and PC2 scores. Lower PC1 and PC2 scores indicate poorer general functioning and cognitive performance respectively, which is consistent with higher Hollingshead indices (i.e. lower-skilled jobs or unemployment and fewer years of education). We also found significant sex differences in PC2 – Cognitive Functioning, PC4 – Affective Valence, and PC5 – Agitation/Excitement scores.

Fig. S8. Effects of age, socio-economic status, and sex on symptom PCA solution. (A) Correlations between symptom PC scores and age (years) across N=436 PSD. Pearson’s correlation value and uncorrected p-values are reported above scatterplots. After Bonferroni correction, we observed a significant positive relationship between age and PC3 score. This may be because older patients have been ill for a longer period of time and exhibit more severe symptoms along the positive PC3 dimension. (B) Correlations between symptom PC scores and socio-economic status (SES) as measured by the Hollingshead Index of Social Position (31), across N=387 PSD with available data. The index is computed as (Hollingshead occupation score * 7) + (Hollingshead education score * 4); a higher score indicates lower SES (32). We observed a significant negative relationship between Hollingshead index and PC1 and PC2 scores. Lower PC1 and PC2 scores indicate poorer general functioning and cognitive performance respectively, which is consistent with higher Hollingshead indices (i.e. lower-skilled jobs or unemployment and fewer years of education). (C) The Hollingshead index can be split into five classes, with 1 being the highest and 5 being the lowest SES class (31). Consistent with (B) we found a significant difference between the classes after Bonferroni correction for PC1 and PC2 scores. (D) Distributions of PC scores across Hollingshead SES classes show the overlap in scores. White lines indicate the mean score in each class. (E) Differences in PC scores between (M)ale and (F)emale PSD subjects. We found a significant difference between sexes in PC2 – Cognitive Functioning, PC4 – Affective Valence, and PC5 – Agitation/Excitement scores. (F) Distributions of PC scores across M and F subjects show the overlap in scores. White lines indicate the mean score for each sex.
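The index formula given in panel B and the Bonferroni correction mentioned in the caption can be written out as a short sketch. The function names and example values are hypothetical, and the number of tests (five, one per PC) is an assumption made for illustration.

```python
def hollingshead_index(occupation_score, education_score):
    """Index as given in the caption; a higher value indicates lower SES."""
    return occupation_score * 7 + education_score * 4


def bonferroni(p_values):
    """Bonferroni-adjusted p-values: multiply by the number of tests, cap at 1."""
    n_tests = len(p_values)
    return [min(1.0, p * n_tests) for p in p_values]


# Hypothetical example values, not data from the study.
index = hollingshead_index(occupation_score=3, education_score=4)
adjusted = bonferroni([0.004, 0.03, 0.2, 0.5, 0.01])  # assumed: one test per PC
print(index, adjusted)
```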

Bibliography

- Jie Lisa Ji, Caroline Diehl, Charles Schleifer, Carol A Tamminga, Matcheri S Keshavan, John A Sweeney, Brett A Clementz, S Kristian Hill, Godfrey Pearlson, Genevieve Yang, et al. Schizophrenia exhibits bi-directional brain-wide alterations in cortico-striato-cerebellar circuits. Cerebral Cortex, 29(11):4463–4487, 2019.

- Alan Anticevic, Michael W Cole, Grega Repovs, John D Murray, Margaret S Brumbaugh, Anderson M Winkler, Aleksandar Savic, John H Krystal, Godfrey D Pearlson, and David C Glahn. Characterizing thalamo-cortical disturbances in schizophrenia and bipolar illness. Cerebral cortex, 24(12):3116–3130, 2013.

- Markus Helmer, Shaun D Warrington, Ali-Reza Mohammadi-Nejad, Jie Lisa Ji, Amber Howell, Benjamin Rosand, Alan Anticevic, Stamatios N Sotiropoulos, and John D Murray. On stability of canonical correlation analysis and partial least squares with application to brain-behavior associations. bioRxiv, 2020.

- Richard Dinga, Lianne Schmaal, Brenda WJH Penninx, Marie Jose van Tol, Dick J Veltman, Laura van Velzen, Maarten Mennes, Nic JA van der Wee, and Andre F Marquand. Evaluating the evidence for biotypes of depression: Methodological replication and extension of. NeuroImage: Clinical, 22:101796, 2019.

- Cedric Huchuan Xia, Zongming Ma, Rastko Ciric, Shi Gu, Richard F Betzel, Antonia N Kaczkurkin, Monica E Calkins, Philip A Cook, Angel Garcia de la Garza, Simon N Vandekar, et al. Linked dimensions of psychopathology and connectivity in functional brain networks. Nature communications, 9(1):3003, 2018.

- Andrew T Drysdale, Logan Grosenick, Jonathan Downar, Katharine Dunlop, Farrokh Mansouri, Yue Meng, Robert N Fetcho, Benjamin Zebley, Desmond J Oathes, Amit Etkin, et al. Resting-state connectivity biomarkers define neurophysiological subtypes of depression. Nature medicine, 23(1):28, 2017.

- Meichen Yu, Kristin A Linn, Russell T Shinohara, Desmond J Oathes, Philip A Cook, Romain Duprat, Tyler M Moore, Maria A Oquendo, Mary L Phillips, Melvin McInnis, et al. Childhood trauma history is linked to abnormal brain connectivity in major depression. Proceedings of the National Academy of Sciences, 116(17):8582–8590, 2019.

- David R Hardoon, Sandor Szedmak, and John Shawe-Taylor. Canonical correlation analysis: An overview with application to learning methods. Neural computation, 16(12):2639–2664, 2004.

- Katrin H Preller, Joshua B Burt, Jie Lisa Ji, Charles H Schleifer, Brendan D Adkinson, Philipp Stämpfli, Erich Seifritz, Grega Repovs, John H Krystal, John D Murray, et al. Changes in global and thalamic brain connectivity in LSD-induced altered states of consciousness are attributable to the 5-HT2A receptor. eLife, 7:e35082, 2018.

- Mark A Geyer and Franz X Vollenweider. Serotonin research: contributions to understanding psychoses. Trends in pharmacological sciences, 29(9):445–453, 2008.

- H Y Meltzer, B W Massey, and M Horiguchi. Serotonin receptors as targets for drugs useful to treat psychosis and cognitive impairment in schizophrenia. Current pharmaceutical biotechnology, 13(8):1572–1586, 2012.

- Anissa Abi-Dargham, Marc Laruelle, George K Aghajanian, Dennis Charney, and John Krystal. The role of serotonin in the pathophysiology and treatment of schizophrenia. The Journal of neuropsychiatry and clinical neurosciences, 9(1):1–17, 1997.

- Francine M Benes and Sabina Berretta. GABAergic interneurons: implications for understanding schizophrenia and bipolar disorder. Neuropsychopharmacology, 25(1):1–27, 2001.

- Melis Inan, Timothy J Petros, and Stewart A Anderson. Losing your inhibition: Linking cortical GABAergic interneurons to schizophrenia. Neurobiology of disease, 53:36–48, 2013.

- Samuel J Dienel and David A Lewis. Alterations in cortical interneurons and cognitive function in schizophrenia. Neurobiology of disease, 131:104208, 2019.

- John E Lisman, Joseph T Coyle, Robert W Green, Daniel C Javitt, Francine M Benes, Stephan Heckers, and Anthony A Grace. Circuit-based framework for understanding neurotransmitter and risk gene interactions in schizophrenia. Trends in neurosciences, 31(5):234–242, 2008.

- Anthony A Grace. Dysregulation of the dopamine system in the pathophysiology of schizophrenia and depression. Nature Reviews Neuroscience, 17(8):524, 2016.

- John F Enwright III, Zhiguang Huo, Dominique Arion, John P Corradi, George Tseng, and David A Lewis. Transcriptome alterations of prefrontal cortical parvalbumin neurons in schizophrenia. Molecular psychiatry, 23(7):1606–1613, 2018.

- Daniel J Lodge, Margarita M Behrens, and Anthony A Grace. A loss of parvalbumin-containing interneurons is associated with diminished oscillatory activity in an animal model of schizophrenia. Journal of Neuroscience, 29(8):2344–2354, 2009.

- Clare L Beasley and Gavin P Reynolds. Parvalbumin-immunoreactive neurons are reduced in the prefrontal cortex of schizophrenics. Schizophrenia research, 24(3):349–355, 1997.

- David A Lewis, Allison A Curley, Jill R Glausier, and David W Volk. Cortical parvalbumin interneurons and cognitive dysfunction in schizophrenia. Trends in neurosciences, 35(1):57–67, 2012.

- Alan Anticevic, Margaret S Brumbaugh, Anderson M Winkler, Lauren E Lombardo, Jennifer Barrett, Phillip R Corlett, Hedy Kober, June Gruber, Grega Repovs, Michael W Cole, et al. Global prefrontal and fronto-amygdala dysconnectivity in bipolar I disorder with psychosis history. Biological psychiatry, 73(6):565–573, 2013.

- Alex Fornito, Jong Yoon, Andrew Zalesky, Edward T Bullmore, and Cameron S Carter. General and specific functional connectivity disturbances in first-episode schizophrenia during cognitive control performance. Biological psychiatry, 70(1):64–72, 2011.

- Avital Hahamy, Vince Calhoun, Godfrey Pearlson, Michal Harel, Nachum Stern, Fanny Attar, Rafael Malach, and Roy Salomon. Save the global: global signal connectivity as a tool for studying clinical populations with functional magnetic resonance imaging. Brain connectivity, 4(6):395–403, 2014.

- Michael W Cole, Alan Anticevic, Grega Repovs, and Deanna Barch. Variable global dysconnectivity and individual differences in schizophrenia. Biological psychiatry, 70(1):43–50, 2011.

- Naomi R Driesen, Gregory McCarthy, Zubin Bhagwagar, Michael Bloch, Vincent Calhoun, Deepak C D’Souza, Ralitza Gueorguieva, George He, Ramani Ramachandran, Raymond F Suckow, et al. Relationship of resting brain hyperconnectivity and schizophrenia-like symptoms produced by the nmda receptor antagonist ketamine in humans. Molecular psychiatry, 18(11):1199–1204, 2013.

- Neil D Woodward, Baxter Rogers, and Stephan Heckers. Functional resting-state networks are differentially affected in schizophrenia. Schizophrenia research, 130(1-3):86–93, 2011.

- Zarrar Shehzad, Clare Kelly, Philip T Reiss, R Cameron Craddock, John W Emerson, Katie McMahon, David A Copland, F Xavier Castellanos, and Michael P Milham. A multivariate distance-based analytic framework for connectome-wide association studies. Neuroimage, 93 Pt 1:74–94, Jun 2014. .

- Alan J Gelenberg. The catatonic syndrome. The Lancet, 307(7973):1339–1341, 1976.

- Jie Lisa Ji, Marjolein Spronk, Kaustubh Kulkarni, Grega Repovš, Alan Anticevic, and Michael W Cole. Mapping the human brain’s cortical-subcortical functional network organization. NeuroImage, 185:35–57, 2019.

- August B Hollingshead et al. Four factor index of social status. 1975.

- Jaya L Padmanabhan, Neeraj Tandon, Chiara S Haller, Ian T Mathew, Shaun M Eack, Brett A Clementz, Godfrey D Pearlson, John A Sweeney, Carol A Tamminga, and Matcheri S Keshavan. Correlations between brain structure and symptom dimensions of psychosis in schizophrenia, schizoaffective, and psychotic bipolar i disorders. Schizophrenia bulletin, 41(1):154–162, 2015.

-



Reviewer 1 (Public Review):

The paper assessed the relationship between a dimensionality-reduced symptom space and functional brain imaging features based on the large multicentric data of individuals with psychosis-spectrum disorders (PSD).

The strengths of this study are that i) in every analysis, the authors provided high-level evidence of reproducibility in their findings, ii) the study included several control analyses to test other comparable alternatives or independent techniques (e.g., ICA, univariate vs. multivariate), and iii) by correlating the results with independently acquired pharmacological neuroimaging and gene expression maps, the study highlighted the neurobiological validity of its results.

Overall, the study is original and offers several important tips and guidance for behavior-brain mapping, although the paper contains heavy descriptions of data mining techniques such as several dimensionality reduction algorithms (e.g., PCA, ICA, and CCA) and prediction models.

Although they are relatively minor, I also have a few points on weaknesses, including i) an incomplete description of how to distinguish PSD effects from the normal spectrum, ii) a lack of overarching interpretation for principal components other than the 3rd one, and iii) somewhat expected results in the stability of the PCs and relevant indices.


Reviewer 2 (Public Review):

The work by Ji et al. is an interesting and rather comprehensive analysis that follows the trend of developing data-driven brain-symptom dimension biomarkers, which bring a biological basis to the symptoms (across PANSS and cognitive features) of psychotic disorders. To this end, the authors performed several interesting multivariate analyses to decompose the symptom/behavioural dimensions and functional connectivity data. They use data from a transdiagnostic group of individuals recruited by the BSNIP cohort and combine high-level methods to integrate both types of modalities. Conceptually, there are several strengths to this paper that should be applauded. However, I do think that there are important aspects of this paper that need revision to improve readability, to better compare the methods to what is in the field, and to provide a balanced view relative to previous work built on the same basic concepts. Overall, I feel that the work could advance our knowledge in the development of biomarkers or subject-level identifiers for psychiatric disorders and potentially be elevated to the level of an individual "subject screener". While this is a noble goal, achieving it will require more data and information in the future. This is certainly an important step forward in this regard.

Strengths:

- Combined analysis of canonical psychosis symptoms and cognitive deficits across multiple traditional psychosis-related diagnoses offers one of the most comprehensive mappings of impairments experienced within PSD to brain features to date

- Cross-validation analyses and use of various datasets (diagnostic replication, pharmacological neuroimaging) are extremely impressive, well motivated, and thorough. In addition, the authors use a large dataset and provide "out of sample" validity

- Medication status and dosage were also accounted for

- Similarly, the extensive examination of both univariate and multivariate neuro-behavioural solutions from a methodological viewpoint, including the testing of multiple configurations of CCA (i.e. with different parcellation granularities), offers very strong support for the selected symptom-to-neural mapping

- The plots of the obtained PC axes compared to those of standard clinical symptom aggregate scales provide a really elegant illustration of the differences and demonstrate clearly the value of data-driven symptom reduction over conventional categories

- The comparison of the obtained neuro-behavioural map for the "Psychosis configuration" symptom dimension to both pharmacological neuroimaging and neural gene expression maps highlights direct possible links with both underlying disorder mechanisms and possible avenues for treatment development and application

- The authors' explicit investigation of whether PSD and healthy controls share a major portion of neural variance (possibly present across all people) has strong implications for future brain-behaviour mapping studies, and provides a starting point for narrowing the neural feature space to just the subset of features showing symptom-relevant variance in PSD

Critiques:

- Overall, I found the paper very hard to read. There are abbreviations everywhere for every concept that is introduced. The paper is methods heavy (which I am not opposed to and quite like). It is clear that the authors took a lot of care in thinking about the methods that were chosen. That said, I think that the organization would benefit from a more traditional Intro, Methods, Results, and Discussion formatting so that it would be easier to parse the Results. The figures are extremely dense, and terms are often coined or used that are undefined or poorly defined.

- One thing I found conceptually difficult is the explicit comparison to the work in the Xia paper from the Satterthwaite group. Is this a fair comparison? The sample is extremely different, as it is non-clinical and comes from the general population. Can it be suggested that the groups that are clinically defined here are comparable? Is this an appropriate comparison and standard to make? To suggest that the work in that paper is not reproducible is flawed in this light.

- Why was PCA selected for the analysis rather than ICA? The authors mention that PCA enables the discovery of orthogonal symptom dimensions, but don't elaborate on why this is expected to better capture behavioural variation within PSD compared to non-orthogonal dimensions. Given that symptom and/or cognitive items in conventional assessments are likely to be correlated in one way or another, allowing correlations to be present in the low-rank behavioural solution may better represent the original clinical profiles and drive more accurate brain-behaviour mapping. Moreover, as alluded to in the Discussion, employing an oblique rotation in the identification of dimensionality-reduced symptom axes may have actually resulted in a brain-behaviour space that is more generalizable to other psychiatric spectra. Why not use something more relevant to symptom/behaviour data, like a factor analysis?

- The gene expression mapping section lacks some justification for why the 7 genes of interest were specifically chosen from among the numerous serotonin and GABA receptors and interneuron markers (relevant for PSD) available in the AHBA. Brief reference to the believed significance of the chosen genes in psychosis pathology would have helped to contextualize the observed relationship with the neuro-behavioural map.

- What the identified univariate neuro-behavioural mapping for PC3 ("psychosis configuration") actually means from an empirical or brain network perspective is never really discussed in detail. E.g., in Results, "a high positive PC3 score was associated with both reduced GBC across insular and superior dorsal cingulate cortices, thalamus, and anterior cerebellum and elevated GBC across precuneus, medial prefrontal, inferior parietal, superior temporal cortices and posterior lateral cerebellum." While the meaning and calculation of GBC can be gleaned from the Methods, a direct interpretation of the neuro-behavioural results in terms of the types of symptoms contributing to PC3 and relative hyper-/hypo-connectivity of the DMN compared to e.g. healthy controls could facilitate easier comparisons with the findings of past studies (since GBC does not seem to be a very commonly-used measure in the psychosis fMRI literature). This is also important since GBC is a summary measure of the average connectivity of a region, and doesn't provide any specificity in terms of which regions in particular are more or less connected within a functional network (an inherent limitation of this measure which warrants further attention).

- Possibly a nitpick, but while the inclusion of cognitive measures for PSD individuals is a main (self-)selling point of the paper, there's very limited focus on the "Cognitive functioning" component (PC2) of the PCA solution. Examining Fig. S8K, the GBC map for this cognitive component seems almost to be the inverse for that of the "Psychosis configuration" component (PC3) focused on in the rest of the paper. Since PC3 does not seem to have high loadings from any of the cognitive items, but it is known that psychosis spectrum individuals tend to exhibit cognitive deficits which also have strong predictive power for illness trajectory, some discussion of how multiple univariate neuro-behavioural features could feasibly be used in conjunction with one another could have been really interesting.

- Another nitpick, but the Y axes of Fig. 8C-E are not consistent, which causes some of the lines of best fit to be a bit misleading (e.g. GABRA1 appears to have a more strongly positive gene-PC relationship than HTR1E, when in reality the opposite is true).

- The authors explain the apparent low reproducibility of their multivariate PSD neuro-behavioural solution using the argument that many psychiatric neuroimaging datasets are too small for multivariate analyses to be sufficiently powered. Applying an existing multivariate power analysis to their own data as empirical support for this idea would have made it even more compelling. The following paper suggests guidelines for sample sizes required for CCA/PLS as well as a multivariate calculator: Helmer, M., Warrington, S. D., Mohammadi-Nejad, A.-R., Ji, J. L., Howell, A., Rosand, B., Anticevic, A., Sotiropoulos, S. N., & Murray, J. D. (2020). On stability of Canonical Correlation Analysis and Partial Least Squares with application to brain-behavior associations (p. 2020.08.25.265546). https://doi.org/10.1101/2020.08.25.265546

- Given the relatively even distribution of males and females in the dataset, some examination of sex effects on symptom dimension loadings or neuro-behavioural maps would have been interesting (other demographic characteristics like age and SES are summarized for subjects but also not investigated). I think this is a missed opportunity.
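
For readers unfamiliar with GBC, the critique above that it is "a summary measure of the average connectivity of a region" can be illustrated with a minimal Python sketch. This is not the authors' pipeline. It assumes that GBC for a region is the mean Fisher z-transformed Pearson correlation between that region's time series and every other region's time series. The function names and the toy data are hypothetical and chosen only for illustration.

```python
import math
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def global_brain_connectivity(timeseries):
    """Return one GBC value per region.

    timeseries: list of regions, each a list of samples over time.
    Assumption (not taken from the paper): GBC is the mean Fisher
    z-transformed correlation of a region with all other regions,
    excluding the region's correlation with itself.
    """
    n = len(timeseries)
    gbc = []
    for i in range(n):
        z_values = [
            math.atanh(pearson(timeseries[i], timeseries[j]))
            for j in range(n)
            if j != i
        ]
        gbc.append(mean(z_values))
    return gbc


# Hypothetical toy data: 4 regions, 5 time points each.
toy = [
    [1.0, 2.0, 3.0, 4.0, 5.0],
    [2.0, 1.0, 4.0, 3.0, 6.0],
    [5.0, 3.0, 4.0, 1.0, 2.0],
    [2.0, 5.0, 1.0, 4.0, 3.0],
]
gbc_values = global_brain_connectivity(toy)
for region, value in enumerate(gbc_values):
    print(f"region {region}: GBC = {value:.3f}")
```

Because each region is reduced to a single average, two regions with very different connectivity patterns can end up with similar GBC values, which is the loss of specificity the critique refers to.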